21st century crises demand new economic understanding, say top economists

Leading economists, including Nobel laureate Joseph Stiglitz, Argentina's Minister of Economy Martin Guzman, as well as academics from Oxford, Yale, Columbia, and UCLA, are calling today for a deep shift in how economists understand the overall economy. According to the new thinking, a series of massive economic shocks have left traditional economic theory in pieces and the old macroeconomic paradigm is on its way out.

Oxford Professor David Vines says, ‘The old model of ‘macroeconomics’ was built for a stable world free from large economic shocks. If we ever lived in such a world, we no longer do.’


Summarising the Rethinking Macroeconomics project in the Oxford Review of Economic Policy, Oxford economists Professor David Vines and Dr Samuel Wills call for a shift away from the assumptions which have underpinned economic theory for decades – and which do not meet today’s challenges. They argue a more open, more diverse paradigm is emerging, which is far better equipped to deal with contemporary challenges such as the global financial crisis, climate change and COVID.

Professor Vines and Dr Wills argue that the old macroeconomic paradigm is being replaced by an approach which is less tied to idealised theoretical assumptions, takes real-world data more seriously, and is, therefore, far better suited to dealing with 21st century challenges.

Dr Wills says, ‘This shift [in thinking] breaks with more than a century of macroeconomic thinking and has wide-ranging implications for economic thought and practice.’


Professor Vines adds, ‘An economic model that cannot handle serious shocks is like a medical science that does not study major disease outbreaks; it is likely to let us down at the most critical of moments. The old economic model has already failed us badly in the 21st century.’

Since the 2008 global financial crisis, which was not seen coming by most macroeconomists, the field has undergone a difficult decade. Its reputation was not helped by the slow and weak recovery from the 2008 crisis, which most observers now agree was made worse by world-wide austerity policies.

Macroeconomics has been dominated by one core approach for the last two decades: the ‘New Keynesian Dynamic Stochastic General Equilibrium’ (NK-DSGE) model. While this remains the profession’s generally accepted framework, the contributors to this issue of the Oxford Review of Economic Policy argue it is no longer fit for purpose.

According to today’s journal issue, the NK-DSGE model is unsuited to understanding large economic shocks. At the heart of the old model is the assumption that all disturbances are temporary and the economy eventually returns to one stable ‘equilibrium’, in which it continues to grow. This assumption makes it badly unsuited to studying large crises and transitions which see unemployment multiply and financial systems in crisis.
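The difference between one equilibrium and several can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not a model from the journal issue: the two adjustment rules, their parameters, and the shock sizes are all hypothetical.

```python
def simulate(step, x0, periods=200):
    """Iterate a one-dimensional adjustment rule from a starting point x0."""
    x = x0
    for _ in range(periods):
        x = step(x)
    return x


def single_equilibrium(x):
    # Hypothetical rule: activity is always pulled back toward 1.0,
    # however large the initial shock.
    return x + 0.2 * (1.0 - x)


def multiple_equilibria(x):
    # Hypothetical rule with stable rest points at 0.0 and 1.0 and an
    # unstable threshold at 0.5 that separates them.
    return x + x * (1.0 - x) * (x - 0.5)


# A small shock (activity falls to 0.9) and a large shock (activity falls to 0.4).
single_after_large_shock = simulate(single_equilibrium, 0.4)
multi_after_small_shock = simulate(multiple_equilibria, 0.9)
multi_after_large_shock = simulate(multiple_equilibria, 0.4)
```

In the first rule, even the large shock fades and activity returns to its old level. In the second, the small shock fades but the large one pushes the economy past the threshold, and it settles at the lower rest point instead.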

Professor Vines insists, ‘The old core assumption that the economy returns to one equilibrium point is a major diversion from reality. If we want an economics up to the challenges of the twenty first century, it is crucial the discipline understands the possibility of multiple economic equilibria.’


The NK-DSGE model is also built on over-idealised foundations. The old model is built on a set of assumptions about how people act in the economy and why: it assumes people are always well informed, rational, and dedicate all their attention and effort towards one particular goal.

Dr Wills maintains, ‘This analytical straight-jacket means the old paradigm refuses to recognise that we all act under uncertainty about the future when we make economic decisions, and that our decisions are therefore always shaped by our best guesses, habits, and rules of thumb.’

Professor Vines and Dr Wills have named the newly-emerging macroeconomic paradigm ‘MEADE’: Multiple Equilibrium and DiversE (in recognition of the Nobel prize-winning economist James Meade). The new approach studies how multiple economic equilibria can arise and uses a wide range of different kinds of models to understand what policymakers can do.

Professor Vines says, ‘This new paradigm has far deeper roots in the real world: it stresses the need for models based on detailed empirical understandings of how the economy actually is, rather than how it theoretically should be.

‘Just as doctors only build up a full understanding of how a body is functioning using a whole host of empirically-grounded diagnostic tools, so economists must build up a full understanding of how economies function using a wide range of empirically-grounded tools and models.

‘Placing too much weight on the old, overly idealised model has blinkered macroeconomics; the blinkers are now coming off, and we want to speed this change along.’


A Journal of Ideas

Symposium | Beyond Neoliberalism

A New Economic Paradigm

In the fall of 2018, University of California, Berkeley economist Emmanuel Saez said, to an audience of economists, policymakers, and the press, “If the data don’t fit the theory, change the theory.” He was speaking about a new data set he developed to show who gains from economic growth, the rise in monopoly and monopsony power, and the resulting importance of policies such as wealth taxes and antitrust regulation. As we in the crowd listened to his remarks, I could tell this was an important moment in the history of economic thought. Saez had fast become one of the most respected economists in the profession—in 2009, he won the John Bates Clark Medal, an honor given by the economics profession to the top scholar under the age of 40, for his work on “critical theoretical and empirical issues within the field of public economics.” And here he was, telling us that economics needs to change.

Saez is not alone. The importance of his comments is reflected in the research of a nascent generation of scholars who are steeped in the new data and methods of modern economics, and who argue that the field should—indeed, must—change. The 2007 financial meltdown and the ensuing Great Recession brought to the forefront a crisis in macroeconomics because economists neither predicted nor were able to solve the problem. But a genuine revolution was already underway inside the field as new research methods and access to new kinds of data began to upend our understanding of the economy and what propels it forward. While the field is much more technical than ever, the increasing focus on what the discipline calls “heterogeneity”—what we can think of as inequality—is, at the same time, undermining long-held theories about the so-called natural laws of economics.

It’s not clear where the field will land. In 1962, the historian of science Thomas Kuhn laid out how scientific revolutions happen. He defined a paradigm as a consensus among a group of scientists about the way to look at problems within a field of inquiry, and he argued that the paradigm changes when that consensus shifts. As Kuhn said, “Men whose research is based on shared paradigms are committed to the same rules and standards for scientific practice. That commitment and the apparent consensus it produces are prerequisites for normal science, i.e., for the genesis and continuation of a particular research tradition.” In this essay, I’ll argue that there is a new consensus emerging in economics, one that seeks to explain how economic power translates into social and political power and, in turn, affects economic outcomes. Because of this, it is probably one of the most exciting times to follow the economics field—if you know where to look. As several of the sharpest new academics—Columbia University’s Suresh Naidu, Harvard University’s Dani Rodrik, and Gabriel Zucman, also at UC Berkeley—recently said, “Economics is in a state of creative ferment that is often invisible to outsiders.”

The Twentieth Century Paradigms

We can demarcate three epochs of economic thinking over the past century. Each began with new economic analysis and data that undermined the prevailing view, and each altered the way the profession examined the intersection between how the economy works and the role of society and policymakers in shaping economic outcomes. In each of these time periods, economists made an argument to policymakers about what actions would deliver what the profession considered the optimal outcomes for society. Thanks in no small part to the real-world successes of the first epoch, policymakers today tend to listen to economists’ advice.

The first epoch began in the early twentieth century, when Cambridge University economist John Maynard Keynes altered the course of economic thinking. He started from the assumption that markets do not always self-correct, which means that the economy can be trapped in a situation where people and capital are not being fully utilized. Unemployment—people who want to work but are unable to find a job—is due to firms not deploying sufficient investment because they do not see enough customers to eventually buy the goods and services they would produce. From this insight flowed a series of policy prescriptions, key among them the idea that when the economy is operating at less than full employment, only government has the power to get back to full employment, by filling in the gap and providing sufficient demand. Keynes’s contribution is often summarized to be that demand—people with money in their pockets ready to buy—keeps the economy moving. For economists, the methodological contribution was that policymakers could push on aggregate indicators, such as by boosting overall consumption, to change economic outcomes.

Keynes explicitly framed his analysis as a rejection of the prevailing paradigm. He begins The General Theory by decrying the “classical” perspective, writing in Chapter 1 that “[T]he characteristics of the special case assumed by the classical theory happened not to be those of the economic society in which we actually live, with the result that its teaching is misleading and disastrous if we attempt to apply it to the facts of experience.” He spends the next chapter identifying the erroneous assumptions and presumptions of the prevailing economic analysis and lays out his work as a new understanding of the economy. In short, he argues the classical economists were wrong because they assumed that the economy always reverts to full employment.

Many credit the ideas he laid out in The General Theory with pulling our economy out of the depths of the Great Depression. He advanced a set of tools policymakers could use to ensure that the economy was kept as close to full employment as possible—a measure of economic success pleasing to democratically elected politicians. Certainly, the reconceptualization of the economy brought forth by National Income and Product Accounts—an idea Keynes and others spearheaded in the years between World War I and II—shaped thinking about the economy. These data allowed the government, for the first time, to see the output of a nation—gross domestic product (GDP)—which quickly became policymakers’ go-to indicator to track economic progress.

By the late 1960s, Keynes’s ideas had become the prevailing view. In 1965, University of Chicago economist Milton Friedman wrote an essay in which he said, “[W]e are all Keynesians now,” and, in 1971, even Richard Nixon was quoted in The New York Times saying, “I am now a Keynesian in economics.”

Nonetheless, the field was shifting toward a consensus around what has become known as “neoliberalism”—and Friedman was a key player. Keynes focused on aggregates—overall demand, overall supply—and did not have a precise theory for how the actions of individuals at the microeconomic level led to those aggregate trends. Friedman’s work on the permanent income theory—the idea that people will consume today based on their assessment of what their lifetime income will be—directly contradicted Keynes’s assumption that the marginal propensity to consume would be higher among lower-income households. Whereas Keynes argued that government could increase aggregate consumption by getting money to those who would spend it, Friedman argued that people would understand this was a temporary blip and save any additional income. The microfoundations movement within economics sought to connect Keynes’s analysis, which focused on macroeconomic trends—the movement of aggregate indicators such as consumption or investment—to the behavior of individuals, families, and firms that make up those aggregates. It reached its apex in the work of Robert Lucas Jr., who argued that in order to understand how people in the economy respond to policy changes, we need to look at the micro evidence.
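The disagreement over a temporary boost to income can be put in rough arithmetic. The sketch below is a simplification under stated assumptions: the transfer size, the marginal propensity to consume, the 40-year horizon, and the zero interest rate are all hypothetical, and neither function is a formula taken from Keynes or Friedman.

```python
def keynesian_response(transfer, mpc):
    """Extra spending this year if a fixed share of new income is spent."""
    return mpc * transfer


def permanent_income_response(transfer, years_remaining):
    """Extra spending this year if a one-off transfer is spread evenly over
    the rest of a household's planning horizon (assumes a zero interest rate)."""
    return transfer / years_remaining


transfer = 1000.0  # hypothetical one-off payment
keynesian_spending = keynesian_response(transfer, mpc=0.8)
permanent_income_spending = permanent_income_response(transfer, years_remaining=40)
```

Under these assumed numbers the first household spends most of the payment right away, while the second saves nearly all of it and raises its spending only slightly in any one year.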

Together, these arguments shifted the field back toward focusing on how the economy pushed toward optimal outcomes. What we think of in the policy community as “supply-side” policy was the focus on encouraging actors to engage in the economy. In contrast, demand-side management sought to understand business cycles and was important for recessions, which the Federal Reserve could fix using interest rate policy. In other words, we were back to assuming that the economy would revert to full employment and to what economists call “optimal” outcomes, if only the government would get out of the way.

As the United States neared the end of the twentieth century, there were many indications that this was the right economic framework. The United States led the world in bringing new innovations to market and, up until the late 1970s, America’s middle class saw strong gains year to year. We had avoided another crisis like the Great Depression and our economy drew in immigrants from across the globe. If policymakers focused on improving productivity, the market would take care of the rest—or so economists thought.

The Unraveling

By the end of the twentieth century, a cadre of economists had grown up within this new paradigm. In her recent book, Leftism Reinvented, Stephanie Mudge points to the rise of what she terms the “transnational finance-oriented economists” who “specialized in how to keep markets happy and reasoned that market-driven growth was good for everyone.” But behind the scenes, there was a new set of ideas brewing. For the market fundamentalist argument to be true, the market needs to work as advertised. Yet new data and methods eventually led to profound questions about this conclusion.

My introduction to this work came in the first week of my first graduate Labor Economics course in 1993. The professor focused on a set of then newly released research papers by David Card and Alan Krueger in which they used “natural experiments” (here, comparing employment and earnings in fast food restaurants across state lines before and after one state raised its minimum wage) to examine what happened when policymakers raised the minimum wage. This was an innovation. Prior to the 1990s, most research in economics focused on the model, not the empirical methods. Indeed, Card recently told the Washington Center for Equitable Growth’s Ben Zipperer, “In the 1970s, if you were taking a class in labor economics, you would spend a huge amount of time going through the modeling section and the econometric method. And ordinarily, you wouldn’t even talk about the tables. No one would even really think of that as the important part of the paper. The important part of the paper was laying out exactly what the method was.”

This was not only an interesting and relevant policy question; it also was a deeply theoretical one. Standard economic theory predicts that when a price rises, demand falls. Therefore, when policymakers increase the minimum wage, employers should respond by hiring fewer workers. But Card and Krueger’s analysis came to a different conclusion. Using a variety of data and methods—some relatively novel—they found that when policymakers in New Jersey raised the minimum wage, employment in fast food restaurants did not decline relative to those in the neighboring state of Pennsylvania. Their research had real-world implications and broke new ground in research methods; it also brought to the fore profoundly unsettling theoretical questions.
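The logic of comparing two neighboring states before and after a policy change is commonly summarized as a difference-in-differences calculation. The sketch below shows that general technique only; the figures are made up for illustration and are not Card and Krueger’s data or results.

```python
def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Change in the treated group minus the change in the comparison group."""
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Hypothetical average employees per restaurant, before and after a
# minimum wage increase in the "treated" state only.
effect = difference_in_differences(
    treated_before=18.0, treated_after=18.5,
    control_before=22.0, control_after=21.0,
)
```

The comparison state stands in for what would have happened without the policy. With these invented numbers, employment in the treated state rose by 0.5 while the comparison state fell by 1.0, so the estimated effect is 1.5 employees per restaurant.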

When Card and Krueger published their analysis, “natural experiments” were a new idea in economics research—and Card gives credit to Harvard economist Richard Freeman as “the first person” he heard use the phrase. These techniques, alongside other methods, allowed economists to estimate causality—that is, to show that one thing caused another, rather than simply being able to say that two things seem to go together or move in tandem. As Diane Whitmore Schanzenbach at Northwestern University told me, “In the last 15 or 20 years or so, economics—empirical economics—has really undergone what we call the credibility revolution. In the old days, you could get away with doing correlational research that doesn’t have a format that allows you to extract the cause and effect between a policy and an outcome.”

The profession was not immediately comfortable with Card and Krueger’s research, balking at being told the world didn’t work the way theory predicted. Their 1995 book, Myth and Measurement, contained a set of studies that laid bare a conundrum at the core of economic theory. The reception was cold at best. At an event at Equitable Growth to mark the twentieth anniversary edition, Krueger recalled a prominent economist in the audience at an event for the first edition saying, “Theory is evidence too.” Indeed, when Krueger passed away in March, his obituary in The Washington Post quoted University of Chicago economist James J. Heckman, who in 1999 told The New York Times, “They don’t root their findings in existing theory and research. That is where I really deviate from these people. Each day you can wake up with a brand new world, not plugged into the previous literature.”

History tells a different story. Card and Krueger would go on to become greats in their fields and lead a new generation of scholars to new conclusions using innovative empirical techniques. Krueger’s passing earlier this year illustrated the extent of this transformation in economics. The discipline is now grounded in empirical evidence that focuses on proving causality, even if that does not conform to long-standing theoretical assumptions about how the economy works. New methods, such as natural or quasi experiments that examine how people react to policies across time or place, are now the industry standard.

While in hindsight it might seem incredible that this discipline could have existed without these empirical techniques, their widespread adoption only came late in the twentieth century, alongside the dawn of the Information Age and advances in empirical methods, access to data, and computing power. As one journalist put it, “[N]umber crunching on PCs made noodling on chalkboards passé.” One piece of evidence for this is that the top economics journals now mostly publish empirical papers. Whereas in the 1960s about half of the papers in the top three economics journals were theoretical, about four in five now rely on empirical analysis—and of those, over a third use the researcher’s own data set, while nearly one in ten are based on an experiment.

Card and Krueger connect their findings to another game-changing development—the emergence of a set of ideas known as behavioral economics. This body of thought—along with much of feminist economics—starts from the premise that there is no such thing as the “rational economic man,” the theoretical invention required for economic theory to work. Krueger put it this way:

The standard textbook model, by contrast, views workers as atomistic. They just look at their own situation, their own self-interest, so whether someone else gets paid more or less than them doesn’t matter. The real world actually has to take into account these social comparisons and social considerations. And the field of behavioral economics recognizes this feature of human behavior and tries to model it. That thrust was going on, kind of parallel to our work, I’d say.

This is the definition of a paradigm shift. As a result of these changes, empirical research is now the route to join those in the top echelons of economics. While this may seem like a field looking within, it also appears to be a field on the cusp of change. Kuhn talks about how as a field matures, it becomes more specialized; as researchers dig into specific aspects of theory, they often then uncover a new paradigm buried in fresh examinations of the evidence. This kind of research commonly elevates anomalies—findings that don’t fit the prevailing theory.

How we make sense of this new empirical analysis will define the new paradigm. The policy world has been quick to take note of key pieces of this new body of empirical research. Indeed, evidence-backed policymaking has become the standard in many areas. But the nature of a paradigm shift means that policymakers are in need of a new framework to make sense of all the pieces of evidence and to guide their agenda. We can see this in current policy debates; while conservatives continue to tout tax cuts as the solution to all that ails us, the public no longer believes this to be the true remedy. Whether that means the agenda being discussed in many quarters to address inequality, by taxing capital and addressing rising economic concentration, will become core to the new framework remains to be seen.

A New Vision

A new focus on empirical analysis doesn’t necessarily mean a new paradigm. The evidence must be integrated into a new story of what economics is and seeks to understand. We can see something like this happening as scholars seek to understand inequality—what economists often refer to in empirical work as “heterogeneity.” Putting inequality at the core of the analysis pushes forward questions about whether the market performs the same for everyone—rich and poor, with economic power or without—and what that means for how the economy functions. It brings to the fore questions that cannot be ignored about how economic power translates into social power. Most famously, in Capital in the Twenty-First Century, Thomas Piketty brings together hundreds of years of income data from across a number of countries, and concludes from this that powerful forces push toward an extremely high level of inequality, so much so that capital will calcify as “the past tends to devour the future.”

That rethinking is happening right now. At January’s Allied Social Science Association conference—the gathering place for economists across all the sub-fields—UC Berkeley’s Gabriel Zucman put up a very provocative slide, which said only “Good-bye representative agent.” This slide was as revolutionary as Card and Krueger’s work decades before because it implied that we should let go of the workhorse macroeconomic models. For the most part, policymakers rely on so-called “representative agent models” to inform economic policy. These models assume that the responses of economic actors, be they firms or individuals, can be represented by one (or maybe two) sets of rules. That is, if conditions change—for example, a price rises—the model assumes that everyone in the economy responds in the same way, regardless of whether they are low income or high income. Moody’s Analytics houses a commonly cited economic model, led by economist Mark Zandi, who confirms this: “Most macroeconomists, at least those focused on the economy’s prospects, have all but ignored inequality in their thinking. Their implicit, if not explicit, assumption is that inequality doesn’t matter much when gauging the macroeconomic outlook.”
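What a single response rule hides can be shown with a small sketch. Everything here is an assumption for illustration: the two household groups, their baseline purchases, and the price elasticities are hypothetical, and the linear response is a simplification rather than any forecaster’s actual model.

```python
def quantity_after_price_rise(baseline, price_rise, elasticity):
    """Quantity bought after a proportional price rise (linear approximation)."""
    return baseline * (1 + elasticity * price_rise)


# Hypothetical households with equal baseline purchases but different
# sensitivity to prices.
households = {
    "low income": {"baseline": 100.0, "elasticity": -0.8},
    "high income": {"baseline": 100.0, "elasticity": -0.2},
}
price_rise = 0.10                 # a 10 percent price increase
representative_elasticity = -0.5  # one rule applied to everyone

representative = {
    name: quantity_after_price_rise(h["baseline"], price_rise, representative_elasticity)
    for name, h in households.items()
}
heterogeneous = {
    name: quantity_after_price_rise(h["baseline"], price_rise, h["elasticity"])
    for name, h in households.items()
}
```

With these invented numbers both versions give the same total, yet the single-rule version reports an identical 5 percent cutback for everyone, while the group-specific version shows low-income households cutting back by 8 percent and high-income households by 2 percent.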

But these workhorse models of macroeconomics underperformed—to put it mildly—in the run-up to the most recent financial crisis. They neither predicted the crisis nor provided reasonable estimates of economic growth moving forward as the Great Recession hit and then the slow recovery began. When Zandi integrated inequality into the Moody’s macroeconomic forecasting model for the United States, he found that adding inequality to the traditional models—ones that do not take into account economic inequality at all—did not change the short-term forecasts very much. But when he looked at the long-term picture or considered the potential for the system to spin out of control, he found that higher inequality increases the likelihood of instability in the financial system.

This research is confirmed in a growing body of empirical work. This spring, International Monetary Fund economists Jonathan D. Ostry, Prakash Loungani, and Andrew Berg released a book pulling together a series of research studies showing the link between higher inequality levels and more frequent economic downturns. They find that when growth takes place in societies with high inequality, the economic gains are more likely to be destroyed by the more severe recessions and depressions that follow—and the economic pain is all too often compounded for those at the lower end of the income spectrum. Even so, as of now, most of the macroeconomic models used by central banks and financial firms for forecasting and decision-making don’t take inequality into account.

Key to any paradigm change is that there’s a new way of seeing the field of inquiry. That’s where Saez, along with many co-authors, including Thomas Piketty and Gabriel Zucman, is making his mark. They have created what they call “Distributional National Accounts,” which combine the aggregate data on National Income so important to the early twentieth century paradigm, with detailed data on how that income is allocated across individuals—incorporating the later-twentieth-century learning—into a measure that shows growth and its distribution. As Zucman told me, “We talk about growth, we talk about inequality; but never about the two together. And so distribution of national accounts, that’s an attempt at bridging the gap between macroeconomics on the one hand and inequality studies on the other hand.”
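The idea of reporting growth and its distribution together can be sketched in a few lines. This is an illustration only: the three income groups and all income figures are hypothetical, and the calculation is a bare-bones stand-in rather than the method behind the actual Distributional National Accounts.

```python
def growth_rate(before, after):
    """Proportional change between two periods."""
    return (after - before) / before


def distributional_growth(before, after):
    """Return aggregate growth plus each group's growth and share of the total gain."""
    total_before = sum(before.values())
    total_after = sum(after.values())
    total_gain = total_after - total_before
    by_group = {
        group: {
            "growth": growth_rate(before[group], after[group]),
            "share_of_gain": (after[group] - before[group]) / total_gain,
        }
        for group in before
    }
    return growth_rate(total_before, total_after), by_group


# Hypothetical national income by group, in arbitrary units.
income_before = {"bottom 50%": 200.0, "middle 40%": 400.0, "top 10%": 400.0}
income_after = {"bottom 50%": 202.0, "middle 40%": 420.0, "top 10%": 478.0}

aggregate_growth, by_group = distributional_growth(income_before, income_after)
```

In this invented example the headline figure is 10 percent growth, but the bottom half sees 1 percent growth and receives 2 percent of the total gain, while the top tenth receives 78 percent of it.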

Congress seems to have gotten the message. The 2019 Consolidated Appropriations Act, which opened the government after a record 35-day shutdown, included language “encourag[ing]” the Bureau of Economic Analysis to “begin reporting on income growth indicators” by decile at least annually and to incorporate that work into its GDP releases if possible. In this way, step by step, new economic paradigms become new policymaking tools.


Heather Boushey is President and CEO of the Washington Center for Equitable Growth and author of Unbound: How Inequality Constricts Our Economy and What We Can Do About It.


"A multidisciplinary forum focused on the social consequences and policy implications of all forms of knowledge on a global basis"

We are pleased to introduce Eruditio, the electronic journal of the World Academy of Art & Science. The vision of the Journal complements and enhances the World Academy's focus on global perspectives in the generation of knowledge from all fields of legitimate inquiry. The Journal also mirrors the World Academy's specific focus and mandate which is to consider the social consequences and policy implications of knowledge in the broadest sense.


Introduction to the New Paradigm of Political Economic Theory

ARTICLE | November 27, 2015 | BY Winston P. Nagan

This short article is an attempt to provide a reasonably simplified introduction to a complex initiative. Influential Fellows in the World Academy of Art and Science, moved in part by the global crisis of unemployment and a conspicuous lack of theoretical engagement that might constructively respond to the problem, came to the conclusion that this intellectual silence reflected a dire need for new thinking about the importance of political economy and its salience for a defensible world order. Leading figures in the Academy, such as Orio Giarini, Ivo Šlaus, Garry Jacobs, Ian Johnson, and many others, have worked diligently on a new economic framework focused on the centrality of human capital as a critical foundation for economic prosperity.

This article seeks to contribute a clearer and more simplified description of the fundamental paradigms of traditional and emerging economic order. It sets out the paradigmatic contours of classical theory, moves from classical theory (the old normal) to the new normal in neoliberalism, and then recommends a framework for the future that borrows from the new paradigm thinking of jurisprudence. It applies and summarizes these ideas as guidelines for the development of a theory about political economy as an inquiring system with a comprehensive focus and a fixed concentration on a human-centered approach for the future. This approach summarizes articles the author wrote for Cadmus. *

1. The Background to Basic Theory and its Roots in laissez-faire

Economic Theory is a disputed field of intellectual endeavor. The stakes implicated in economic theory development are high and as a consequence theory is a contested domain. The contestation is intensified because the dominance of a particular theory will influence the social impact of that theory on human relations and this in turn will invite policy interventions and policy consequences. Within the arena of theoretical contestation, there has emerged a new normal for economic theory. We may regard this new normal as the conventional paradigm of economic theory. The new normal has come with various terms of identification, but the one that seems to be ascendant is encapsulated in the phrase “economic neoliberalism.” In a sense, economic neoliberalism draws powerful inspiration from the earliest iterations of the nature of economic activity. In the 18th century, French officials adopted and popularized a phrase that would serve as both an empirical description of ideal economic exchanges, as well as a preferred model for the structure and function of the arena within which economic activity happened. The phrase was laissez-faire. In practice, this meant that the state should reduce its regulatory control over economic interactions within the body politic. The less regulation, the less interference there would be in the arena of economic activity. Less interference meant increased dynamism in the arena of economic productivity, distribution, and exchange.

In the latter part of the 18th century, the moral philosopher Adam Smith published his famous book, The Wealth of Nations. Smith was aware of the principle of laissez-faire that had emerged in French practice. Indeed, he found this idea compatible with the theory of economic enterprise that was developed in his book. Smith provided both a description of how economics worked, and by implication, provided a justification for the importance of his model in improving the level of economic prosperity in society. Adam Smith was preeminently a moral philosopher with profound economic insight. In his book, The Theory of Moral Sentiments, he noted that the specialized capacities of human beings were not a matter coordinated by centralized authority and control. On the contrary, they were influenced by something more impersonal—the market. By the pursuit of economic self-interest and the system of pricing, human beings and their capacities are led to meet the needs of others, whom they do not know, by a mechanism they do not comprehend.

The genius of Smith’s work lay in its simplicity: it used common-sense ideas to sustain an understanding of the workings of economic order. Economic relations encompassed the supplier of goods and services and the demander of goods and services. The goods and services constituted property that was exchanged between supplier (S) and demander (D). The arena of this exchange between S and D was the market, which established a natural equilibrium when it functioned optimally and satisfied the self-interests of both S and D. We may look at Smith’s model as the old normal of economic theorizing. The importance of this model lies in its reinvention as the new normal model of economic neoliberalism.

2. The Influence of Value-Free Positivism

We must carefully remind ourselves that Adam Smith was at heart a moral philosopher. This particular understanding of the role of the market became a central feature of his work, largely because subsequent economists committed to the positivist approach to the study of economics saw no reason to moderate the dynamics of the autonomous market with the untidy implications of collective and individual social responsibility. Vitally important to this approach was its strenuous justification of insulating economics from social reality and social responsibility. Indeed, positivism as a science went much further. It excluded normative discourse and its value implications because values were essentially non-science. At the time, there did not exist a credible science of society either. As modern scholarship has amply demonstrated, Adam Smith’s theoretical meditations did not subscribe to this view. † Braham has isolated four precepts in the corpus of Smith’s writings which clarify this issue. First, there is the assumption that when people are left alone to pursue their own interests, an invisible hand rides along with this dynamic and ensures that society as a whole will benefit from this conduct. This idea is moderated by Smith’s moral egalitarianism, which implies that every person has equal moral worth. This brings us to Smith’s ideas of social justice, which are connected to moral egalitarianism. Here Smith was deeply influenced by the jurisprudence of natural law. Natural law makes a distinction between commutative justice and distributive justice. In the former, justice is done according to the right one has to compensation for a legal wrong done. The latter is more complex. Smith’s work is permeated with discussions of the foundations of distributive justice.

Following this classical tradition, distributive justice is equated with beneficence, the application of ‘charity and generosity’ based on an individual or social assessment of ‘merit.’ Under this notion the rules that assign particular objects to particular persons, which is the nub of the concept of distributive justice, is a private and not a public matter or one of social norms; it is not a duty of the society at large and no one has a claim in morality against others to alleviate their condition. Smith subsumes this notion of justice under ‘all the social virtues’. ‡

Under the influence of the old normal model of economic theorizing, modern science added an important dimension to the evolution of the old model. In the early 19th century, the social sciences and law came under the influence of positivism. The positivist impulse was meant to bring intellectual rigor, a rigorous commitment to objectivity, and an insistence that scientific inquiry be completely separated from inquiry into values, morals, and ethics. The influence of science and mathematics on economics has been enormous. Credible scientific work in economics required a reliance on mathematics and mathematical abstractions. This tended to remove theory from the critical scrutiny of intellectuals untrained in mathematics.

The infusion of complex mathematical equations into economics led to a greater formalization of economic theory and, as a consequence, the formalistic emphasis was further abstracted from the concrete conditions of social life and human problems. Moreover, the principle that the market established an abstract equilibrium of absolute efficiency seemed to be conventional wisdom in policy-making circles. This approach to economic organization received a severe setback between 1929 and 1933. The conventional wisdom at the time was that the laissez-faire approach to a weakly regulated economy was the cause of the Great Depression, and that there was no natural force within the market to self-regulate the economy out of the Depression. In later years the American economist Milton Friedman claimed that the Depression was not a failure of the free natural market, but rather a failure of government policy. The government did not sufficiently monetize the economy, and within three years the amount of money in the economy was reduced by a third. This, he claimed, was the cause of the Depression, not fidelity to a weakly regulated market.

3. The New Normal in Economic Theory: Economic Neoliberalism − Milton Friedman and the University of Chicago’s Economics Department

Milton Friedman is generally acknowledged to be the architect of the New Normal Paradigm of economic thinking. He was a leader of the University of Chicago’s Economics Department, which was the institutional base for the New Normal Paradigm. The two significant influences that had emerged, in particular after the Second World War, were the Keynesian-influenced American New Deal and the reach of Stalin’s influence in Eastern Europe. From the perspective of Friedman and his colleagues, the New Deal was a form of creeping socialism, and an indirect threat to freedom. With regard to Stalin’s socialism and its extinction of private property, the Stalinist State control of the economy was quite simply an extinction of freedom. In 1947, Friedrich von Hayek, Milton Friedman, and others formed the Mont Pelerin Society to address these questions intellectually.

The fundamentals of economic neoliberalism insist upon a radical privatization of property and value in society. In short: if a matter may be privatized, it should be privatized. Additionally, economic neoliberalism favors the notion of the minimal state. In short: the more deregulation and limitation on the state’s power to regulate, the better. It holds a strong belief in corporate tax cuts and reduced taxes for the wealthy, and a strong belief in trade liberalization and open markets. Finally, with regard to the minimal state, there would be a massive diminution of the role of government in society. The writer Tayyab Mahmud describes economic neoliberalism as follows:

The neoliberal project is to turn the “nation-state” into a “market-state,” one with the primary agenda of facilitating global capital accumulation unburdened from any legal regulations aimed at assuring welfare of citizens. In summary, neoliberalism seeks unbridled accumulation of capital through a rollback of the state, and limits its functions to minimal security and maintenance of law, fiscal and monetary discipline, flexible labor markets, and liberalization of trade and capital flows.

Friedman made several strong arguments as to why governmental intervention into the market is generally futile, or leaves the economy worse than it was without the intervention. These arguments were formed around the ideas of adaptive expectations and rational expectations. With regard to adaptive expectations, Friedman argued that when the government printed money, inflation increased, and businessmen neutralized the effect of the increase in the money supply by predicting it. The rational expectations argument was based on the idea that the market would predict and undermine government intervention. These ideas were meant to show that markets are indeed self-regulating and that regulation is both unnecessary and dysfunctional. There is a vast range of critiques of economic neoliberalism, but the critique of N. Chomsky seems to be one of the most compelling.

Neoliberalism is actually closer to corporatism than any other philosophy in that, in its abandonment of the traditional regulatory function of the state and embracing of corporate goals and objectives, it cedes sovereignty over how its economy and society are organized to a global cabal of corporate elite.

Since the economic crisis of 2008, the criticisms of economic neoliberalism have also focused on the deregulation of the global financial system. The critique of the financial system is that it is organized along the lines of a gambler’s nirvana. Additionally, this is an economic model that could not predict the financial catastrophe that was to accompany the crisis. The consequences of the theory and its practice have also led to a global crisis of radical inequality. In addition, the consequences of the theory reflect its lack of a credible account of sustainable development. It resists concern about the impact of the economy on environmental degradation and climate change. Finally, the radical exclusion of values from economic theory means that the assignment of responsibility to the private sector for mismanagement and dangerous conduct is undermined.

The central thrust of our emphasis is to deemphasize the abstract formalism of economic neoliberalism pseudoscience and to develop a comprehensive theory for inquiry into economic phenomena from the local to the most comprehensive Earth-Space context. We recognize that putting theory into the most comprehensive context generates complexity and a critical need for expeditious knowledge integration. In short, economics should be enriched and informed by sociology, anthropology, political science, the psychological sciences, as well as lessons from the enhanced methods of the physical sciences. Therefore, our theory and method for inquiry set out as their initial task, the development of a theory that describes economics as it is in the broadest eco-social context.

4. The Fundamentals of a New Paradigm of Political Economy

The search for a new paradigm of political economy is in effect the search for a theory about political economy that should be comprehensive enough to embrace the context of the entire earth-space community. It must also be particular in adequately accounting for the specific localized effects of economic theory, policy, and practice. To this end, a new paradigm theory of political economy should include the following emphases:

- It must have a comprehensive global eco-social focus for relevant inquiry. This means theory must not only transcend but also include the relevance of the sovereign state while stressing the importance of transnational causes and consequences of economically related behavior. In particular, it must acknowledge the salience of the global inter-determination of economic perspectives and operations.

- It must engage in normative, value-based description and analysis, including a clarification of the basic goal values of the current world order. It must use these as markers to clarify the basic community policies implicated in all economic cooperation and contestation. Here the all-inclusive value of universal human dignity may be a critical principle of political-economic normative guidance.

- Political economy is not animated by an autonomous machine. It is given dynamism by sustained advocacy and, critically, by both authoritative and controlling decision making. The critical role of decision is a mandated focus of professional responsibility as well as responsible inquiry.

- Just as political economy must account for the structure of authority and control in the sovereign state, it must be alert to the principal features of global constitutional order. In particular, it must be alert to the way in which global constitutional order and its decision processes shape the evolving domains of world order.

- The evolving new paradigm of political economy must engage in the scientific task of illuminating and evaluating the conditions that inspire political-economic outcomes. In short, it is a task that requires the identification and analysis of the politically and economically relevant causes and consequences that influence economic outcomes.

- The evolving new paradigm theory of political economy must consciously seek to anticipate and examine all possible relevant future scenarios. To enhance the rationality of this function of theory, it may well be guided by the clarification of the value bases that are desired for future scenarios.

- The new paradigm of political economy must infuse itself with the most important element of the human faculty—human creativity. In particular, this means that the new paradigm must focus on the alternative possibilities that may be anticipated from relevant future scenarios. This focus should have the creative element that creates the prospect of imaginative but realizable future outcomes that are compatible with the basic fundamental values that represent the common interest of the community as a whole.

* See http://cadmusjournal.org/author/winston-p-nagan-0

† See Matthew Braham, Adam Smith’s Concept of Social Justice, August 14, 2006

‡ Id. at 1


Anthropometrics: The Intersection of Economics and Human Biology

John Komlos

Anthropometrics is a research program that explores the extent to which economic processes affect human biological processes using height and weight as markers. This agenda differs from health economics in the sense that instead of studying diseases or longevity, macro manifestations of well-being, it focuses on cellular-level processes that determine the extent to which the organism thrives in its socio-economic and epidemiological environment. Thus, anthropometric indicators are used as a proxy measure for the biological standard of living as complements to conventional measures based on monetary units. Using physical stature as a marker, we enabled the profession to learn about the well-being of children and youth for whom market-generated monetary data are not abundant even in contemporary societies. It is now clear that economic transformations such as the onset of the Industrial Revolution and modern economic growth were accompanied by negative externalities that were hitherto unknown. Moreover, there is plenty of evidence to indicate that the Welfare States of Western and Northern Europe take better care of the biological needs of their citizens than the market-oriented health-care system of the United States. Obesity has reached pandemic proportions in the United States affecting 40% of the population. It is fostered by a sedentary and harried lifestyle, by the diminution in self-control, the spread of labor-saving technologies, and the rise of instant gratification characteristic of post-industrial society. The spread of television and a fast-food culture in the 1950s were watershed developments in this regard that accelerated the process. Obesity poses a serious health risk including heart disease, stroke, diabetes, and some types of cancer and its cost reaches $150 billion per annum in the United States or about $1,400 per capita. 
We conclude that the economy influences not only mortality and health but reaches bone-deep into the cellular level of the human organism. In other words, the economy is inextricably intertwined with human biological processes.

A Survey of Econometric Approaches to Convergence Tests of Emissions and Measures of Environmental Quality

Junsoo Lee, James E. Payne, and Md. Towhidul Islam

The analysis of convergence behavior with respect to emissions and measures of environmental quality can be categorized into four types of tests: absolute and conditional β-convergence, σ-convergence, club convergence, and stochastic convergence. In the context of emissions, absolute β-convergence occurs when countries with high initial levels of emissions have a lower emission growth rate than countries with low initial levels of emissions. Conditional β-convergence allows for possible differences among countries through the inclusion of exogenous variables to capture country-specific effects. Given that absolute and conditional β-convergence do not account for the dynamics of the growth process, which can potentially lead to dynamic panel data bias, σ-convergence evaluates the dynamics and intradistributional aspects of emissions to determine whether the cross-section variance of emissions decreases over time. The more recent club convergence approach tests the decline in the cross-sectional variation in emissions among countries over time and whether heterogeneous time-varying idiosyncratic components converge over time after controlling for a common growth component in emissions among countries. In essence, the club convergence approach evaluates both conditional σ- and β-convergence within a panel framework. Finally, stochastic convergence examines the time series behavior of a country’s emissions relative to another country or group of countries. Using univariate or panel unit root/stationarity tests, stochastic convergence is present if relative emissions, defined as the log of emissions for a particular country relative to another country or group of countries, is trend-stationary. The majority of the empirical literature analyzes carbon dioxide emissions and varies in terms of both the convergence tests deployed and the results. 
While the results supportive of emissions convergence for large global country coverage are limited, empirical studies that focus on country groupings defined by income classification, geographic region, or institutional structure (e.g., EU, OECD) are more likely to provide support for emissions convergence. The vast majority of studies have relied on tests of stochastic convergence, with tests of σ-convergence and the distributional dynamics of emissions used less often. With respect to tests of stochastic convergence, an alternative testing procedure that accounts for structural breaks and cross-correlations simultaneously is presented. Using data for OECD countries, the results based on the inclusion of both structural breaks and cross-correlations through a factor structure provide less support for stochastic convergence when compared to unit root tests with the inclusion of just structural breaks. Future studies should increase focus on other air pollutants, including greenhouse gas emissions and their components, expand the range of geographical regions analyzed, and apply more robust analysis of the various types of convergence tests to render a more comprehensive view of convergence behavior. The examination of convergence through the use of eco-efficiency indicators that capture both the environmental and economic effects of production may be more fruitful in contributing to the debate on mitigation strategies and allocation mechanisms.
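As a rough illustration of the first two test types described in this abstract, the sketch below computes an absolute β-convergence slope (average log growth regressed on the log initial level) and compares the cross-section variance of log emissions at two dates for σ-convergence. The function names and the four-country data are hypothetical and chosen only for illustration; real applications would use proper panel data and inference, which this sketch omits.

```python
import math
from statistics import mean, pvariance


def beta_convergence_slope(initial, final, periods):
    """OLS slope of average log growth on the log initial level.

    A negative slope means high-emission units grew more slowly,
    the usual signature of absolute beta-convergence.
    """
    x = [math.log(v) for v in initial]
    y = [(math.log(f) - math.log(i)) / periods for i, f in zip(initial, final)]
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def sigma_convergence(initial, final):
    """Cross-section variance of log emissions at the start and at the end."""
    var_start = pvariance([math.log(v) for v in initial])
    var_end = pvariance([math.log(v) for v in final])
    return var_start, var_end


# Hypothetical per-capita emissions for four countries (illustrative only).
emissions_start = [2.0, 5.0, 10.0, 20.0]
emissions_end = [3.0, 6.0, 10.5, 18.0]

slope = beta_convergence_slope(emissions_start, emissions_end, periods=10)
var_start, var_end = sigma_convergence(emissions_start, emissions_end)
print(f"beta slope: {slope:.4f}")
print(f"variance of log emissions: {var_start:.3f} -> {var_end:.3f}")
```

In this toy data the slope is negative and the variance falls, so both notions of convergence point the same way; in general they need not agree, which is one reason the literature reports several tests.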

Bayesian Statistical Economic Evaluation Methods for Health Technology Assessment

Andrea Gabrio, Gianluca Baio, and Andrea Manca

The evidence produced by healthcare economic evaluation studies is a key component of any Health Technology Assessment (HTA) process designed to inform resource allocation decisions in a budget-limited context. To improve the quality (and harmonize the generation process) of such evidence, many HTA agencies have established methodological guidelines describing the normative framework inspiring their decision-making process. The information requirements that economic evaluation analyses for HTA must satisfy typically involve the use of complex quantitative syntheses of multiple available datasets, handling mixtures of aggregate and patient-level information, and the use of sophisticated statistical models for the analysis of non-Normal data (e.g., time-to-event, quality of life and costs). Much of the recent methodological research in economic evaluation for healthcare has developed in response to these needs, in terms of sound statistical decision-theoretic foundations, and is increasingly being formulated within a Bayesian paradigm. The rationale for this preference lies in the fact that by taking a probabilistic approach, based on decision rules and available information, a Bayesian economic evaluation study can explicitly account for relevant sources of uncertainty in the decision process and produce information to identify an “optimal” course of actions. Moreover, the Bayesian approach naturally allows the incorporation of an element of judgment or evidence from different sources (e.g., expert opinion or multiple studies) into the analysis. This is particularly important when, as often occurs in economic evaluation for HTA, the evidence base is sparse and requires some inevitable mathematical modeling to bridge the gaps in the available data. 
The availability of free and open source software in the last two decades has greatly reduced the computational costs and facilitated the application of Bayesian methods, and has the potential to improve the work of modelers and regulators alike, thus advancing the field of economic evaluation of healthcare interventions. This chapter provides an overview of the areas where Bayesian methods have contributed to addressing the methodological needs that stem from the normative framework adopted by a number of HTA agencies.
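One common way to turn such probabilistic output into a decision summary is the incremental net monetary benefit, computed draw by draw. The sketch below assumes that posterior draws of the incremental effect and incremental cost are already available; here they are replaced by simulated normal draws, and the willingness-to-pay threshold, means, and standard deviations are hypothetical values, not figures from the chapter.

```python
import random


def summarize_net_benefit(delta_effect, delta_cost, wtp):
    """Return (expected incremental net benefit, probability it is positive).

    Each draw's incremental net benefit is wtp * delta_effect - delta_cost.
    """
    inb = [wtp * e - c for e, c in zip(delta_effect, delta_cost)]
    expected = sum(inb) / len(inb)
    prob_cost_effective = sum(v > 0 for v in inb) / len(inb)
    return expected, prob_cost_effective


# Stand-ins for posterior draws (assumption: a fitted Bayesian model would
# normally supply these; the distributions below are purely illustrative).
rng = random.Random(0)
delta_effect = [rng.gauss(0.10, 0.05) for _ in range(5000)]   # e.g. QALYs gained
delta_cost = [rng.gauss(1500.0, 500.0) for _ in range(5000)]  # extra cost per patient

mean_inb, prob_ce = summarize_net_benefit(delta_effect, delta_cost, wtp=30000.0)
print(f"expected incremental net benefit: {mean_inb:.0f}")
print(f"probability cost-effective: {prob_ce:.2f}")
```

Working with the full set of draws rather than point estimates is what lets the analysis report uncertainty about the decision itself, not only about the inputs.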

The Biological Foundations of Economic Preferences

Nikolaus Robalino and Arthur Robson

Modern economic theory rests on the basic assumption that agents’ choices are guided by preferences. The question of where such preferences might have come from has traditionally been ignored or viewed agnostically. The biological approach to economic behavior addresses the issue of the origins of economic preferences explicitly. This approach assumes that economic preferences are shaped by the forces of natural selection. For example, an important theoretical insight delivered thus far by this approach is that individuals ought to be more risk averse to aggregate than to idiosyncratic risk. Additionally the approach has delivered an evolutionary basis for hedonic and adaptive utility and an evolutionary rationale for “theory of mind.” Related empirical work has studied the evolution of time preferences, loss aversion, and explored the deep evolutionary determinants of long-run economic development.

Consumer Debt and Default: A Macro Perspective

Florian Exler and Michèle Tertilt

Consumer debt is an important means for consumption smoothing. In the United States, 70% of households own a credit card, and 40% borrow on it. When borrowers cannot (or do not want to) repay their debts, they can declare bankruptcy, which provides additional insurance in tough times. Since the 2000s, up to 1.5% of households declared bankruptcy per year. Clearly, the option to default affects borrowing interest rates in equilibrium. Consequently, when assessing (welfare) consequences of different bankruptcy regimes or providing policy recommendations, structural models with equilibrium default and endogenous interest rates are needed. At the same time, many questions are quantitative in nature: the benefits of a certain bankruptcy regime critically depend on the nature and amount of risk that households bear. Hence, models for normative or positive analysis should quantitatively match some important data moments. Four important empirical patterns are identified: First, since 1950, consumer debt has risen constantly, and it amounted to 25% of disposable income by 2016. Defaults have risen since the 1980s. Interestingly, interest rates remained roughly constant over the same time period. Second, borrowing and default clearly depend on age: both measures exhibit a distinct hump, peaking around 50 years of age. Third, ownership of credit cards and borrowing clearly depend on income: high-income households are more likely to own a credit card and to use it for borrowing. However, this pattern was stronger in the 1980s than in the 2010s. Finally, interest rates became more dispersed over time: the number of observed interest rates more than quadrupled between 1983 and 2016. These data have clear implications for theory: First, considering the importance of age, life cycle models seem most appropriate when modeling consumer debt and default. Second, bankruptcy must be costly to support any debt in equilibrium. 
While many types of costs are theoretically possible, only partial repayment requirements are able to quantitatively match the data on filings, debt levels, and interest rates simultaneously. Third, to account for the long-run trends in debts, defaults, and interest rates, several quantitative theory models identify a credit expansion along the intensive and extensive margin as the most likely source. This expansion is a consequence of technological advancements. Many of the quantitative macroeconomic models in this literature assess welfare effects of proposed reforms or of granting bankruptcy at all. These welfare consequences critically hinge on the types of risk that households face—because households incur unforeseen expenditures, not-too-stringent bankruptcy laws are typically found to be welfare superior to banning bankruptcy (or making it extremely costly) but also to extremely lax bankruptcy rules. There are very promising opportunities for future research related to consumer debt and default. Newly available data in the United States and internationally, more powerful computational resources allowing for more complex modeling of household balance sheets, and new loan products are just some of many promising avenues.

Contests: Theory and Topics

Qiang Fu and Zenan Wu

Competitive situations resembling contests are ubiquitous in the modern economic landscape. In a contest, economic agents expend costly effort to vie for limited prizes, and they are rewarded for “getting ahead” of their opponents instead of their absolute performance metrics. Many social, economic, and business phenomena exemplify such competitive schemes, ranging from college admissions, political campaigns, advertising, and organizational hierarchies, to warfare. The economics literature has long recognized contests and tournaments as a convenient and efficient incentive scheme to remedy the moral hazard problem, especially when the production process is subject to random perturbation or the measurement of input/output is imprecise or costly. An enormous amount of scholarly effort has been devoted to developing tractable theoretical models, unveiling the fundamentals of the strategic interactions that underlie such competitions, and exploring the optimal design of contest rules. This voluminous literature has enriched basic contest/tournament models by introducing different variations to the modeling, such as dynamic structure, incomplete and asymmetric information, multi-battle confrontations, sorting and entry, endogenous prize allocation, competitions in groups, and contestants with alternative risk attitudes, among other things.

Econometrics for Modelling Climate Change

Jennifer L. Castle and David F. Hendry

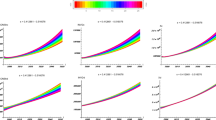

Shared features of economic and climate time series imply that tools for empirically modeling nonstationary economic outcomes are also appropriate for studying many aspects of observational climate-change data. Greenhouse gas emissions, such as carbon dioxide, nitrous oxide, and methane, are a major cause of climate change as they cumulate in the atmosphere and reradiate the sun’s energy. As these emissions are currently mainly due to economic activity, economic and climate time series have commonalities, including considerable inertia, stochastic trends, and distributional shifts, and hence the same econometric modeling approaches can be applied to analyze both phenomena. Moreover, both disciplines lack complete knowledge of their respective data-generating processes (DGPs), so model search retaining viable theory but allowing for shifting distributions is important. Reliable modeling of both climate and economic-related time series requires finding an unknown DGP (or close approximation thereto) to represent multivariate evolving processes subject to abrupt shifts. Consequently, to ensure that the DGP is nested within a much larger set of candidate determinants, model formulations to search over should comprise all potentially relevant variables, their dynamics, indicators for perturbing outliers, shifts, trend breaks, and nonlinear functions, while retaining well-established theoretical insights. Econometric modeling of climate-change data requires a sufficiently general model selection approach to handle all these aspects. Machine learning with multipath block searches commencing from very general specifications, usually with more candidate explanatory variables than observations, to discover well-specified and undominated models of the nonstationary processes under analysis, offers a rigorous route to analyzing such complex data. 
To do so requires applying appropriate indicator saturation estimators (ISEs), a class that includes impulse indicators for outliers, step indicators for location shifts, multiplicative indicators for parameter changes, and trend indicators for trend breaks. All ISEs entail more candidate variables than observations, often by a large margin when implementing combinations, yet can detect the impacts of shifts and policy interventions to avoid nonconstant parameters in models, as well as improve forecasts. To characterize nonstationary observational data, one must handle all substantively relevant features jointly: A failure to do so leads to nonconstant and mis-specified models and hence incorrect theory evaluation and policy analyses.

The Effects of Monetary Policy Announcements

Chao Gu, Han Han, and Randall Wright.

The effects of news (i.e., information innovations) are studied in dynamic general equilibrium models where liquidity matters. As a leading example, news can be announcements about monetary policy directions. In three standard theoretical environments—an overlapping generations model of fiat currency, a new monetarist model accommodating multiple payment methods, and a model of unsecured credit—transition paths are constructed between an announcement and the date at which events are realized. Although the economics is different, in each case, news about monetary policy can induce volatility in financial and other markets, with transitions displaying booms, crashes, and cycles in prices, quantities, and welfare. This is not the same as volatility based on self-fulfilling prophecies (e.g., cyclic or sunspot equilibria) studied elsewhere. Instead, the focus is on the unique equilibrium that is stationary when parameters are constant but still delivers complicated dynamics in simple environments due to information and liquidity effects. This is true even for classically-neutral policy changes. The induced volatility can be bad or good for welfare, but using policy to exploit this in practice seems difficult because outcomes are very sensitive to timing and parameters. The approach can be extended to include news of real factors, as seen in examples.

Fractional Integration and Cointegration

Javier Hualde and Morten Ørregaard Nielsen.

Fractionally integrated and fractionally cointegrated time series are classes of models that generalize standard notions of integrated and cointegrated time series. The fractional models are characterized by a small number of memory parameters that control the degree of fractional integration and/or cointegration. In classical work, the memory parameters are assumed known and equal to 0, 1, or 2. In the fractional integration and fractional cointegration context, however, these parameters are real-valued and are typically assumed unknown and estimated. Thus, fractionally integrated and fractionally cointegrated time series can display very general types of stationary and nonstationary behavior, including long memory, and this more general framework entails important additional challenges compared to the traditional setting. Modeling, estimation, and testing in the context of fractional integration and fractional cointegration have been developed in time and frequency domains. Related to both alternative approaches, theory has been derived under parametric or semiparametric assumptions, and as expected, the obtained results illustrate the well-known trade-off between efficiency and robustness against misspecification. These different developments form a large and mature literature with applications in a wide variety of disciplines.
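As a minimal sketch of what a real-valued memory parameter means in practice, the fractional difference operator (1 − L)^d can be expanded as a power series in the lag operator L, with coefficients given by a simple recursion. The function names `frac_diff_weights` and `frac_diff` are hypothetical, and the truncation at the start of the sample is an assumption of this illustration.

```python
def frac_diff_weights(d, n_terms):
    """Coefficients pi_k in (1 - L)^d = sum_k pi_k L^k.

    Uses the recursion pi_0 = 1, pi_k = pi_{k-1} * (k - 1 - d) / k.
    """
    weights = [1.0]
    for k in range(1, n_terms):
        weights.append(weights[-1] * (k - 1 - d) / k)
    return weights


def frac_diff(series, d):
    """Apply the truncated filter: pre-sample values are treated as zero."""
    w = frac_diff_weights(d, len(series))
    return [
        sum(w[k] * series[t - k] for k in range(t + 1))
        for t in range(len(series))
    ]


# d = 1 reproduces the ordinary first difference, d = 2 the second difference,
# while a fractional d gives slowly decaying weights (long memory).
print(frac_diff_weights(1, 4))    # 1, -1, then zeros
print(frac_diff_weights(2, 4))    # 1, -2, 1, then zeros
print(frac_diff_weights(0.5, 4))  # 1, -0.5, -0.125, -0.0625
```

The integer cases recover the classical setting in which the memory parameter is known and equal to 0, 1, or 2; for non-integer d the weights never vanish, which is the source of the long-memory behavior mentioned above.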

Frequency-Domain Approach in High-Dimensional Dynamic Factor Models

Marco Lippi.

High-Dimensional Dynamic Factor Models have their origin in macroeconomics, specifically in empirical research on Business Cycles. The central idea, going back to the work of Burns and Mitchell in the 1940s, is that the fluctuations of all the macro and sectoral variables in the economy are driven by a “reference cycle,” that is, a one-dimensional latent cause of variation. After a fairly long process of generalization and formalization, the literature settled at the beginning of the 2000s on a model in which (1) both $n$, the number of variables in the dataset, and $T$, the number of observations for each variable, may be large, and (2) all the variables in the dataset depend dynamically on a fixed number, independent of $n$, of “common factors,” plus variable-specific components, usually called “idiosyncratic.” The structure of the model can be exemplified as follows:

$$x_{it} = \alpha_i u_t + \beta_i u_{t-1} + \xi_{it}, \qquad i = 1, \dots, n, \quad t = 1, \dots, T, \tag{$*$}$$

where the observable variables $x_{it}$ are driven by the white noise $u_t$, the common factor, which is common to all the variables, and by the idiosyncratic component $\xi_{it}$. The common factor $u_t$ is orthogonal to the idiosyncratic components $\xi_{it}$, and the idiosyncratic components are mutually orthogonal (or weakly correlated). Lastly, the variations of the common factor $u_t$ affect the variable $x_{it}$ dynamically, that is, through the lag polynomial $\alpha_i + \beta_i L$. Asymptotic results for High-Dimensional Factor Models, particularly consistency of estimators of the common factors, are obtained for both $n$ and $T$ tending to infinity. Model $(*)$, generalized to allow for more than one common factor and a rich dynamic loading of the factors, has been studied in a fairly vast literature, with many applications based on macroeconomic datasets: (a) forecasting of inflation, industrial production, and unemployment; (b) structural macroeconomic analysis; and (c) construction of indicators of the Business Cycle.
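The example model (*) can be simulated in a few lines. The following is a rough sketch under stated assumptions: the loadings, the common shock, and the idiosyncratic components are all drawn as independent standard normals, and the sizes n = 50 and T = 2000 are arbitrary illustrative choices, none of which come from the original text.

```python
import numpy as np

rng = np.random.default_rng(0)
n, T = 50, 2000  # illustrative cross-section and time dimensions

# Hypothetical loadings alpha_i, beta_i and a common white-noise shock u_t.
alpha = rng.normal(size=n)
beta = rng.normal(size=n)
u = rng.normal(size=T + 1)      # one extra draw supplies the initial lag
xi = rng.normal(size=(n, T))    # mutually orthogonal idiosyncratic components

# x_it = alpha_i * u_t + beta_i * u_{t-1} + xi_it
x = alpha[:, None] * u[1:] + beta[:, None] * u[:-1] + xi

# Eigenvalues of the n x n sample covariance matrix, largest first.
eigvals = np.linalg.eigvalsh(np.cov(x))[::-1]
print(eigvals[:4])
```

With these settings two eigenvalues of the covariance matrix stand well apart from the rest, because a single dynamic factor loaded with one lag spans a two-dimensional static factor space (u_t and u_{t-1}). This is one way to see the difference between counting factors dynamically and counting them statically through the covariance matrix.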
This literature can be broadly classified as belonging to the time- or the frequency-domain approach. The works based on the second are the subject of the present chapter. We start with a brief description of early work on Dynamic Factor Models. Formal definitions and the main Representation Theorem follow. The latter determines the number of common factors in the model by means of the spectral density matrix of the vector $(x_{1t}\; x_{2t}\; \cdots\; x_{nt})$. Dynamic principal components, based on the spectral density of the $x$’s, are then used to construct estimators of the common factors. These results, obtained in the early 2000s, are compared to the literature based on the time-domain approach, in which the covariance matrix of the $x$’s and its (static) principal components are used instead of the spectral density and dynamic principal components. Dynamic principal components produce two-sided estimators, which are good within the sample but unfit for forecasting. The estimators based on the time-domain approach are simple and one-sided. However, they require the restriction of finite dimension for the space spanned by the factors. Recent papers have constructed one-sided estimators based on the frequency-domain method for the unrestricted model. These estimators exploit results on stochastic processes of dimension $n$ that are driven by a $q$-dimensional white noise, with $q$

General Equilibrium Theory of Land

Masahisa Fujita.

Land is everywhere: the substratum of our existence. In addition, land is intimately linked to the dual concept of location in human activity. Together, land and location are essential ingredients for the lives of individuals as well as for national economies. In the early 21st century, there exist two different approaches to incorporating land and location into a general equilibrium theory. Dating from the classic work of von Thünen (1826), a rich variety of land-location density models have been developed. In a density model, a continuum of agents is distributed over a continuous location space. Given that simple calculus can be used in the analysis, these density models continue to be the “workhorse” of urban economics and location theory. However, the behavioral meaning of each agent occupying an infinitesimal “density of land” has long been in question. Given this situation, a radically new approach, called the σ-field approach, was developed in the mid-1980s for modeling land in a general equilibrium framework. In this approach: (1) the totality of land, L, is specified as a subset of ℝ², (2) all possible land parcels in L are given by the σ-field of Lebesgue measurable subsets of L, and (3) each of a finite number of agents is postulated to choose one such parcel. Starting with Berliant (1985), increasingly sophisticated σ-field models of land have been developed. Given these two different approaches to modeling land within a general equilibrium framework, several attempts have been made to bridge the gap between them. But while a systematic study of the relationship between density models and σ-field models remains to be completed, the clarification of this relationship could open a new horizon toward a general equilibrium theory of land.

Geography, Trade, and Power-Law Phenomena

Pao-Li Chang and Wen-Tai Hsu.

This article reviews interrelated power-law phenomena in geography and trade. Given the empirical evidence on the gravity equation in trade flows across countries and regions, its theoretical underpinnings are reviewed. The gravity equation amounts to saying that trade flows follow a power law in distance (or geographic barriers). It is concluded that in an environment with firm heterogeneity, the power law in firm size is the key condition for the gravity equation to arise. A distribution is said to follow a power law if its tail probability follows a power function in the distribution’s right tail. The second part of this article reviews the literature that provides the microfoundation for the power law in firm size and reviews how this power law (in firm size) may be related to the power laws in other distributions (in incomes, firm productivity, and city size).
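The definition of a power law given above can be made concrete with a small sketch. It assumes an exact Pareto distribution, for which the tail probability is Pr(X > x) = (x / x_min)^(−α) for x ≥ x_min, and recovers the exponent α by maximum likelihood. The function names, the exponent 2.0, and the sample size are illustrative assumptions rather than values from the article.

```python
import math
import random


def sample_pareto(alpha, x_min, size, seed=0):
    """Draw from a Pareto law by inverting Pr(X > x) = (x / x_min) ** (-alpha)."""
    rng = random.Random(seed)
    # 1 - rng.random() lies in (0, 1], which avoids raising zero to a negative power.
    return [x_min * (1.0 - rng.random()) ** (-1.0 / alpha) for _ in range(size)]


def tail_exponent_mle(xs, x_min):
    """Maximum-likelihood estimate of alpha using observations at or above x_min."""
    tail = [x for x in xs if x >= x_min]
    return len(tail) / sum(math.log(x / x_min) for x in tail)


# Hypothetical "firm sizes" with true tail exponent 2.0.
sizes = sample_pareto(alpha=2.0, x_min=1.0, size=20000)
alpha_hat = tail_exponent_mle(sizes, x_min=1.0)
print(round(alpha_hat, 2))
```

In empirical work the power law is usually claimed only for the right tail, so the threshold `x_min` has to be chosen or estimated; here it is known by construction.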

The Implications of School Assignment Mechanisms for Efficiency and Equity

Atila Abdulkadiroğlu.

Parental choice over public schools has become a major policy tool to combat inequality in access to schools. Traditional neighborhood-based assignment is being replaced by school choice programs, broadening families’ access to schools beyond their residential location. Demand and supply in school choice programs are cleared via centralized admissions algorithms. Heterogeneous parental preferences and admissions policies create trade-offs between efficiency and equity. The data from centralized admissions algorithms can be used effectively for credible research design toward better understanding of school effectiveness, which in turn can be used for school portfolio planning and student assignment based on match quality between students and schools.
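The abstract does not name a specific admissions algorithm. As one possible illustration of how a centralized mechanism clears demand and supply, the sketch below implements student-proposing deferred acceptance, a well-known mechanism in the school choice literature. Its use here is an assumption, and the students, schools, priorities, and capacities are all hypothetical.

```python
def deferred_acceptance(student_prefs, school_priorities, capacities):
    """Student-proposing deferred acceptance.

    student_prefs: student -> list of schools, most preferred first.
    school_priorities: school -> list of students, highest priority first
        (assumed to rank every student who may apply).
    capacities: school -> number of seats.
    Returns a dict mapping each assigned student to a school.
    """
    rank = {
        school: {student: r for r, student in enumerate(order)}
        for school, order in school_priorities.items()
    }
    next_choice = {student: 0 for student in student_prefs}
    held = {school: [] for school in school_priorities}
    unmatched = list(student_prefs)

    while unmatched:
        student = unmatched.pop()
        prefs = student_prefs[student]
        if next_choice[student] >= len(prefs):
            continue  # list exhausted: the student stays unassigned
        school = prefs[next_choice[student]]
        next_choice[student] += 1
        # The school tentatively holds its highest-priority applicants.
        held[school].append(student)
        held[school].sort(key=lambda s: rank[school][s])
        if len(held[school]) > capacities[school]:
            unmatched.append(held[school].pop())  # reject the lowest priority

    return {s: school for school, students in held.items() for s in students}


# Hypothetical example: school X has one seat, school Y has two.
assignment = deferred_acceptance(
    student_prefs={"a": ["X", "Y"], "b": ["X", "Y"], "c": ["Y", "X"]},
    school_priorities={"X": ["b", "a", "c"], "Y": ["a", "b", "c"]},
    capacities={"X": 1, "Y": 2},
)
print(assignment)
```

In this example students a and b both rank X first, b holds the higher priority there, and a is placed at its second choice. The priority lists are where admissions policy enters, which is one source of the efficiency and equity trade-offs mentioned above.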

Improving on Simple Majority Voting by Alternative Voting Mechanisms

Jacob K. Goeree, Philippos Louis, and Jingjing Zhang.

Majority voting is the predominant mechanism for collective decision making. It is used in a broad range of applications, ranging from national referenda to small group decision making. It is simple, transparent, and induces voters to vote sincerely. However, it is increasingly recognized that it has some weaknesses. First of all, majority voting may lead to inefficient outcomes. This happens because it does not allow voters to express the intensity of their preferences. As a result, an indifferent majority may win over an intense minority. In addition, majority voting suffers from the “tyranny of the majority,” i.e., the risk of repeatedly excluding minority groups from representation. A final drawback is the “winner-take-all” nature of majority voting, i.e., it offers no compensation for losing voters. Economists have recently proposed various alternative mechanisms that aim to produce more efficient and more equitable outcomes. These can be classified into three different approaches. With storable votes, voters allocate a budget of votes across several issues. Under vote trading, voters can exchange votes for money. Under linear voting or quadratic voting, voters can buy votes at a linear or quadratic cost respectively. The properties of different alternative mechanisms can be characterized using theoretical modeling and game theoretic analysis. Lab experiments are used to test theoretical predictions and evaluate their fitness for actual use in applications. Overall, these alternative mechanisms hold the promise to improve on majority voting but have their own shortcomings. Additional theoretical analysis and empirical testing are needed to produce a mechanism that robustly delivers efficient and equitable outcomes.
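A small numerical sketch can show how an indifferent majority may defeat an intense minority, and how buying votes at a quadratic cost can change the outcome. It rests on a stylized assumption that is not taken from the text: each voter buys a number of votes proportional to the strength of their valuation, which would follow if voters traded off a linear benefit against the quadratic cost. All names and valuations are hypothetical.

```python
def vote_cost(votes, rule):
    """Cost of casting `votes` votes under linear or quadratic pricing."""
    if rule == "linear":
        return abs(votes)
    if rule == "quadratic":
        return votes ** 2
    raise ValueError("rule must be 'linear' or 'quadratic'")


def majority_passes(values):
    """One person, one vote: the proposal passes if supporters outnumber opponents."""
    supporters = sum(1 for v in values if v > 0)
    opponents = sum(1 for v in values if v < 0)
    return supporters > opponents


def quadratic_voting_passes(values, scale=0.5):
    """Assumed behavior: each voter casts scale * value votes (sign = direction)."""
    return sum(scale * v for v in values) > 0


# Two voters gain 5 each if the proposal passes; three voters lose 1 each.
values = [5, 5, -1, -1, -1]

print(majority_passes(values))          # the majority of three blocks the proposal
print(quadratic_voting_passes(values))  # the intense minority prevails
print(sum(values) > 0)                  # passing maximizes total surplus here
print(vote_cost(2.5, "quadratic"))      # cost paid by each intense voter
```

In this example the quadratic-voting outcome coincides with the surplus-maximizing one. Whether that holds in general depends on how voters actually behave, which is what the theoretical and experimental work described above examines.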

Incentives and Performance of Healthcare Professionals

Martin Chalkley.

Economists have long regarded healthcare as a unique and challenging area of economic activity on account of the specialized knowledge of healthcare professionals (HCPs) and the relatively weak market mechanisms that operate. This places a consideration of how motivation and incentives might influence performance at the center of research. As in other domains, economists have tended to focus on financial mechanisms and, when considering HCPs, have therefore examined how existing payment systems and potential alternatives might affect behavior. There has long been a concern that simple arrangements such as fee-for-service, capitation, and salary payments might induce poor performance, and that has led to extensive investigation, both theoretical and empirical, of the linkage between payment and performance. An extensive and rapidly expanding field in economics, contract theory and mechanism design, has been applied to study these issues. The theory has highlighted both the potential benefits and the risks of incentive schemes to deal with the information asymmetries that abound in healthcare. There has been some expansion of such schemes in practice, but these are often limited in application and the evidence for their effectiveness is mixed. Understanding why there is this relatively large gap between concept and application gives a guide to where future research can most productively be focused.

The Indeterminacy School in Macroeconomics

Roger E. A. Farmer.