
Random Walk Theory

It is a financial theory that states that the stock prices in a stock market are independent of their historical trends.

Christy currently works as a senior associate for EdR Trust, a publicly traded multi-family REIT. Prior to joining EdR Trust, Christy worked for CBRE in investment property sales. Before completing her MBA and breaking into finance, Christy founded an education startup, which she actively pursued for seven years, and worked as an internal auditor for the U.S. Department of State and the CIA.

Christy has a Bachelor of Arts from the University of Maryland and a Master of Business Administration from the University of London.

Currently an investment analyst focused on the TMT sector at 1818 Partners (a New York-based hedge fund), Sid previously worked in private equity at BV Investment Partners and BBH Capital Partners and, before that, in investment banking at UBS.

Sid holds a BS from The Tepper School of Business at Carnegie Mellon.

What Is Random Walk Theory?


Random walk theory, or the random walk hypothesis, is a financial theory that states that stock prices in a stock market are independent of their historical trends. This means that the prices of these securities follow an irregular, unpredictable path.

The theory further states that the future price of a stock cannot be predicted accurately, even by using its historical prices.
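To make "independent of historical trends" concrete, here is a minimal Python sketch. It assumes the simplest possible model, a hypothetical one in which each day's price moves up or down by one unit with equal probability regardless of the past, and measures the lag-1 autocorrelation of the price changes. Under this assumption the value should sit close to zero, meaning yesterday's move says nothing about today's. The function names are illustrative, and real returns are not this simple, so treat it as an illustration of the idea rather than a test of real markets.

```python
import random


def random_walk(n_steps, start=100.0, step=1.0, seed=None):
    """Hypothetical model: each move is +step or -step with equal probability."""
    rng = random.Random(seed)
    prices = [start]
    for _ in range(n_steps):
        prices.append(prices[-1] + rng.choice((step, -step)))
    return prices


def lag1_autocorrelation(xs):
    """Sample correlation between each value and the value just before it."""
    a, b = xs[:-1], xs[1:]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


prices = random_walk(10_000, seed=42)
# Daily price changes: today's price minus yesterday's
changes = [today - yesterday for yesterday, today in zip(prices, prices[1:])]
print(f"lag-1 autocorrelation of price changes: {lag1_autocorrelation(changes):+.3f}")
```

Note that the price levels themselves are strongly correlated from one day to the next, since today's price starts from yesterday's; it is the changes that carry no memory.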

It all started with Jules Regnault, a mathematician turned stockbroker, who wrote a book named “Calcul des Chances et Philosophie de la Bourse,” or “The Calculation of Chances and the Philosophy of the Stock Exchange.”

He pioneered the application of mathematics to stock market analysis. Another French mathematician, Louis Bachelier, later published his thesis, “Théorie de la Spéculation,” or the “Theory of Speculation,” in 1900.

The theory was popularized by the book “A Random Walk Down Wall Street,” authored by American economist Burton Malkiel.

His claim that stock prices follow an unpredictable, random path has been widely criticized by experts, both in the United States and worldwide.

This theory rests on the following two broad assumptions:

- The price of the securities on the stock market follows a random trend

- There is no dependency between any two securities being traded in the stock market

We will discuss criticisms of these assumptions in detail in the coming sections.

Interestingly, Malkiel himself invoked the term “efficient market” when delineating his theory, and the concept plays a crucial role in it.

He argues that stock prices cannot be predicted through any analysis, because they follow a random path.

Let’s delve into this a tad bit.

An efficient market, by definition, is a market where all the information required for trading in the stock market is freely available; that is, the market is transparent, and all the players have equal access to general information about the stocks being traded in the market.

By this definition, an efficient market allows a trader to analyze all the necessary information about a stock they plan to buy and to plan their strategy around the information available.

Let us hold onto that thought and move to the next section. It’s going to be an interesting yet mind-boggling conversation!

How does the Random Walk Theory work?

Although criticized by many stock analysts, Random Walk Theory has held up in the past. The most recent example was the outbreak of COVID-19, which was completely unanticipated and beyond anyone's control.

This theory states that if stock prices are random, then the future price of a stock cannot be predicted.

Fund managers are professionals whom a company hires to manage its investments, which often involves predicting stock prices from their recent historical records.

Let us say that the managers' forecasts work out and the company earns a considerable profit. There are two broad explanations for this.

First, the experts' forecasting and analysis were accurate enough to earn the company that profit.

Second, the market may simply have favored the company during that period, so the profits came from coincidence and luck.

As for the first explanation, if accurate predictions make the company more likely to earn large returns, why do so many managers, even after applying rigorous research techniques and models, end up earning no more than their initial investment, or even less?

As for the second explanation, the market may simply have trended in the company's favor, letting it earn alpha, that is, returns above its benchmark.

Random walk theory says that such profits are earned by nothing but chance, as future stock prices cannot be determined in advance.
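The "luck" explanation can be sketched as a toy simulation in Python. It assumes, hypothetically, that each manager has zero skill and beats the market in any given year with a 50% chance, independently of other years. Even so, about 1 in 32 managers (0.5 to the fifth power) should beat the market five years in a row purely by chance. The function name and parameters are illustrative.

```python
import random


def lucky_streaks(n_managers=1_000, n_years=5, p_beat=0.5, seed=0):
    """Count managers who beat the market every single year purely by chance.

    Each manager's yearly outcome is an independent coin flip with
    probability p_beat of beating the market; no skill is modeled.
    """
    rng = random.Random(seed)
    streaks = 0
    for _ in range(n_managers):
        if all(rng.random() < p_beat for _ in range(n_years)):
            streaks += 1
    return streaks


observed = lucky_streaks()
expected = 1_000 * 0.5 ** 5  # 31.25 managers on average
print(f"beat the market 5 years running: {observed} (expected about {expected:.0f})")
```

A track record of several winning years therefore does not, on its own, distinguish skill from luck when many managers are competing.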

Let's now return to our earlier conversation on efficient markets. As mentioned, an efficient market provides all the information required to analyze a company's stock.

One might conclude from this that, in an efficient market, the future price of a stock can be predicted from its historical movements and trends.

If this is true, why do some expert analysts bear losses while others do not?

This suggests that whether one gains or loses is a matter of luck.

However, many experts disagree with this theory. In a world where computers achieve what once seemed impossible, algorithms come in handy for trading.

A strong algorithm will bear more fruit, and a weak one will incur more losses.

Let's take a real-world example to illustrate Random Walk Theory.

We all remember when COVID-19 hit the world economy and stock markets crashed worldwide. This was a sudden event, and a factor beyond anyone's control.

Even investors who had been investing for a long time, and who knew their strategies and how to move into and out of the market, could do nothing but bear huge losses.

This is one of those instances where no pre-planned strategy or strong algorithm could improve one's market standing in the face of such adversity.

Pros and Criticisms of Random Walk Theory

Like every theory, Random Walk Theory has advantages and disadvantages to keep in mind.

A few of the pros and cons are:

- Markets are not entirely efficient. This means the information required to invest in a stock is not fully transparent.

- Many insiders acquire information before the general public, giving them an edge over other investors. To overcome this catch, one can invest in ETFs (exchange-traded funds) to earn decent returns. ETFs are funds that hold multiple securities as their underlying assets. An ETF is an excellent option for a risk-averse person, as it offers portfolio diversification.

- Moreover, history shows that a stock price can fluctuate sharply on even insignificant news about a company. Since sentiment can't be predicted, stock movements are unpredictable and erratic.

- Random Walk Theory is gaining popularity among passive investors, as fund managers' failure to outperform the index strengthens investors' belief in randomness.

- Critics of Random Walk Theory argue that it is not impossible to outperform the market. They hold that, with careful analysis of a stock and of possible future events affecting it, one can earn a decent profit.

- Critics further state that this theory is baseless and that stocks instead follow a trend that can be deduced from historical data, combined with possible future factors that might affect the stock's movement.

- There may be countless factors influencing a stock's movement, which can make detecting the pattern cumbersome. But, critics argue, a pattern that is not readily visible may still exist.

Random walk theory in the stock market

It’s time to resume the conversation that we began in the first section.

So far, we've discussed how this theory states that stock movements are unpredictable and that no financial analysis can help one outperform the market index.

When we want to grow our money, we invest in stocks, wait for them to appreciate over time, and then take our profits. The rebuttal here is: if earning through stocks is that easy, why do so many investors end up losing money?

And if Random Walk Theory doesn't hold up in practice, why do some investors profit from a stock while other investors lose money on the same stock?

Critics answer that it is all about timing and the future growth prospects of that particular company. The best profits are earned when the market is unstable and erratic.

Investors need to position themselves in the market strategically so that the market favors them. Moreover, Random Walk Theory doesn't account for insider information or an information edge, which critics see as evidence against the claim that stock prices are random.

In essence, according to Random Walk Theory, today's stock price is independent of yesterday's; each price is an aggregate result of the information available at that time. Because many fund managers have failed to generate sufficient profits from stocks, more and more investors have turned to index funds.

Index funds are a kind of mutual fund or ETF that tracks the market value of a specified basket of underlying assets.

All in all, Random Walk Theory assumes that trading takes place between informed buyers and sellers with completely different strategies, so the market ultimately follows a random path.

So far, we have dwelt on the intricacies of this theory. Which arguments for or against it do you find reasonable?

Over the years, the debate between this theory's supporters and critics has never paused. Where each individual stands often depends on how much they have invested in the stock market.

The best way to deal with this question is to act according to what is best for you as an investor. For example, if you believe that stock movements are random, you could invest your money in broad-market ETFs, which capture the return of the whole market in a single portfolio.

As mentioned earlier, ETFs are diversified: they hold a mix of assets that, blended into a single portfolio, reduce risk compared with holding individual stocks. Thus, this option suits risk-averse investors.

If, on the other hand, you think that stocks are not random but form patterns from which future prices can be predicted, you should perform financial analysis and forecast returns for your investments over future periods.

After all, investing some of your wealth is better than investing nothing.
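The diversification argument for ETFs can be illustrated with the standard portfolio-variance formula. The Python sketch below assumes, purely for illustration, equally weighted assets that each have 30% annual volatility and are uncorrelated with one another; under those assumptions, portfolio volatility falls as 1/sqrt(n). Real stocks tend to be positively correlated, so the actual reduction is smaller, and diversification lowers risk rather than guaranteeing better returns.

```python
import math


def portfolio_volatility(weights, vols, corr):
    """Portfolio volatility: sqrt of sum over i, j of w_i * w_j * s_i * s_j * rho_ij."""
    n = len(weights)
    variance = sum(
        weights[i] * weights[j] * vols[i] * vols[j] * corr[i][j]
        for i in range(n)
        for j in range(n)
    )
    return math.sqrt(variance)


def equal_weight_uncorrelated(n, vol):
    """Hypothetical case: n equally weighted assets, same volatility, zero correlation."""
    weights = [1.0 / n] * n
    vols = [vol] * n
    corr = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    return portfolio_volatility(weights, vols, corr)


for n in (1, 4, 25, 100):
    print(f"{n:3d} assets: portfolio volatility {equal_weight_uncorrelated(n, 0.30):.1%}")
```

With perfectly correlated assets (every entry of `corr` equal to 1), the same function returns the single-asset volatility, which is why diversification only helps to the extent that holdings do not move together.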


Researched and authored by Anushka Raj Sonkar


The Random Walk and the Efficient Market Hypotheses

Early in the 20th century, statisticians noticed that changes in stock prices seem to follow a fair-game pattern. This led to the random walk hypothesis, first espoused by French mathematician Louis Bachelier in 1900, which states that stock prices are random, like the steps taken by a drunk, and therefore unpredictable. A few studies appeared in the 1930s, but the random walk hypothesis was studied, and debated, intensively in the 1960s. The current consensus is that the random walk is explained by the efficient market hypothesis: markets quickly and efficiently react to new information about stocks, so most fluctuations in prices are explained by changes in the instantaneous demand and supply of any given stock, causing the random walk in prices.

The efficient market hypothesis (EMH) states that financial markets are efficient: prices already reflect all known information concerning a stock or other security, and prices rapidly adjust to any new information. Information includes not only what is currently known about a stock, but also any future expectations, such as earnings or dividend payments. EMH seeks to explain the random walk hypothesis by positing that only new information will move stock prices significantly; since new information is presently unknown and arrives at random, future movements in stock prices are also unknown and, thus, random. Hence, it is not possible to outperform the market by picking undervalued stocks, since the efficient market hypothesis posits that there are no undervalued or overvalued stocks (otherwise, one could earn abnormal profits by buying the former or selling short the latter).

The basis of the efficient market hypothesis is that the market consists of many rational investors constantly reading the news and reacting quickly to any significant new information about a security. Similarly, many fund managers constantly read new reports and news, and use high-speed computers to sift through financial data looking for mispriced securities. High-frequency traders, likewise, use high-speed computer systems located near exchanges to execute trades based on price discrepancies between securities on different exchanges or between related securities with interrelated prices, such as a stock and options based on the stock, or to front-run market orders.

To summarize, the efficient market hypothesis rests on the premises that:

- information is widely available to all investors;

- investors use this information to analyze the economy, the markets, and individual securities to make trading decisions;

- most events having a major impact on stock prices — such as labor strikes, major lawsuits, and accidents — are random, unpredictable events, and their occurrences are quickly broadcast to investors;

- investors will react quickly to new information.

The efficient market hypothesis has three forms, differing in what information is considered:

- The weak form considers only past market trading information, such as stock prices, trading volume, and short interest. Hence, even the weak form of the EMH implies that technical analysis can't work, since technical analysis relies exclusively on past trading data to forecast future price movements.

- The semi-strong form extends the information to public information other than market data, such as news, accounting reports, company management, patents, products of the company, and analysts' recommendations.

- The strong form extends the information further to include not only public information, but also private information, typically held by corporate insiders, such as corporate officers and executives. Obviously, corporate insiders can make abnormal profits by trading their company's stock before a major corporate change is communicated to the public, which is why such insider trading is banned by the Securities and Exchange Commission (SEC). Corporate insiders can trade their company's stock, but only if the trade is not based on a major development that few people know about, such as a merger, a new product line, or significant key appointments within the company.
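The weak form's claim about technical analysis can be illustrated with a hypothetical trading rule applied to a simulated random walk. The Python sketch below assumes price changes of +1 or -1 with equal probability and a naive momentum rule (go long after an up move, short after a down move), with no transaction costs; the rule and function names are illustrative, not a real strategy. If prices really follow such a walk, the rule's average profit per trade should hover around zero.

```python
import random


def simulate_changes(n, seed=None):
    """Hypothetical random walk: each price change is +1 or -1 with equal odds."""
    rng = random.Random(seed)
    return [rng.choice((1, -1)) for _ in range(n)]


def momentum_pnl(changes):
    """Naive trend rule: long one unit after an up move, short after a down move.

    Returns the total profit or loss, ignoring transaction costs.
    """
    pnl = 0
    for previous, following in zip(changes, changes[1:]):
        position = 1 if previous > 0 else -1
        pnl += position * following
    return pnl


changes = simulate_changes(100_000, seed=7)
average = momentum_pnl(changes) / (len(changes) - 1)
print(f"average P&L per trade: {average:+.4f}")
```

On a series with a genuine trend, such as `[1, 1, 1, 1]`, the same rule profits on every trade; the weak form asserts that real price histories offer no such exploitable pattern.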

EMH posits that only information about the security affects its price. However, security prices also depend on how much money is invested in the markets and on how many investment opportunities are available. For instance, EMH does not account for the desirability of other investments, such as Bitcoin or gold. Another assumption of EMH is that information dispersal and reactions to that information are instant, which obviously is not the case. Professional traders will get the information sooner and react quicker, while retail investors will lag behind, if they react at all. Another tacit assumption is that investors know what price a security should be, based on the available information. But, obviously, investors will value the information differently, as people are wont to do.

Primary evidence that information about a security is not the only thing affecting its price, and that in some cases it may not even be a factor, is the wild fluctuation of Bitcoin. Bitcoin is a cryptocurrency that has little practical value as money and does not even have fiat value, so its true value cannot be anything other than 0; there will never be fundamental information about Bitcoin that should change its price. Yet the price of a single Bitcoin has exceeded $40,000 and ranged over thousands of dollars during January 2021 alone, even though there was no fundamental news that would directly affect it, since its intrinsic value is always 0. (I discuss why cryptocurrencies have no practical value as money, and why they fluctuate in price, in my article Money: Commodity, Representative, Fiat, and Electronic Money.) That the price changes substantially every day causes people to invest in it simply to profit from those changes. The price changes because demand changes while supply is limited, but there is no fundamental reason why any cryptocurrency should trade at a certain price, so EMH cannot apply to the trading prices of cryptocurrencies. The trading of cryptocurrencies is pure speculation. The irony of Bitcoin is that its price is often determined by the investors themselves, who hype Bitcoin to increase its perceived value. For instance, if a famous celebrity hyped Bitcoin, their followers might buy it up. Or if a major bank says that Bitcoin will reach a certain price, the bank may increase demand simply by making that projection, even though such a projection cannot possibly have any foundation, since the intrinsic value of Bitcoin is always 0.

Dogecoin is another example: a digital currency created in 2013 as a joke, it zoomed more than 1,500% between January 28 and February 8, 2021, during which time it was being mentioned on social media by celebrities Elon Musk, Snoop Dogg, and Gene Simmons.

Although cryptocurrencies probably were not originally created as a scam, it is clear that they can be used as such. In my opinion, cryptocurrencies are the ultimate penny stock, the best pump-and-dump scheme, because their prices are mainly determined by hype! People with influence can buy Bitcoin, hype it to increase its price, then sell for a fat profit! And because Bitcoin fluctuates wildly in price, even within a short time, people can keep doing this. But since this hype is unpredictable, the price of Bitcoin will also walk randomly. EMH does not apply, because Bitcoin is not based on a company or anything else that would give it real value, so no information could ever determine its price.

The Random Walk of Prices is the Brownian Motion of Supply and Demand

In my opinion, EMH only partly explains the random walk: information would make the walk less random, so if material information about a stock is quickly dispersed, then changes in its price will seem random most of the time. But what causes this randomness? The random walk of stocks and other securities can best be explained by the same concept used to explain Brownian motion. Brownian motion, the random motion of small particles suspended in a fluid, was first observed by the botanist Robert Brown in 1827 as the random movement of tiny particles from pollen grains suspended in water. He noted that this movement continued even in a liquid at equilibrium; in other words, the particles kept moving randomly even though no evident force was moving them. Albert Einstein provided a mathematical foundation for Brownian motion in 1905, explaining it as the result of random molecular bombardment of the particles: at any given time, the molecules striking a particle on all sides are unbalanced, pushing it one way, then another. Because this bombardment is random, so is the resulting motion.
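Einstein's key result was that a particle's mean squared displacement grows in proportion to time, so its typical distance from the starting point grows like the square root of time. A minimal Python sketch, assuming each time step delivers a net kick of +1 or -1 unit with equal probability (a hypothetical simplification of molecular bombardment), reproduces that scaling by averaging over many independent walkers.

```python
import math
import random


def rms_displacement(n_steps, n_walkers=1_000, seed=1):
    """Root-mean-square distance from the start after n_steps random +/-1 kicks."""
    rng = random.Random(seed)
    total_squared = 0
    for _ in range(n_walkers):
        # Net displacement of one walker: the sum of its random kicks
        position = sum(rng.choice((1, -1)) for _ in range(n_steps))
        total_squared += position * position
    return math.sqrt(total_squared / n_walkers)


for n in (100, 400, 1600):
    print(f"{n:5d} steps: rms distance {rms_displacement(n):6.1f}, sqrt(n) = {math.sqrt(n):.1f}")
```

Quadrupling the number of steps roughly doubles the typical distance travelled, which is the square-root scaling Einstein derived.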

So how does this apply to the stock market? Economists would say that stock and other security prices result from the equilibrium of supply and demand; however, it is the instantaneous supply and demand that determines prices, and at any given time, supply and demand will differ simply due to chance.

For instance, suppose that on a particular day 100 investors want to buy a particular stock and 100 investors want to sell it. Suppose further that they all believe the opening market price to be fair, that they place market orders to effect their trades, and that they are not aware of any news about the company during the day. I think you will agree that there is little chance that these traders will all come to market at the same time, even on the same day; if some of them do happen to trade at the same time, the numbers of buyers and sellers probably will not be equal, and whether there are more buyers than sellers, or vice versa, will change throughout the day. Hence, at most times of the day, there will be an instantaneous imbalance of supply and demand for the stock, which will cause the stock price to move seemingly randomly throughout the day. I say seemingly, because even though the stock price is determined by the instantaneous supply and demand of the stock, no one can know ahead of time what that equilibrium price will be.

Evidence for this explanation is that even when there is no news about a particular company, its stock will walk randomly throughout the day, because the instantaneous supply and demand vary randomly throughout the day.
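The thought experiment with 100 buyers and 100 sellers can be simulated directly. The Python sketch below assumes, hypothetically, that orders arrive in a random sequence and that each market buy order lifts the price by one tick ($0.01) while each sell order lowers it by one tick; real price impact is far more complex, and the $50.00 opening price is invented for illustration.

```python
import random


def intraday_path(n_buyers=100, n_sellers=100, open_price=50.00, tick=0.01, seed=3):
    """Price path when buy and sell market orders arrive in a random sequence.

    Hypothetical simplification: each buy order lifts the price by one tick and
    each sell order lowers it by one tick; no news arrives during the day.
    """
    rng = random.Random(seed)
    orders = [+1] * n_buyers + [-1] * n_sellers
    rng.shuffle(orders)  # random arrival order stands in for random arrival times
    ticks_from_open = [0]
    for side in orders:
        ticks_from_open.append(ticks_from_open[-1] + side)
    # Convert integer tick counts to prices, rounded to whole cents
    return [round(open_price + t * tick, 2) for t in ticks_from_open]


path = intraday_path()
print(f"open {path[0]:.2f}  high {max(path):.2f}  low {min(path):.2f}  close {path[-1]:.2f}")
```

Because buyers and sellers balance exactly, the simulated close equals the open, yet the price wanders away from it during the day purely because of the order in which traders happen to arrive.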

Nonetheless, news does move the markets, and because news is mostly unpredictable, at least for most traders, news events create some randomness. But even when news about a particular company does move its stock price significantly, the response will still have some randomness, because different traders with different amounts of capital will learn about it at different times, and limit and stop-loss orders will be triggered as the stock price changes significantly, causing the stock to zigzag up or down. And how much will the price move because of the news? Different traders will assess the news differently. Some traders will buy more on good news, believing that it will propel the stock price higher; others will sell because they believe the price has overshot its top, and these traders will trade at different times.

A good example showing that complete information about a security does not account for its entire price is the closed-end mutual fund. A closed-end fund (CEF) issues a fixed number of shares when it is created and invests the proceeds in a portfolio of securities; the fund is then closed to new investment, and its shares trade like a stock on an exchange. The net asset value (NAV) per share equals the value of all the securities in the fund divided by the number of fund shares. However, the shares often sell at a discount, sometimes a steep discount, to the fund's net asset value. This discrepancy exists because the supply and demand of CEF shares depend on factors other than the intrinsic value of the shares, and these factors also vary randomly, which partially explains the random walk.
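The NAV definition for a closed-end fund, and the size of its discount, reduce to simple arithmetic. The Python sketch uses a hypothetical fund with invented figures and follows the simplified definition in the text; in practice, NAV is computed after subtracting the fund's liabilities.

```python
def nav_per_share(holdings_value, shares_outstanding):
    """NAV per share as defined in the text (fund liabilities ignored)."""
    return holdings_value / shares_outstanding


def premium_discount(market_price, nav):
    """Positive result = premium to NAV; negative result = discount to NAV."""
    return (market_price - nav) / nav


# Hypothetical fund: $500 million of securities, 25 million shares, trading at $17.00
nav = nav_per_share(500_000_000, 25_000_000)
print(f"NAV per share: ${nav:.2f}")                              # NAV per share: $20.00
print(f"premium/discount: {premium_discount(17.00, nav):+.1%}")  # premium/discount: -15.0%
```

Here the shares trade 15% below the value of what the fund actually holds, the kind of gap that complete information about the holdings alone does not explain.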

Is the Efficient Market Hypothesis True?

I have no reason to doubt some aspects of the efficient market hypothesis, but is there another reasonable explanation for why it is difficult to outperform the market? After all, how does the efficient market hypothesis explain the stock market bubble of the latter half of the 1990s? If stock prices were simply the result of all the information about the companies and their stocks, then stock bubbles shouldn't happen, but they do. In the late 1990s, it was evident that a bubble was forming because stock prices were growing much faster than the underlying companies. You don't have to be an economist or an analyst to know that this could not continue and that stock prices would eventually decline significantly.

Some have argued that information only affects changes in prices, not their level. The problem that I have with this argument is that the old information should continually be telling investors that stock prices have already overshot their intrinsic value or their true pricing, and that investors should've been selling since even good news can't really overcome the fact that stock prices have soared much faster than the underlying businesses. But, alas, that isn't what happened.

What happened is that more and more people piled into the stock market as it soared ever higher, thinking that it would go higher still, which Alan Greenspan termed irrational exuberance. Indeed, the rest of the world joined in, buying stocks listed on United States exchanges because they, too, expected the stock market to rise. I guess they thought they would be out of the market before it dropped. Some did get out at the top, which is why the market started dropping. But most investors suffered significant losses.

Another factor propelling stock market bubbles is the fear of missing out (FOMO). As the stock market ascends higher, people brag to their friends about how much money they are making, so people not yet invested become envious and fear they are missing out, so they invest. Unfortunately, many of these people invest after the stock market has already risen considerably, paying inflated bubble prices, only to suffer grievous losses when the market declines.

After the stock market bubble came the real estate bubble of 2005 through 2007, as people believed that real estate couldn't possibly decline in price; after all, as Will Rogers once quipped, they're not making any more of the stuff. But maybe rational investors should've paused to ask: "Can real estate prices really continue to rise much faster than people's incomes?" Or maybe these rational investors thought they would ride the momentum and get out just before the market started falling. Some did get out before it fell, which is why it started falling, but most investors suffered horrendous losses.

Now some would argue that the smart money, the so-called rational investors posited in the efficient market hypothesis, got out in time. And yet, it has come to light that the biggest banks, including investment banks, suffered so many losses that they had to be bailed out by the United States government in late 2008 or be taken over by healthier banks; otherwise, they would have suffered the fate of Lehman Brothers: bankruptcy! Many of the investors working for these banks were earning huge bonuses, supposedly because they were the smart money. Although these banks didn't directly buy real estate, they did invest in mortgage-backed securities and other derivatives based on mortgages, which they considered relatively safe. And yet, these were the very same banks that didn't worry too much about the creditworthiness of their borrowers, since they could pass the credit default risk on to the buyers of the securitized loans, many of whom turned out to be other banks! Where is the rationality here?

Then came the commodities bubble. A barrel of oil was priced above $147 in the summer of 2008, only to fall to less than $40 per barrel by December of the same year. Where is the efficiency here? And then came the Bitcoin bubble of 2019, 2020, and 2021. I predict that the Bitcoin bubble will expand and contract continuously for the foreseeable future. It is simply one of the best scams ever devised! I don't believe it was devised as a scam, but it is becoming evident that its price can be easily manipulated, making it the ultimate penny stock of the 21st century! Although this is true of other cryptocurrencies as well, it is currently Bitcoin that is receiving most of the hype, which is why it fluctuates so wildly in price.

Although the efficient market hypothesis is a useful heuristic concept that may shed some light on trading and the markets, I believe a more plausible explanation for the inability of most investors to outperform the market, especially by active trading, is that so many factors affect the prices of most investment products that no one can know and quantify them all to arrive at the future price of anything. This bounded rationality explains why many economic decisions are not rational or optimal. Moreover, it is reasonable to expect that fluctuations will be more random over the short term, but that fundamental values will be more determinative over the long term. Since many factors affect prices, not just information about companies or stocks, EMH explains some effects on prices, but it does not determine prices.


The Weak, Strong, and Semi-Strong Efficient Market Hypotheses

Learn about the three versions of the efficient market hypothesis

J.B. Maverick is an active trader, commodity futures broker, and stock market analyst with 17+ years of experience, in addition to 10+ years of experience as a finance writer and book editor.


The efficient market hypothesis (EMH), as a whole, theorizes that the market is generally efficient, but the theory is offered in three different versions: weak, semi-strong, and strong.

The basic efficient market hypothesis posits that the market cannot be beaten because it incorporates all important determining information into current share prices. Therefore, stocks trade at the fairest value, meaning that they can't be purchased undervalued or sold overvalued.

According to the theory, the only way investors can gain higher returns on their investments is through purely speculative investments that pose substantial risk.

Key Takeaways

- The efficient market hypothesis posits that the market cannot be beaten because it incorporates all important information into current share prices, so stocks trade at the fairest value.

- Though the efficient market hypothesis theorizes the market is generally efficient, the theory is offered in three different versions: weak, semi-strong, and strong.

- The weak form suggests today’s stock prices reflect all the data of past prices and that no form of technical analysis can aid investors.

- The semi-strong form submits that because public information is part of a stock's current price, investors cannot utilize either technical or fundamental analysis, though information not available to the public can help investors.

- The strong form version states that all information, public and not public, is completely accounted for in current stock prices, and no type of information can give an investor an advantage on the market.

The three versions of the efficient market hypothesis are varying degrees of the same basic theory. The weak form suggests that today’s stock prices reflect all the data of past prices and that no form of technical analysis can be effectively utilized to aid investors in making trading decisions.

Advocates of the weak form believe that fundamental analysis can identify undervalued and overvalued stocks, so investors who research companies' financial statements can increase their chances of earning higher-than-market-average profits.

The semi-strong form holds that because all public information is used in the calculation of a stock's current price, investors cannot use either technical or fundamental analysis to gain higher returns in the market.

Those who subscribe to this version of the theory believe that only information that is not readily available to the public can help investors boost their returns to a performance level above that of the general market.

The strong form version of the efficient market hypothesis states that all information—both the information available to the public and any information not publicly known—is completely accounted for in current stock prices, and there is no type of information that can give an investor an advantage on the market.

Advocates for this degree of the theory suggest that investors cannot make returns on investments that exceed normal market returns, regardless of information retrieved or research conducted.

There are anomalies that the efficient market theory cannot explain and that may even flatly contradict it. For example, stocks trading at lower price/earnings (P/E) multiples have often generated higher returns.

The neglected firm effect suggests that companies not covered extensively by market analysts are sometimes mispriced relative to their true value, which gives investors the opportunity to pick stocks with hidden potential. The January effect refers to historical evidence that stock prices, especially those of small-cap stocks, tend to rise in January.

Though the efficient market hypothesis is an important pillar of modern financial theories and has a large backing, primarily in the academic community, it also has a large number of critics. The theory remains controversial, and investors continue attempting to outperform market averages with their stock selections.

Due to the empirical presence of market anomalies and information asymmetries, many practitioners do not believe that the efficient markets hypothesis holds in reality, except, perhaps, in the weak form.

What Is the Importance of the Efficient Market Hypothesis?

The efficient market hypothesis (EMH) is important because it implies that free markets are able to optimally allocate and distribute goods, services, capital, or labor (depending on what the market is for), without the need for central planning, oversight, or government authority. The EMH suggests that prices reflect all available information and represent an equilibrium between supply (sellers/producers) and demand (buyers/consumers). One important implication is that it is impossible to "beat the market" since there are no abnormal profit opportunities in an efficient market.

What Are the 3 Forms of Market Efficiency?

The EMH has three forms. The strong form assumes that all past and current information in a market, whether public or private, is accounted for in prices. The semi-strong form assumes that only publicly available information is incorporated into prices, while privately held information may not be. The weak form concedes that markets tend to be efficient but that anomalies can and do occur and can be exploited (which tends to remove the anomaly, restoring efficiency via arbitrage). In reality, only the weak form is thought to hold in most markets, if any.

How Would You Know If the Market Is Semi-Strong Form Efficient?

To test the semi-strong version of the EMH, one can check whether a stock's price gaps up or down when previously private news is released. For instance, a proposed merger or a dismal earnings result would be known by insiders but not by the public, so this information is not priced into the shares until it is made available. At that point, the stock may jump or slump, depending on the nature of the news, as investors and traders incorporate the new information.
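One rough way to quantify such a jump is to compare the log return on the announcement day with the typical size of the stock's earlier daily moves. The sketch below does this. The helper name, the use of the sample standard deviation of prior returns as the yardstick, and the toy prices are illustrative assumptions and not a standard event-study method. A fuller event study would also subtract the market's return on the same day, and that step is omitted here.

```python
import math
from statistics import stdev


def announcement_move(prices, event_index):
    """Return (log return on the news day, that return divided by the
    standard deviation of earlier daily log returns).

    Hypothetical helper: prices are daily closes, and prices[event_index]
    is the first close after the previously private news is released.
    """
    if event_index < 3:
        raise ValueError("need at least two daily returns before the event")
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    event_return = returns[event_index - 1]  # move from the last pre-news close
    earlier = returns[: event_index - 1]     # returns observed before the news
    return event_return, event_return / stdev(earlier)


# Toy data: small back-and-forth moves, then a 10% jump on the news.
move, z = announcement_move([100, 101, 100, 101, 100, 110], event_index=5)
```

In this toy series z is a little above 8. The move is therefore far outside the stock's usual daily range, and a gap of this kind is what the semi-strong test looks for.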

The efficient market hypothesis exists in degrees, but each degree argues that financial markets are already too efficient for investors to consistently beat them. The idea is that trading activity is so heavy that the resulting prices are as fair as they can be. The weak form of the theory is the most lenient and concedes that there are circumstances when fundamental analysis can help investors find value. The strong form is the least lenient in this regard, while the semi-strong form holds a middle ground between the two.



A procedure for testing the hypothesis of weak efficiency in financial markets: a Monte Carlo simulation

- Original Paper

- Open access

- Published: 31 March 2022

- Volume 31, pages 1289–1327 (2022)


- José A. Roldán-Casas (ORCID: orcid.org/0000-0002-1785-4147)

- Mª B. García-Moreno García (ORCID: orcid.org/0000-0002-7981-8846)


The weak form of the efficient market hypothesis is identified with the conditions established by different types of random walks (1–3) on the returns associated with the prices of a financial asset. The methods traditionally applied for testing weak efficiency in a financial market as stated by the random walk model test only some necessary, but not sufficient, condition of this model. Thus, a procedure is proposed to detect whether a return series associated with a given price index follows a random walk and, if so, of which type. The procedure combines methods that test only a necessary, but not sufficient, condition for the fulfilment of the random walk hypothesis with methods that directly test a particular type of random walk. The proposed procedure is evaluated by means of a Monte Carlo experiment, and the results show that it performs better (is more powerful) against linear-correlation-only alternatives when it starts from the Ljung–Box test. Against the random walk type 3 alternative, on the other hand, the procedure is more powerful when it starts from the BDS test.


1 Introduction

The hypothesis of financial market efficiency is an analytical approach aimed at explaining movements in the prices of financial assets over time and is based on the insight that the prices of such assets are determined by the rational behaviour of agents interacting in the market. This hypothesis states that stock prices reflect all the information available to agents when they are determined. Therefore, if the hypothesis holds, it would not be possible to anticipate price changes and formulate investment strategies to obtain substantial returns; that is, future market behaviour could not be predicted.

Validating the efficiency hypothesis in a given financial market is important for both investors and regulatory institutions. It provides criteria for assessing whether all agents in the market are on an equal footing in a “fair game”, where the expectations of success and failure are equivalent.

Although the theoretical origin of the efficiency hypothesis lies in the work of Bachelier in 1900, Samuelson laid its theoretical foundations in 1965. Fama, for his part, was the first to establish the concept of an efficient market. A short time later, the concept of the hypothesis of financial market efficiency emerged from the work of Roberts (1967), which also analysed efficiency from an informational perspective. This led to a classification of efficiency into three levels according to the increasing availability of information to agents: weak, semi-strong and strong. Thus, weak efficiency means that the information available to agents is restricted to the historical price series; semi-strong efficiency means that all public information is available to all agents; and strong efficiency means that the available information set includes the previously described information plus other private data, known as insider information.

The weak form of the efficiency hypothesis has been the benchmark for theoretical and empirical approaches throughout history. On the theoretical side, most contributions link the weak efficiency hypothesis to financial asset prices following a random walk (in form 1, 2 or 3) or a martingale. However, obtaining testable hypotheses from the martingale model requires imposing additional restrictions on the underlying probability distributions, and these restrictions lead to one of the forms of random walk. It therefore seems logical to adopt one of these forms as the pricing model.

Specifically, the types of random walk with which the weak efficiency hypothesis is identified are conditions imposed on the returns of a financial asset. These conditions are progressively relaxed from random walk 1 (the most restrictive) to random walk 3 (the least restrictive and therefore the most plausible in economic terms). This makes it possible to evaluate the degree of weak efficiency.

Numerous procedures have traditionally been used to test the weak efficiency of a financial market according to the random walk model. However, many of them test only some necessary, but not sufficient, condition of the model in any of its forms. This is the case, for example, of the so-called linear methods, which test only the absence of serial correlation that all three types of random walk require. Consequently, applying only one of these tests can lead to an incorrect conclusion. Other methods, by contrast, directly test a specific type of random walk.

By strategically combining both types of methods, we propose a procedure that detects whether a time series of financial returns follows a random walk and, if so, of which type. The objective is to reduce the effect of the above-mentioned limitation of some traditional methods when studying the weak efficiency hypothesis.

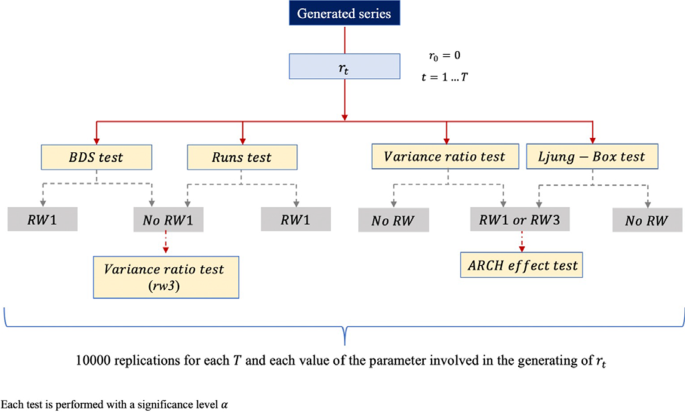

The paper is organized as follows. Section 2 describes how the efficiency hypothesis in a financial market is evaluated on the basis of the so-called joint-hypothesis problem (Fama 1991). Section 3 details the methods traditionally applied to test weak efficiency in the forms established by the random walk types. It then proposes a procedure to detect whether a return series associated with a given price index follows a random walk and, if so, of which type. This procedure combines methods that test only a necessary, but not sufficient, condition for the fulfilment of the random walk hypothesis with methods that directly test a particular type of random walk. In Sect. 4, the proposed procedure is evaluated by means of a Monte Carlo experiment and the results are presented. Finally, Sect. 5 contains the main conclusions of the study.

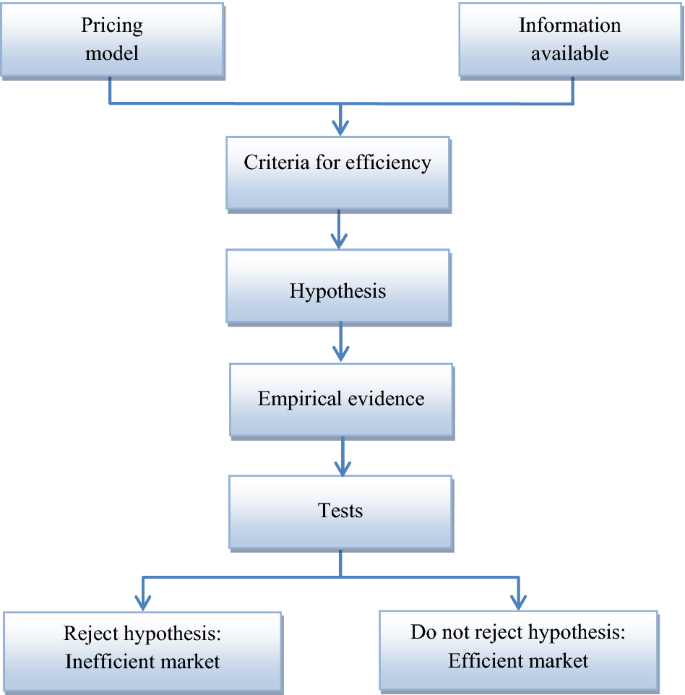

2 Evaluation of the efficiency hypothesis

To evaluate the efficiency of a financial market, Bailey (2005) proposes a procedure based on the joint-hypothesis problem of Fama (1991). In addition to the available information, this procedure considers the existence of an underlying model that fixes the prices of financial assets. Specifically, the model and the information set are used to establish the criterion that determines market efficiency, which yields a testable hypothesis. A method designed to test the efficiency hypothesis is then applied to check whether the collected data (observed prices) support this hypothesis, which would imply the efficiency or inefficiency of the market. Figure 1 shows this whole process schematically.

Figure 1. Scheme of the procedure to evaluate the efficiency of a market. Source: Bailey (2005).

Clearly, in this procedure, the efficiency of a market depends on the pricing model and the information set assumed. Thus, if the conclusion for a market is efficiency (inefficiency) given a pricing model and a specific information set, it is possible that inefficiency (efficiency) would be concluded if another model and/or different set are assumed.

Traditionally, the martingale and the random walk are assumed as the models that fix the price \(P_{t}\) of a financial asset, whose continuously compounded return, or log return, is given by the expression

\[ r_{t} = \ln P_{t} - \ln P_{t-1} . \]
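The Python sketch below is a minimal illustration of this definition. It turns a short, made-up price series into log returns, and the function name is hypothetical. It also shows the property that makes log returns convenient here: they add up over time, so their sum telescopes to \(\ln P_T - \ln P_0\).

```python
import math


def log_returns(prices):
    """Continuously compounded returns r_t = ln P_t - ln P_{t-1}."""
    return [math.log(p_t) - math.log(p_prev) for p_prev, p_t in zip(prices, prices[1:])]


prices = [100.0, 105.0, 99.75]
r = log_returns(prices)
# The returns telescope: their sum equals ln(P_T) - ln(P_0).
total = sum(r)
```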

2.1 Martingale

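Samuelson (1965) and Fama (1970), understanding the market as a fair game, approached efficiency from an informational perspective with the less restrictive model, the martingale model. In this case, if \(\Omega_{t}\) is the information set available at time t, the (log) price satisfies

\[ E\left( \left| \ln P_{t} \right| \right) < \infty , \qquad E\left( \ln P_{t+1} \mid \Omega_{t} \right) = \ln P_{t} . \qquad (1) \]

That is, in an efficient market, it is not possible to forecast the future using the available information, so the best forecast for the price of an asset at time \(t + 1\) is today's price. The second condition of expression (1) implies

\[ E\left( \ln P_{t+1} - \ln P_{t} \mid \Omega_{t} \right) = E\left( r_{t+1} \mid \Omega_{t} \right) = 0 , \]

which reflects the idea of a fair game and allows us to affirm that the return \(r_{t}\) constitutes a martingale difference sequence, i.e., it satisfies the conditions

\[ E\left( \left| r_{t} \right| \right) < \infty , \qquad E\left( r_{t+1} \mid \Omega_{t} \right) = 0 . \]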

2.2 Random walk

The random walk was initially formulated as

\[ \ln P_{t} = \ln P_{t-1} + r_{t} , \qquad (2) \]

where \(r_{t}\) is considered an independent and identically distributed process with mean 0 and constant variance. This assumes that price changes are unpredictable and random, a feature inherent to the first versions of the efficient market hypothesis. Nevertheless, several studies have shown that financial data are inconsistent with these conditions.

Campbell et al. (1997) adjusted the idea of random walks based on the formulation

\[ \ln P_{t} = \mu + \ln P_{t-1} + \varepsilon_{t} , \qquad (3) \]

where μ is a constant term. By establishing conditions on the dependence structure of the process \(\{ \varepsilon_{t} \}\) (which the authors call increments), they distinguish three types of random walk: 1, 2 and 3. In this case, the change in the (log) price, or return, is

\[ r_{t} = \mu + \varepsilon_{t} , \]

so the conditions imposed on the increments \(\{ \varepsilon_{t} \}\) carry over directly to the returns \(\{ r_{t} \}\).

Random walk 1 (RW1): IID increments/returns

In this first type, \(\varepsilon_{t}\) is an independent and identically distributed process with mean 0 and variance \(\sigma^{2}\), or \(\varepsilon_{t} \sim \text{IID}(0, \sigma^{2})\) in abbreviated form, which implies \(r_{t} \sim \text{IID}(\mu, \sigma^{2})\). Thus, formulation (2) is a particular case of this type of random walk for \(\mu = 0\). Under these conditions, the constant term μ is the expected price change or drift. If, in addition, normality of \(\varepsilon_{t}\) is assumed, then (3) is equivalent to arithmetic Brownian motion.

In this case, the independence of \(\varepsilon_{t}\) implies that random walk 1 is also a fair game but in a much stronger sense than martingale, since the mentioned independence implies not only that increments/returns are uncorrelated but also that any nonlinear functions of them are uncorrelated.
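The sketch below makes RW1 concrete by simulating log prices from (3) with IID increments. Drawing the increments from a normal distribution is an assumption made for convenience, since RW1 only requires IID increments. Under that assumption the simulated log price is a discretised arithmetic Brownian motion. The function name and the default parameters are illustrative.

```python
import math
import random


def simulate_rw1(T, mu=0.0, sigma=1.0, p0=100.0, seed=None):
    """Simulate ln P_t = mu + ln P_{t-1} + eps_t with eps_t ~ IID N(0, sigma^2).

    Returns the log-price path (length T + 1) and the returns r_t = mu + eps_t.
    """
    rng = random.Random(seed)
    log_p = [math.log(p0)]
    for _ in range(T):
        log_p.append(mu + log_p[-1] + rng.gauss(0.0, sigma))
    returns = [b - a for a, b in zip(log_p, log_p[1:])]
    return log_p, returns


log_p, r = simulate_rw1(5000, mu=0.1, seed=1)
# The sample mean of the returns estimates the drift mu.
drift_hat = sum(r) / len(r)
```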

Random walk 2 (RW2): independent increments/returns

For this type of random walk, \(\varepsilon_{t}\) (and by extension \(r_{t}\) ) is an independent but not identically distributed process (INID). RW2 contains RW1 as a particular case.

This version of the random walk accommodates more general price generation processes and, at the same time, is more in line with the reality of the market since, for example, it allows for unconditional heteroskedasticity in \(r_{t}\) , thus taking into account the temporal dependence of volatility that is characteristic of financial series.
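The next sketch generates returns that are independent but not identically distributed. Each draw is independent of the others, but the standard deviation follows a deterministic schedule. The two-regime schedule (1 in the first half of the sample, 3 in the second) is a hypothetical choice that only serves to illustrate unconditional heteroskedasticity.

```python
import random


def simulate_rw2(T, mu=0.0, seed=None):
    """Independent returns r_t = mu + sigma_t * z_t with z_t ~ N(0, 1) and a
    deterministic (hypothetical) volatility schedule sigma_t."""
    rng = random.Random(seed)
    returns = []
    for t in range(T):
        sigma_t = 1.0 if t < T // 2 else 3.0  # variance changes over time
        returns.append(mu + sigma_t * rng.gauss(0.0, 1.0))
    return returns


r = simulate_rw2(4000, seed=2)
first, second = r[:2000], r[2000:]
var_first = sum(x * x for x in first) / len(first)     # close to 1 (mu = 0)
var_second = sum(x * x for x in second) / len(second)  # close to 9
```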

Random walk 3 (RW3): uncorrelated increments/returns

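Under this denomination, \(\varepsilon_{t}\) (and therefore \(r_{t}\)) is a process that need not be independent or identically distributed but is uncorrelated; that is, cases are considered in which

\[ \operatorname{Cov}\left( \varepsilon_{t}, \varepsilon_{t-k} \right) = 0 \ \text{for all } k \neq 0, \quad \text{but} \quad \operatorname{Cov}\left( \varepsilon_{t}^{2}, \varepsilon_{t-k}^{2} \right) \neq 0 \ \text{for some } k \neq 0, \]

which means there may be dependence but no correlation.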

This is the weakest form of the random walk hypothesis and contains RW1 and RW2 as special cases.
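A standard way to produce increments that are uncorrelated but dependent, as RW3 allows, is an ARCH(1) process, in which the conditional variance depends on the previous squared increment. The sketch below simulates such a process with illustrative parameter values. It then compares the lag-1 sample autocorrelation of the increments with that of their squares. The first should be close to zero. The second should be clearly positive, since its population value for ARCH(1) equals the ARCH coefficient, here 0.3.

```python
import random


def simulate_arch1(T, omega=0.5, alpha=0.3, seed=None):
    """ARCH(1) increments: eps_t = sigma_t * z_t, sigma_t^2 = omega + alpha * eps_{t-1}^2."""
    rng = random.Random(seed)
    eps, prev = [], 0.0
    for _ in range(T):
        sigma2 = omega + alpha * prev ** 2  # conditional variance uses the past
        prev = rng.gauss(0.0, 1.0) * sigma2 ** 0.5
        eps.append(prev)
    return eps


def autocorr(x, k):
    """Sample autocorrelation of the sequence x at lag k."""
    n, m = len(x), sum(x) / len(x)
    num = sum((x[t] - m) * (x[t - k] - m) for t in range(k, n))
    den = sum((v - m) ** 2 for v in x)
    return num / den


eps = simulate_arch1(20000, seed=3)
rho_levels = autocorr(eps, 1)                    # near 0: no correlation
rho_squares = autocorr([e * e for e in eps], 1)  # positive: dependence
```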

As previously mentioned, financial data tend to reject random walk 1, mainly because the variance of \(r_{t}\) is not constant. In contrast, random walks 2 and 3 are more consistent with financial reality since they allow for heteroskedasticity (conditional or unconditional) in \(r_{t}\). Consequently, we could say that RW2 is the type of random walk closest to the martingale [in fact, RW1 and RW2 satisfy the conditions of the martingale, but in a stronger sense (Bailey 2005)].

2.3 Martingale vs. random walk

The random walk hypothesis, in its three versions, and the martingale hypothesis are captured by an expression that considers the kind of dependence that can exist between the returns r of a given asset at two times, t and \(t + k\):

\[ \operatorname{Cov}\left[ f\left( r_{t} \right), g\left( r_{t+k} \right) \right] = 0 , \qquad k \neq 0 , \qquad (4) \]

where, in principle, \(f( \cdot )\) and \(g( \cdot )\) are two arbitrary functions, so that (4) may be interpreted as an orthogonality condition. For appropriately chosen \(f( \cdot )\) and \(g( \cdot )\), all versions of the random walk hypothesis and the martingale hypothesis are captured by (4). Specifically,

If condition (4) is satisfied only when \(f( \cdot )\) and \(g( \cdot )\) are linear functions, then the returns are serially uncorrelated but not independent, which is identified with RW3. In this context, the linear projection of \(r_{t + k}\) onto the set of its past values \(\Omega_{t}\) satisfies

\[ \operatorname{Proj}\left( r_{t+k} \mid \Omega_{t} \right) = \mu . \]

If condition (4) is satisfied only when \(g( \cdot )\) is a linear function but \(f( \cdot )\) is unrestricted, then the returns are uncorrelated with any function of their past values, which is equivalent to the martingale hypothesis. In this case,

\[ E\left( r_{t+k} \mid \Omega_{t} \right) = \mu . \]

If condition (4) holds for any \(f( \cdot )\) and \(g( \cdot )\), then returns are independent, which corresponds to RW1 and RW2. In this case,

\[ \text{d.f.}\left( r_{t+k} \mid \Omega_{t} \right) = \text{d.f.}\left( r_{t+k} \right) , \]

where d.f. denotes the probability density function.
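Condition (4) has a direct sample analogue, obtained by replacing the covariance with its sample counterpart for the chosen f and g. The sketch below computes it. It is a hypothetical helper and not one of the formal tests discussed in the paper. With f and g both the identity, it is the lag-k sample autocovariance behind the linear (RW3) case. With f(x) = g(x) = x², it measures dependence in squared returns, which RW3 permits but the independence of RW1 and RW2 rules out.

```python
def sample_orthogonality(r, f, g, k):
    """Sample analogue of Cov[f(r_t), g(r_{t+k})] for a lag k >= 1."""
    x = [f(v) for v in r[:-k]]  # f(r_t)
    y = [g(v) for v in r[k:]]   # g(r_{t+k})
    mx, my = sum(x) / len(x), sum(y) / len(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)


def identity(v):
    return v


def square(v):
    return v * v


r = [1.0, 2.0, 3.0, 4.0]
linear_case = sample_orthogonality(r, identity, identity, 1)  # 2/3
squared_case = sample_orthogonality(r, square, square, 1)     # 146/9
```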

Table 1 summarizes the hypotheses derived from expression (4).

In practice, obtaining testable hypotheses from the martingale model usually requires imposing additional restrictions on the underlying probability distributions, and these restrictions result in the conditions of one of the random walk versions (Bailey 2005, pp. 59–60). It is therefore common to assume the random walk as the pricing model.

Therefore, if the available information set is the historical price series and the pricing model assumed is the random walk, weak efficiency is identified with some types of random walks.

3 Evaluation of the weak efficiency

3.1 Traditional methods

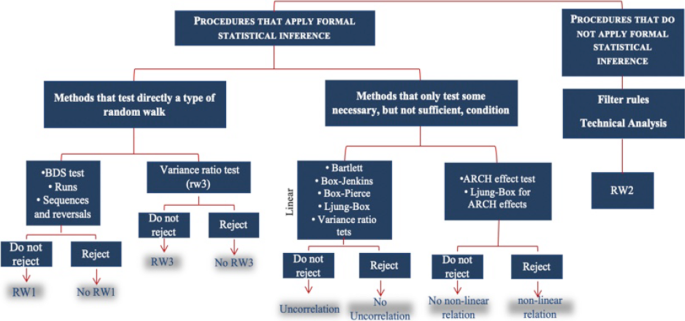

The methods traditionally used to test the weak form of efficiency, as established by some of the random walk types, are classified into two groups depending on whether they make use of formal statistical inference.

RW2 is analysed with methods that do not use formal inference techniques (filter rules and technical analysis) because this type of random walk requires the return series to be INID. In this case, it would be very complicated to test for independence without assuming identical distributions, particularly in a time series context, since the sampling distributions of the statistics needed for the corresponding test could not be obtained (Campbell et al. 1997, p. 41).

On the other hand, the methods that apply formal inference techniques for the analysis can be classified into two groups according to whether they allow direct testing of a type of random walk or only some necessary, but not sufficient, condition for its fulfilment.

The methods of the second group include the Bartlett test, tests based on the Box–Jenkins methodology, the Box–Pierce test, the Ljung–Box test and the variance ratio test. These methods test only the uncorrelation condition on the return series, which is necessary for any type of random walk (they are also called linear methods). These tests do not detect non-linear relationships that, if present, would make the series dependent. Rejection of the null hypothesis therefore implies that the series is correlated and, consequently, that it does not follow any type of random walk.
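For reference, the Ljung–Box statistic for the first m sample autocorrelations \(\hat\rho_k\) of a series of length T is \(Q = T(T+2)\sum_{k=1}^{m} \hat\rho_k^2/(T-k)\). Under the null of no serial correlation, Q is asymptotically \(\chi^2_m\). The pure-Python sketch below computes Q and its p-value. The chi-square tail uses the standard series and continued-fraction expansions of the regularized incomplete gamma function, in the form popularised by Numerical Recipes. The function names are illustrative, and in practice a statistics library would normally be used instead.

```python
import math


def chi2_sf(x, df):
    """P(X > x) for X ~ chi-square(df), i.e. the regularized upper incomplete gamma Q(df/2, x/2)."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    log_prefactor = -z + a * math.log(z) - math.lgamma(a)
    if z < a + 1.0:
        # Series for the lower incomplete gamma P(a, z); return 1 - P.
        term = total = 1.0 / a
        n = 1
        while abs(term) > 1e-15 * abs(total):
            term *= z / (a + n)
            total += term
            n += 1
        return max(0.0, 1.0 - total * math.exp(log_prefactor))
    # Continued fraction for Q(a, z), evaluated with the modified Lentz method.
    tiny = 1e-300
    b = z + 1.0 - a
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return math.exp(log_prefactor) * h


def ljung_box(r, m):
    """Ljung-Box Q over lags 1..m and its asymptotic chi-square(m) p-value."""
    T = len(r)
    mean = sum(r) / T
    dev = [v - mean for v in r]
    denom = sum(e * e for e in dev)
    q = 0.0
    for k in range(1, m + 1):
        rho_k = sum(dev[t] * dev[t - k] for t in range(k, T)) / denom
        q += rho_k ** 2 / (T - k)
    q *= T * (T + 2)
    return q, chi2_sf(q, m)


q_stat, p_value = ljung_box([1.0, -1.0, 1.0, -1.0], m=1)  # Q = 4.5
```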

On the other hand, for tests that try to detect ARCH effects, rejection of the null hypothesis involves only the acceptance of non-linear relationships, which does not necessarily imply that the series is uncorrelated.
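As an illustration of an ARCH effect test, the sketch below computes Engle's Lagrange multiplier statistic. The squared demeaned returns are regressed on a constant and q of their own lags. The statistic n·R² (where n is the number of regression observations) is then compared with a \(\chi^2_q\) critical value, which is about 3.84 for q = 1 and 9.49 for q = 4 at the 5% level. The choice of Engle's LM form, the helper names and the small Gaussian-elimination solver are assumptions of this sketch, not details taken from the paper.

```python
def _solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(M[i][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for i in range(col + 1, n):
            factor = M[i][col] / M[col][col]
            for j in range(col, n + 1):
                M[i][j] -= factor * M[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def arch_lm(r, q=1):
    """Engle's LM statistic n * R^2 from regressing e_t^2 on 1, e_{t-1}^2, ..., e_{t-q}^2,
    where e_t are the demeaned returns and n = len(r) - q."""
    mean = sum(r) / len(r)
    e2 = [(v - mean) ** 2 for v in r]
    y = e2[q:]
    X = [[1.0] + [e2[t - j] for j in range(1, q + 1)] for t in range(q, len(e2))]
    k = q + 1
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yt for row, yt in zip(X, y)) for i in range(k)]
    beta = _solve(xtx, xty)
    fitted = [sum(bi * xi for bi, xi in zip(beta, row)) for row in X]
    y_bar = sum(y) / len(y)
    ss_res = sum((yt - ft) ** 2 for yt, ft in zip(y, fitted))
    ss_tot = sum((yt - y_bar) ** 2 for yt in y)
    return len(y) * (1.0 - ss_res / ss_tot)


r = [1.0, -1.0, 2.0, -2.0, 1.0, -1.0, 2.0, -2.0]
lm_1 = arch_lm(r, q=1)      # 7 * R^2 with R^2 = 1/36
lm_2 = arch_lm(r * 3, q=2)  # squares follow their lags exactly, so R^2 = 1
```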

Other methods allow direct determination of whether the return series follows a specific type of random walk. These procedures also take into account the possibility of non-linear relationships in the series. They do so either because the null hypothesis itself considers such relationships or because the methods have power against alternatives that capture them (they are, therefore, non-linear methods). They include methods that test random walk type 1 (the BDS test, the runs test and the sequences and reversals test) and one that tests for a type 3 random walk (the variance ratio test that accounts for the heteroskedasticity of the series).
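The runs test mentioned above can be sketched in a few lines. The version below classifies each return as above or below the sample mean, dropping values equal to it, which is a common but not universal convention. It then compares the number of runs with its expectation under randomness using the usual normal approximation. The function name is illustrative.

```python
import math


def runs_test(r):
    """Wald-Wolfowitz runs test on whether each r_t lies above or below the sample mean.

    Returns (number of runs, z statistic, two-sided normal-approximation p-value).
    """
    mean = sum(r) / len(r)
    above = [v > mean for v in r if v != mean]  # values equal to the mean are dropped
    n1 = sum(above)
    n2 = len(above) - n1
    n = n1 + n2
    if n1 == 0 or n2 == 0:
        raise ValueError("need observations on both sides of the mean")
    runs = 1 + sum(1 for a, b in zip(above, above[1:]) if a != b)
    expected = 2.0 * n1 * n2 / n + 1.0
    variance = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n ** 2 * (n - 1))
    z = (runs - expected) / math.sqrt(variance)
    return runs, z, math.erfc(abs(z) / math.sqrt(2.0))


alternating = [1.0, -1.0] * 10        # too many runs (negative dependence)
clustered = [1.0] * 10 + [-1.0] * 10  # too few runs (positive dependence)
runs_alt, z_alt, p_alt = runs_test(alternating)
runs_clu, z_clu, p_clu = runs_test(clustered)
```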

Figure 2 shows the classification established in this section for the different methods that are traditionally used to test the hypothesis of weak efficiency.

Figure 2. Methods traditionally used to test the random walk hypothesis (weak efficiency). Source: own elaboration.

The financial literature shows that the methods described above have traditionally been applied to test the weak efficiency hypothesis in financial markets.

Correlation tests to determine the efficiency of a market were first used when Fama (1965) and Samuelson (1965) laid the foundations of efficient market theory. From these beginnings, the works developed by Moore (1964), Theil and Leenders (1965), Fama (1965) and Fisher (1966), among others, stand out.

These tests were almost the only tool for analysing the efficiency of a market until the 1970s, when seasonal effects and calendar anomalies became relevant for the analysis. New methodologies incorporating these effects then emerged, such as the seasonality tests applied by Rozeff and Kinney (1976), French (1980) and Gultekin and Gultekin (1983).

In the 1990s, studies that analysed the efficiency hypothesis in financial markets using so-called traditional methods began to appear. This practice has continued to the present day, as evidenced by the most prominent empirical works on financial efficiency in recent years.

Articles using technical analysis to test for the efficiency of a financial market include Potvin et al. (2004), Marshall et al. (2006), Chen et al. (2009), Alexeev and Tapon (2011), Shynkevich (2012), Ho et al. (2012), Leković (2018), Picasso et al. (2019) and Nti et al. (2020).

On the other hand, among the studies that apply methods that test only a necessary, but not sufficient, condition of the random walk hypothesis, the most numerous are those that use correlation tests. In this sense, we can cite the studies developed by Buguk and Brorsen (2003), DePenya and Gil-Alana (2007), Lim et al. (2008b), Álvarez-Ramírez and Escarela-Pérez (2010), Khan and Vieito (2012), Ryaly et al. (2014), Juana (2017), Rossi and Gunardi (2018) and Stoian and Iorgulescu (2020). Research applying the variance ratio test (also very numerous) includes Hasan et al. (2003), Hoque et al. (2007), Righi and Ceretta (2013), Kumar (2018), Omane-Adjepong et al. (2019) and Sánchez-Granero et al. (2020). Finally, ARCH effect tests have been used in several papers, such as Appiah-Kusi and Menyah (2003), Cheong et al. (2007), Jayasinghe and Tsui (2008), Lim et al. (2008a), Chuang et al. (2012), Rossi and Gunardi (2018) and Khanh and Dat (2020).

Regarding methods that directly test a type of random walk, the runs test has been used in works such as Dicle et al. (2010), Jamaani and Roca (2015), Leković (2018), Chu et al. (2019) and Tiwari et al. (2019). Meanwhile, the application of the BDS test can be found in studies such as Yao and Rahaman (2018), Abdullah et al. (2020), Kołatka (2020) and Adaramola and Obisesan (2021).

Therefore, the proposal of a procedure (Sect. 3.2) that reduces the limitations of traditional methods would be a novel contribution to the financial field as far as the analysis of the weak efficiency hypothesis is concerned. Moreover, it would be more accurate than the traditional methods in determining whether a return series follows a random walk.

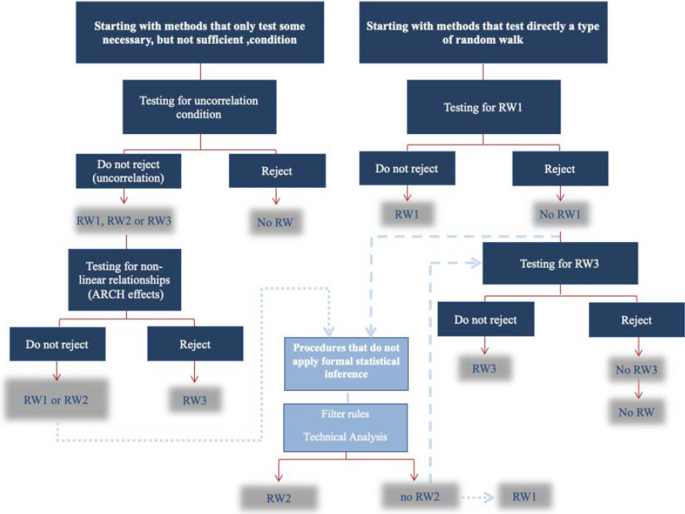

3.2 Proposed procedure

By strategically combining the methods analysed in the previous section, we propose a procedure to test the random walk hypothesis that can be started either from a method that tests only a necessary, but not sufficient, condition or from one that directly tests a specific type of random walk (1, 2 or 3).

On the one hand, if the procedure starts with a method from the first group and the return series turns out to be correlated, the series does not follow any type of random walk. Otherwise (no correlation), an ARCH effect test is recommended to determine the type of random walk. If ARCH effects are detected, which implies the existence of non-linear relationships, it should be concluded that the series is RW3. Otherwise, the series is RW1 or RW2, and a non-formal statistical inference technique can be applied to test for type 2. Finally, if the RW2 hypothesis is rejected, the series is necessarily RW1.

On the other hand, among the methods that directly test a type of random walk, it is proposed to start the procedure with one that tests RW1. If the null hypothesis is rejected with this method, it cannot be ruled out that the series is RW2, RW3 or not a random walk at all. Before concluding that the series is not a random walk, it is suggested to check first for type 2 by applying a non-formal statistical inference technique. If the RW2 hypothesis cannot be accepted, RW3 is then tested, and rejection of the RW3 hypothesis implies that the series is not a random walk.
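The two branches just described can be written as small decision functions. This is a minimal sketch of one reading of the text. Each argument is the (hypothetical) boolean outcome of the corresponding test at the chosen significance level, and the tests themselves are not implemented here. The Monte Carlo design in Sect. 4 adds an ARCH effect test after a non-rejection of RW1, and this sketch leaves that step out.

```python
def from_linear_test(correlated: bool, arch_effects: bool, rw2_accepted: bool) -> str:
    """Branch that starts from a test of the uncorrelation condition only
    (e.g. Ljung-Box or variance ratio). Arguments are test outcomes."""
    if correlated:
        return "not RW"  # correlation rules out every type of random walk
    if arch_effects:
        return "RW3"     # non-linear dependence without correlation
    # Remaining candidates are RW1 or RW2; a non-formal check
    # (e.g. filter rules) decides between them.
    return "RW2" if rw2_accepted else "RW1"


def from_rw1_test(rw1_rejected: bool, rw2_accepted: bool, rw3_rejected: bool) -> str:
    """Branch that starts from a direct test of RW1 (e.g. BDS or runs test)."""
    if not rw1_rejected:
        return "RW1"
    if rw2_accepted:     # non-formal check for type 2
        return "RW2"
    # RW3 is then tested, e.g. with a heteroskedasticity-robust variance ratio test.
    return "not RW" if rw3_rejected else "RW3"


decision = from_linear_test(correlated=False, arch_effects=True, rw2_accepted=False)  # "RW3"
```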

Figure 3 schematically shows the described procedure.

Figure 3. Procedure for testing the random walk hypothesis. Source: own elaboration.

The acceptance of market inefficiency (i.e., that the return series is not RW) occurs when the price series analysed shows non-randomness, a structure that can be identified, long systematic deviations from its intrinsic value, etc. (even the RW3 hypothesis implies dependence but no correlation). This indicates a certain degree of predictability, at least in the short run, i.e., it is possible to forecast both the asset returns and the volatility of the market using past price changes. These forecasts are constructed on the basis of models reflecting the behaviour of financial asset prices.

Among the models that allow linear structures to be captured, the ARIMA and ARIMAX models stand out. Moreover, ARCH family models are used for modelling and forecasting the conditional volatility of asset returns. On the other hand, when the return series presents non-linear relationships, it is common to use non-parametric and non-linear models, including those based on neural networks and machine learning techniques. Finally, hybrid models (a combination of two or more of the procedures described above) consider all the typical characteristics of financial series.

4 Monte Carlo experiment

The procedure introduced in the previous section is evaluated by means of a Monte Carlo experiment. When the procedure starts from methods that test only some necessary, but not sufficient, condition of the random walk hypothesis, the variance ratio test proposed by Lo and MacKinlay (1988) and the Ljung–Box test (1978) are considered. When it starts from methods that directly test this hypothesis, the BDS test and the runs test are considered. If the procedure requires an ARCH effect test to decide between random walks 1 and 3, ARCH models up to order 4 are used.
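The idea behind the variance ratio test can be sketched as follows. Under a random walk, the variance of q-period returns is q times the variance of one-period returns, so VR(q) should be close to 1. The sketch below uses overlapping q-period sums and the asymptotic variances of Lo and MacKinlay (1988) for the homoskedastic statistic z and the heteroskedasticity-consistent statistic z*. It omits their small-sample bias corrections, so it is an approximation rather than a faithful reimplementation. The function name is illustrative.

```python
import math


def variance_ratio(r, q):
    """Variance ratio VR(q) with overlapping q-period sums (q >= 2), plus the
    Lo-MacKinlay z statistic under IID increments and the
    heteroskedasticity-consistent z*. Small-sample bias corrections omitted."""
    if q < 2:
        raise ValueError("q must be at least 2")
    T = len(r)
    mu = sum(r) / T
    dev = [v - mu for v in r]
    ss = sum(e * e for e in dev)
    var_1 = ss / T
    # Overlapping q-period returns; their variance divided by q should match var_1.
    sums = [sum(dev[t - q + 1 : t + 1]) for t in range(q - 1, T)]
    var_q = sum(s * s for s in sums) / (len(sums) * q)
    vr = var_q / var_1
    # Asymptotic variance of VR(q) - 1 under IID increments.
    phi = 2.0 * (2 * q - 1) * (q - 1) / (3.0 * q * T)
    # Heteroskedasticity-consistent asymptotic variance.
    theta = 0.0
    for j in range(1, q):
        delta_j = T * sum(dev[t] ** 2 * dev[t - j] ** 2 for t in range(j, T)) / ss ** 2
        theta += (2.0 * (q - j) / q) ** 2 * delta_j
    theta /= T
    return vr, (vr - 1.0) / math.sqrt(phi), (vr - 1.0) / math.sqrt(theta)


# Perfectly alternating returns: two-period sums vanish, so VR(2) = 0.
vr, z, z_star = variance_ratio([1.0, -1.0] * 50, q=2)
```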

To conduct this analysis, return series are generated from two different models because the objective is twofold: to evaluate the performance of the procedure in testing the random walk 1 hypothesis against the linear correlation alternative, on the one hand, and against the random walk 3 alternative, on the other.

Thus, the BDS, runs, variance ratio and Ljung–Box tests are applied to each generated return series. Then, if the RW1 hypothesis is rejected by the first two tests, the variance ratio test is applied to determine whether the series is at least RW3. On the other hand, if the random walk hypothesis is not rejected with the first two tests, an ARCH effect test is applied to discern between RW1 and RW3. The process is replicated 10,000 times for each sample size T and each value of the parameter involved in the generation of the series (see the whole process in Fig. 4).

Figure 4. Iteration of the simulation process.

Nominal size

Before analysing the Monte Carlo powers of the procedure initiated from the different tests indicated, the corresponding nominal size is estimated; that is, the maximum probability of falsely rejecting the null hypothesis of random walk 1 is calculated in each case. Some ways of executing the proposed procedure involve applying tests sequentially to reach a decision. The nominal size in each case should therefore not, in general, be expected to coincide with the significance level α fixed for each individual test.

To estimate the mentioned nominal size, return series that follow a random walk 1 are generated

where \(\varepsilon_{t} \sim iid(0,1)\). Specifically, 10,000 series of size T are generated. The tests required by the specific way in which the procedure is applied are then performed on each series independently (not sequentially) at significance level α. Repeating this process allows us to determine, for each T, the number of acceptances and rejections of the null hypothesis (random walk 1) produced by each test. The nominal size of the procedure in each case can then be estimated as the total number of rejections of the null hypothesis divided by the total number of replications (10,000 in this case).
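The size calculation just described (rejections divided by replications) can be sketched as a small Monte Carlo loop. To keep the example self-contained, it does not reproduce the paper's tests. As a stand-in, it uses a simple asymptotic test of the lag-k autocorrelation (\(\sqrt{T}\,\hat\rho_k\) compared with a standard normal) together with a hypothetical decision rule that rejects RW1 when any of the supplied tests rejects. The usage example reduces the replications from 10,000 to 2,000 to keep it fast.

```python
import math
import random


def autocorr_pvalue(r, k):
    """Two-sided p-value of sqrt(T) * rho_k against N(0, 1) (asymptotic, IID null)."""
    T = len(r)
    m = sum(r) / T
    dev = [v - m for v in r]
    rho = sum(dev[t] * dev[t - k] for t in range(k, T)) / sum(e * e for e in dev)
    return math.erfc(abs(math.sqrt(T) * rho) / math.sqrt(2.0))


def lag_test(k):
    """Hypothetical stand-in test: p-value for the lag-k autocorrelation."""
    return lambda r: autocorr_pvalue(r, k)


def estimate_size(tests, T, alpha, reps=10_000, seed=0):
    """Rejections / replications over RW1 series with IID N(0, 1) returns,
    rejecting when at least one test has a p-value below alpha (assumed rule)."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        r = [rng.gauss(0.0, 1.0) for _ in range(T)]
        if any(test(r) < alpha for test in tests):
            rejections += 1
    return rejections / reps


single = estimate_size([lag_test(1)], T=100, alpha=0.05, reps=2000)
both = estimate_size([lag_test(1), lag_test(2)], T=100, alpha=0.05, reps=2000)
```

With the same seed, both runs see the same series, so the two-test rule rejects at least as often as the single test. Its estimate should sit noticeably above α, which illustrates how combining tests moves the size away from the individual significance level.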

The process described in the previous paragraph was performed for the sample sizes T = 25, 50, 100, 250, 500 and 1000 and significance levels α = 0.02, 0.05 and 0.1 [application of the process in Fig. 4 for expression (5)]. For a given value of T, the results (Table 2) indicate the (estimated) theoretical size of the procedure when a significance level α is set in the individual tests required by the procedure initiated from a specific method. For example, suppose that for \(T = 100\) the researcher sets \(\alpha = 0.05\) in the individual tests and wishes to apply the procedure initiated from the variance ratio test. The researcher will then be working with an (estimated) theoretical size of 0.0975.
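A rough cross-check is possible here. It is a benchmark under an independence assumption and not the authors' derivation. Suppose RW1 were rejected whenever at least one of two independent tests, each run at level α, rejected. The size of that rule would be

\[ 1 - (1 - \alpha)^{2} = 2\alpha - \alpha^{2}, \qquad \alpha = 0.05: \quad 1 - 0.95^{2} = 1 - 0.9025 = 0.0975 . \]

This coincides with the 0.0975 quoted for the variance ratio start at \(T = 100\), and for small α it is close to \(2\alpha\). The tests in the procedure are not independent in general, so agreement with this benchmark should not be expected for every T or α.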

Among the methods that directly test the hypothesis of random walk 1, the runs test gives a much better estimated nominal size, since it practically coincides with the significance level α fixed in the individual tests for every sample size T. By contrast, clear size distortions (estimated values far from α) appear when the procedure is initiated from the BDS test, and they depend strongly on T: the greatest distortions occur for small samples and shrink as T increases (at \(T = 1000\), the estimated nominal sizes for the three values of α are 0.0566, 0.133244 and 0.2214, respectively, i.e., still more than twice α).

The variance ratio test and the Ljung–Box test do not directly test the random walk 1 hypothesis, so estimating the nominal size of the procedure initiated from either of them requires applying tests sequentially. The results in Table 2 for these two cases are therefore as expected: for each T, the estimated nominal sizes exceed the significance level α. Within this context of size distortion, the variance ratio test gives the best results, with estimated values very close to α for small samples (\(T = 25\) and 50) that increase with T (at \(T = 1000\), for each value of α, the nominal size is approximately double that at \(T = 25\), i.e., approximately \(2\alpha\)). In the Ljung–Box case, where the distortion is greater, the sample size hardly influences the estimated nominal size: irrespective of T, it remains at approximately 10%, 21% and 37% for α = 0.02, 0.05 and 0.1, respectively.
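A back-of-the-envelope bound shows why sequential application pushes the size above α. Assume, purely as a simplification that is not taken from Fig. 4, that the procedure rejects random walk 1 whenever either of two tests, each run at level α, rejects, and that the two test statistics are independent under the null:

\[
\begin{aligned}
P(\text{reject random walk 1} \mid \text{random walk 1 true}) &= 1 - (1 - \alpha)^{2} = 2\alpha - \alpha^{2}, \\
\alpha = 0.05 \;&\Rightarrow\; 1 - 0.95^{2} = 0.0975 .
\end{aligned}
\]

This coincides numerically with the 0.0975 quoted above for the variance ratio case at \(T = 100\) and is close to the "approximately \(2\alpha\)" pattern, but the Table 2 values change with T and real test statistics are generally dependent, so the bound is only a heuristic.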

Empirical size and Monte Carlo power

(b1) The performance of the procedure in testing the random walk 1 hypothesis against alternatives involving only linear correlation (among the variables of the return series generating process) is analysed using the model

\[ r_{t} = \phi_{1} r_{t-1} + \varepsilon_{t} \qquad (6) \]

with \(r_{0} = 0\) and \(\varepsilon_{t} \sim iid(0,1)\). Using (6), ten thousand samples of sizes T = 25, 50, 100, 250, 500 and 1000 of the series \(r_{t}\) are generated for each value of the parameter \(\phi_{1}\) considered: −0.9, −0.75, −0.5, −0.25, −0.1, 0, 0.1, 0.25, 0.5, 0.75 and 0.9. The model thus yields return series that follow a random walk 1 (the particular case \(\phi_{1} = 0\)) and, as alternatives, series with a first-order autoregressive structure (\(\phi_{1} \ne 0\)), i.e., series generated by a process whose variables are correlated. Consequently, rejecting the null hypothesis implies some degree of predictability: by modelling this autoregressive structure with an ARMA model, price changes can be predicted from historical price changes.
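The data-generating step can be sketched as below, assuming Model (6) is the first-order autoregression \(r_{t} = \phi_{1} r_{t-1} + \varepsilon_{t}\) described in the text, started at \(r_{0} = 0\). Gaussian innovations are our assumption (the text only requires \(iid(0,1)\)), and the helper names are hypothetical. The lag-1 sample autocorrelation is printed to show that each series carries the linear dependence set by \(\phi_{1}\).

```python
import random


def simulate_ar1_returns(phi1, T, rng):
    """Generate r_1..r_T from r_t = phi1 * r_{t-1} + eps_t with r_0 = 0.

    eps_t is drawn iid N(0, 1); the Gaussian choice is an assumption.
    """
    r_prev = 0.0  # r_0 = 0
    series = []
    for _ in range(T):
        r_prev = phi1 * r_prev + rng.gauss(0.0, 1.0)
        series.append(r_prev)
    return series


def lag1_autocorrelation(x):
    """Sample first-order autocorrelation of x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    return sum(dev[t] * dev[t - 1] for t in range(1, n)) / sum(d * d for d in dev)


PHI_GRID = [-0.9, -0.75, -0.5, -0.25, -0.1, 0.0, 0.1, 0.25, 0.5, 0.75, 0.9]
rng = random.Random(1)
for phi1 in PHI_GRID:
    r = simulate_ar1_returns(phi1, T=1000, rng=rng)
    print(f"phi1={phi1:+.2f}  lag-1 autocorrelation={lag1_autocorrelation(r):+.3f}")
```

For large T the printed autocorrelations should track \(\phi_{1}\), and only the \(\phi_{1} = 0\) series satisfies the null hypothesis.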

The procedure, starting from each of the tests considered (BDS, runs, Ljung–Box and variance ratio), was applied at a 5% significance level to the series generated by each combination of T and \(\phi_{1}\) [application of the process in Fig. 4 for expression (6)]. We then counted how many times each of the decisions contemplated by the two ways of applying the procedure (depending on whether the starting method does or does not directly test the random walk hypothesis) was made.

From these results, we calculate, for each sample size T and each value of \(\phi_{1}\), the percentage of rejections of the null hypothesis (random walk 1) when starting from each of the four tests considered. Since \(\phi_{1} = 0\) means that the null hypothesis is true, in this particular case the calculations give the empirical probability of committing a type I error for each of the four versions of the procedure, i.e., the empirical size. When \(\phi_{1} \ne 0\), the null hypothesis is false, so the same calculations give the Monte Carlo power of each version of the procedure.
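The sketch below shows that empirical size and Monte Carlo power are the same rejection frequency, read at \(\phi_{1} = 0\) and at \(\phi_{1} \ne 0\) respectively. It is hedged in two ways: it applies a single Ljung–Box test rather than the full procedure of Fig. 4, and the choice of 4 lags, 500 replications and Gaussian innovations is ours, not the authors'. The chi-square tail uses the closed form available for an even number of degrees of freedom; all function names are hypothetical.

```python
import math
import random


def chi2_sf_even_df(x, df):
    """P(X > x) for a chi-square variable with an even number of degrees of freedom."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i        # accumulates (x/2)^i / i!
        total += term
    return math.exp(-half) * total


def ljung_box_pvalue(x, lags=4):
    """Ljung-Box test: Q = n(n+2) * sum_k rho_k^2 / (n - k), compared with chi2(lags)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    denom = sum(d * d for d in dev)
    q = 0.0
    for k in range(1, lags + 1):
        rho_k = sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
        q += rho_k * rho_k / (n - k)
    return chi2_sf_even_df(n * (n + 2) * q, lags)


def rejection_rate(phi1, T, alpha, replications, rng):
    """Share of AR(1) series r_t = phi1 * r_{t-1} + eps_t (r_0 = 0) rejected by the test."""
    rejections = 0
    for _ in range(replications):
        r_prev, series = 0.0, []
        for _ in range(T):
            r_prev = phi1 * r_prev + rng.gauss(0.0, 1.0)
            series.append(r_prev)
        if ljung_box_pvalue(series) < alpha:
            rejections += 1
    return rejections / replications


rng = random.Random(7)
for phi1 in [0.0, 0.1, 0.25, 0.5]:
    rate = rejection_rate(phi1, T=100, alpha=0.05, replications=500, rng=rng)
    label = "empirical size" if phi1 == 0.0 else "Monte Carlo power"
    print(f"phi1={phi1:.2f}  {label}: {rate:.3f}")
```

Evaluating `rejection_rate` over the whole grid of \(\phi_{1}\) values traces an estimated power function of the kind plotted for each T.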

Empirical size

The empirical sizes (Table 3) obtained in the different cases nearly coincide with the corresponding theoretical probabilities calculated for \(\alpha = 0.05\) (see Table 2), which empirically confirms the size distortions that arise depending on the test from which the procedure is started. Specifically:

When the procedure is initiated from methods that directly test the random walk 1 hypothesis, the results confirm that, for the runs test, the size of the procedure remains at approximately 5% (the significance level) for all T. When it is initiated from the BDS test, however, the size distortion is very high for small samples (0.6806 and 0.5425 at \(T = 25\) and 50, respectively), but it decreases as T increases (reaching 0.1334 at \(T = 1000\)).

The size distortions that the procedure exhibits when starting from methods that test only a necessary, but not sufficient, condition of the random walk hypothesis are less pronounced when starting from the variance ratio test than when starting from the Ljung–Box test. Moreover, in the former case the empirical size increases with the sample size T, from values close to the significance level (0.05) to more than twice that level (from 0.0603 at \(T = 25\) to 0.1287 at \(T = 1000\)). In the latter case (Ljung–Box), the empirical size oscillates between 18% and 22%, which does not suggest any clear influence of T.

Monte Carlo power

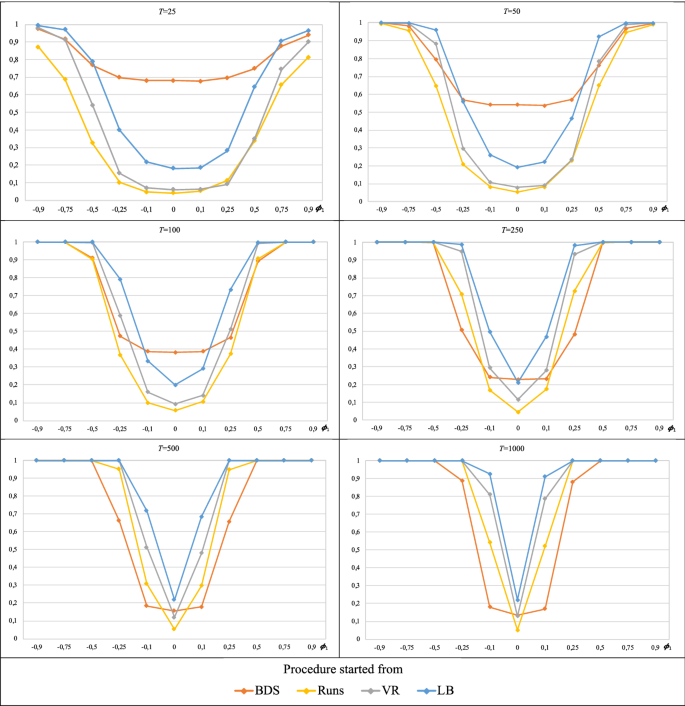

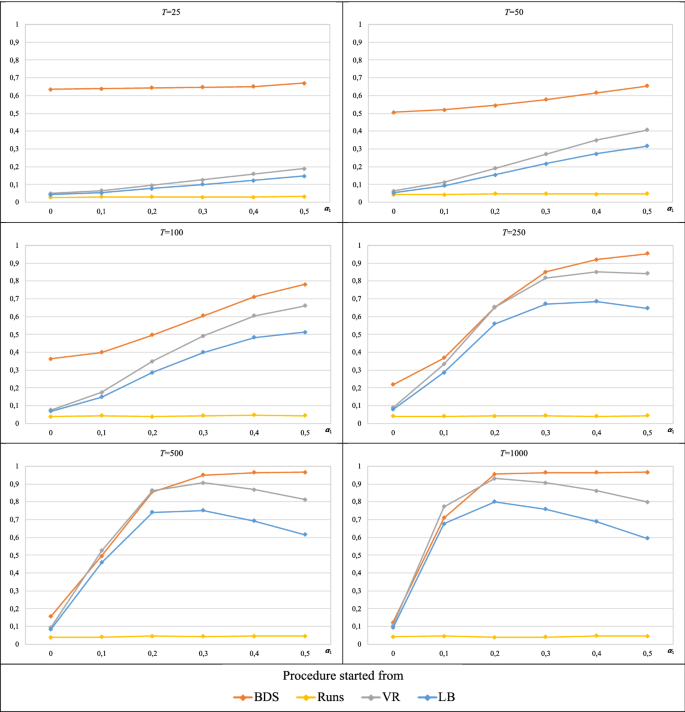

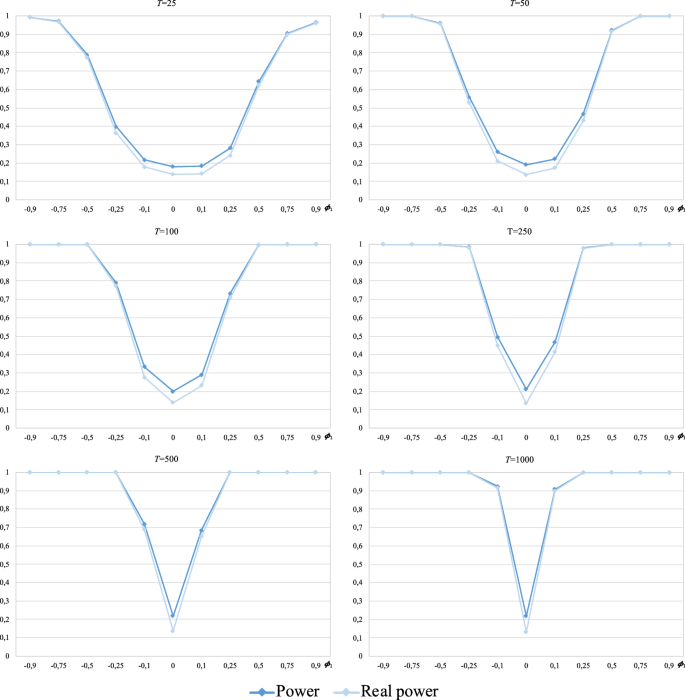

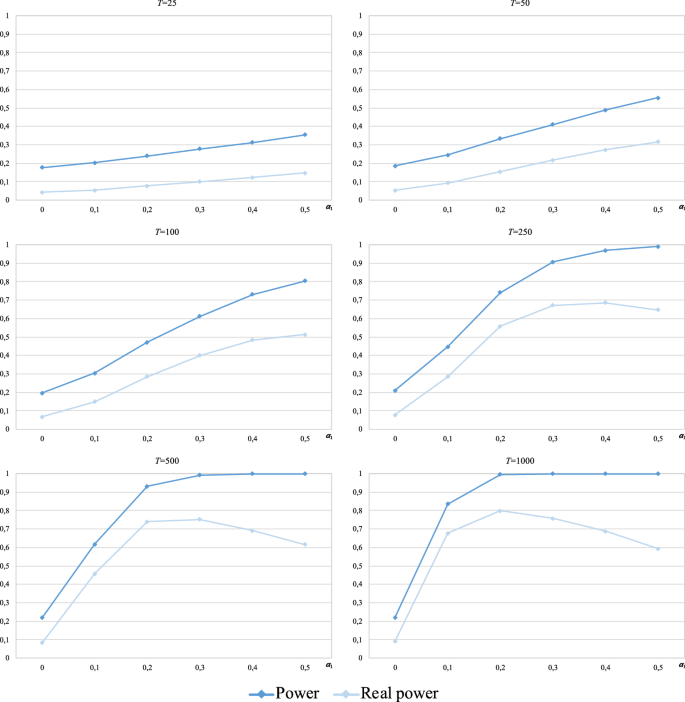

Table 4 reports, for each sample size T , the power calculations of the procedure started from each of the four tests considered in this study, i.e., the probability of rejecting the null hypothesis (random walk 1) with each version of the procedure on the assumption that the hypothesis is false. Likewise, since several alternatives to the null hypothesis (values that satisfy \(\phi_{1} \ne 0\) ) are considered, the corresponding power functions of the cited versions of the procedure are obtained and plotted in a comparative way for each T (Fig. 5 ).

Monte Carlo power of the procedure when starting from each test against linear correlation-only alternatives.

For each sample size T, and regardless of the test from which the procedure is started, the probabilities of rejecting the random walk 1 hypothesis are distributed symmetrically around \(\phi_{1} = 0\) (the random walk 1 hypothesis). These probabilities also tend to unity as \(\left| {\phi_{1} } \right|\) increases, reaching 1 at values of \(\left| {\phi_{1} } \right|\) that move closer to 0 as T grows. How quickly this happens depends on the test from which the procedure is started:

For the two smallest sample sizes (\(T = 25\) and 50), a power of 1 is hardly achieved for any of the alternatives: only at \(T = 50\), and only for \(\left| {\phi_{1} } \right| \ge 0.75\), is the power approximately 100 percent with the procedure initiated from any of the four tests. At \(T = 25\), on the other hand, the estimated powers of the procedure initiated from the BDS test for \(\left| {\phi_{1} } \right| \le 0.5\) are much higher than those of the other versions. A similar pattern appears at \(T = 50\), although the differences between the BDS version and the others are less pronounced and are restricted to alternatives with \(\left| {\phi_{1} } \right| \le 0.25\).

From \(T = 100\) onwards, the convergence of the estimated powers to unity differs according to the test from which the procedure is initiated. When starting from the Ljung–Box test or the variance ratio test, a power of approximately 1 is achieved for \(\left| {\phi_{1} } \right| \ge 0.5\) at \(T = 100\), whereas for larger sample sizes it is nearly reached for \(\left| {\phi_{1} } \right| \ge 0.25\). When the procedure is started from the BDS test, a power of 1 is reached for \(\left| {\phi_{1} } \right| \ge 0.75\) at \(T = 100\) and for \(\left| {\phi_{1} } \right| \ge 0.5\) at \(T \ge 250\) (note that even at \(T = 1000\) the estimated power does not exceed 0.89 for \(\left| {\phi_{1} } \right| = 0.25\)). Finally, when the procedure is initiated from the runs test, the value of \(\left| {\phi_{1} } \right|\) at which the power reaches unity decreases as T increases beyond 100: at \(T = 100\), unity is reached for \(\left| {\phi_{1} } \right| \ge 0.75\); at \(T = 250\), for \(\left| {\phi_{1} } \right| \ge 0.5\); and at \(T = 1000\), for \(\left| {\phi_{1} } \right| \ge 0.25\) (at \(T = 500\), the power is approximately 0.95 for \(\left| {\phi_{1} } \right| = 0.25\)). The plots in Fig. 5 show that the power function of the procedure initiated from the Ljung–Box test always lies above the others, i.e., this version is uniformly the most powerful for \(T \ge 100\).

Regardless of the test from which the procedure is started, a power of 1 is not achieved for \(\left| {\phi_{1} } \right| = 0.1\) for any sample size, not even at \(T = 1000\) (the best result corresponds to the Ljung–Box case with an estimated power of approximately 0.91, followed by the variance ratio and runs cases with values close to 0.8 and 0.53, respectively; the BDS case yields the worst result of approximately 0.18).

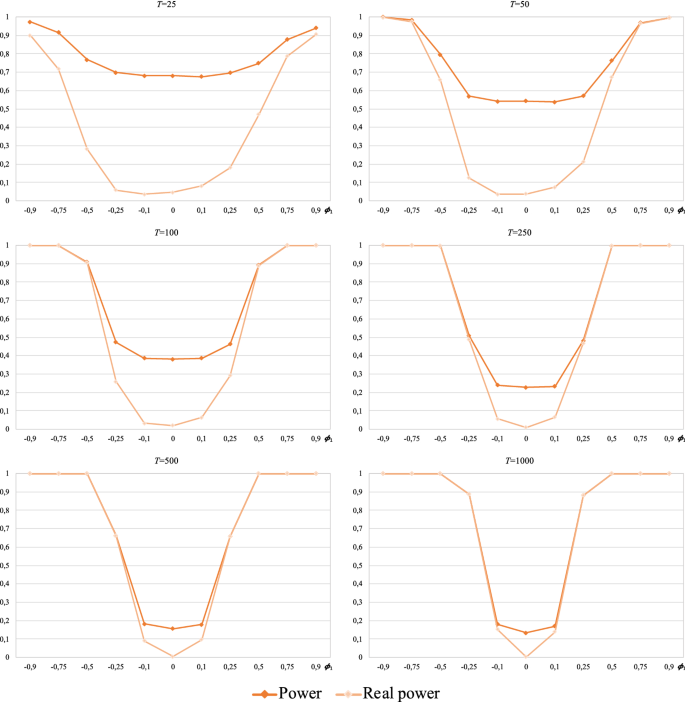

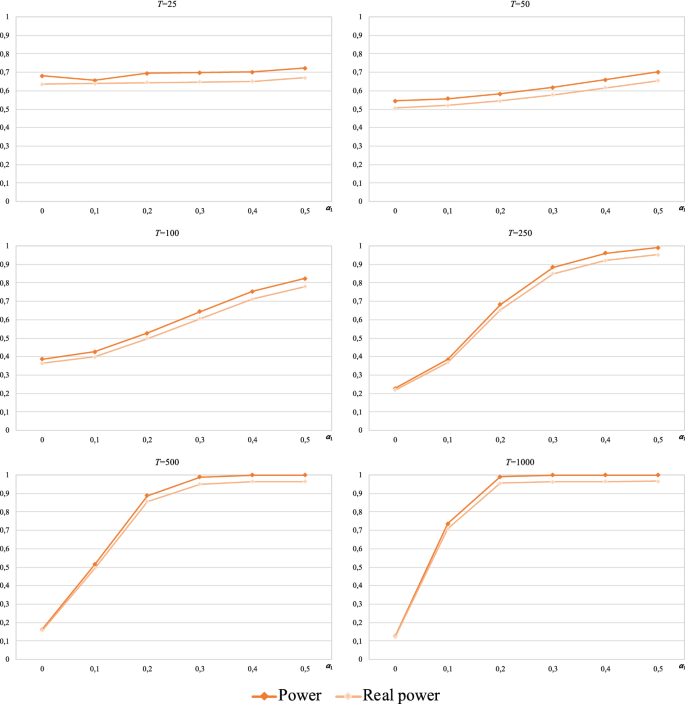

At this point, the power of the procedure, that is, its capability of rejecting the null hypothesis (random walk 1) when it is false, has been analysed. As already mentioned, for \(\phi_{1} \ne 0\), Model (6) yields series that do not follow any type of random walk. The proposed procedure, however, includes random walk 3 among its possible decisions. Therefore, subtracting from the power calculated for each version of the procedure the portion that corresponds to the (wrong) decision of random walk 3 gives the power that the procedure initiated from each test actually has, i.e., its capability to reject the null hypothesis in favour of true alternatives when the null hypothesis is false.
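The subtraction described above amounts to simple bookkeeping over the decisions recorded in one Monte Carlo experiment. In this sketch the decision labels (`"RW1"`, `"RW3"`, `"not a random walk"`) and the tallies are hypothetical placeholders, not results from the study; the only assumption carried over from the text is that any decision other than random walk 1 counts as a rejection, and that random walk 3 is a wrong decision under Model (6).

```python
from collections import Counter


def power_and_real_power(decisions, null_label="RW1", spurious_label="RW3"):
    """Return (power, real power) from the decisions of one Monte Carlo experiment.

    power      = share of replications that do not end in the null decision
    real power = power minus the share that ends in the spurious alternative
    """
    n = len(decisions)
    counts = Counter(decisions)              # missing labels count as 0
    rejections = n - counts[null_label]
    power = rejections / n
    real_power = (rejections - counts[spurious_label]) / n
    return power, real_power


# Hypothetical decision tallies for 10,000 replications at one (T, phi1) pair.
decisions = ["RW1"] * 1500 + ["RW3"] * 2500 + ["not a random walk"] * 6000
print(power_and_real_power(decisions))  # (0.85, 0.6)
```

With these made-up tallies, a quarter of the replications would be counted as rejections only because they ended in the spurious random walk 3 decision, which is the kind of gap the real power removes.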

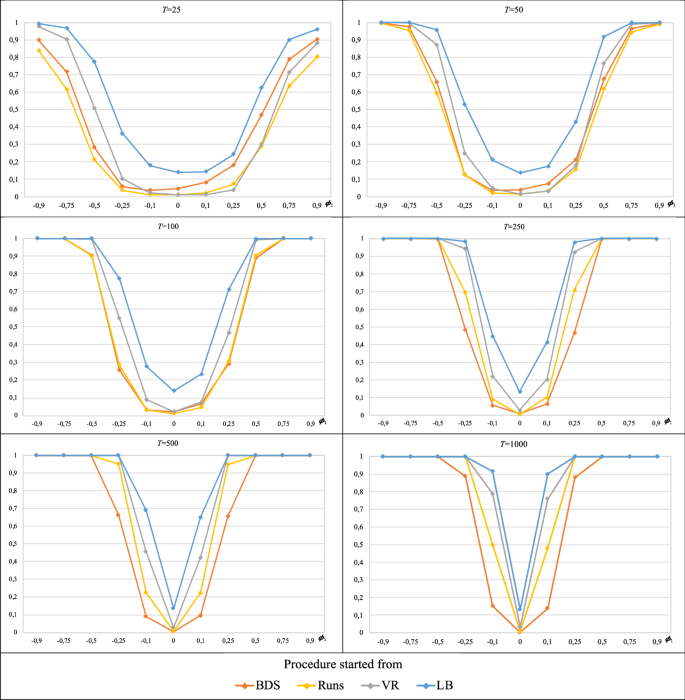

In this sense, Table 4 and Fig. 6 report, for each sample size T , the power calculations of the procedure initiated from each of the tests considered after subtracting the effect of the (false) alternative of random walk 3. Furthermore, the cited powers and those initially calculated for each version of the procedure are compared for each T in Figs. 9, 10, 11 and 12 (Appendix).

Real Monte Carlo power of the procedure when starting from each test against linear correlation-only alternatives.

When the procedure is started from the runs test, the variance ratio test or the Ljung–Box test (Appendix Figs. 10, 11, 12), what we call real power hardly differs from the power initially calculated for each sample size T (slight differences occur only for \(\left| {\phi_{1} } \right| \le 0.5\) with \(T \le 100\) and for \(\left| {\phi_{1} } \right| = 0.1\) with \(T \ge 250\)). Therefore, all the findings above regarding the power of these three versions still hold.

Nevertheless, the real power differs considerably from the initially calculated power when the procedure is started from the BDS test. The initial calculations indicated that this version was the most powerful for \(\left| {\phi_{1} } \right| \le 0.5\) at \(T = 25\) and for \(\left| {\phi_{1} } \right| \le 0.25\) at \(T = 50\) (with all values greater than 0.5), but the results in Table 4 and Fig. 6 show that the powers in these cases are actually much lower (only one case barely reaches 0.2). These differences persist at \(T = 100\), again for \(\left| {\phi_{1} } \right| \le 0.25\), but they begin to shrink from \(T = 250\) onwards (for \(T \ge 500\), the differences between the real power and the initially calculated power are minimal).

Consequently, in terms of the power referring only to true alternatives (linear correlation in this case), the procedure initiated from the Ljung–Box test is the most powerful.

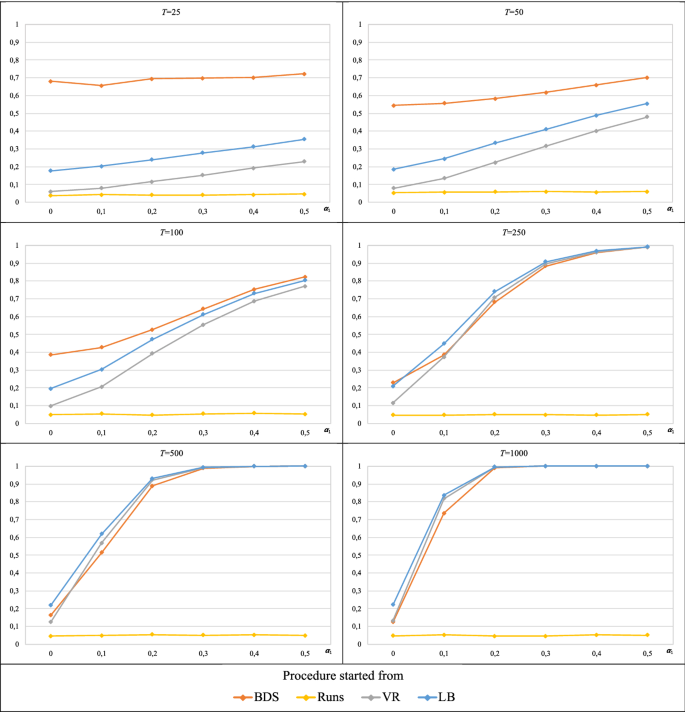

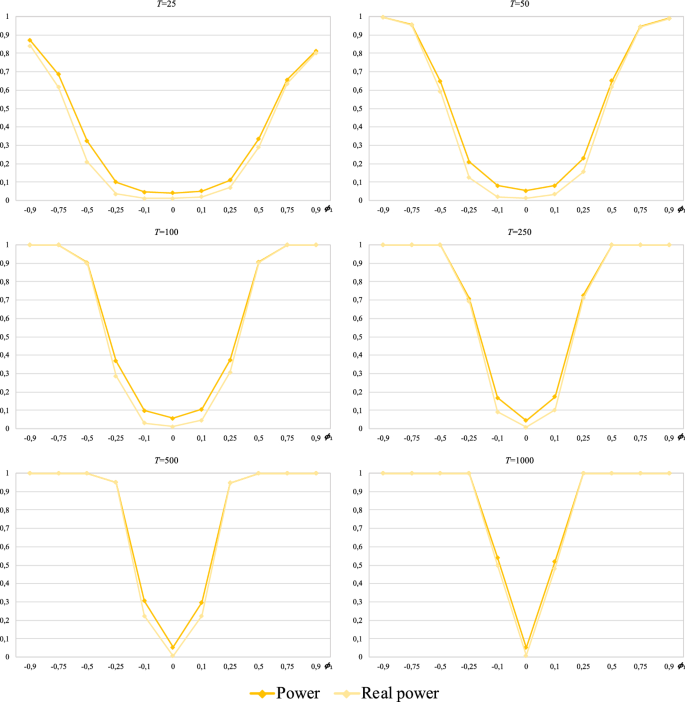

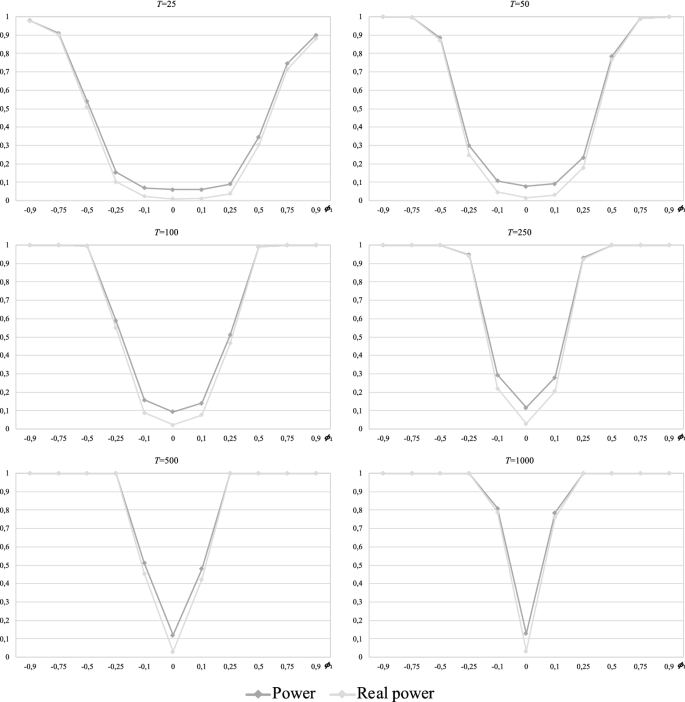

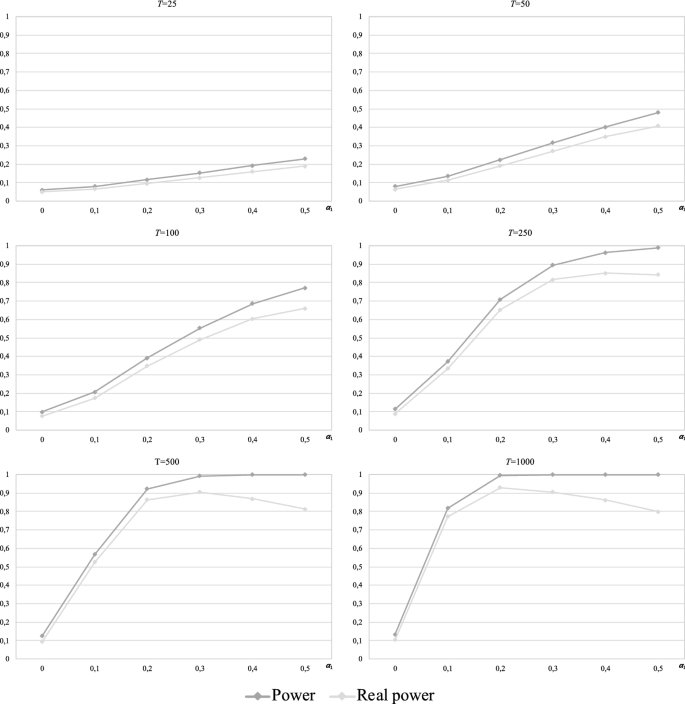

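(b2) The performance of the procedure in testing the random walk 1 hypothesis against alternatives involving only non-linear dependence (among the variables of the return series generating process) is analysed by means of an ARCH(1) model:

\[ r_{t} = \varepsilon_{t} \sqrt{h_{t}}, \qquad h_{t} = \alpha_{0} + \alpha_{1} r_{t-1}^{2} \qquad (7) \]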

where \(h_{t}\) and \(\varepsilon_{t}\) are mutually independent processes, \(h_{t}\) is stationary and \(\varepsilon_{t} \sim iid(0,1)\), with \(\alpha_{0} > 0\) and \(\alpha_{1} \ge 0\). Specifically, taking \(r_{0} = 0\) in (7), 10,000 samples of sizes T = 25, 50, 100, 250, 500 and 1000 of the series \(r_{t}\) are generated for \(\alpha_{0} = 1\) and each value of \(\alpha_{1}\) considered: 0, 0.1, 0.2, 0.3, 0.4 and 0.5 (footnote 9). In the particular case \(\alpha_{1} = 0\), Model (7) yields a return series that follows a random walk 1, whereas for \(\alpha_{1} > 0\) it yields series identified with a random walk 3, i.e., series generated by a process whose variables are uncorrelated but dependent (there are non-linear relationships among the variables; footnote 10). Therefore, when random walk 3 is accepted, it is possible to develop models that predict market volatility (ARCH- and GARCH-type models).
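The sketch below simulates such a series, assuming Model (7) is the standard ARCH(1) recursion \(r_{t} = \varepsilon_{t}\sqrt{h_{t}}\), \(h_{t} = \alpha_{0} + \alpha_{1} r_{t-1}^{2}\) with \(r_{0} = 0\); Gaussian innovations and the helper names are our assumptions. It illustrates the "uncorrelated but dependent" property: the returns show essentially no lag-1 autocorrelation, while their squares do. For ARCH(1) with \(3\alpha_{1}^{2} < 1\), the lag-1 autocorrelation of \(r_{t}^{2}\) equals \(\alpha_{1}\), so with \(\alpha_{1} = 0.3\) the second printed value should be near 0.3.

```python
import random


def simulate_arch1(alpha0, alpha1, T, rng):
    """Generate r_1..r_T from r_t = eps_t * sqrt(h_t), h_t = alpha0 + alpha1 * r_{t-1}^2, r_0 = 0."""
    r_prev = 0.0
    series = []
    for _ in range(T):
        h_t = alpha0 + alpha1 * r_prev * r_prev   # conditional variance given r_{t-1}
        r_prev = rng.gauss(0.0, 1.0) * h_t ** 0.5
        series.append(r_prev)
    return series


def lag1_autocorrelation(x):
    """Sample first-order autocorrelation of x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    return sum(dev[t] * dev[t - 1] for t in range(1, n)) / sum(d * d for d in dev)


rng = random.Random(11)
r = simulate_arch1(alpha0=1.0, alpha1=0.3, T=20000, rng=rng)
print(f"lag-1 autocorrelation of r_t:   {lag1_autocorrelation(r):+.3f}")
print(f"lag-1 autocorrelation of r_t^2: {lag1_autocorrelation([v * v for v in r]):+.3f}")
```

Setting `alpha1=0.0` reduces the recursion to \(r_{t} = \varepsilon_{t}\), i.e., the random walk 1 case.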

The procedure, starting from each of the four tests considered in this study, was applied at a 5% significance level to the series generated by each combination of T and \(\alpha_{1}\) [application of the process in Fig. 4 for expression (7)]. We then counted how many times each of the decisions contemplated by the two ways of applying the procedure described above was made.

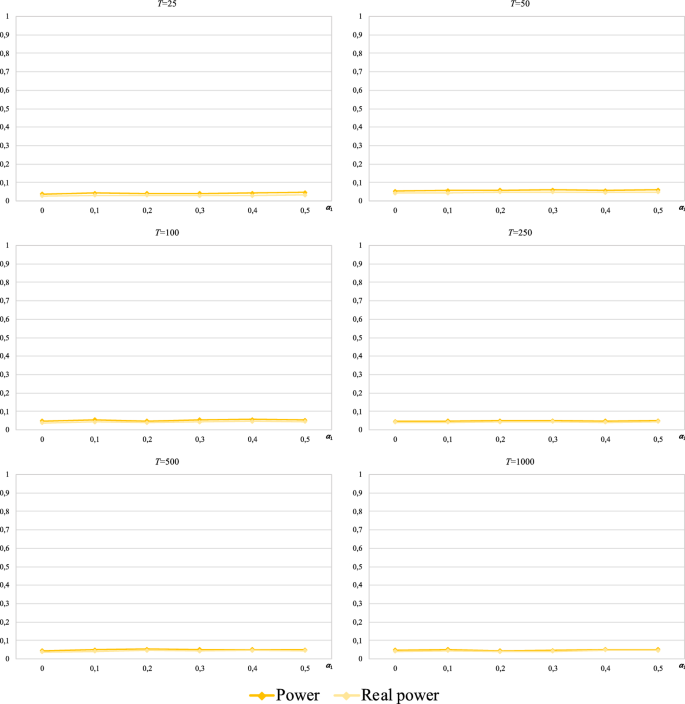

On the basis of these results, and analogously to Section (b1), we calculate, for each sample size T, the empirical size and the Monte Carlo power of each version of the procedure. Here, \(\alpha_{1} = 0\) implies that the random walk 1 hypothesis is true, and \(\alpha_{1} > 0\) implies that it is false (the series corresponds to a random walk 3).