Determining the level of evidence: Nonexperimental research designs

Affiliation.

- 1 Amy Glasofer is a nurse scientist at Virtua Center for Learning in Mt. Laurel, N.J., and Ann B. Townsend is an adult NP with The Nurse Practitioner Group, LLC.

- PMID: 33953103

- DOI: 10.1097/01.NURSE.0000731852.39123.e1

To support evidence-based nursing practice, the authors provide guidelines for appraising research based on quality, quantity, and consistency. This article, the second of a three-part series, focuses on nonexperimental research designs.

Copyright © 2021 Wolters Kluwer Health, Inc. All rights reserved.


Similar articles

- Determining the level of evidence: Nonresearch evidence. Glasofer A, Townsend AB. Glasofer A, et al. Nursing. 2021 Mar 1;51(3):53-57. doi: 10.1097/01.NURSE.0000733964.06881.23. Nursing. 2021. PMID: 33674537

- Sequential multiple assignment randomization trial designs for nursing research. Doorenbos AZ, Haozous EA, Jang MK, Langford D. Doorenbos AZ, et al. Res Nurs Health. 2019 Dec;42(6):429-435. doi: 10.1002/nur.21988. Epub 2019 Oct 10. Res Nurs Health. 2019. PMID: 31599468 Clinical Trial.

- Implementing the standards of best practice for simulation. Rutherford-Hemming T, Lioce L, Durham CF. Rutherford-Hemming T, et al. Nurse Educ. 2015 Mar-Apr;40(2):96-100. doi: 10.1097/NNE.0000000000000115. Nurse Educ. 2015. PMID: 25402713

- An Overview of Research Designs Relevant to Nursing: part 2: qualitative research designs. Driessnack M, Sousa VD, Mendes IA. Driessnack M, et al. Rev Lat Am Enfermagem. 2007 Jul-Aug;15(4):684-8. doi: 10.1590/s0104-11692007000400025. Rev Lat Am Enfermagem. 2007. PMID: 17957836 Review.

- Claiming our core: appraising qualitative evidence for nursing questions about human response and meaning. Grace JT, Powers BA. Grace JT, et al. Nurs Outlook. 2009 Jan-Feb;57(1):27-34. doi: 10.1016/j.outlook.2008.05.009. Nurs Outlook. 2009. PMID: 19150264 Review.



Nonexperimental research: strengths, weaknesses and issues of precision

European Journal of Training and Development

ISSN : 2046-9012

Article publication date: 6 September 2016

Purpose

Nonexperimental research, defined as any kind of quantitative or qualitative research that is not an experiment, is the predominant kind of research design used in the social sciences. How to present the results of nonexperimental research unambiguously and correctly, however, remains decidedly unclear, and this lack of clarity is possibly detrimental to applied disciplines such as human resource development. To clarify issues about the accurate reporting and generalization of nonexperimental research results, this paper aims to present information about the relative strength of research designs, followed by the strengths and weaknesses of nonexperimental research. Further, some possible ways to report nonexperimental findings more precisely without using causal language are explored. Next, the researcher takes the position that the results of nonexperimental research can be used cautiously, yet appropriately, for making practice recommendations. Finally, some closing thoughts about nonexperimental research and the appropriate use of causal language are presented.

Design/methodology/approach

The extant social science literature was reviewed to inform this paper.

Findings

Nonexperimental research, when reported accurately, makes a tremendous contribution because it can be used for conducting research when experimentation is not feasible or desired. It can also be used to make tentative recommendations for practice.

Originality/value

This article presents useful means to more accurately report nonexperimental findings through avoiding causal language. Ways to link nonexperimental results to making practice recommendations are explored.

- Research design

- Experimental design

- Causal inference

- Nonexperimental

- Social science research

- Triangulation

Reio, T.G. (2016), "Nonexperimental research: strengths, weaknesses and issues of precision", European Journal of Training and Development , Vol. 40 No. 8/9, pp. 676-690. https://doi.org/10.1108/EJTD-07-2015-0058

Emerald Group Publishing Limited

Copyright © 2016, Emerald Group Publishing Limited


Determining the level of evidence

Nonexperimental research designs.

Glasofer, Amy DNP, RN, NE-BC; Townsend, Ann B. DRNP, RN, ANP-C, CNS-C

Amy Glasofer is a nurse scientist at Virtua Center for Learning in Mt. Laurel, N.J., and Ann B. Townsend is an adult NP with The Nurse Practitioner Group, LLC.

The authors have disclosed no financial relationships related to this article.

This article was first published as: Glasofer A, Townsend AB. Determining the level of evidence: nonexperimental research designs. Nurs Crit Care . 2020;15(1):24-27.


This three-part series supports and describes evidence-based practice. The second installment focuses on the appraisal of nonexperimental research.


- Open access

- Published: 09 September 2023

Using public clinical trial reports to probe non-experimental causal inference methods

- Ethan Steinberg 1 ,

- Nikolaos Ignatiadis 2 ,

- Steve Yadlowsky 3 ,

- Yizhe Xu 1 &

- Nigam Shah 1

BMC Medical Research Methodology volume 23 , Article number: 204 ( 2023 ) Cite this article


Background

Non-experimental studies (also known as observational studies) are valuable for estimating the effects of various medical interventions, but are notoriously difficult to evaluate because the methods used in non-experimental studies require untestable assumptions. This lack of intrinsic verifiability makes it difficult both to compare different non-experimental study methods and to trust the results of any particular non-experimental study.

Methods

We introduce TrialProbe, a data resource and statistical framework for the evaluation of non-experimental methods. We first collect a dataset of pseudo “ground truths” about the relative effects of drugs by using empirical Bayesian techniques to analyze adverse events recorded in public clinical trial reports. We then develop a framework for evaluating non-experimental methods against that ground truth by measuring concordance between the non-experimental effect estimates and the estimates derived from clinical trials. As a demonstration of our approach, we also perform an example methods evaluation comparing propensity score matching, inverse propensity score weighting, and an unadjusted approach on a large national insurance claims dataset.

Results

From the 33,701 clinical trial records in our version of the ClinicalTrials.gov dataset, we are able to extract 12,967 unique drug/drug adverse event comparisons to form a ground truth set. During our corresponding methods evaluation, we are able to use that reference set to demonstrate that both propensity score matching and inverse propensity score weighting can produce estimates that have high concordance with clinical trial results and substantially outperform an unadjusted baseline.

Conclusions

We find that TrialProbe is an effective approach for probing non-experimental study methods, being able to generate large ground truth sets that are able to distinguish how well non-experimental methods perform in real world observational data.


Non-experimental studies (which are also known as observational studies) are valuable for estimating causal relationships in medical settings where randomized trials are not feasible due to either ethical or logistical concerns [ 1 ]. In addition, effects from randomized trials might not generalize to real-world use due to limited and non-representative study populations and differing clinical practice environments [ 2 ]. Accurately estimating these causal relationships is important, as learning which treatments are the most effective is a key component of improving health care. However, non-experimental studies are difficult to use in practice due to the absence of randomization, which forces them to rely on difficult-to-verify assumptions, such as the absence of unmeasured confounding and non-informative censoring [ 3 ]. These assumptions make it difficult to evaluate the performance of non-experimental methods, which is an important step for verifying the reliability of these techniques as well as determining the relative merits of different methods. Despite significant recent progress in non-experimental study evaluation (detailed in Section “Related work”), this difficulty with evaluation hampers research, by making it more difficult to develop more effective methods, and hinders practice, as clinicians are hesitant to use evidence generated from non-experimental studies even in situations where clinical trial derived evidence is not available [ 4 , 5 , 6 ].

In this work, we introduce TrialProbe, a new principled approach for the systematic appraisal of non-experimental causal inference methods. Our basic premise is that we can evaluate non-experimental causal inference methods by comparing adverse event effect estimates from non-experimental methods with published experimentally derived estimates from public ClinicalTrials.gov clinical trial reports. Compared to previous approaches for the evaluation of non-experimental methods (discussed in Section “Related work”), TrialProbe differs in three respects. First, we explicitly focus on active comparator study designs where one drug is directly compared to another drug, as those are easier to connect to potential non-experimental study designs [ 7 ]. Second, we estimate the magnitude of the effects extracted from the public clinical trial reports through an empirical Bayes approach that explicitly accounts for the heterogeneity of odds ratios across the clinical trials, the statistical information content (e.g., sample size) used to estimate each odds ratio, and the fact that most effects are very small. Third, we use those estimated effects to split our reference set into several subsets that contain drug effects of varying strengths, so that users can simultaneously understand the concordance between non-experimental and experimental methods for both stronger and weaker effects.

We then use TrialProbe to evaluate common non-experimental study methods in terms of their ability to identify causal relationships from a large national administrative claims dataset - Optum’s de-identified Clinformatics Data Mart Database. We find that available methods can reproduce a significant fraction of the reported effect and that adjusting for a low-dimensional representation of patient history outperforms a naive analysis that does not adjust for any covariates.

Related work

The importance of evaluating non-experimental methods is well-understood and ubiquitous. The most common approach for evaluation is based on simulation experiments, or more recently, based on semi-synthetic simulations that seek to mimic real observational datasets [ 8 , 9 , 10 , 11 , 12 ]. The upshot of simulation studies is that the ground truth is precisely known, and so non-experimental methods can be compared with respect to any metric of interest. Nevertheless, it is difficult to determine whether or not those simulations provide a realistic confounding structure that is similar to observational data in practice.

Non-experimental methods have also been evaluated in terms of reproducibility by evaluating whether it is possible to independently reproduce previously published non-experimental studies [ 13 ]. Reproducibility is an important and useful feature for non-experimental studies, but measuring reproducibility alone does not necessarily address the issue of whether non-experimental studies provide correct effect estimates.

Closer to our work, several authors have evaluated non-experimental methods by comparing them to results from RCTs. Some authors have used data from RCTs to estimate a causal effect, and then applied a non-experimental method only to the treatment arm of the same RCT [ 14 , 15 ] or to the treated subjects from the RCT along with control subjects drawn from survey datasets [ 16 ]. Furthermore, such approaches require access to patient-level data for each RCT.

Other authors have constructed pairs of published non-experimental studies and RCTs that assess the same intervention in similar populations [ 17 , 18 ]. Such an approach is appealing, as it directly compares non-experimental designs that researchers have pursued (and published). On the other hand, such an approach does not allow the large-scale and systematic exploration of variations in causal inference methods and is typically restricted to the study of dozens of effects. This approach is also subject to publication bias issues, which results in an under-reporting of non-significant effects in both experimental and non-experimental designs.

Another common approach—that most closely aligns with our work—for evaluating non-experimental causal inference methods is through reference sets [ 19 , 20 ]. A reference set is a collection of relationships about the effects of treatments that are independently verified, and treated as ground truth against which the ability of a non-experimental method to identify those effects from available data can be quantified. There have been several proposed approaches to create reference sets, the most prominent of which rely on either FDA labels or expert knowledge to declare known relationships between drugs and outcomes [ 20 ]. However, the actual construction of existing reference sets can be opaque. Instead, in TrialProbe we generate a sequence of nested reference sets that correspond to increasing levels of evidence for the strength of the causal effect. The construction of the TrialProbe reference sets is fully data-driven and reproducible. Furthermore, we are not aware of previous reference sets that focus on active comparator study designs.

RCT-Duplicate [ 21 ] is another closely related effort that attempts to quantify the performance of non-experimental methods by carefully reproducing the results of 32 clinical trials using insurance claims databases. This manual emulation of the trial design (to the extent feasible) allows RCT-Duplicate to very closely match the exact clinical trial setup, including details such as inclusion/exclusion criteria that cannot be matched by fully automated approaches such as ours. However, the increased effort per trial limits the number of RCTs that can be feasibly reproduced to just 32. Our work is similar in spirit but, by being fully automated and by taking advantage of the entire ClinicalTrials.gov database, increases the number of estimated effects by more than two orders of magnitude, to 12,967.

All the approaches we outlined above for the evaluation of non-experimental methods based on results from RCTs face the following difficulty: Even in an optimal situation, it is not expected that any non-experimental method will reproduce the entire ground truth in the reference set because the observational data usually comes from a different population than the population used to collect the ground truth [ 22 ]. Identification of a known relationship might fail for example because the academic medical center population used in an RCT might differ drastically from the general population available in the non-experimental data resource. Many other study design factors (e.g., whether the estimand is a hazard ratio in the non-experimental study and an odds ratio in the RCT) can further lead to deviations between the non-experimental study and the RCT. A related issue is that experimental studies also have a certain error rate, in that incorrect blinding, randomization, unrealistic usage, or other errors can cause an RCT to return incorrect effect estimates [ 2 ]. Nevertheless, a common assumption is that while the exact effect might differ, the effect identified in the observational data and the original “ground truth” should be correlated and good non-experimental methods should on average have greater correspondence with the provided ground truth [ 23 ]. Here we take this idea to an extreme and only check for concordance between the direction of effects in RCTs and the non-experimental methods [ 12 , 20 ]. A related evaluation approach, where one only seeks to recover the direction of an effect, has appeared in the causal discovery literature [ 24 ].

Methods

In this section we describe the TrialProbe approach. We describe the data source of the clinical trial reports (ClinicalTrials.gov), the processing of the raw data to a curated dataset of \(M={12,967}\) unique drug/drug adverse event comparisons, as well as the statistical approach that we propose for comparing non-experimental causal inference methods.

The primary data source: ClinicalTrials.gov

ClinicalTrials.gov serves as a public repository for clinical trials carried out in the United States and abroad. The database contains pre-registration information, trial status, and results as provided by researchers conducting the trials. Many clinical trials are legally required to report results to ClinicalTrials.gov within 1 year of study completion, with a compliance rate of over 40% [ 25 ]. In this work we use the June 4, 2020 version of the database, which includes 33,701 clinical trials. Note that we are not using patient level data collected in the trial, but the public report posted at ClinicalTrials.gov.

Extracting trials with an active comparator design

We focus on drug versus drug active comparator clinical trials, which evaluate one drug directly against another. The reason is that such comparisons are easier to conduct in the context of a non-experimental study design. In contrast, placebo or standard of care based trials are more difficult to work with because there is no clear corresponding case-control non-experimental study that can be used to estimate effects. We additionally restrict our analysis to higher quality clinical trials using the study design reported on ClinicalTrials.gov. We implement a quality filter by inspecting the reported randomization and blinding information and explicitly removing trials that are either not randomized or do not use participant blinding.

The results section of each active comparator clinical trial record consists of a set of intervention arms as well as the primary outcomes and adverse events associated with each arm. The primary outcomes and side effects are all specified in natural language and must be mapped to standardized terminologies. We discard the primary outcomes because it is difficult to consistently map them to electronic healthcare data sources due to a wide diversity of measurements and a lack of standardized terminology. We instead focus on the adverse events because they are specified using MedDRA terminology and because mappings to corresponding condition codes are available for healthcare data sources. We obtain a standardized version of these adverse outcomes by mapping them to ICD10 using the dictionary mappings contained within UMLS 2019AB.

The drug mentions in the ClinicalTrials.gov records are specified in an ad-hoc manner in terms of brand names, ingredients, dosages and/or more specialized names. As a preliminary step, we filter out all treatment arms with fewer than 100 patients, as trials of that size frequently do not have enough power to obtain statistical significance. We then use the RxNorm API to transform the text descriptions of drugs into RxNorm ingredient sets. We require at least 50% of the tokens to match in order to avoid false positives. Treatment arms with more than one ingredient (due to either containing multiple drugs or drugs with multiple active ingredients) are also filtered out. As an additional quality control step, we remove intervention arms that contain plus (“+”) signs in their names, which usually indicate combination treatments that RxNorm is not always able to detect and map to ingredients correctly. Finally, we map those RxNorm ingredient sets to Anatomical Therapeutic Chemical (ATC) codes so that we can find the corresponding drugs more easily in our ATC code annotated observational data. We verified that this automated drug name extraction and mapping step did not introduce significant errors by manually inspecting a set of 100 randomly selected mapped trials and double-checking that all drugs in those trials were resolved to the correct RxNorm ingredients.

One important feature of ClinicalTrials.gov data is that it often contains records where the same drug-drug comparisons have been tested in multiple trials. We aggregate side effect event counts and participant counts for trials with identical drug combinations and outcome measurements. Similarly, we also aggregate counts across arms where the same drug was evaluated with different dosages. This aggregation procedure has the dual purpose of strengthening the reliability of consistent true effects while helping to down-weight trials with conflicting effects.
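The aggregation step can be sketched as a simple grouped sum. This is a minimal illustration, not the authors' pipeline: the record layout, the function name `aggregate_comparisons`, and the drug and event codes in the usage note are all hypothetical.

```python
from collections import defaultdict


def aggregate_comparisons(records):
    """Sum event and participant counts over records that share the same
    pair of drugs and the same adverse event.

    Each record is a hypothetical tuple:
    (drug_a, drug_b, event_code, events_a, n_a, events_b, n_b).
    """
    totals = defaultdict(lambda: [0, 0, 0, 0])
    for drug_a, drug_b, event_code, events_a, n_a, events_b, n_b in records:
        # Put the pair in a canonical order so that "A vs B" and "B vs A"
        # land in the same bucket, swapping the counts along with the names.
        if drug_a > drug_b:
            drug_a, drug_b = drug_b, drug_a
            events_a, events_b = events_b, events_a
            n_a, n_b = n_b, n_a
        cell = totals[(drug_a, drug_b, event_code)]
        cell[0] += events_a
        cell[1] += n_a
        cell[2] += events_b
        cell[3] += n_b
    return {key: tuple(cell) for key, cell in totals.items()}
```

For example, two records for the same pair reported in opposite order, `("drugA", "drugB", "I10", 5, 100, 2, 100)` and `("drugB", "drugA", "I10", 1, 150, 4, 150)`, collapse into a single entry with pooled counts. Arms of the same drug at different dosages would be pooled the same way once both map to the same ingredient.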

We also note that in an active comparator design, there is typically no concrete choice for the baseline arm (in contrast to, e.g., placebo or standard of care trials); the roles of the two arms are symmetric. To express this symmetry, we reorder all pairs of drugs under comparison (for each adverse event) in such a way that the sample odds ratio is \(\ge 1\).
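The reordering can be illustrated with a short sketch. The helper names are hypothetical, and the 0.5 continuity correction (used here only to avoid division by zero when a cell is empty) is an assumption of this example, not something stated in the text.

```python
def sample_odds_ratio(events_a, n_a, events_b, n_b, correction=0.5):
    """Sample odds ratio of the adverse event for arm A relative to arm B.

    The continuity correction is an assumption of this sketch.
    """
    a = events_a + correction            # A, event
    b = (n_a - events_a) + correction    # A, no event
    c = events_b + correction            # B, event
    d = (n_b - events_b) + correction    # B, no event
    return (a * d) / (b * c)


def orient_pair(drug_a, counts_a, drug_b, counts_b):
    """Order the two arms so that the sample odds ratio is >= 1.

    counts_* are (events, participants) tuples.
    """
    if sample_odds_ratio(*counts_a, *counts_b) >= 1:
        return drug_a, counts_a, drug_b, counts_b
    return drug_b, counts_b, drug_a, counts_a
```

Because swapping the arms inverts the odds ratio, exactly one of the two orderings has a ratio of at least 1 (both do when the ratio equals 1).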

At the end of this process, we have compiled \(M={12,967}\) unique drug versus drug treatment adverse event comparisons. The summarized data for the i-th entry comprises the ICD10 code of the adverse event, the ATC codes of the two drugs being compared, and the contingency table \(Z_i\):
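One way to write the table referenced as (1) is shown here. The symbols are assumptions of this sketch rather than confirmed notation: \(X_{A,i}\) and \(X_{B,i}\) count participants who experienced the adverse event under each drug, and \(Y_{A,i}\) and \(Y_{B,i}\) count those who did not.

```latex
\[
Z_i \;=\;
\begin{array}{l|cc}
 & \text{event} & \text{no event} \\ \hline
\text{drug A} & X_{A,i} & Y_{A,i} \\
\text{drug B} & X_{B,i} & Y_{B,i}
\end{array}
\qquad (1)
\]
```

In this notation the sample odds ratio is \(X_{A,i} Y_{B,i} / (Y_{A,i} X_{B,i})\), which is at least 1 after the reordering described above.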

Below we describe our concrete statistical proposal for leveraging the above dataset to compare non-experimental causal inference methods.

Empirical Bayes effect size estimation

In this section, we develop an approach for estimating the effect sizes of all the drug versus drug treatment adverse event comparisons that adjusts for the following issues. First, most of the drug vs drug effects are very small (odds ratios close to 1), if not non-existent. Adjusting for this prior is necessary in order to reject spurious, but statistically significant, effects. Second, the comparisons contain vastly different amounts of information, with differing event rates, patient counts, etc., for each comparison. Taking into account the differences in information content is important for identifying effects that are weak, but strongly supported due to the quantity of clinical trial evidence.

Our estimation approach follows a tradition of methodological developments based on hierarchical modeling combined with an empirical Bayes analysis [ 26 , 27 , 28 , 29 ]. This approach explicitly learns a prior to take into account how most effects are small and takes advantage of the differing amounts of information in each comparison. We model the likelihood for the log odds ratio \(\omega _i\) of the i -th comparison (with contingency table ( 1 )) through the non-central hypergeometric distribution, that is,
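The standard form of this conditional likelihood, referenced as (2), can be sketched as follows. The notation is assumed for illustration: \(X_{A,i}\) is the number of events under drug A, \(n_{A,i}\) and \(n_{B,i}\) are the arm sizes, and \(m_i\) is the total number of events across both arms.

```latex
\[
L_i(\omega_i) \;=\;
\frac{\binom{n_{A,i}}{X_{A,i}} \binom{n_{B,i}}{m_i - X_{A,i}} \, e^{\omega_i X_{A,i}}}
     {\sum_{u=\max(0,\, m_i - n_{B,i})}^{\min(n_{A,i},\, m_i)}
      \binom{n_{A,i}}{u} \binom{n_{B,i}}{m_i - u} \, e^{\omega_i u}}
\qquad (2)
\]
```

At \(\omega_i = 0\) this reduces to the ordinary hypergeometric probability that underlies Fisher's exact test.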

The likelihood \(L_i(\omega _i)\) for the analysis of \(2 \times 2\) contingency tables has been proposed by, e.g., [ 30 , 31 , 32 , 33 ], and is derived by conditioning on the margins of the table \(Z_i\) —in entirely the same way as in the derivation of Fisher’s exact test.
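A minimal Python sketch of this likelihood is given below, computed in log space so that large binomial coefficients do not overflow. The function names and argument order are hypothetical; `n_a` and `n_b` are arm sizes and `x_a`, `x_b` are event counts.

```python
from math import exp, lgamma, log


def log_comb(n, k):
    """Log of the binomial coefficient C(n, k)."""
    return lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)


def log_likelihood(x_a, n_a, x_b, n_b, omega):
    """Log of Fisher's non-central hypergeometric likelihood for the log
    odds ratio `omega` (drug A relative to drug B), conditional on the
    margins of the 2x2 table."""
    m = x_a + x_b                        # total number of events
    lo, hi = max(0, m - n_b), min(n_a, m)
    log_terms = [
        log_comb(n_a, u) + log_comb(n_b, m - u) + omega * u
        for u in range(lo, hi + 1)
    ]
    top = max(log_terms)                 # log-sum-exp for stability
    log_norm = top + log(sum(exp(t - top) for t in log_terms))
    return log_terms[x_a - lo] - log_norm
```

Swapping the two arms and negating `omega` leaves the value unchanged, which is the symmetry the active comparator design relies on.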

In our hierarchical approach, we further model the \(\omega _i\) as exchangeable random effects, independent of the margins of \(Z_i\) , with:
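Based on the description that follows (an unknown prior \(G\), symmetric around 0), the random effects model referenced as (3) can be sketched as:

```latex
\[
\omega_i \;\stackrel{\text{iid}}{\sim}\; G,
\qquad G \text{ symmetric around } 0
\qquad (3)
\]
```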

In contrast to a fully Bayesian approach, we do not posit knowledge of G , but instead follow the empirical Bayes paradigm and estimate G based on the data \(Z_1,\dotsc ,Z_M\) as follows:
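A form of the estimator referenced as (4) that is consistent with the surrounding description (maximizing the marginal log-likelihood over symmetric distributions) is sketched below. The exact typesetting is an assumption.

```latex
\[
\widehat{G} \;\in\; \operatorname*{argmax}_{G \text{ symmetric around } 0}\;
\sum_{i=1}^{M} \log \int L_i(\omega)\, dG(\omega)
\qquad (4)
\]
```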

Equation ( 4 ) is an optimization problem over all symmetric distributions G and the objective is the marginal log-likelihood—each component likelihood \(L_i(\cdot )\) ( 2 ) is integrated with respect to the unknown G . The estimator \(\widehat{G}\) is the nonparametric maximum likelihood estimator (NPMLE) of Kiefer and Wolfowitz [ 34 ], and has been used for contingency tables [ 30 ]. We note that in contrast to previous works [ 30 ], we also enforce symmetry of G around 0 in ( 3 ), ( 4 ). The reason is that, as explained in Section “Extracting trials with an active comparator design”, our active comparator design setting is symmetric with respect to the drugs under comparison.
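To make the optimization concrete, here is a small sketch that approximates the symmetric NPMLE by restricting \(G\) to a fixed grid of log odds ratios and fitting the grid weights with the EM algorithm. Both the grid discretization and the use of EM are choices made for this illustration. The text does not say which solver the authors used, and all names here are hypothetical.

```python
from math import exp, lgamma, log


def log_comb(n, k):
    return lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)


def likelihood(table, omega):
    """Non-central hypergeometric likelihood of one table.

    table = (x_a, n_a, x_b, n_b): event counts and arm sizes.
    """
    x_a, n_a, x_b, n_b = table
    m = x_a + x_b
    lo, hi = max(0, m - n_b), min(n_a, m)
    terms = [log_comb(n_a, u) + log_comb(n_b, m - u) + omega * u
             for u in range(lo, hi + 1)]
    top = max(terms)
    log_norm = top + log(sum(exp(t - top) for t in terms))
    return exp(terms[x_a - lo] - log_norm)


def fit_symmetric_npmle(tables, grid, n_iter=200):
    """EM for mixing weights on `grid`, constrained to be symmetric.

    `grid` must be sorted and symmetric about 0 (grid[k] == -grid[-1-k]).
    """
    size = len(grid)
    lik = [[likelihood(t, g) for g in grid] for t in tables]
    weights = [1.0 / size] * size
    for _ in range(n_iter):
        # E-step: posterior responsibility of each grid point per table.
        counts = [0.0] * size
        for row in lik:
            joint = [w * l for w, l in zip(weights, row)]
            total = sum(joint)
            for k in range(size):
                counts[k] += joint[k] / total
        # M-step under the symmetry constraint: average mirrored weights.
        weights = [c / len(tables) for c in counts]
        weights = [(weights[k] + weights[size - 1 - k]) / 2
                   for k in range(size)]
    return weights
```

Averaging each weight with its mirror image is the exact maximizer of the M-step objective when mirrored grid points are forced to share a weight, so each iteration still does not decrease the marginal log-likelihood. A finer grid gives a closer approximation at higher cost.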

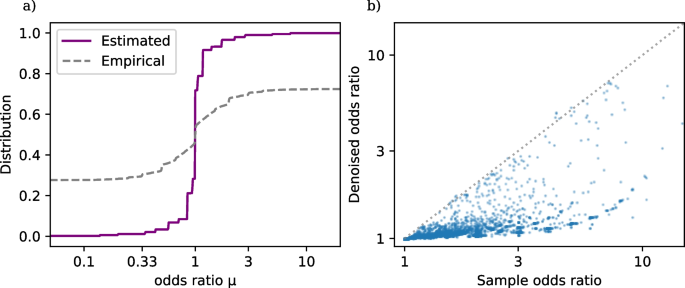

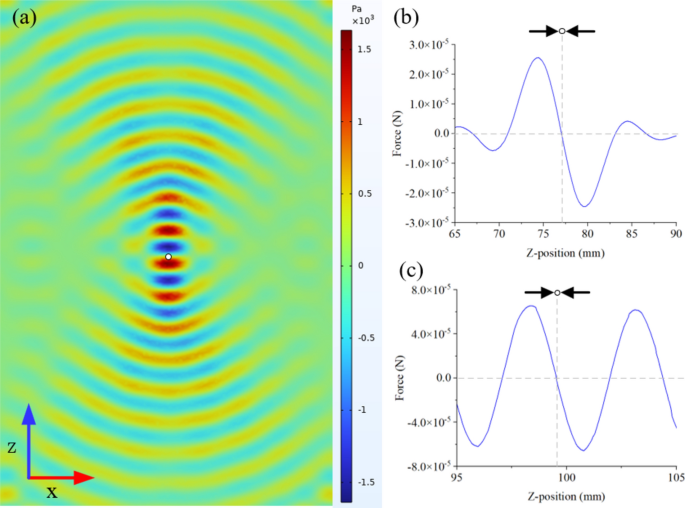

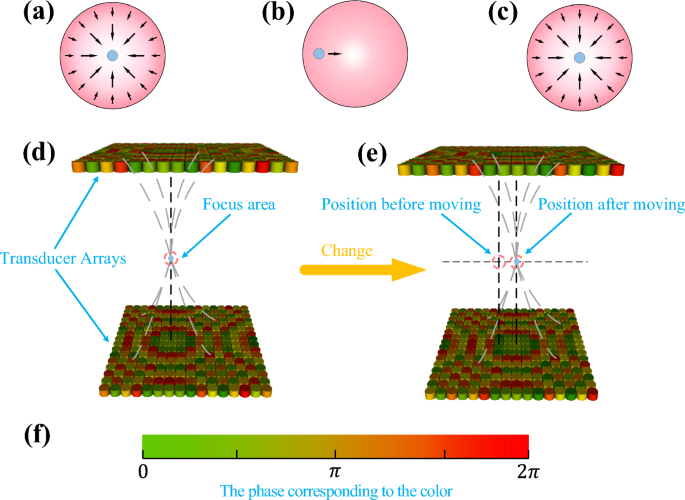

Figure 1a shows the estimated distribution function \(\widehat{G}\) ( 4 ) based on the TrialProbe dataset (in terms of odds ratios \(\textrm{exp}(\omega _i)\), but with a logarithmic x-axis scale), as well as the empirical distribution of sample odds ratios. We observe that even though the sample odds ratios are quite spread out, the NPMLE \(\widehat{G}\) is substantially more concentrated around odds ratios near 1 (that is, log odds ratios near 0). This is consistent with the intuition that for an active comparator design study, side effects will often be similar for the two drugs under comparison (but not always).

Fig. 1 (a) Distribution function of drug versus drug adverse event odds ratios in TrialProbe. \(\widehat{G}\) is estimated via nonparametric maximum likelihood as in ( 4 ), while the dashed curve is the empirical distribution of sample odds ratios. (b) Denoised vs. raw odds ratios. Denoising ( 5 ) is done by computing the posterior mean of the log odds ratio given the data for the i-th comparison and the estimated \(\widehat{G}\).

Finally, to create an effect estimate for the drug versus drug treatment adverse event comparisons, we use the plug-in principle: we use the estimated \(\widehat{G}\) to compute denoised point estimates of the log odds ratios via the empirical Bayes rule:
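The Figure 1 caption describes the denoised estimate as the posterior mean of the log odds ratio given the data and \(\widehat{G}\). Under that description, the rule referenced as (5) can be sketched as follows (typesetting assumed):

```latex
\[
\widehat{\omega}_i^{\text{EB}}
\;=\; \mathbb{E}_{\widehat{G}}\!\left[\omega_i \mid Z_i\right]
\;=\; \frac{\int \omega \, L_i(\omega)\, d\widehat{G}(\omega)}
           {\int L_i(\omega)\, d\widehat{G}(\omega)}
\qquad (5)
\]
```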

Figure 1b plots \(\textrm{exp}(\widehat{\omega }_i^{\text {EB}})\) against the sample odds ratios. We observe that the rule \(\widehat{\omega }_i^{\text {EB}}\) automatically shrinks most sample log odds ratios toward 0 (equivalently: \(\textrm{exp}(\widehat{\omega }_i^{\text {EB}})\) shrinks most sample odds ratios toward 1), while rigorously accounting for the varying effective sample size of each comparison (so that shrinkage toward 1 is heterogeneous). Table 1 lists the ten entries of TrialProbe with the largest denoised odds ratios \(\textrm{exp}(\widehat{\omega }_i^{\text {EB}})\).
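The shrinkage behaviour can be seen in a toy calculation. This sketch assumes the prior has been discretized to a grid of log odds ratios with weights, and that the likelihood of one comparison has already been evaluated at each grid point. The function name and the numbers in the usage note are illustrative only.

```python
def posterior_mean(grid, prior_weights, lik_row):
    """Posterior mean of the log odds ratio for one comparison under a
    discrete prior: sum(w * L * omega) / sum(w * L)."""
    numerator = sum(w * l * g for g, w, l in zip(grid, prior_weights, lik_row))
    denominator = sum(w * l for w, l in zip(prior_weights, lik_row))
    return numerator / denominator
```

With a grid of `[-1.0, 0.0, 1.0]`, prior weights `[0.25, 0.5, 0.25]`, and likelihood values `[0.1, 0.2, 0.4]`, the likelihood alone favours a log odds ratio of 1, but the posterior mean is pulled toward 0 because half of the prior mass sits there. A comparison with more data has a more sharply peaked likelihood and is therefore shrunk less.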

Effect size ranking and subsetting

Given our effect size estimates computed through empirical Bayes, we rank drug vs drug adverse event comparisons by effect size magnitude [ 35 ] and construct subsets of our reference set that only contain effects greater than a chosen magnitude.

There is a challenging trade-off when choosing the effect size threshold required to be included in the reference set. Stronger effects should be more resilient to errors in either the clinical trial or non-experimental study design, but might exclude moderate effects that clinicians and researchers are interested in estimating with non-experimental methods.

Due to that complicated trade-off, we do not choose a static effect size threshold and instead perform all analyses with all possible effect size thresholds. This strategy also allows us to provide some insight into how metrics degrade as weaker effects are allowed in the reference set.

We thus define a family of reference sets \(S_t\) , where t is the minimum required denoised odds ratio to be included in the set. Each set \(S_t\) is a subset of TrialProbe , defined as follows:
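Reading "minimum required denoised odds ratio" as an inclusive threshold (the boundary convention is an assumption of this sketch), the definition referenced as (6) can be written as:

```latex
\[
S_t \;=\; \left\{\, i \in \textit{TrialProbe} \;:\;
\exp\!\left(\widehat{\omega}_i^{\text{EB}}\right) \ge t \,\right\}
\qquad (6)
\]
```

The sets are nested: \(S_{t'} \subseteq S_t\) whenever \(t' \ge t\), so raising the threshold keeps only the stronger effects.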

Evaluation: concordant sign rate

As explained previously, there are many possible reasons why the exact effect size from a non-experimental assessment of a causal effect may not match the results of a clinical trial. We propose to handle this by only looking at the estimated effect direction for those effects which are known to be large. We additionally only compare concordance for cases where the non-experimental method returns a statistically significant result, as this both removes cases where we wouldn’t expect the non-experimental assessment to match and better aligns with how non-experimental assessments are used in practice. The basic premise of our approach is the following.

Consider the comparison of two drugs with respect to an adverse event. Suppose that:

- In the clinical trial report, there is strong evidence that \(\omega _A \gg \omega _B\), that is, there is strong evidence that the adverse event rate under drug A is substantially larger compared to drug B.

- The non-experimental causal inference method yields a significant p-value, providing evidence against the null hypothesis (that both drugs have the same adverse event rate).

- According to the non-experimental method, drug B leads to a higher adverse event rate compared to drug A, that is, the direction of the effect is the opposite compared to the clinical trial evidence.

Then, we are confident that the non-experimental method yields misleading evidence in this case as it provides statistically significant effects in the wrong direction compared to the ground truth.

We instantiate the above framework as follows. We seek to systematically evaluate a non-experimental causal inference method \(\mathcal {O}\) , which we define as follows (see Section “Case study on Optum’s Clinformatics” for a concrete instantiation): \(\mathcal {O}\) is a mapping from two drugs (drug A and drug B) and an adverse event to a p -value and a predicted causal effect direction (i.e., whether drug A or drug B causes the adverse event more frequently). Specifying the mapping \(\mathcal {O}\) requires specification of the healthcare data resource, the protocol for extracting subjects treated with drug A, resp. drug B, and a statistical method (e.g., an observational method that adjusts for observed covariates) that returns a p -value and the predicted effect direction.

We define \(\mathcal {R}(\mathcal {O}) \subset \textit{TrialProbe}\) as the set of comparisons such that the non-experimental study returns a p -value \(\le 0.05\) . In order to ensure that we only evaluate larger effects, we use the \(S_t\) subsets of TrialProbe defined in the previous section which require each entry in the set to have an empirical Bayes denoised odds ratio greater than t .

We then define the Concordant Sign Rate, as:
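Following the verbal description of this metric (the fraction of statistically significant results that have a concordant sign), the definition can be written as below. The set-builder wording is a reconstruction under that description and not necessarily the authors' exact notation:

\[ \text {CSR}(\mathcal {S}_t, \mathcal {O}) = \frac{\# \left\{ i \in \mathcal {S}_t \cap \mathcal {R}(\mathcal {O}) \, : \, \text {the direction from } \mathcal {O} \text { agrees with the trial direction for } i \right\} }{\# \left\{ i \in \mathcal {S}_t \cap \mathcal {R}(\mathcal {O}) \right\} } . \]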

Large values of \(\text {CSR}(\mathcal {S}_t, \mathcal {O})\) are preferable. We may define \(1-\text {CSR}(\mathcal {S}_t, \mathcal {O})\) as the discordant sign rate, which is analogous to the notion of false sign rate in multiple testing [ 36 , 37 ] and the type-S (“sign”) error [ 38 ]. In the present setting, however, there is no precise notion of “true” and “false” sign, and instead we evaluate only based on concordance/discordance with the effect derived from the public clinical trial reports.

For every \(\mathcal {S}_t\) and every non-experimental causal inference method \(\mathcal {O}\) , we compute two metrics: the fraction of statistically significant results that have a concordant sign (as in ( 7 )) and the fraction of entries of \(\mathcal {S}_t\) recovered (as in being marked statistically significant with concordant sign). The concordant sign rate gives an indication of how reliable a non-experimental method is and the fraction recovered gives an indication of its power.
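The two metrics can be illustrated with a minimal Python sketch. The `Comparison` record, its field names, and the toy entries are hypothetical and are not part of the TrialProbe code; the sketch only assumes that each reference-set entry carries a denoised odds ratio, a trial direction, and the p-value and direction returned by the non-experimental method.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Comparison:
    """One reference-set entry paired with a non-experimental result (hypothetical layout)."""
    denoised_odds_ratio: float  # strength of the trial effect (1.0 means no effect)
    trial_direction: str        # "A" or "B": drug with the higher event rate in the trial
    p_value: float              # p-value returned by the non-experimental method
    method_direction: str       # "A" or "B": direction returned by the method


def evaluate(entries: List[Comparison], t: float,
             alpha: float = 0.05) -> Tuple[Optional[float], Optional[float]]:
    """Return (concordant sign rate, fraction recovered) on the subset S_t."""
    s_t = [e for e in entries if e.denoised_odds_ratio >= t]
    significant = [e for e in s_t if e.p_value <= alpha]
    concordant = [e for e in significant
                  if e.method_direction == e.trial_direction]
    # CSR is undefined when the method returns no significant result on S_t.
    csr = len(concordant) / len(significant) if significant else None
    recovered = len(concordant) / len(s_t) if s_t else None
    return csr, recovered


entries = [
    Comparison(3.0, "A", 0.01, "A"),  # significant, concordant
    Comparison(2.5, "A", 0.03, "B"),  # significant, discordant
    Comparison(2.2, "B", 0.40, "B"),  # not significant, so not recovered
    Comparison(1.3, "B", 0.02, "B"),  # significant, concordant, weak effect
]
for t in (1.0, 2.0):
    print(t, evaluate(entries, t))
```

Scanning `t` over a grid of thresholds gives the two curves that are plotted against the odds ratio threshold.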

Case study on Optum’s Clinformatics

To illustrate how TrialProbe may be applied, we consider a hypothetical investigator who is interested in comparing two drugs with respect to a specific adverse event and seeks to generate evidence for the comparison. The investigator has access to Optum’s de-identified Clinformatics Data Mart 8.0 medical claims dataset [ 39 ], a large US commercial claims dataset containing over 88 million patients that is frequently used for non-experimental studies.

The investigator proceeds as follows:

1. Cohorts are constructed systematically using the first drug reimbursement claim for either of the two drugs as the index time. Patients with a prior event or an event at the index time are excluded. At most 100,000 patients are sampled for each drug. Outcomes are measured until each record is censored (as indicated by the end of their healthcare enrollment in the Clinformatics dataset).

2. For the cohort generated as above, the investigator fits a Cox proportional hazards model with response equal to the first time the adverse event occurs and covariate equal to the indicator of treatment assignment to drug A (footnote 3).

3. The investigator reports a significant causal effect if the p -value from the Cox fit is \(\le 0.05\) and, in that case, declares the direction of the effect according to the estimated hazard ratio.
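The cohort construction in the first step can be sketched in Python as follows. The input layout (a list of claims and a dictionary of first event days), the function name, and the tie-breaking rule for same-day claims are assumptions made for illustration; the Clinformatics schema and the authors' extraction code are not shown in the text.

```python
import random
from typing import Dict, List, Tuple


def build_cohorts(claims: List[Tuple[str, str, int]],
                  first_event: Dict[str, int],
                  max_per_drug: int = 100_000,
                  seed: int = 0) -> Dict[str, List[str]]:
    """claims: (patient id, drug "A" or "B", day of reimbursement claim).
    first_event: patient id -> day of first adverse event (absent if none)."""
    # Index time = earliest claim for either drug; on ties the first seen wins.
    index: Dict[str, Tuple[str, int]] = {}
    for pid, drug, day in claims:
        if pid not in index or day < index[pid][1]:
            index[pid] = (drug, day)

    arms: Dict[str, List[str]] = {"A": [], "B": []}
    for pid in sorted(index):
        drug, index_day = index[pid]
        event_day = first_event.get(pid)
        # Exclude patients with an event before or at the index time.
        if event_day is not None and event_day <= index_day:
            continue
        arms[drug].append(pid)

    # Cap each arm at max_per_drug patients by random sampling.
    rng = random.Random(seed)
    for drug in ("A", "B"):
        if len(arms[drug]) > max_per_drug:
            arms[drug] = sorted(rng.sample(arms[drug], max_per_drug))
    return arms


claims = [("p1", "A", 10), ("p1", "B", 3), ("p2", "A", 20),
          ("p3", "B", 30), ("p4", "A", 40)]
events = {"p1": 5, "p2": 20, "p3": 45}
print(build_cohorts(claims, events))  # {'A': ['p4'], 'B': ['p1', 'p3']}
```

Patient `p2` is dropped because the event falls on the index day, and `p1` is assigned to drug B because the claim for B precedes the claim for A.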

Steps 1 to 3 comprise a non-experimental strategy \(\mathcal {O}\) . We also consider two additional non-experimental strategies that replace step 2 with step 2’ or step 2”:

2’. The investigator fits a propensity score matched (PSM) Cox model. The propensity score is estimated using logistic regression on a low-dimensional representation of the patient’s history obtained via a procedure by Steinberg et al. [ 40 ]. When performing matching, the investigator uses a 1:1 greedy matching algorithm on the logit scale with a caliper of 0.1. Once a matched cohort is chosen, the hazard ratio is estimated using a Cox regression by modeling the survival outcome as a function of the treatment status in the cohort. The calculation of the p -value corresponding to the hazard ratio ignores the estimation of the propensity scores.

2”. The investigator fits an inverse propensity score weighted (IPSW) Cox model. As in step 2’, the propensity score is estimated using logistic regression on a low-dimensional representation of the patient’s history obtained via a procedure by Steinberg et al. [ 40 ]. The calculation of the p -value corresponding to the hazard ratio ignores the estimation of the propensity scores.
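The 1:1 greedy matching used in the PSM variant can be sketched in Python as below. Several details are assumptions, since the text does not specify them: the caliper of 0.1 is taken as an absolute distance on the logit scale (rather than a multiple of the standard deviation), treated patients are processed in the order given, and matching is done without replacement. The identifiers and propensity scores are made up.

```python
import math
from typing import List, Tuple


def logit(p: float) -> float:
    """Log-odds of a propensity score strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def greedy_match(treated: List[Tuple[str, float]],
                 control: List[Tuple[str, float]],
                 caliper: float = 0.1) -> List[Tuple[str, str]]:
    """Greedy 1:1 nearest-neighbour matching without replacement on the logit scale."""
    available = {cid: logit(p) for cid, p in control}
    pairs = []
    for tid, p in treated:
        if not available:
            break
        t_logit = logit(p)
        # Closest remaining control on the logit scale.
        best = min(available, key=lambda cid: abs(available[cid] - t_logit))
        # Keep the pair only if it falls within the caliper.
        if abs(available[best] - t_logit) <= caliper:
            pairs.append((tid, best))
            del available[best]
    return pairs


treated = [("t1", 0.50), ("t2", 0.70), ("t3", 0.95)]
control = [("c1", 0.51), ("c2", 0.69), ("c3", 0.40)]
print(greedy_match(treated, control))  # [('t1', 'c1'), ('t2', 'c2')]
```

Patient `t3` stays unmatched because no remaining control lies within the caliper, which is how matching trades sample size for comparability.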

In what follows, we refer to these three non-experimental methods as “Unadjusted Cox”, “Cox PSM” and “Cox IPSW”. We note that there are many possible criticisms of all three approaches. For example, the first approach is naïve, in that it does not even attempt to adjust for confounding. The second approach adjusts for confounding, but also has caveats, e.g., the computed standard error may be overly conservative [ 41 ]. Finally, the third approach, IPSW, has relatively high variance and can be unstable, especially when there is minimal overlap. Nevertheless, it is plausible that an investigator would proceed using one of these non-experimental approaches (especially Cox PSM and Cox IPSW). With TrialProbe , we can probe some of the properties of these three non-experimental methods.
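The instability of IPSW under poor overlap can be made concrete with a small Python sketch. The weight formula is not stated in the text; the sketch assumes the standard inverse propensity weights (1/e for treated patients and 1/(1-e) for controls) and uses Kish's effective sample size as one common, illustrative diagnostic. The data are made up.

```python
from typing import List, Tuple


def ips_weights(units: List[Tuple[int, float]]) -> List[float]:
    """units: (treated flag 0/1, propensity score e).
    Returns 1/e for treated units and 1/(1-e) for control units."""
    return [1.0 / e if treated else 1.0 / (1.0 - e) for treated, e in units]


def effective_sample_size(weights: List[float]) -> float:
    """Kish's approximation: (sum of weights)^2 / (sum of squared weights)."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


good_overlap = [(1, 0.5), (0, 0.5), (1, 0.5), (0, 0.5)]
# One treated patient who was very unlikely to be treated (e = 0.01).
poor_overlap = [(1, 0.5), (0, 0.5), (1, 0.01), (0, 0.5)]

print(effective_sample_size(ips_weights(good_overlap)))  # 4.0
print(effective_sample_size(ips_weights(poor_overlap)))  # close to 1
```

A single patient with a propensity score near zero receives a weight of about 100 and dominates the weighted cohort, so the estimate effectively rests on one observation.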

For a given comparison of interest, it could be the case that any of the methods provides more reliable evidence than the others, or perhaps all methods provide unreliable evidence. There are many reasons why the methods could fail to provide reliable evidence, and these reasons may vary from comparison to comparison (as explained before). Through TrialProbe we probe operating characteristics of methods in aggregate over many possible comparisons. At the same time, we also encourage researchers to delve in more depth at specific comparisons to identify failure modes of non-experimental strategies.

As an example, the effect in the third row is so strong that all three non-experimental methods declare the effect as significant and determine a concordant direction. On the other hand, we do not see good concordance or recovery for the Nicotine vs Bupropion examples (rows one, two, and six), with the covariate-adjusted methods returning three statistically insignificant results and the unadjusted method returning one statistically significant concordant result, one statistically significant discordant result, and one statistically insignificant result. This illustrates some of the tradeoffs when adjusting for confounders, in that adjusted methods have a decreased Type 1 error rate but also an increased Type 2 error rate. A likely explanation for the poor performance with nicotine in particular is that nicotine usage is frequently not recorded well in claims data. In this case the potential mismatch between trial results and non-experimental results may be due more to the data source than to the adjustment strategies. This example thus illustrates how TrialProbe can help identify failure modes of non-experimental studies.

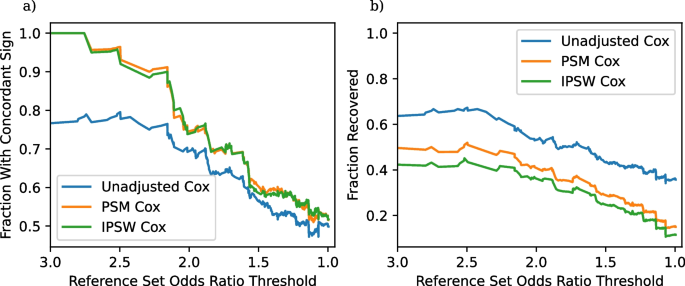

Fig. 2. (a) Fraction of significant results with concordant sign as a function of the odds ratio threshold in ( 6 ). (b) Fraction of recovered entries as a function of the odds ratio threshold.

We continue with a more holistic picture of the comparison of the three non-experimental strategies (instead of looking at results for individual comparisons) and proceed as suggested in Section “Evaluation: concordant sign rate”. One important aspect of our results is that many of the non-experimental effect estimates are not statistically significant, and thus not evaluated by our pipeline. The fraction of non-significant results is reported in Table 3 . The high frequency of non-significant results, even with the use of a large observational dataset, probably reflects the fact that many of these adverse events are rare, especially given the underreporting common in claims data. We compute the fraction of significant results that have concordant signs and the fraction of reference set entries correctly recovered by each method for each subset \(S_t\) of TrialProbe that only contains effects with a denoised odds ratio greater than t . Figure 2 provides the performance of each of our three methods on these two metrics. It is reassuring that for the relatively strong effects, all methods perform better than a “coin-flip” based guess of directionality. On the other hand, also as anticipated, the methods that adjust for confounders have better concordance compared to Unadjusted Cox, and the concordant sign rate is \(\ge 80\%\) for comparisons with strong evidence in ClinicalTrials.gov, say, with (denoised) odds ratio \(\ge 2\) .

We make the following remarks: As the x -axis varies in the plots, we are scanning over more or less stringent choices of “reference sets”. However, in the spirit of probing methods in an exploratory way, we do not need to make a choice of a specific reference set / cutoff on the x -axis. We also note that as the denoised odds ratio threshold approaches 1 (that is, a log-odds ratio of zero), the “reference set” \(\mathcal {S}_t\) becomes increasingly uninformative, and so we would anticipate that any method would have \(\text {CSR} \approx 0.5\) .

Comparison to prior work

In order to better understand how TrialProbe compares to prior work, we perform three other non-experimental method evaluation strategies. First, we perform a direct concordance and recovery rate evaluation using the positive controls (that are presumed to have an effect) from the OMOP and EU-ADR reference sets. We also create an ablated form of TrialProbe that does not use the empirical Bayesian effect estimation and odds ratio magnitude filtering, and instead only identifies significant effects using an exact Fisher test with a 0.05 p -value threshold. Table 4 contains the results of this comparison.
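For readers unfamiliar with the test used in the ablation, the following Python sketch computes a two-sided Fisher exact p-value for a 2×2 table of event counts. The table layout (rows as drug arms, columns as patients with and without the adverse event), the function name, and the counts are hypothetical; the two-sided rule used here sums the probabilities of all tables that are no more likely than the observed one, which is one common convention and not necessarily the exact variant used by the authors.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Unnormalised hypergeometric weight of every table with the same margins.
    weight = {k: comb(col1, k) * comb(n - col1, row1 - k)
              for k in range(lo, hi + 1)}
    observed = weight[a]
    # Sum the weights of tables at most as likely as the observed table.
    extreme = sum(w for w in weight.values() if w <= observed)
    return extreme / comb(n, row1)


# Drug A: 8 patients with the event, 2 without; drug B: 1 with, 5 without.
p = fisher_exact_two_sided(8, 2, 1, 5)
print(round(p, 4), p <= 0.05)  # 0.035 True
```

Under the ablation, such an entry would enter the reference set on significance alone, regardless of how large the denoised effect is.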

We find that all three of these sets (OMOP, EU-ADR, and the corresponding TrialProbe subset that only required Fisher statistical significance) were difficult to reproduce, with many non-concordant signs and lost effects. The low concordance and recovery of the Fisher exact test based TrialProbe subset in particular indicates the importance of our empirical Bayesian estimation and effect size filtering.

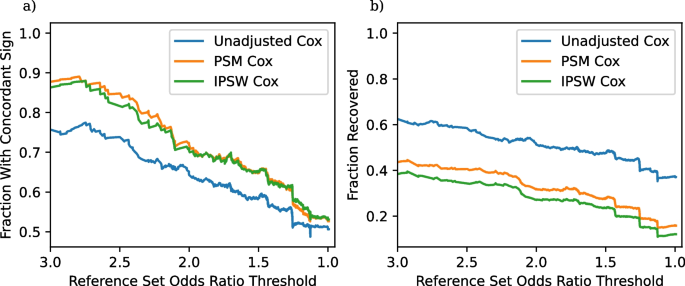

Importance of clinical trial filtering

One of the key decisions for constructing TrialProbe is which clinical trials to include for analysis. Our analysis uses an assignment and blinding filter, requiring all candidate clinical trials to use randomized assignment and participant blinding. This filter excludes 6,855 of the 19,822 candidate effects that we could have otherwise studied. In order to understand the effect of this filter, and whether it is worth the lost entries, we perform an ablation experiment where we rerun our analysis without this filter. The resulting concordance and recovery plots are in Fig. 3 .

Fig. 3. Concordance and recovery rates for an ablated form of TrialProbe that does not use clinical trial quality filters. (a) Fraction of significant results with concordant sign as a function of the odds ratio threshold in ( 6 ). (b) Fraction of recovered entries as a function of the odds ratio threshold.

The concordance rate and recovery rate without the clinical trial quality filter are distinctly lower, especially at larger odds ratio thresholds. This probably reflects how low-quality clinical trials are less likely to be reproducible due to the inherent increased error rate caused by a lack of participant blinding and incomplete randomization.

In this work, we use clinical trial records from ClinicalTrials.gov to build a source of ground truth to probe the performance of non-experimental study methods. We show how such a dataset can be constructed in a systematic, statistically sound manner that also allows us to filter by the estimated strength of the effects. We also demonstrate the value of our approach by quantifying the performance of three commonly used non-experimental study methods.

Our approach has three advantages. First, it characterizes the performance of methods on real observational data. Second, our approach provides high quality ground truth based on clinical trials that have varying effect sizes, allowing a read out of the performance of a method for a given effect size (Fig. 2 ). Prior reference sets rely on ground truth sources that might be less reliable or have weaker relationships. Finally, our approach scales better than prior work, because we can create thousands of “known relationships” from published trial reports. This is a significant advantage compared to prior approaches that rely on evaluating methods using patient-level randomized trial datasets that can be difficult to acquire [ 42 ].

The empirical Bayes estimation and odds ratio magnitude subsetting in particular seems to be a key component of how TrialProbe can achieve relatively high measured concordance between the clinical trials and non-experimental methods. As shown in our results section, a TrialProbe subset that only relies on statistical significance achieves very low concordance. Likewise, the OMOP and EU-ADR reference sets (which indirectly rely only on statistical significance through FDA reports) also report similarly poor performance. We believe the most likely hypothesis for explaining this is that there is likely to be significant type 1 error due to the implicit vast multiple hypothesis testing problem when searching for a small number of significant adverse event effects in a sea of thousands of reported minor effects. Empirical Bayes automatically adjusts for this multiple hypothesis testing issue by learning a prior that incorporates the knowledge that most adverse event effects are null (Fig. 1 ), and can thus more effectively discard these invalid effects.

However, our approach has several limitations. The primary limitation of our approach is that we rely on an assumption that the average treatment effect seen in the clinical trials generalizes to the observational data. One way this could be violated is if there is a significant mismatch in the patient population and there is a heterogeneous treatment effect. In that case, it is possible to see different effect directions in the observational data than in the randomized trial even if the non-experimental methods are functioning correctly [ 43 , 44 ]. Another probable mismatch between the observational data and the clinical trials is that there is frequent underreporting of outcomes in our observational datasets because they rely on billing records for adverse events. This is especially the case for non-serious outcomes such as nausea or rashes. Such underreporting would cause the estimated rate of adverse events to be lower in our observational data than in clinical trials. A third potential cause is that the clinical trial might not provide a correct effect estimate due to poor internal clinical trial quality (such as improper blinding, poor randomization, and publication bias). For all of these potential causes of different effect estimates, our primary mitigation strategy is to focus on the effect directions of hazard ratios. The benefit of effect directions is that they intrinsically require greater error to change, especially when the effect magnitude is large. Hazard ratios additionally make the analysis more resilient to changes in the base rate of the event, whether due to population differences or outcome reporting changes. One piece of evidence that this mitigation strategy is somewhat successful is that we observe much greater concordance between non-experimental methods and clinical trials than what could be achieved by random chance. However, we do expect this mitigation strategy to be imperfect, and differences in the underlying effects should cause us to underestimate the performance of non-experimental methods.

Our work also has several secondary limitations. First, our approach is only able to evaluate methods for detecting average treatment effects because our ground truth is in the form of average treatment effects. We are simply unable to evaluate how effectively methods can detect heterogeneous treatment effects. Second, our evaluation strategy simultaneously probes both the statistical method and the observational healthcare data resource used, in that we would only expect high concordance when both are of high quality. This is frequently a disadvantage, in that it can be hard to understand the particular cause of poor concordance. However, in some circumstances, this can be an advantage: TrialProbe can help identify potential issues associated with the observational dataset itself (e.g., the underreporting of side effects such as nausea). TrialProbe could also be used to probe and contrast different observational datasets, e.g., one could seek to contrast one statistical method applied to a cohort extracted from Optum’s de-identified Clinformatics Data Mart Database compared to the same statistical method applied to a cohort extracted from an alternative observational data resource. Third, our reference set is a biased sample of true drug effects due to selection bias, caused by a combination of publication bias (in the form of trials not reporting results to clinicaltrials.gov) and our requirement for drug prescriptions in our observational data. In particular, it is probably the case that studies that result in significant quantities of adverse events are halted and those drugs are then infrequently (or not at all) used in clinical practice, resulting in our work underestimating the “true” adverse event rates of various drugs. This would in turn mean that the empirical Bayes based subsets that try to identify effects of a particular strength will incorrectly contain stronger effects than expected. However, this should not affect our estimated concordance between non-experimental methods and clinical trials within a particular subset, as we only compare effect directions and not effect magnitudes. Finally, one other disadvantage of our current approach is that the same prior is learned for all log-odds ratios; this presupposes that the effects we consider are relevant to each other. This may not necessarily be the case; for example, chemotherapy drugs will typically have much stronger side effects than other drugs. Not accounting for these differences might cause us to underestimate the effect sizes for high risk drugs like chemotherapy drugs and overestimate the effect sizes for less risky medications. A refinement of the approach would be to stratify effects into groups [ 45 ] and learn a separate prior for each group, or to apply methods for empirical Bayes estimation in the presence of covariate information [ 46 ].

We propose an approach for evaluating non-experimental methods using clinical trial derived reference sets, and evaluate three commonly used non-experimental study methods in terms of their ability to identify the known relationships in a commonly used claims dataset. We find that adjustment significantly improves the ability to correctly recover known relationships, with propensity score matching performing particularly well for detecting large effects.

We make TrialProbe , i.e., the reference set as well as the procedure to create it, freely available at https://github.com/som-shahlab/TrialProbe . TrialProbe is useful to method developers for benchmarking the performance of observational study methods, as well as to practitioners interested in knowing the expected performance of a specific method on the dataset available to them.

Availability of data and materials

Our code is available at https://github.com/som-shahlab/TrialProbe . The source clinical trial records can be found at clinicaltrials.gov. The data we used in our case study, Optum’s Clinformatics Data Mart Database, is not publicly available as it is a commercially licensed product. In order to get access to Optum’s Clinformatics Data Mart Database, it is generally necessary to reach out to Optum directly to obtain both a license and the data itself. Contact information and other details about how to get access can be found on the product sheet [ 39 ]. Optum is the primary long term repository for their datasets and we are not allowed to maintain archive copies past our contract dates.

1. Such comparisons make sense when there is imperfect compliance to treatment and one is not interested in intention-to-treat effects.

2. Computed with a pseudocount adjustment to deal with zero cell counts, that is, \(\textrm{exp}(\widehat{\omega }^{\text {sample}}_i)= \left( {(X_{A,i}+0.5)/(Y_{A,i}+1)}\right) \big /\left( {(X_{B,i}+0.5)/(Y_{B,i}+1)}\right) .\)

3. In other words, the investigator does not adjust for any possible confounders.
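The pseudocount adjustment described in the notes above can be evaluated directly. The Python sketch below implements the formula as written, with `x_a`, `y_a`, `x_b`, `y_b` standing for the counts \(X_{A,i}\), \(Y_{A,i}\), \(X_{B,i}\), \(Y_{B,i}\) as defined earlier in the article; the function name and the example counts are hypothetical.

```python
def sample_odds_ratio(x_a: int, y_a: int, x_b: int, y_b: int) -> float:
    """Pseudocount-adjusted sample odds ratio:
    ((x_a + 0.5) / (y_a + 1)) / ((x_b + 0.5) / (y_b + 1))."""
    return ((x_a + 0.5) / (y_a + 1)) / ((x_b + 0.5) / (y_b + 1))


# A zero cell no longer produces an odds ratio of 0 or a division by zero.
print(sample_odds_ratio(0, 99, 4, 99))  # roughly 0.111
print(sample_odds_ratio(3, 9, 0, 9))    # roughly 7
```

Without the adjustment, the first example would give an odds ratio of exactly zero and the second would divide by zero, so neither could be placed on the log-odds scale.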

Grootendorst DC, Jager KJ, Zoccali C, Dekker FW. Observational studies are complementary to randomized controlled trials. Nephron Clin Pract. 2010;114(3):173–7.

Gershon AS, Lindenauer PK, Wilson KC, Rose L, Walkey AJ, Sadatsafavi M, et al. Informing Healthcare Decisions with Observational Research Assessing Causal Effect. An Official American Thoracic Society Research Statement. Am J Respir Crit Care Med. 2021;203(1):14–23.

Berger ML, Sox H, Willke RJ, Brixner DL, Eichler HG, Goettsch W, et al. Good practices for real-world data studies of treatment and/or comparative effectiveness: Recommendations from the joint ISPOR-ISPE Special Task Force on real-world evidence in health care decision making. Pharmacoepidemiol Drug Saf. 2017;26(9):1033–9.

Darst JR, Newburger JW, Resch S, Rathod RH, Lock JE. Deciding without data. Congenit Heart Dis. 2010;5(4):339–42.

Hampson G, Towse A, Dreitlein WB, Henshall C, Pearson SD. Real-world evidence for coverage decisions: opportunities and challenges. J Comp Eff Res. 2018;7(12):1133–43.

Klonoff DC. The Expanding Role of Real-World Evidence Trials in Health Care Decision Making. J Diabetes Sci Technol. 2020;14(1):174–9.

Hernán MA, Robins JM. Using Big Data to Emulate a Target Trial When a Randomized Trial Is Not Available. Am J Epidemiol. 2016;183(8):758–64.

Schuler A, Jung K, Tibshirani R, Hastie T, Shah N. Synth-validation: Selecting the best causal inference method for a given dataset. arXiv preprint arXiv:1711.00083 . 2017.

Dorie V, Hill J, Shalit U, Scott M, Cervone D. Automated versus do-it-yourself methods for causal inference: Lessons learned from a data analysis competition. arXiv:1707.02641 . 2017.

Dorie V, Hill J, Shalit U, Scott M, Cervone D. Automated versus do-it-yourself methods for causal inference: Lessons learned from a data analysis competition. Stat Sci. 2019;34(1):43–68.

Athey S, Imbens GW, Metzger J, Munro E. Using wasserstein generative adversarial networks for the design of monte carlo simulations. J Econom. 2021:105076. https://doi.org/10.1016/j.jeconom.2020.09.013 .

Schuemie MJ, Cepeda MS, Suchard MA, Yang J, Tian Y, Schuler A, et al. How confident are we about observational findings in health care: a benchmark study. Harvard Data Science Review. 2020;2(1). https://doi.org/10.1162/99608f92.147cc28e .

Wang SV, Sreedhara SK, Schneeweiss S, Franklin JM, Gagne JJ, Huybrechts KF, et al. Reproducibility of real-world evidence studies using clinical practice data to inform regulatory and coverage decisions. Nat Commun. 2022;13(1). https://doi.org/10.1038/s41467-022-32310-3 .

Gordon BR, Zettelmeyer F, Bhargava N, Chapsky D. A comparison of approaches to advertising measurement: Evidence from big field experiments at Facebook. Mark Sci. 2019;38(2):193–225.

Gordon BR, Moakler R, Zettelmeyer F. Close enough? a large-scale exploration of non-experimental approaches to advertising measurement. arXiv:2201.07055 . 2022.

LaLonde RJ. Evaluating the econometric evaluations of training programs with experimental data. Am Econ Rev. 1986;76(4):604–20. http://www.jstor.org/stable/1806062 . Accessed 5 Sept 2023.

Ioannidis JP, Haidich AB, Pappa M, Pantazis N, Kokori SI, Tektonidou MG, et al. Comparison of evidence of treatment effects in randomized and nonrandomized studies. JAMA. 2001;286(7):821–30.

Dahabreh IJ, Kent DM. Can the learning health care system be educated with observational data? JAMA. 2014;312(2):129–30.

Schuemie MJ, Gini R, Coloma PM, Straatman H, Herings RMC, Pedersen L, et al. Replication of the OMOP experiment in Europe: evaluating methods for risk identification in electronic health record databases. Drug Saf. 2013;36(Suppl 1):159–69.

Ryan PB, Schuemie MJ, Welebob E, Duke J, Valentine S, Hartzema AG. Defining a reference set to support methodological research in drug safety. Drug Saf. 2013;36(Suppl 1):33–47.

Wang SV, Schneeweiss S, Initiative RD. Emulation of Randomized Clinical Trials With Nonrandomized Database Analyses: Results of 32 Clinical Trials. JAMA. 2023;329(16):1376–85. https://doi.org/10.1001/jama.2023.4221 .

Thompson D. Replication of Randomized, Controlled Trials Using Real-World Data: What Could Go Wrong? Value Health. 2021;24(1):112–5.

Camerer CF, Dreber A, Holzmeister F, Ho TH, Huber J, Johannesson M, et al. Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nat Hum Behav. 2018;2(9):637–44.

Mooij JM, Peters J, Janzing D, Zscheischler J, Schölkopf B. Distinguishing cause from effect using observational data: methods and benchmarks. J Mach Learn Res. 2016;17(1):1103–204.

DeVito NJ, Bacon S, Goldacre B. Compliance with legal requirement to report clinical trial results on ClinicalTrials.gov: a cohort study. Lancet. 2020;395(10221):361–9.

Robbins H. An Empirical Bayes Approach to Statistics. In: Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics. Berkeley: The Regents of the University of California; 1956. p. 157–163.

Efron B, Morris C. Data Analysis Using Stein’s Estimator and Its Generalizations. J Am Stat Assoc. 1975;70(350):311–9.

Efron B. Bayes, oracle Bayes and empirical Bayes. Statist Sci. 2019;34(2):177–201. https://doi.org/10.1214/18-STS674 .

Gu J, Koenker R. Invidious comparisons: Ranking and selection as compound decisions. Econometrica (forthcoming). 2022.

Van Houwelingen HC, Zwinderman KH, Stijnen T. A bivariate approach to meta-analysis. Stat Med. 1993;12(24):2273–84.

Efron B. Empirical Bayes methods for combining likelihoods. J Am Stat Assoc. 1996;91(434):538–50.

Sidik K, Jonkman JN. Estimation using non-central hypergeometric distributions in combining 2 \(\times\) 2 tables. J Stat Plan Infer. 2008;138(12):3993–4005.

Stijnen T, Hamza TH, Özdemir P. Random effects meta-analysis of event outcome in the framework of the generalized linear mixed model with applications in sparse data. Stat Med. 2010;29(29):3046–67.

Kiefer J, Wolfowitz J. Consistency of the maximum likelihood estimator in the presence of infinitely many incidental parameters. Ann Math Statist. 1956;27(4):887–906. https://doi.org/10.1214/aoms/1177728066 .

Aitkin M, Longford N. Statistical modelling issues in school effectiveness studies. J R Stat Soc Ser A Gen. 1986;149(1):1–26.

Stephens M. False discovery rates: a new deal. Biostatistics. 2017;18(2):275–94.

Ignatiadis N, Wager S. Confidence Intervals for Nonparametric Empirical Bayes Analysis. J Am Stat Assoc. 2022;117(539):1149–66.

Gelman A, Tuerlinckx F. Type S error rates for classical and Bayesian single and multiple comparison procedures. Comput Stat. 2000;15(3):373–90.

Optum. Optum’s de-identified Clinformatics Data Mart Database. 2017. https://www.optum.com/content/dam/optum/resources/productSheets/Clinformatics_for_Data_Mart.pdf . Accessed 5 Sept 2023.

Steinberg E, Jung K, Fries JA, Corbin CK, Pfohl SR, Shah NH. Language models are an effective representation learning technique for electronic health record data. J Biomed Inform. 2021;113:103637.

Austin PC, Small DS. The use of bootstrapping when using propensity-score matching without replacement: a simulation study. Stat Med. 2014;33(24):4306–19.

Powers S, Qian J, Jung K, Schuler A, Shah NH, Hastie T, et al. Some methods for heterogeneous treatment effect estimation in high dimensions. Stat Med. 2018;37(11):1767–87.

Rogers JR, Hripcsak G, Cheung YK, Weng C. Clinical comparison between trial participants and potentially eligible patients using electronic health record data: a generalizability assessment method. J Biomed Inform. 2021;119:103822.

Dahabreh IJ, Robins JM, Hernán MA. Benchmarking Observational Methods by Comparing Randomized Trials and Their Emulations. Epidemiology. 2020;31(5):614–9.

Efron B, Morris C. Combining Possibly Related Estimation Problems. J R Stat Soc Ser B Methodol. 1973;35(3):379–402.

Ignatiadis N, Wager S. Covariate-powered empirical Bayes estimation. Adv Neural Inf Process Syst. 2019;32.

Acknowledgements

We would like to thank Agata Foryciarz, Stephen R. Pfohl, and Jason A. Fries for providing useful comments on the paper. We would also like to thank the anonymous reviewers who have contributed feedback that has helped us improve this work.

This work was funded under NLM R01-LM011369-05.

Author information

Authors and affiliations.

Center for Biomedical Informatics Research, Stanford University, Stanford, US

Ethan Steinberg, Yizhe Xu & Nigam Shah

Department of Statistics, University of Chicago, Chicago, US

Nikolaos Ignatiadis

Google Research, Google, Cambridge, US

Steve Yadlowsky

Contributions

Ethan Steinberg: Conceptualization, Methodology, Software, Writing—original draft. Nikolaos Ignatiadis: Methodology, Software, Writing. Steve Yadlowsky: Methodology, Software, Writing. Yizhe Xu: Software, Writing. Nigam H. Shah: Writing—review & editing, Supervision, Funding acquisition.

Corresponding author

Correspondence to Ethan Steinberg .

Ethics declarations

Ethics approval and consent to participate.

Optum’s Clinformatics Data Mart Database is a de-identified dataset [ 39 ] per HIPAA (Health Insurance Portability and Accountability Act) standards so neither IRB approval nor patient consent is required. As such, we can confirm that all experiments were performed in accordance with relevant guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Steinberg, E., Ignatiadis, N., Yadlowsky, S. et al. Using public clinical trial reports to probe non-experimental causal inference methods. BMC Med Res Methodol 23, 204 (2023). https://doi.org/10.1186/s12874-023-02025-0

Received: 27 October 2022

Accepted: 24 August 2023

Published: 09 September 2023

DOI: https://doi.org/10.1186/s12874-023-02025-0

- Causal inference

- Meta-analysis

- Clinical trials

- Method evaluation

BMC Medical Research Methodology

ISSN: 1471-2288

Chapter 7: Nonexperimental Research

Overview of Nonexperimental Research

Learning Objectives

- Define nonexperimental research, distinguish it clearly from experimental research, and give several examples.

- Explain when a researcher might choose to conduct nonexperimental research as opposed to experimental research.

What Is Nonexperimental Research?

Nonexperimental research is research that lacks the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both.

In a sense, it is unfair to define this large and diverse set of approaches collectively by what they are not. But doing so reflects the fact that most researchers in psychology consider the distinction between experimental and nonexperimental research to be an extremely important one. The distinction matters because, although experimental research can provide strong evidence that changes in an independent variable cause differences in a dependent variable, nonexperimental research generally cannot. As we will see, however, this inability does not mean that nonexperimental research is less important than experimental research or inferior to it in any general sense.

When to Use Nonexperimental Research

As we saw in Chapter 6, experimental research is appropriate when the researcher has a specific research question or hypothesis about a causal relationship between two variables and it is possible, feasible, and ethical to manipulate the independent variable and randomly assign participants to conditions or to orders of conditions. It stands to reason, therefore, that nonexperimental research is appropriate, even necessary, when these conditions are not met. There are many situations in which nonexperimental research is the better choice.

- The research question or hypothesis can be about a single variable rather than a statistical relationship between two variables (e.g., How accurate are people’s first impressions?).

- The research question can be about a noncausal statistical relationship between variables (e.g., Is there a correlation between verbal intelligence and mathematical intelligence?).

- The research question can be about a causal relationship, but the independent variable cannot be manipulated or participants cannot be randomly assigned to conditions or orders of conditions (e.g., Does damage to a person’s hippocampus impair the formation of long-term memory traces?).

- The research question can be broad and exploratory, or it can be about what it is like to have a particular experience (e.g., What is it like to be a working mother diagnosed with depression?).

Again, the choice between the experimental and nonexperimental approaches is generally dictated by the nature of the research question. If it is about a causal relationship and involves an independent variable that can be manipulated, the experimental approach is typically preferred. Otherwise, the nonexperimental approach is preferred. But the two approaches can also be used to address the same research question in complementary ways. For example, nonexperimental studies establishing that there is a relationship between watching violent television and aggressive behaviour have been complemented by experimental studies confirming that the relationship is a causal one (Bushman & Huesmann, 2001) [1]. Similarly, after his original study, Milgram conducted experiments to explore the factors that affect obedience. He manipulated several independent variables, such as the distance between the experimenter and the participant, the distance between the participant and the confederate, and the location of the study (Milgram, 1974) [2].

Types of Nonexperimental Research

Nonexperimental research falls into three broad categories: single-variable research, correlational and quasi-experimental research, and qualitative research. First, research can be nonexperimental because it focuses on a single variable rather than a statistical relationship between two variables. Although there is no widely shared term for this kind of research, we will call it single-variable research. Milgram’s original obedience study was nonexperimental in this way. He was primarily interested in one variable, the extent to which participants obeyed the researcher when he told them to shock the confederate, and he observed all participants performing the same task under the same conditions. The study by Loftus and Pickrell described at the beginning of this chapter is also a good example of single-variable research. The variable was whether participants “remembered” having experienced mildly traumatic childhood events (e.g., getting lost in a shopping mall) that they had not actually experienced but that the researchers asked them about repeatedly. In this particular study, nearly a third of the participants “remembered” at least one event. (As with Milgram’s original study, this study inspired several later experiments on the factors that affect false memories.)

As these examples make clear, single-variable research can answer interesting and important questions. What it cannot do, however, is answer questions about statistical relationships between variables. This is a point that beginning researchers sometimes miss. Imagine, for example, a group of research methods students interested in the relationship between children’s experience of being bullied and their self-esteem. The first thing that is likely to occur to these researchers is to obtain a sample of middle-school students who have been bullied and then to measure their self-esteem. But this design would be a single-variable study with self-esteem as the only variable. Although it would tell the researchers something about the self-esteem of children who have been bullied, it would not tell them what they really want to know, which is how the self-esteem of children who have been bullied compares with the self-esteem of children who have not. Is it lower? Is it the same? Could it even be higher? To answer this question, their sample would also have to include middle-school students who have not been bullied, thereby introducing another variable.

Research can also be nonexperimental because it focuses on a statistical relationship between two variables but does not include the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both. This kind of research takes two basic forms: correlational research and quasi-experimental research. In correlational research, the researcher measures the two variables of interest with little or no attempt to control extraneous variables and then assesses the relationship between them. A research methods student who finds out whether each of several middle-school students has been bullied and then measures each student’s self-esteem is conducting correlational research. In quasi-experimental research, the researcher manipulates an independent variable but does not randomly assign participants to conditions or orders of conditions. For example, a researcher might start an antibullying program (a kind of treatment) at one school and compare the incidence of bullying at that school with the incidence at a similar school that has no antibullying program.
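As a rough illustration of the correlational design just described, the sketch below computes a Pearson correlation between bullying status and self-esteem. The data, the variable names, and the `pearson_r` helper are all hypothetical and invented purely for illustration; they do not come from any study.

```python
import math

def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products of deviations from the two means.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable does not vary")
    return cross / math.sqrt(ss_x * ss_y)

# Hypothetical data: one entry per student.
# bullied: 1 = has been bullied, 0 = has not (measured, not manipulated).
bullied = [1, 1, 1, 1, 0, 0, 0, 0]
# self_esteem: made-up scores on an arbitrary scale.
self_esteem = [18, 22, 20, 25, 27, 30, 24, 29]

r = pearson_r(bullied, self_esteem)
print(f"r = {r:.2f}")
```

A negative `r` in this made-up sample would indicate only that the bullied students tend to report lower self-esteem; it says nothing about which variable, if either, is the cause. Note also that a sample containing only bullied students would leave `bullied` with no variation, so the helper raises an error instead of returning a correlation, which mirrors the limitation of the single-variable design.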

The final way in which research can be nonexperimental is that it can be qualitative. The types of research we have discussed so far are all quantitative, referring to the fact that the data consist of numbers that are analyzed using statistical techniques. In qualitative research, the data are usually nonnumerical and therefore cannot be analyzed using statistical techniques. Rosenhan’s study of the experience of people in a psychiatric ward was primarily qualitative. The data were the notes taken by the “pseudopatients” (the people pretending to have heard voices) along with their hospital records. Rosenhan’s analysis consisted mainly of a written description of the experiences of the pseudopatients, supported by several concrete examples. To illustrate the hospital staff’s tendency to “depersonalize” their patients, he noted, “Upon being admitted, I and other pseudopatients took the initial physical examinations in a semipublic room, where staff members went about their own business as if we were not there” (Rosenhan, 1973, p. 256) [3]. Qualitative data have their own set of analysis tools, chosen according to the research question. For example, thematic analysis focuses on themes that emerge in the data, whereas conversation analysis focuses on the way the words were said in an interview or focus group.

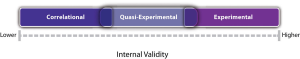

Internal Validity Revisited

Recall that internal validity is the extent to which the design of a study supports the conclusion that changes in the independent variable caused any observed differences in the dependent variable. Figure 7.1 shows how experimental, quasi-experimental, and correlational research vary in terms of internal validity. Experimental research tends to be highest because it addresses the directionality and third-variable problems through manipulation and the control of extraneous variables through random assignment. If the average score on the dependent variable in an experiment differs across conditions, it is quite likely that the independent variable is responsible for that difference. Correlational research is lowest because it fails to address either problem. If the average score on the dependent variable differs across levels of the independent variable, it could be that the independent variable is responsible, but there are other interpretations. In some situations, the direction of causality could be reversed. In others, there could be a third variable that is causing differences in both the independent and dependent variables. Quasi-experimental research is in the middle because the manipulation of the independent variable addresses some problems, but the lack of random assignment and experimental control fails to address others. Imagine, for example, that a researcher finds two similar schools, starts an antibullying program in one, and then finds fewer bullying incidents in that “treatment school” than in the “control school.” There is no directionality problem because clearly the number of bullying incidents did not determine which school got the program. However, the lack of random assignment of children to schools could still mean that students in the treatment school differed from students in the control school in some other way that could explain the difference in bullying.

Notice also in Figure 7.1 that there is some overlap in the internal validity of experiments, quasi-experiments, and correlational studies. For example, a poorly designed experiment that includes many confounding variables can be lower in internal validity than a well-designed quasi-experiment with no obvious confounding variables. Internal validity is also only one of several validities that one might consider, as noted in Chapter 5.
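The third-variable problem discussed in this section can be made concrete with a small simulation. The sketch below is only an illustration under stated assumptions: the "school climate" variable, the outcome score, and the `simulate` function are hypothetical, and the program is deliberately given no true effect so that any group difference must come from the study design.

```python
import random

def mean(values):
    return sum(values) / len(values)

def simulate(n, randomize, seed=0):
    """Simulate n students and return the difference in mean outcome
    (program group minus no-program group).

    The program has NO true effect on the outcome in this simulation.
    """
    rng = random.Random(seed)
    program_scores, no_program_scores = [], []
    for _ in range(n):
        # Hypothetical third variable, e.g. how supportive the school climate is.
        climate = rng.gauss(0, 1)
        if randomize:
            # Experiment: a coin flip decides who gets the program.
            in_program = rng.random() < 0.5
        else:
            # No random assignment: students in better climates are more
            # likely to end up in the program.
            in_program = climate + rng.gauss(0, 1) > 0
        # The outcome depends on climate and noise only, not on the program.
        outcome = climate + rng.gauss(0, 1)
        (program_scores if in_program else no_program_scores).append(outcome)
    return mean(program_scores) - mean(no_program_scores)

observed_diff = simulate(4000, randomize=False)
randomized_diff = simulate(4000, randomize=True)
print(f"no random assignment: difference = {observed_diff:.2f}")
print(f"random assignment:    difference = {randomized_diff:.2f}")
```

Under these assumptions, the design without random assignment should show a clearly positive difference even though the program does nothing, because climate drives both program membership and the outcome. With random assignment the two groups have similar climates on average, so the difference should be close to zero.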

Key Takeaways

- Nonexperimental research is research that lacks the manipulation of an independent variable, control of extraneous variables through random assignment, or both.

- There are three broad types of nonexperimental research. Single-variable research focuses on a single variable rather than a relationship between variables. Correlational and quasi-experimental research focus on a statistical relationship but lack manipulation or random assignment. Qualitative research focuses on broader research questions, typically involves collecting large amounts of data from a small number of participants, and analyses the data nonstatistically.

- In general, experimental research is high in internal validity, correlational research is low in internal validity, and quasi-experimental research is in between.

Discussion: For each of the following studies, decide which type of research design it is and explain why.

- A researcher conducts detailed interviews with unmarried teenage fathers to learn about how they feel and what they think about their role as fathers and summarizes their feelings in a written narrative.

- A researcher measures the impulsivity of a large sample of drivers and looks at the statistical relationship between this variable and the number of traffic tickets the drivers have received.

- A researcher randomly assigns patients with low back pain either to a treatment involving hypnosis or to a treatment involving exercise. She then measures their level of low back pain after 3 months.

- A college instructor gives weekly quizzes to students in one section of his course but no weekly quizzes to students in another section to see whether this has an effect on their test performance.

1. Bushman, B. J., & Huesmann, L. R. (2001). Effects of televised violence on aggression. In D. Singer & J. Singer (Eds.), Handbook of children and the media (pp. 223–254). Thousand Oaks, CA: Sage.

2. Milgram, S. (1974). Obedience to authority: An experimental view. New York, NY: Harper & Row.

3. Rosenhan, D. L. (1973). On being sane in insane places. Science, 179, 250–258.