2024 Guide: 23 Data Science Case Study Interview Questions (with Solutions)

Case studies are often the most challenging aspect of data science interview processes. They are crafted to resemble a company’s existing or previous projects, assessing a candidate’s ability to tackle prompts, convey their insights, and navigate obstacles.

To excel in data science case study interviews, practice is crucial. It will enable you to develop strategies for approaching case studies, asking the right questions to your interviewer, and providing responses that showcase your skills while adhering to time constraints.

The best way of doing this is by using a framework for answering case studies. For example, you could use the product metrics framework and the A/B testing framework to answer most case studies that come up in data science interviews.

There are four main types of data science case studies:

- Product Case Studies - This type of case study tackles a specific product or feature offering, often tied to the interviewing company. Interviewers are generally looking for business sense geared toward product metrics.

- Data Analytics Case Study Questions - Data analytics case studies ask you to propose possible metrics in order to investigate an analytics problem. Additionally, you must write a SQL query to pull your proposed metrics, and then perform analysis using the data you queried, just as you would do in the role.

- Modeling and Machine Learning Case Studies - Modeling case studies are more varied and focus on assessing your intuition for building models around business problems.

- Business Case Questions - Similar to product questions, business cases tackle issues or opportunities specific to the organization that is interviewing you. Often, candidates must assess the best option for a certain business plan being proposed, and formulate a process for solving the specific problem.

How Case Study Interviews Are Conducted

Oftentimes as an interviewee, you want to know the setting and format in which to expect the above questions to be asked. Unfortunately, this is company-specific: Some prefer real-time settings, where candidates actively work through a prompt after receiving it, while others offer some period of days (say, a week) before settling in for a presentation of your findings.

It is therefore important to have a system for answering these questions that will accommodate all possible formats, such that you are prepared for any set of circumstances (we provide such a framework below).

Why Are Case Study Questions Asked?

Case studies assess your thought process in answering data science questions. Specifically, interviewers want to see that you have the ability to think on your feet, and to work through real-world problems that likely do not have a right or wrong answer. Real-world case studies that are affecting businesses are not binary; there is no black-and-white, yes-or-no answer. This is why it is important that you can demonstrate decisiveness in your investigations, as well as show your capacity to consider impacts and topics from a variety of angles. Once you are in the role, you will be dealing directly with the ambiguity at the heart of decision-making.

Perhaps most importantly, case interviews assess your ability to effectively communicate your conclusions. On the job, data scientists exchange information across teams and divisions, so a significant part of the interviewer’s focus will be on how you process and explain your answer.

Quick tip: Because case questions in data science interviews tend to be product- and company-focused, it is extremely beneficial to research current projects and developments across different divisions, as these initiatives might end up as the case study topic.

How to Answer Data Science Case Study Questions (The Framework)

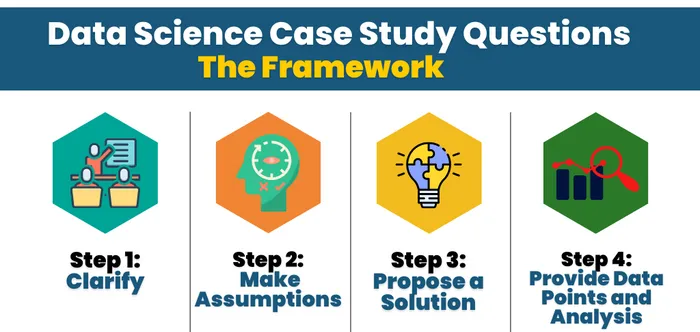

There are four main steps to tackling case questions in data science interviews, regardless of the type: clarify, make assumptions, propose a solution, and provide data points and analysis.

Step 1: Clarify

Clarifying is used to gather more information. More often than not, these case studies are designed to be confusing and vague. The data will be unorganized, intentionally padded with extraneous information or missing key details, so it is the candidate’s responsibility to dig deeper, filter out bad information, and fill gaps. Interviewers will be observing how an applicant asks questions and reaches their solution.

For example, with a product question, you might take into consideration:

- What is the product?

- How does the product work?

- How does the product align with the business itself?

Step 2: Make Assumptions

When you have made sure that you have evaluated and understand the dataset, start investigating and discarding possible hypotheses. Developing insights on the product at this stage complements your ability to glean information from the dataset, and the exploration of your ideas is paramount to forming a successful hypothesis. You should be communicating your hypotheses with the interviewer, such that they can provide clarifying remarks on how the business views the product, and to help you discard unworkable lines of inquiry. If we continue to think about a product question, some important questions to evaluate and draw conclusions from include:

- Who uses the product? Why?

- What are the goals of the product?

- How does the product interact with other services or goods the company offers?

The goal of this is to reduce the scope of the problem at hand, and ask the interviewer questions upfront that allow you to tackle the meat of the problem instead of focusing on less consequential edge cases.

Step 3: Propose a Solution

Now that you have formed a hypothesis that incorporates the dataset and an understanding of the business context, it is time to apply that knowledge in forming a solution. Remember, the hypothesis is simply a refined version of the problem that uses the data on hand as the basis for solving it. The solution you create can target this narrow problem, and you can be confident that it addresses the core of the case study question.

Keep in mind that there isn’t a single expected solution, and as such, there is a certain freedom here to determine the exact path for investigation.

Step 4: Provide Data Points and Analysis

Finally, providing data points and analysis in support of your solution involves choosing and prioritizing a main metric. As with all prior factors, this step must be tied back to the hypothesis and the main goal of the problem. From that foundation, it is important to trace through and analyze different examples, starting from the main metric, in order to validate the hypothesis.

Quick tip: Every case question tends to have multiple solutions. Therefore, you should absolutely consider and communicate any potential trade-offs of your chosen method. Be sure you are communicating the pros and cons of your approach.

Note: In some special cases, solutions will also be assessed on the ability to convey information in layman’s terms. Regardless of the structure, applicants should always be prepared to solve through the framework outlined above in order to answer the prompt.

The Role of Effective Communication

Interviewers have written and spoken extensively about the data science case study portion, and they consistently boil success down to one main factor: effective communication.

All the analysis in the world will not help if interviewees cannot verbally work through and highlight their thought process within the case study. Again, at this stage of the hiring process interviewers are looking for well-developed soft skills and problem-solving capabilities. Demonstrating those traits is key to succeeding in this round.

To this end, the best advice possible would be to practice actively going through example case studies, such as those available in the Interview Query questions bank. Exploring different topics with a friend in an interview-like setting with cold recall (no Googling in between!) will be uncomfortable and awkward, but it will also help reveal weaknesses in fleshing out the investigation.

Don’t worry if the first few times are terrible! Developing a rhythm will help with gaining self-confidence as you become better at assessing and learning through these sessions.

Product Case Study Questions

With product data science case questions, the interviewer wants to get an idea of your product sense. Specifically, these questions assess your ability to identify which metrics should be proposed in order to understand a product.

1. How would you measure the success of private stories on Instagram, where only certain close friends can see the story?

Start by answering: What is the goal of the private story feature on Instagram? You can’t evaluate “success” without knowing the product’s initial objective.

One specific goal of this feature would be to drive engagement. A private story could potentially increase interactions between users, and grow awareness of the feature.

Now, what types of metrics might you propose to assess user engagement? For a high-level overview, we could look at:

- Average stories per user per day

- Average Close Friends stories per user per day

However, we would also want to further bucket our users to see the effect that Close Friends stories have on user engagement. By bucketing users by age, date joined, or another metric, we could see how engagement is affected within certain populations, giving us insight on success that could be lost if looking at the overall population.
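The bucketing idea can be sketched in a few lines of Python. This is only an illustration: the record layout, the age buckets, and the numbers are all made up for the example and do not come from any real Instagram dataset.

```python
from collections import defaultdict

# Hypothetical user-day records (all values invented for illustration):
# (user_id, age_bucket, stories_posted, close_friends_stories_posted)
records = [
    ("u1", "18-24", 4, 2),
    ("u2", "18-24", 2, 1),
    ("u3", "25-34", 1, 0),
    ("u4", "25-34", 3, 0),
]

def avg_by_bucket(rows):
    """Return {bucket: (avg stories, avg Close Friends stories)} per user-day."""
    totals = defaultdict(lambda: [0, 0, 0])  # stories, CF stories, user-days
    for _, bucket, stories, cf_stories in rows:
        t = totals[bucket]
        t[0] += stories
        t[1] += cf_stories
        t[2] += 1
    return {b: (s / n, cf / n) for b, (s, cf, n) in totals.items()}

metrics = avg_by_bucket(records)
for bucket, (avg_stories, avg_cf) in sorted(metrics.items()):
    print(bucket, avg_stories, avg_cf)
```

Comparing the two averages within each bucket, rather than across the whole population, is what surfaces segments where Close Friends usage is concentrated.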

2. How would you measure the success of acquiring new users through a 30-day free trial at Netflix?

More context: Netflix is offering a promotion where users can enroll in a 30-day free trial. After 30 days, customers will automatically be charged based on their selected package. How would you measure acquisition success, and what metrics would you propose to measure the success of the free trial?

One way to frame this problem is to think about controllable inputs, external drivers, and then the observable output. Start with the major goals of Netflix:

- Acquiring new users to their subscription plan.

- Decreasing churn and increasing retention.

Looking at acquisition output metrics specifically, there are several top-level stats that we can look at, including:

- Conversion rate percentage

- Cost per free trial acquisition

- Daily conversion rate

With these conversion metrics, we would also want to bucket users by cohort. This would help us see the percentage of free-trial users who converted to paid subscriptions, as well as retention by cohort.
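As a rough sketch of how the cohort view might be computed, the snippet below groups free-trial signups by signup month and derives the conversion rate and cost per trial for each cohort. The record layout and all figures are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical trial records (invented for illustration):
# (signup_month, converted_to_paid, acquisition_cost_usd)
trials = [
    ("2024-01", True, 12.0),
    ("2024-01", False, 12.0),
    ("2024-01", True, 12.0),
    ("2024-02", False, 15.0),
    ("2024-02", True, 15.0),
]

def cohort_metrics(rows):
    """Conversion rate and cost per free-trial acquisition, by signup cohort."""
    by_cohort = defaultdict(lambda: {"trials": 0, "paid": 0, "cost": 0.0})
    for month, converted, cost in rows:
        c = by_cohort[month]
        c["trials"] += 1
        c["paid"] += int(converted)
        c["cost"] += cost
    return {
        month: {
            "conversion_rate": c["paid"] / c["trials"],
            "cost_per_trial": c["cost"] / c["trials"],
        }
        for month, c in by_cohort.items()
    }

for month, m in sorted(cohort_metrics(trials).items()):
    print(month, m)
```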

3. How would you measure the success of Facebook Groups?

Start by considering the key function of Facebook Groups. You could say that Groups are a way for users to connect with other users through a shared interest or real-life relationship. Therefore, the user’s goal is to experience a sense of community, which will also drive our business goal of increasing user engagement.

What general engagement metrics can we associate with this value? An objective metric like Groups monthly active users would help us see if the Facebook Groups user base is increasing or decreasing. Plus, we could monitor metrics like posting, commenting, and sharing rates.

There are other products that Groups impact, however, specifically the Newsfeed. We need to consider Newsfeed quality and examine if updates from Groups clog up the content pipeline and if users prioritize those updates over other Newsfeed items. This evaluation will give us a better sense of whether Groups actually contribute to higher engagement levels.

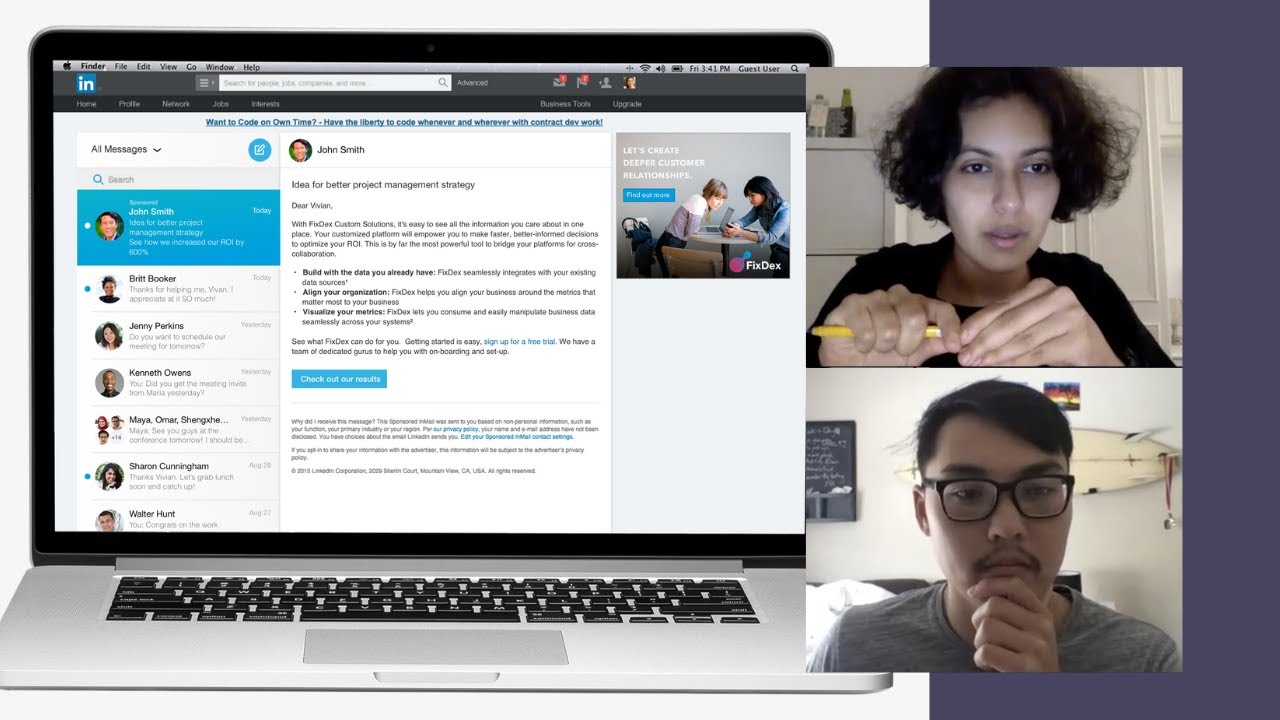

4. How would you analyze the effectiveness of a new LinkedIn chat feature that shows a “green dot” for active users?

Note: Given engineering constraints, the new feature is impossible to A/B test before release. When you approach case study questions, remember always to clarify any vague terms. In this case, “effectiveness” is very vague. To help you define that term, you would want first to consider what the goal is of adding a green dot to LinkedIn chat.

5. How would you diagnose why weekly active users are up 5%, but email notification open rates are down 2%?

What assumptions can you make about the relationship between weekly active users and email open rates? With a case question like this, you would want to first answer that line of inquiry before proceeding.

Hint: Open rate can decrease when its numerator decreases (fewer people open emails) or its denominator increases (more emails are sent overall). Taking these two factors into account, what are some hypotheses we can make about our decrease in the open rate compared to our increase in weekly active users?
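A small numeric sketch of the two mechanisms in the hint, using made-up counts: the same drop in open rate can come from fewer opens or from more emails being sent.

```python
def open_rate(opens, emails_sent):
    """Open rate = opened emails / emails sent."""
    return opens / emails_sent

# All counts below are invented for illustration.
baseline = open_rate(200, 1000)     # starting point
fewer_opens = open_rate(196, 1000)  # numerator shrinks, sends unchanged
more_sends = open_rate(200, 1020)   # denominator grows, opens unchanged

print(baseline, fewer_opens, more_sends)
```

In the second scenario, a growing weekly active user base could plausibly be the reason more notification emails are sent in the first place, which is one hypothesis worth raising with the interviewer.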

6. Let’s say you’re working on Facebook Groups. A product manager decides to add threading to comments on group posts. We see comments per user increase by 10% but posts go down 2%. Why would that be?

To approach this question, consider the impact of threading on user behavior and engagement. Analyze how threading changes the way users interact with posts and comments. Identify relevant metrics such as the number of comments per post, new post frequency, user engagement, and duplicate posts to test your hypotheses about these behavioral changes.

Data Analytics Case Study Questions

Data analytics case studies ask you to dive into analytics problems. Typically these questions ask you to examine metrics trade-offs or investigate changes in metrics. In addition to proposing metrics, you also have to write SQL queries to generate the metrics, which is why they are sometimes referred to as SQL case study questions.

7. Using the provided data, generate some specific recommendations on how DoorDash can improve.

In this DoorDash analytics case study take-home question you are provided with the following dataset:

- Customer order time

- Restaurant order time

- Driver arrives at restaurant time

- Order delivered time

- Customer ID

- Amount of discount

- Amount of tip

With a dataset like this, there are numerous recommendations you can make. A good place to start is by thinking about the DoorDash marketplace, which includes drivers, customers, and merchants. How could you analyze the data to increase revenue, driver and customer retention, and engagement in that marketplace?

8. After implementing a notification change, the total number of unsubscribes increases. Write a SQL query to show how unsubscribes are affecting login rates over time.

This is a Twitter data science interview question, and let’s say you implemented this new feature using an A/B test. You are provided with two tables: events (which includes login, nologin and unsubscribe) and variants (which includes control or variant).

We are tasked with comparing multiple different variables at play here. There is the new notification system, along with its effect of creating more unsubscribes. We can also see how login rates compare for unsubscribes for each bucket of the A/B test.

Given that we want to measure two different changes, we know we have to use GROUP BY for the two variables: date and bucket variant. What comes next?
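One possible shape for such a query is sketched below, run against an in-memory SQLite database so it can be tried directly. The column names (`user_id`, `created_at`, `action`, `variant`) and the sample rows are assumptions, since the question only names the tables and their values. As a simplification, the query does not check that a login happened after the unsubscribe.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Assumed schema and toy data; only the table names come from the question.
conn.executescript("""
CREATE TABLE events (user_id INTEGER, created_at TEXT, action TEXT);
CREATE TABLE variants (user_id INTEGER, variant TEXT);
INSERT INTO variants VALUES (1,'control'),(2,'control'),(3,'variant'),(4,'variant');
INSERT INTO events VALUES
  (1,'2024-01-01','unsubscribe'),
  (3,'2024-01-01','unsubscribe'),
  (4,'2024-01-01','unsubscribe'),
  (1,'2024-01-02','login'),
  (2,'2024-01-02','login'),
  (3,'2024-01-02','nologin'),
  (4,'2024-01-02','login');
""")

# Daily login rate among users who unsubscribed, split by A/B bucket.
query = """
SELECT e.created_at AS day,
       v.variant,
       AVG(CASE WHEN e.action = 'login' THEN 1.0 ELSE 0.0 END) AS login_rate
FROM events e
JOIN variants v ON v.user_id = e.user_id
WHERE e.action IN ('login', 'nologin')
  AND e.user_id IN (SELECT user_id FROM events WHERE action = 'unsubscribe')
GROUP BY e.created_at, v.variant
ORDER BY day, v.variant;
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```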

9. Write a query to disprove the hypothesis: Data scientists who switch jobs more often end up getting promoted faster.

More context: You are provided with a table of user experiences representing each person’s past work experiences and timelines.

This question requires a bit of creative problem-solving to understand how we can prove or disprove the hypothesis. The hypothesis is that data scientists who switch jobs more often get promoted faster.

Therefore, in analyzing this dataset, we can test the hypothesis by separating the data scientists into segments based on how often they switch jobs in their careers.

For example, if we looked at the number of job switches for data scientists who have been in the field for five years, the hypothesis would be supported if the share of data science managers increased as the number of career jumps rose:

- Never switched jobs: 10% are managers

- Switched jobs once: 20% are managers

- Switched jobs twice: 30% are managers

- Switched jobs three times: 40% are managers
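A segmentation like the one above could be produced with a query along these lines. This is a sketch under assumptions: the table is called `user_experiences` here, each row is treated as one job (so an internal promotion would be miscounted as a switch), and "promoted" is approximated by ever holding a title containing "manager". The sample rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Assumed schema and toy data for illustration only.
conn.executescript("""
CREATE TABLE user_experiences (
  user_id INTEGER, title TEXT, company TEXT, start_date TEXT
);
INSERT INTO user_experiences VALUES
  (1,'Data Scientist','A','2019-01-01'),
  (2,'Data Scientist','A','2019-01-01'),
  (2,'Data Science Manager','B','2021-01-01'),
  (3,'Data Scientist','C','2019-01-01'),
  (3,'Senior Data Scientist','D','2022-01-01');
""")

query = """
WITH per_user AS (
  SELECT user_id,
         COUNT(*) - 1 AS job_switches,               -- jobs held minus one
         MAX(title LIKE '%manager%') AS is_manager   -- 1 if ever a manager
  FROM user_experiences
  GROUP BY user_id
)
SELECT job_switches,
       AVG(is_manager) AS manager_share,
       COUNT(*) AS n_users
FROM per_user
GROUP BY job_switches
ORDER BY job_switches;
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

If the manager share does not rise with the number of switches, the data fail to support the hypothesis. A fuller answer would also control for years of experience, as the five-year example suggests.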

10. Write a SQL query to investigate the hypothesis: Click-through rate is dependent on search result rating.

More context: You are given a table with search results on Facebook, which includes query (search term), position (the search position), and rating (human rating from 1 to 5). Each row represents a single search and includes a column has_clicked that represents whether a user clicked or not.

This question requires us to formulaically do two things: create a metric that can analyze a problem that we face and then actually compute that metric.

Think about the data we want to display to prove or disprove the hypothesis. Our output metric is CTR (click-through rate). If CTR is high when search result ratings are high and CTR is low when the search result ratings are low, then our hypothesis is supported. However, if the opposite is true (CTR is low when the search result ratings are high), or there is no clear correlation between the two, then our hypothesis is not supported.

With that structure in mind, we can then look at the results split into different search rating buckets. If we measure the CTR for queries whose results are all rated 1, then for queries whose results are rated 2, and so on, we can see whether an increase in rating is correlated with an increase in CTR.
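A minimal sketch of the bucketed CTR computation, using the columns named in the question. The table name `search_results` and the sample rows are assumptions for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Table name and rows are illustrative; column names follow the question.
conn.executescript("""
CREATE TABLE search_results (
  query TEXT, position INTEGER, rating INTEGER, has_clicked INTEGER
);
INSERT INTO search_results VALUES
  ('cats',1,5,1),('cats',2,5,1),
  ('dogs',1,3,1),('dogs',2,3,0),
  ('fish',1,1,0),('fish',2,1,0);
""")

# CTR per rating bucket: the mean of the 0/1 has_clicked flag.
query = """
SELECT rating,
       AVG(has_clicked) AS ctr,
       COUNT(*) AS n_results
FROM search_results
GROUP BY rating
ORDER BY rating;
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

Because position also influences clicks, a natural follow-up is to add `position` to the grouping so that rating and position effects are not confounded.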

11. How would you help a supermarket chain determine which product categories should be prioritized in their inventory restructuring efforts?

You’re working as a Data Scientist in a local grocery chain’s data science team. The business team has decided to allocate store floor space by product category (e.g., electronics, sports and travel, food and beverages). Help the team understand which product categories to prioritize as well as answering questions such as how customer demographics affect sales, and how each city’s sales per product category differs.

12. Write a SQL query to select the 2nd highest salary in the engineering department.

Note: If more than one person shares the highest salary, the query should select the next highest salary.

When asked for the “2nd highest” value, focus on getting a singular value. Filter the data to include only relevant entries (e.g., engineering salaries), order the results, and use LIMIT and OFFSET to isolate the value. First, limit to the top two distinct salaries and select the second, or use OFFSET to skip the highest and get the second highest.
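The OFFSET approach can be sketched as follows. The `employees` and `departments` tables and their columns are assumed for the example, as the question does not give a schema. Using `DISTINCT` means a tie for the highest salary is collapsed before the offset is applied, which satisfies the note above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Assumed schema and toy data for illustration only.
conn.executescript("""
CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE employees (
  id INTEGER PRIMARY KEY, name TEXT, salary INTEGER, department_id INTEGER
);
INSERT INTO departments VALUES (1,'engineering'),(2,'marketing');
INSERT INTO employees VALUES
  (1,'Ana',150,1),(2,'Ben',150,1),(3,'Cy',120,1),(4,'Di',100,1),(5,'Ed',200,2);
""")

query = """
SELECT DISTINCT e.salary
FROM employees e
JOIN departments d ON d.id = e.department_id
WHERE d.name = 'engineering'
ORDER BY e.salary DESC
LIMIT 1 OFFSET 1;   -- skip the highest distinct salary, take the next
"""
row = conn.execute(query).fetchone()
second_highest = row[0] if row else None
print(second_highest)
```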

Modeling and Machine Learning Case Questions

Machine learning case questions assess your ability to build models to solve business problems. These questions can range from applying machine learning to solve a specific case scenario to assessing the validity of a hypothetical existing model. The modeling case study requires a candidate to evaluate and explain a particular part of the model building process.

13. Describe how you would build a model to predict Uber ETAs after a rider requests a ride.

Common machine learning case study problems like this are designed to have you explain how you would build a model. Many times this can be scoped down to specific parts of the model building process. Examining the example above, we could break it up into:

How would you evaluate the predictions of an Uber ETA model?

What features would you use to predict the Uber ETA for ride requests?

Our recommended framework breaks down a modeling and machine learning case study to individual steps in order to tackle each one thoroughly. In each full modeling case study, you will want to go over:

- Data processing

- Feature Selection

- Model Selection

- Cross Validation

- Evaluation Metrics

- Testing and Roll Out

14. How would you build a model that sends bank customers a text message when fraudulent transactions are detected?

Additionally, the customer can approve or deny the transaction via text response.

Let’s start out by understanding what kind of model would need to be built. We know that since we are working with fraud, there has to be a case where either a fraudulent transaction is or is not present.

Hint: This problem is a binary classification problem. Given the problem scenario, what considerations do we have to think about when first building this model? What would the bank fraud data look like?

15. How would you design the inputs and outputs for a model that detects potential bombs at a border crossing?

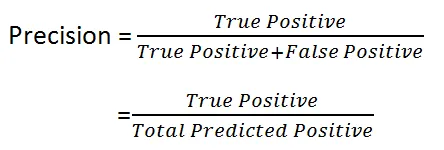

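Additional questions: How would you test the model and measure its accuracy? Remember the equations for precision and recall:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

Because we cannot afford false negatives (a missed bomb), recall should be high when assessing the model.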
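To make the trade-off concrete, here is a small sketch that computes precision and recall from confusion-matrix counts. The counts are invented purely for illustration.

```python
def precision(tp, fp):
    """Share of flagged items that are truly positive."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Share of truly positive items that were flagged."""
    return tp / (tp + fn)

# Hypothetical screening results: many false alarms, very few misses.
tp, fp, fn = 9, 91, 1
print(precision(tp, fp), recall(tp, fn))
```

A detector like this one has low precision but high recall. In this setting that is the preferable failure mode, because a false alarm costs a manual inspection while a miss is catastrophic.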

16. Which model would you choose to predict Airbnb booking prices: Linear regression or random forest regression?

Start by answering this question: What are the main differences between linear regression and random forest?

Random forest regression is based on the ensemble machine learning technique of bagging . The two key concepts of random forests are:

- Random sampling of training observations when building trees.

- Random subsets of features for splitting nodes.

Because they are built from decision trees, random forests can split on both categorical and continuous variables, effectively discretizing continuous features at the split thresholds.

Linear regression, on the other hand, is the standard regression technique in which relationships are modeled using a linear predictor function, the most common example represented as y = Ax + B.

Let’s see how each model applies to Airbnb booking prices. One thing we need to do in the interview is to understand more context around the problem of predicting booking prices. To do so, we need to understand which features are present in our dataset.

We can assume the dataset will have features like:

- Location features.

- Seasonality.

- Number of bedrooms and bathrooms.

- Private room, shared, entire home, etc.

- External demand (conferences, festivals, sporting events).

Which model would be the best fit for this feature set?

17. Using a binary classification model that pre-approves candidates for a loan, how would you give each rejected application a rejection reason?

More context: You do not have access to the feature weights. Start by thinking about the problem like this: How would the problem change if we had ten, one thousand, or ten thousand applicants that had gone through the loan qualification program?

Pretend that we have three people: Alice, Bob, and Candace, who have all applied for a loan. Simplifying the financial lending loan model, let us assume the only features are the total number of credit cards, the dollar amount of current debt, and credit age. Here is a scenario:

Alice: 10 credit cards, 5 years of credit age, $\$20K$ in debt

Bob: 10 credit cards, 5 years of credit age, $\$15K$ in debt

Candace: 10 credit cards, 5 years of credit age, $\$10K$ in debt

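If Candace is approved while Alice and Bob are rejected, we can logically point to the fact that Candace’s $\$10K$ in debt swung the model to approve her for a loan, since debt is the only feature on which the three applicants differ. How did we reason this out?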

If the sample size analyzed was instead thousands of people who had the same number of credit cards and credit age with varying levels of debt, we could figure out the model’s average loan acceptance rate for each numerical amount of current debt. Then we could plot these on a graph to model the y-value (average loan acceptance) versus the x-value (dollar amount of current debt). These graphs are called partial dependence plots.
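The averaging procedure described above can be sketched without access to feature weights, because it only needs the model's predictions. In the snippet below, `black_box_model` is a hypothetical stand-in for the real loan model, and the applicants and debt grid are invented for illustration.

```python
def black_box_model(credit_cards, credit_age_years, debt_usd):
    """Hypothetical stand-in for the real model; we only observe its output."""
    return 1 if debt_usd <= 2500 * credit_age_years else 0

# (credit cards, credit age in years, current debt in USD)
applicants = [
    (10, 5, 20000),  # Alice
    (10, 5, 15000),  # Bob
    (10, 5, 10000),  # Candace
    (4, 8, 18000),   # an extra, made-up applicant
]

def partial_dependence(model, rows, debt_grid):
    """Average approval rate when every applicant's debt is set to each grid value."""
    curve = []
    for debt in debt_grid:
        preds = [model(cards, age, debt) for cards, age, _ in rows]
        curve.append((debt, sum(preds) / len(preds)))
    return curve

curve = partial_dependence(black_box_model, applicants, [10000, 15000, 20000])
for debt, approval_rate in curve:
    print(debt, approval_rate)
```

A rejected applicant whose feature value sits in a region where the curve drops sharply can be given that feature as a rejection reason. Repeating the procedure for each feature shows which one matters most for that applicant.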

Business Case Questions

In data science interviews, business case study questions task you with addressing problems as they relate to the business. You might be asked about topics like estimation and calculation, as well as applying problem-solving to a larger case. One tip: Be sure to read up on the company’s products and ventures before your interview to expose yourself to possible topics.

18. How would you estimate the average lifetime value of customers at a business that has existed for just over one year?

More context: You know that the product costs $\$100$ per month, averages 10% in monthly churn, and the average customer stays for 3.5 months.

Remember that lifetime value is defined as the predicted net revenue attributed to the entire future relationship with a customer, averaged across all customers. Therefore, $\$100$ * 3.5 = $\$350$… But is it that simple?

Because this company is so new, our average customer length (3.5 months) is biased from the short possible length of time that anyone could have been a customer (one year maximum). How would you then model out LTV knowing the churn rate and product cost?
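One common way to model this, sketched below, is to assume churn is constant each month, so the expected customer lifetime is 1 / churn. That constant-churn assumption is a simplification, as is ignoring discounting and costs. The second function illustrates the bias: counting only the months that fit inside a 12-month window understates the lifetime.

```python
def expected_lifetime_months(monthly_churn):
    """Expected lifetime under constant monthly churn (geometric model)."""
    return 1 / monthly_churn

def ltv(monthly_price, monthly_churn):
    """Lifetime value as price times expected lifetime; ignores discounting."""
    return monthly_price * expected_lifetime_months(monthly_churn)

def expected_months_observed(monthly_churn, window_months):
    """Expected paid months within a limited window (sum of survival probabilities)."""
    return sum((1 - monthly_churn) ** t for t in range(window_months))

estimated_ltv = ltv(100, 0.10)                     # price and churn from the prompt
truncated = expected_months_observed(0.10, 12)     # what a one-year-old company can see
print(estimated_ltv, truncated)
```

Under these assumptions the churn-based estimate is about $\$1{,}000$, well above the naive $\$350$. Even the best case within one year (a customer who joined on day one) yields only about 7.2 expected months, and most customers have been observed for less time than that.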

19. How would you go about removing duplicate product names (e.g. iPhone X vs. Apple iPhone 10) in a massive database?

A full solution for this Amazon business case question is available on YouTube.

20. What metrics would you monitor to know if a 50% discount promotion is a good idea for a ride-sharing company?

This question has no correct answer and is rather designed to test your reasoning and communication skills related to product/business cases. First, start by stating your assumptions. What are the goals of this promotion? It is likely that the goal of the discount is to grow revenue and increase retention. A few other assumptions you might make include:

- The promotion will be applied uniformly across all users.

- The 50% discount can only be used for a single ride.

How would we be able to evaluate this pricing strategy? An A/B test between the control group (no discount) and test group (discount) would allow us to evaluate long-term revenue vs. average cost of the promotion. Using these two metrics, how could we measure if the promotion is a good idea?

21. A bank wants to create a new partner card (e.g., a Whole Foods Chase credit card). How would you determine what the next partner card should be?

More context: Say you have access to all customer spending data. With this question, there are several approaches you can take. As your first step, think about the business reason for credit card partnerships: they help increase acquisition and customer retention.

One of the simplest solutions would be to sum all transactions grouped by merchants. This would identify the merchants who see the highest spending amounts. However, the one issue might be that some merchants have a high-spend value but low volume. How could we counteract this potential pitfall? Is the volume of transactions even an important factor in our credit card business? The more questions you ask, the more may spring to mind.
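The simple aggregation, extended with transaction volume and distinct customers to expose the high-spend, low-volume pitfall, might look like the sketch below. The merchant names and transactions are hypothetical.

```python
from collections import defaultdict

# Hypothetical transactions: (customer_id, merchant, amount_usd)
transactions = [
    ("c1", "GroceryCo", 50.0),
    ("c2", "GroceryCo", 30.0),
    ("c1", "GroceryCo", 20.0),
    ("c3", "JewelerCo", 500.0),
]

def merchant_summary(rows):
    """Total spend, transaction count, and distinct customers per merchant."""
    spend = defaultdict(float)
    count = defaultdict(int)
    customers = defaultdict(set)
    for customer_id, merchant, amount in rows:
        spend[merchant] += amount
        count[merchant] += 1
        customers[merchant].add(customer_id)
    return {
        m: {
            "total_spend": spend[m],
            "n_transactions": count[m],
            "n_customers": len(customers[m]),
        }
        for m in spend
    }

summary = merchant_summary(transactions)
for merchant, stats in summary.items():
    print(merchant, stats)
```

Here the jeweler wins on total spend from a single purchase, while the grocer has more transactions and more customers, which may matter more for a card meant to drive repeat usage and retention.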

22. How would you assess the value of keeping a TV show on a streaming platform like Netflix?

Say that Netflix is working on a deal to renew the streaming rights for a show like The Office, which has been on Netflix for one year. Your job is to value the benefit of keeping the show on Netflix.

Start by trying to understand the reasons why Netflix would want to renew the show. Netflix mainly has three goals for what their content should help achieve:

- Acquisition: To increase the number of subscribers.

- Retention: To increase the retention of active subscribers and keep them on as paying members.

- Revenue: To increase overall revenue.

One solution to value the benefit would be to estimate a lower and upper bound to understand the percentage of users that would be affected by The Office being removed. You could then run these percentages against your known acquisition and retention rates.

23. How would you determine which products are to be put on sale?

Let’s say you work at Amazon. It’s nearing Black Friday, and you are tasked with determining which products should be put on sale. You have access to historical pricing and purchasing data from items that have been on sale before. How would you determine what products should go on sale to best maximize profit during Black Friday?

To start with this question, aggregate data from previous years for products that have been on sale during Black Friday or similar events. You can then compare elements such as historical sales volume, inventory levels, and profit margins.

More Data Science Interview Resources

Case studies are one of the most common types of data science interview questions. Practice with the data science course from Interview Query, which includes product and machine learning modules.

Top 10 Data Science Case Study Interview Questions for 2024

Data Science Case Study Interview Questions and Answers to Crack Your Next Data Science Interview.

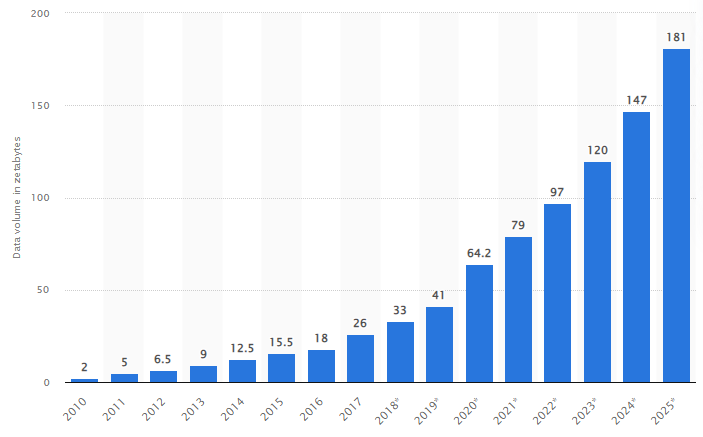

Harvard Business Review has termed data scientist “The Sexiest Job of the 21st Century.” Data science has gained widespread importance due to the abundance of available data. According to the statistics below, worldwide data is expected to reach 181 zettabytes by 2025.

Source: Statista, 2021

“Data is the new oil. It’s valuable, but if unrefined it cannot really be used. It has to be changed into gas, plastic, chemicals, etc. to create a valuable entity that drives profitable activity; so must data be broken down, analyzed for it to have value.” — Clive Humby, 2006

Table of Contents

- What Is a Data Science Case Study?

- Why Are Data Scientists Tested on Case Study-Based Interview Questions?

- Research About the Company

- Ask Questions

- Discuss Assumptions and Hypothesis

- Explaining the Data Science Workflow

- 10 Data Science Case Study Interview Questions and Answers

A data science case study is an in-depth, detailed examination of a particular case (or cases) within a real-world context. It is a real-world business problem that you would work on as a data scientist, building machine learning or deep learning algorithms and programs to construct an optimal solution. For aspiring data professionals, this would be a portfolio project requiring at least 10-16 weeks of solving real-world data science problems. Data science use cases can be found in almost every industry: e-commerce, music streaming, the stock market, etc. The possibilities are endless.

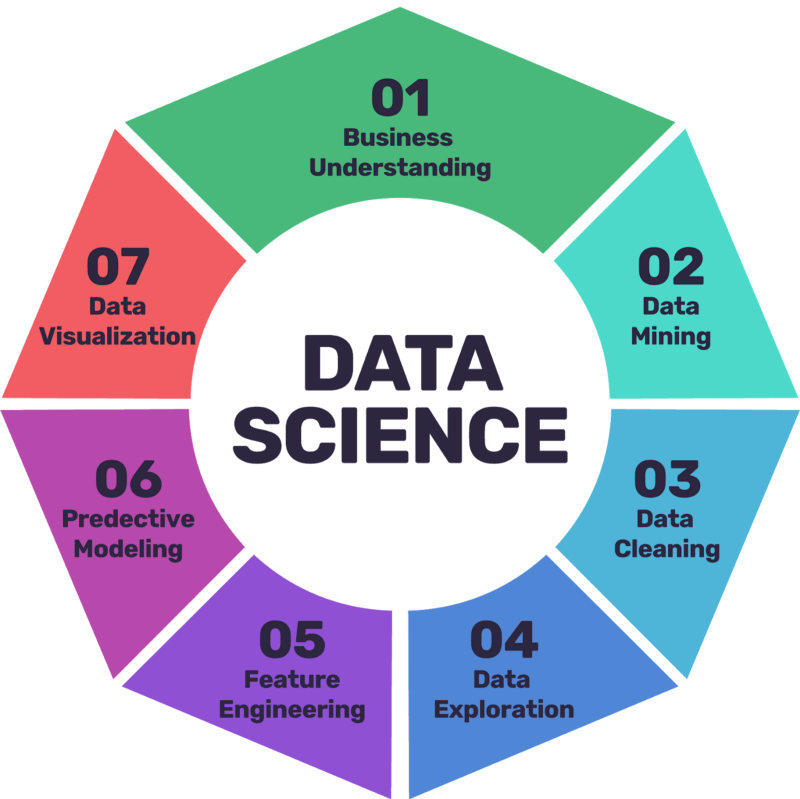

A case study evaluation allows the interviewer to understand your thought process. Questions on case studies can be open-ended; hence you should be flexible enough to accept and appreciate approaches you might not have taken to solve the business problem. All interviews are different, but the framework below is applicable to most data science interviews. It can be a good starting point that will allow you to make a solid first impression in your next data science job interview. In a data science interview, you are expected to explain your data science project lifecycle, and you must choose an approach that broadly covers all the data science lifecycle activities. The seven steps below will help you get started in the right direction.

Source: mindsbs

Business Understanding: Explain the business problem and the objectives for the problem you solved.

Data Mining: How did you collect the required data? Here you can talk about the connections you set up to source your data (for example, database connections to Oracle, SAP, etc.).

Data Cleaning: Explain the data inconsistencies and how you handled them.

Data Exploration: Talk about the exploratory data analysis you performed for the initial investigation of your data to spot patterns and anomalies.

Feature Engineering: Talk about the approach you took to select the essential features and how you derived new ones that add more meaning to the dataset.

Predictive Modeling: Explain the machine learning model you trained and how you settled on your machine learning algorithm, and talk about the evaluation techniques you used to assess its accuracy.

Data Visualization: Communicate the findings through visualization and describe the feedback you received.

How to Answer Case Study-Based Data Science Interview Questions?

During the interview, you can also be asked to solve and explain open-ended, real-world case studies. This case study can be relevant to the organization you are interviewing for. The key to answering this is to have a well-defined framework in your mind that you can implement in any case study, and we uncover that framework here.

Before appearing for the data science job interview, read about the company and its work on its official website. Also, research the position you are interviewing for and understand the job description (JD). Read about the domain and the businesses the company is associated with. This will give you a good idea of what questions to expect.

As case study interviews are usually open-ended, you can solve the problem in many ways. A common mistake is jumping straight to the answer.

Try to understand the context of the business case and the key objective. Uncover the details the interviewer has intentionally kept hidden. Here is a list of questions you might ask if you are interviewing with a financial institution -

Does the dataset include all transactions from the bank, or only transactions from specific departments like loans, insurance, etc.?

Is the customer data provided pre-processed, or do I need to run statistical tests to check data quality?

Which segment of borrowers is your business targeting? Which parameters can be used to avoid bias during loan disbursement?

Make informed, well-thought-out assumptions to simplify the problem. Discuss each assumption with the interviewer and explain why you want to make it. Try to narrow the problem down to key objectives that you can solve. Here are a few examples:

As car sales increase consistently over time with no significant spikes, I assume seasonal changes do not impact your car sales. Hence I would prefer a model that excludes the seasonality component.

As confirmed by you, the incoming data does not require any preprocessing. Hence I will skip running statistical tests to check data quality and move on to feature selection.

The IoT devices capture temperature data every minute, but I am required to predict the weather daily. I would therefore average the minute-level readings for each day to obtain daily data.
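The last assumption, collapsing minute-level readings into one value per day, can be sketched in a few lines. This is a minimal, dependency-free illustration; the function name `daily_average` and the synthetic readings are hypothetical, and in practice a library such as pandas would usually do the resampling.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

def daily_average(readings):
    """Average (timestamp, value) readings per calendar day."""
    by_day = defaultdict(list)
    for ts, value in readings:
        by_day[ts.date()].append(value)
    return {day: mean(values) for day, values in sorted(by_day.items())}

# Hypothetical minute-level readings: two days, rising 0.01 degrees per minute
start = datetime(2024, 1, 1)
readings = [(start + timedelta(minutes=i), 20.0 + 0.01 * i)
            for i in range(2 * 24 * 60)]

daily = daily_average(readings)  # one averaged value per day
```

Averaging is only one possible aggregation; a daily minimum or maximum may suit the forecasting target better, which is worth confirming with the interviewer.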

Now that you have a clear and focused objective for the business case, you can start leveraging the seven-step framework described above. Think of the mining and cleaning activities that you are required to perform. Talk about feature selection and why you would prefer some features over others, and lastly, how you would select the right machine learning model for the business problem. Here is an example for car purchase prediction from auctions -

First, I will prepare the relevant data by accessing the data available from various auctions. I will use only the data from auctions that have been completed. While selecting the data, I also need to ensure that it is not imbalanced.

Next, I will implement feature engineering and selection to create and select relevant features such as the car manufacturer, year of purchase, automatic or manual transmission, etc. I will repeat this process if the results on the test set are not good.

Since this is a classification problem, I will try decision trees and random forests, as these algorithms tend to do well on classification problems. If the scores are unsatisfactory, I can perform hyperparameter tuning to fine-tune the model and achieve better accuracy.

In the end, summarise the answer and explain how your solution is best suited for this business case and how the team can leverage it to gain more customers. For instance, building on the car sales prediction example, your response can be

Dealers can purchase the cars that the model predicts to be good buys at auction, minimizing the overall losses they incur from buying bad cars.

Often, the company you are interviewing with selects case study questions based on a business problem it is trying to solve or has already solved. Here we list a few case study-based data science interview questions and the approach to answering them in interviews. Note that these case studies are often open-ended, so there is no single way to approach the problem statement.

1. How would you improve the bank's existing state-of-the-art credit scoring of borrowers? How would you predict whether someone will face financial distress in the next couple of years?

Assume the interviewer has given you access to the dataset. As explained earlier, you can think of taking the following approach.

Ask Questions —

Q: What parameters does the bank consider when calculating borrowers' credit scores? Do these parameters vary among borrowers of different categories based on age group, income level, etc.?

Q: How do you define financial distress? What features are taken into consideration?

Q: Banks can lend different types of loans like car loans, personal loans, bike loans, etc. Do you want me to focus on any one loan category?

Discuss the Assumptions  —

As the debt ratio is calculated relative to monthly income, we assume that people with a very high debt ratio (i.e., their loan value is much higher than their monthly income) are outliers.

Monthly income tends to vary (mainly on the upside) over two years. Cases where the monthly income is constant can be considered data entry issues and should be excluded from the analysis. I will use a regression model to fill in the missing values.

Building end-to-end Data Science Workflows —

Firstly, I will carefully select the relevant data for my analysis. I will exclude records with implausible values, such as extremely high debt ratios or inconsistent monthly income.

Next, I will identify the essential features and ensure they do not contain missing values; if they do, I will fill them in. For instance, age seems to be a necessary feature for accepting or denying a mortgage. I will also check that the data is not imbalanced, as only a small percentage of borrowers in the complete dataset will be defaulters.

As this is a binary classification problem, I will start with logistic regression and slowly progress towards complex models like decision trees and random forests.
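To make the logistic regression baseline concrete, here is a minimal from-scratch sketch trained with batch gradient descent. The feature names (`debt_ratio`, `late_payments`), the toy records, and the hyperparameters are all hypothetical; a real project would more likely rely on an established library and properly scaled features.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(w, b, x):
    """Estimated probability of financial distress for one borrower."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical toy data: [debt_ratio, late_payments]; label 1 = distress
X = [[0.1, 0], [0.2, 0], [0.3, 1], [0.4, 0],
     [0.7, 2], [0.8, 3], [0.9, 2], [0.6, 3]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(X, y)
low_risk = predict_proba(w, b, [0.15, 0])
high_risk = predict_proba(w, b, [0.85, 3])
```

Starting with a model this simple gives an interpretable baseline against which decision trees and random forests can later be compared.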

Conclude —

Banks play a crucial role in country economies. They decide who can get finance and on what terms and can make or break investment decisions. Individuals and companies need access to credit for markets and society to function.

You can leverage this credit scoring algorithm to determine whether or not a loan should be granted by predicting the probability that somebody will experience financial distress in the next two years.

2. At an e-commerce platform, how would you classify fruits and vegetables from the image data?

Q: Do the images in the dataset contain multiple fruits and vegetables, or would each image have a single fruit or a vegetable?

Q: Can you help me understand the number of estimated classes for this classification problem?

Q: What would be an ideal dimension of an image? Do the images vary within the dataset? Are these color images or grey images?

Upon asking the above questions, let us assume the interviewer confirms that each image contains either one fruit or one vegetable, so there won't be multiple classes in a single image, and that the website has roughly 100 different varieties of fruits and vegetables. For simplicity, the dataset contains 50,000 images, each with dimensions of 100 x 100 pixels.

Assumptions and Preprocessing—

I need to evaluate the training and testing sets, so I will check for any imbalance within the dataset. The number of training images for each class should be consistent: if there are n images for class A, then class B should also have about n training images (within a variance of 5 to 10%). As the dataset contains 50,000 images across 100 classes, the average is close to 500 images per class.

I will then divide the data into training and testing sets in an 80:20 ratio (or 70:30, whichever suits you best). I assume that the images provided might not cover all possible angles of the fruits and vegetables; such a dataset can cause overfitting issues during training. I will keep techniques like data augmentation handy in case I face overfitting while training the model.
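The balance check and the 80:20 split described above can be sketched as follows. This is an illustrative, dependency-free version; the function names, the 10% tolerance default, and the synthetic label list are assumptions made for the example.

```python
import random
from collections import Counter, defaultdict

def imbalanced_classes(labels, tolerance=0.10):
    """Return classes whose count deviates from the mean count by more than `tolerance`."""
    counts = Counter(labels)
    mean_count = sum(counts.values()) / len(counts)
    return {c: n for c, n in counts.items()
            if abs(n - mean_count) / mean_count > tolerance}

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices so every class keeps the same train/test ratio."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = int(round(len(indices) * test_fraction))
        test.extend(indices[:cut])
        train.extend(indices[cut:])
    return train, test

# Hypothetical labels: 100 classes with 500 images each (50,000 in total)
labels = [f"class_{i:03d}" for i in range(100) for _ in range(500)]

flagged = imbalanced_classes(labels)        # empty dict when the set is balanced
train_idx, test_idx = stratified_split(labels)
```

Splitting per class rather than over the whole dataset keeps the class proportions identical in both sets, which matters more as the dataset becomes less balanced.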

End to End Data Science Workflow —

As this is a large dataset, I would first check the availability of GPUs, as processing 50,000 images requires substantial computation. I will use CUDA to move the training set to the GPU for training.

I choose to develop a convolutional neural network (CNN), as these networks tend to extract better features from images than a feed-forward neural network does. Feature extraction is essential when building a deep neural network. Also, because convolutional layers share weights, a CNN typically needs far fewer parameters than a fully connected feed-forward network on the same images.

I will also consider techniques like batch normalization and learning rate scheduling to improve the accuracy and overall performance of the model. If I face overfitting on the validation set, I will use techniques like dropout and color normalization to overcome it.

Once the model is trained, I will test it on sample test images to see its behavior. It is quite common for a model that does well on the training set to perform poorly on the test set. Hence, evaluating the model on the test set is an important part of the evaluation.

The fruit classification model can be helpful to the e-commerce industry, as it would help classify the images and tag the fruits and vegetables with their categories. The fruit and vegetable processing industries can use the model to sort produce into the correct categories and accordingly instruct machines to place items on the conveyor belts involved in packaging and shipping to customers.

3. How would you determine whether Netflix focuses more on TV shows or Movies?

Q: Should I include animation series and movies while doing this analysis?

Q: What is the business objective? Do you want me to analyze a particular genre like action, thriller, etc.?

Q: What is the targeted audience? Is this focus on children below a certain age or for adults?

Let us assume the interviewer responds by confirming that you must perform the analysis on both movies and animation data. The business intends to perform this analysis across all genres, and the targeted audience includes both adults and children.

Assumptions —

It would be convenient to do this analysis by geography. As the US and India are among the largest content producers globally, I would prefer to restrict the initial analysis to these countries. Once the initial hypothesis is established, you can scale the analysis to other countries.

While analyzing movies in India, understanding how releases are distributed across months can be important. For example, there tend to be many releases around the holiday season (Diwali and Christmas) in November and December, which should be considered.

End to End Data Science Workflow —

Firstly, we need to select only the data related to movies and TV shows from the entire dataset. I would also need to ensure the data is complete, i.e., that it has the year of release, month-wise release data, country-wise data, etc.

After preprocessing the dataset, I will do feature engineering and filter the data to only those countries/geographies I am interested in. I can then perform EDA to understand how movies and TV shows relate to ratings, categories (drama, comedy, etc.), actors, etc.

Lastly, I would focus on recommendation clicks and revenues to understand which of the two generates the most revenue. The company would likely favor the category (TV shows vs. movies) that generates the highest revenue.

This analysis would help the company invest in the right venture and generate more revenue based on customer preferences. It would also help identify the preferred categories, the best time of year to release, and the movie directors and actors that customers would like to see.

4. How would you detect fake news on social media?

Q: When you say social media, does it mean all the apps available on the internet, like Facebook, Instagram, Twitter, YouTube, etc.?

Q: Does the analysis include news titles? Does the news description carry significance?

Q: These platforms contain content in multiple languages. Should the analysis be multilingual?

Let us assume the interviewer responds by confirming that the news feeds are available only from Facebook. The news title and the news details are available in the same block and are not segregated. For simplicity, we would prefer to categorize only the news available in English.

Assumptions and Data Preprocessing —

I would first segregate the news title and description. The news title usually contains the key phrases and the intent behind the news. Processing only the titles also requires less computation than processing the whole text, which leads to a more efficient solution.

I would also check for data imbalance. An imbalanced dataset can bias the model toward a particular class.

I would also like to start with a subset of news that focuses on a specific category like sports, finance, etc. This subset would help me set up my baseline model, which can be tweaked later based on the requirements, and I will gradually increase the model's scope.

Firstly, it would be essential to select the data based on the chosen category. I will take sports as the category to start my analysis with.

I will first clean the dataset by checking for null records. Once this check is done, the data must be formatted before it can be fed to a neural network. I will write a function to remove characters like !”#$%&’()*+,-./:;<=>?@[]^_`{|}~, as these characters do not add any value for deep neural network learning. I will also apply stopword removal to drop words like ‘and’, ‘is’, etc. from the vocabulary.

Then I will employ NLP techniques like bag of words or TF-IDF, depending on which is more suitable. Bag of words can be faster, while TF-IDF can be more accurate but slower. The choice of technique would also depend on the business inputs.

I will now split the data into training and testing sets, train a machine learning model, and check its performance. Since the dataset is text-heavy, models like naive Bayes tend to perform well in these situations.
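The cleaning and vectorizing steps above can be illustrated with a short, dependency-free sketch. The stopword list, the sample titles, and the function names are hypothetical, and the TF-IDF shown is the plain textbook variant (term frequency times `log(N / document frequency)`); library implementations often use smoothed variants that produce different numbers. The classifier itself is left out.

```python
import math
import string
from collections import Counter

# Small illustrative stopword list; a real project would use a fuller one
STOPWORDS = {"a", "an", "and", "is", "the", "of", "to", "in"}

def clean_title(text):
    """Lowercase, strip ASCII punctuation, and drop stopwords."""
    no_punct = text.lower().translate(str.maketrans("", "", string.punctuation))
    return [tok for tok in no_punct.split() if tok not in STOPWORDS]

def tf_idf(docs):
    """TF-IDF per document: (count / doc length) * log(N / document frequency)."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    return [
        {term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
         for term, count in Counter(doc).items()}
        for doc in docs
    ]

# Hypothetical sports headlines
titles = [
    "The team wins the final!",
    "Team loses in the final.",
    "Shocking secret: team scandal",
]
docs = [clean_title(t) for t in titles]
bow = [Counter(doc) for doc in docs]   # bag of words: raw term counts
weights = tf_idf(docs)                 # TF-IDF: rare terms weigh more
```

In this toy corpus the word "team" appears in every title, so its TF-IDF weight is zero while its bag-of-words count is not, which shows how the two representations differ.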

Conclude  —

Social media and news outlets publish fake news to increase readership or as part of psychological warfare. In general, the goal is profiting through clickbait. Clickbait lures users with flashy headlines or designs that pique curiosity and entice them to click links, which increases advertising revenue. The trained model will help curb such news and add value to the reader's time.

5. How would you forecast the price of a Nifty 50 stock?

Q: Do you want me to forecast the Nifty 50 index/tracker or the price of a specific stock within the Nifty 50?

Q: What do you want me to forecast? Is it the opening price, closing price, VWAP, highest of the day, etc.?

Q: Do you want me to forecast daily, weekly, or monthly prices?

Q: Can you tell me more about the historical data available? Do we have ten years or 15 years of recorded data?

With all these questions asked, let us assume the interviewer responds by saying that you should pick one stock among the Nifty 50 stocks and forecast its average daily price. The company has historical data for the last 20 years.

Assumptions and Data preprocessing —

As we forecast the average daily price, I would consider VWAP my target (the value to be predicted). VWAP stands for Volume Weighted Average Price: the total traded value (the sum of price times volume for each trade) divided by the total volume traded over a given period.
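As a quick illustration, VWAP is conventionally computed as the sum of price times volume divided by the sum of volume. The sketch below assumes trades arrive as hypothetical `(price, volume)` pairs; the numbers are made up.

```python
def vwap(trades):
    """Volume-weighted average price: sum(price * volume) / sum(volume)."""
    total_volume = sum(volume for _, volume in trades)
    if total_volume == 0:
        raise ValueError("VWAP is undefined when no volume was traded")
    return sum(price * volume for price, volume in trades) / total_volume

# Hypothetical intraday trades as (price, volume) pairs
trades = [(100.0, 200), (102.0, 300), (101.0, 500)]
daily_vwap = vwap(trades)  # (20000 + 30600 + 50500) / 1000
```

Because larger trades carry more weight, VWAP can differ noticeably from the simple mean of the trade prices on days with uneven volume.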

Solving this data science case study requires tracking the average price over a period, which makes it a classical time series problem. Hence I would refrain from using a classical regression model on the time series data, as we have a separate set of models (like ARIMA, Auto ARIMA, SARIMA, etc.) to work with such datasets.

Like any other dataset, I will first check for null values and understand their percentage. If there are very few, I would prefer to drop those records.

Now I will perform exploratory data analysis to understand how the average price has varied over the last 20 years. This would also help me understand the trend and seasonality components of the time series data. Additionally, I will use techniques like the Dickey-Fuller test to determine whether the time series is stationary.

Usually, such a time series is not stationary, so I can decompose it to understand its additive or multiplicative nature. I can then use existing techniques like differencing, rolling statistics, or transformations to make the time series stationary.

Lastly, once the time series is stationary, I will separate the train and test data based on dates and use techniques like ARIMA or Facebook Prophet to train the model.
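Two of the steps above, differencing to remove a trend and splitting by date rather than at random, can be sketched without any libraries. The series, the cutoff, and the function names are hypothetical; whether the differenced series is actually stationary would still need to be checked, for example with a Dickey-Fuller test.

```python
from datetime import date, timedelta

def difference(values, lag=1):
    """Return values[t] - values[t - lag] for every t >= lag."""
    return [values[t] - values[t - lag] for t in range(lag, len(values))]

def split_by_date(observations, cutoff):
    """Observations dated before `cutoff` go to train, the rest to test."""
    train = [(d, v) for d, v in observations if d < cutoff]
    test = [(d, v) for d, v in observations if d >= cutoff]
    return train, test

# Hypothetical daily prices with a steady upward trend of 2.0 per day
start = date(2024, 1, 1)
series = [(start + timedelta(days=i), 100.0 + 2.0 * i) for i in range(100)]

diffed = difference([v for _, v in series])   # the linear trend becomes a constant
train, test = split_by_date(series, cutoff=start + timedelta(days=80))
```

Splitting on a date keeps every test observation later than every training observation, which avoids leaking future prices into the model.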

Major applications of such time series prediction include stock and financial trading, analysis of online and offline retail sales, and medical signals such as heart rate and ECG (EKG) readings.

Time series datasets generate a lot of enthusiasm among data scientists. There are many different ways to approach a time series problem, and the process mentioned above is only one of the known techniques.

6. How would you forecast the weekly sales of Walmart? Which department is impacted most during the holidays?

Q: Walmart usually operates three different store types: supercenters, discount stores, and neighborhood markets. Which store data shall I pick to get started with my analysis? Are the sales tracked in US dollars?

Q: How would I identify holidays in the historical data provided? Is the store closed during Black Friday week, Super Bowl week, or Christmas week?

Q: What are the evaluation or loss criteria? How many departments are present across all store types?

Let us assume the interviewer responds by saying you must forecast weekly sales, in US dollars, department-wise and not store type-wise. A flag within the dataset will indicate the weeks that contain holidays. There are over 80 departments across the three types of stores.

As we predict the weekly sales, I would take weekly sales as the target variable for our model.

As we are tracking sales weekly, we will use a regression model to predict our target variable, “Weekly_Sales,” which is a grouped/hierarchical time series. We will explore the following categories of models, engineer features, and tune hyperparameters to choose the model with the best fit.

- Linear models

- Tree models

- Ensemble models

I will consider MAE, RMSE, and R2 as evaluation criteria.
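For reference, the three criteria (mean absolute error, root mean squared error, and the coefficient of determination) can be computed as below. The sample sales figures are hypothetical.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large misses more than MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - residual SS / total SS."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Hypothetical weekly sales (thousands of US dollars) and model predictions
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 11.0, 15.0, 15.0]

scores = {"MAE": mae(actual, predicted),
          "RMSE": rmse(actual, predicted),
          "R2": r2(actual, predicted)}
```

MAE and RMSE are expressed in the units of the target, while R2 is unitless, so reporting all three gives both an absolute and a relative view of the fit.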

End to End Data Science Workflow-

The foremost step is to figure out the essential features within the dataset. I would explore the store information, such as size, type, and the total number of stores present in the historical dataset.

The next step would be to perform feature engineering; as we have weekly sales data available, I would extract features like ‘WeekofYear’, ‘Month’, ‘Year’, and ‘Day’. This would help the model learn general trends.

Now I will create store and department rank features, as this is one of the end goals of the given problem. I would create these features by calculating the average weekly sales.

Now I will perform exploratory data analysis (EDA) to understand the story the data tells. I will analyze the store and weekly department sales in the historical data to identify seasonality and trends. I will also plot weekly sales against store and against department to understand their significance and decide whether these features should be retained and passed to the machine learning models.

After feature engineering and selection, I will set up a baseline model and evaluate it using MAE, RMSE, and R2. As this is a regression problem, I will begin with simple models like linear regression and an SGD regressor. Later, if the need arises, I will move towards more complex models, such as a decision tree regressor, an LGBM regressor, and an SGB regressor.
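The date features and the rank features from the workflow above might be derived roughly as follows. This is a sketch under assumptions: the record layout and the column names `Store`, `Dept`, and `Date` are hypothetical (only `Weekly_Sales` and the four date feature names come from the text), and the sales values are invented.

```python
from collections import defaultdict
from datetime import date

def date_features(d):
    """Calendar features for one weekly sales record."""
    return {"WeekofYear": d.isocalendar()[1], "Month": d.month,
            "Year": d.year, "Day": d.day}

def rank_by_average_sales(records, key):
    """Rank groups (1 = highest) by their mean weekly sales."""
    totals = defaultdict(lambda: [0.0, 0])
    for row in records:
        totals[row[key]][0] += row["Weekly_Sales"]
        totals[row[key]][1] += 1
    averages = {group: total / count for group, (total, count) in totals.items()}
    ordered = sorted(averages, key=averages.get, reverse=True)
    return {group: rank for rank, group in enumerate(ordered, start=1)}

# Hypothetical records for two stores and two departments
records = [
    {"Store": 1, "Dept": 1, "Date": date(2012, 11, 23), "Weekly_Sales": 200.0},
    {"Store": 1, "Dept": 2, "Date": date(2012, 11, 23), "Weekly_Sales": 100.0},
    {"Store": 2, "Dept": 1, "Date": date(2012, 11, 23), "Weekly_Sales": 400.0},
    {"Store": 2, "Dept": 2, "Date": date(2012, 11, 23), "Weekly_Sales": 300.0},
]

features = date_features(records[0]["Date"])
store_rank = rank_by_average_sales(records, "Store")
dept_rank = rank_by_average_sales(records, "Dept")
```

If the ranks are used as model inputs, they should be computed from the training period only, so that information from the weeks being forecast does not leak into the features.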

Sales forecasting can play a significant role in the company’s success. Accurate sales forecasts allow salespeople and business leaders to make smarter decisions when setting goals, hiring, budgeting, prospecting, and other revenue-impacting factors. The solution mentioned above is one of the many ways to approach this problem statement.

With this, we come to the end of the post. But let us do a quick summary of the techniques we learned and how they can be implemented. We would also like to provide you with some practice case study questions to help you build up your thought process for the interview.

7. Considering an organization has a high attrition rate, how would you predict if an employee is likely to leave the organization?

8. How would you identify the best cities and countries for startups in the world?

9. How would you estimate the impact on Air Quality across geographies during Covid 19?

10. A Company often faces machine failures at its factory. How would you develop a model for predictive maintenance?

Do not get intimidated by the problem statement; focus on your approach -

Ask questions to get clarity

Discuss your assumptions rather than silently assuming things. Let the data tell the story or get your assumptions verified by the interviewer.

Build Workflows — Take a few minutes to put together your thoughts; start with a more straightforward approach.

Conclude — Summarize your answer and explain how it best suits the use case provided.

We hope these case study-based data scientist interview questions will give you more confidence to crack your next data science interview.

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

Data Science Case Study Interview: Your Guide to Success

by Sam McKay, CFA | Careers

Ready to crush your next data science interview? Well, you’re in the right place.

This type of interview is designed to assess your problem-solving skills, technical knowledge, and ability to apply data-driven solutions to real-world challenges.

So, how can you master these interviews and secure your next job?

To master your data science case study interview:

Practice Case Studies: Engage in mock scenarios to sharpen problem-solving skills.

Review Core Concepts: Brush up on algorithms, statistical analysis, and key programming languages.

Contextualize Solutions: Connect findings to business objectives for meaningful insights.

Clear Communication: Present results logically and effectively using visuals and simple language.

Adaptability and Clarity: Stay flexible and articulate your thought process during problem-solving.

This article will delve into each of these points and give you additional tips and practice questions to get you ready to crush your upcoming interview!

After you’ve read this article, you can enter the interview ready to showcase your expertise and win your dream role.

Let’s dive in!

What to Expect in the Interview?

Data science case study interviews are an essential part of the hiring process. They give interviewers a glimpse of how you approach real-world business problems and demonstrate your analytical thinking, problem-solving, and technical skills.

Furthermore, case study interviews are typically open-ended, which means you’ll be presented with a problem that doesn’t have a single right or wrong answer.

Instead, you are expected to demonstrate your ability to:

Break down complex problems

Make assumptions

Gather context

Provide data points and analysis

This type of interview allows your potential employer to evaluate your creativity, technical knowledge, and attention to detail.

But what topics will the interview touch on?

Topics Covered in Data Science Case Study Interviews

In a case study interview, you can expect inquiries that cover a spectrum of topics crucial to evaluating your skill set:

Topic 1: Problem-Solving Scenarios

In these interviews, your ability to resolve genuine business dilemmas using data-driven methods is essential.

These scenarios reflect authentic challenges, demanding analytical insight, decision-making, and problem-solving skills.

Real-world Challenges: Expect scenarios like optimizing marketing strategies, predicting customer behavior, or enhancing operational efficiency through data-driven solutions.

Analytical Thinking: Demonstrate your capacity to break down complex problems systematically, extracting actionable insights from intricate issues.

Decision-making Skills: Showcase your ability to make informed decisions, emphasizing instances where your data-driven choices optimized processes or led to strategic recommendations.

Your adeptness at leveraging data for insights, analytical thinking, and informed decision-making defines your capability to provide practical solutions in real-world business contexts.

Topic 2: Data Handling and Analysis

Data science case studies assess your proficiency in data preprocessing, cleaning, and deriving insights from raw data.

Data Collection and Manipulation: Prepare for data engineering questions involving data collection, handling missing values, cleaning inaccuracies, and transforming data for analysis.

Handling Missing Values and Cleaning Data: Showcase your skills in managing missing values and ensuring data quality through cleaning techniques.

Data Transformation and Feature Engineering: Highlight your expertise in transforming raw data into usable formats and creating meaningful features for analysis.

Mastering data preprocessing—managing, cleaning, and transforming raw data—is fundamental. Your proficiency in these techniques showcases your ability to derive valuable insights essential for data-driven solutions.

Topic 3: Modeling and Feature Selection

Data science case interviews prioritize your understanding of modeling and feature selection strategies.

Model Selection and Application: Highlight your prowess in choosing appropriate models, explaining your rationale, and showcasing implementation skills.

Feature Selection Techniques: Understand the importance of selecting relevant variables and methods, such as correlation coefficients, to enhance model accuracy.

Ensuring Robustness through Random Sampling: Consider techniques like random sampling to bolster model robustness and generalization abilities.
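As one concrete reading of correlation-based feature selection, the sketch below keeps the features whose absolute Pearson correlation with the target meets a threshold. The feature names, the data, and the 0.5 threshold are hypothetical choices for illustration, and this filter only captures linear relationships.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def select_features(features, target, threshold=0.5):
    """Keep feature names whose |correlation| with the target meets the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]

# Hypothetical data: names and values are made up for illustration
target = [1.0, 2.0, 3.0, 4.0, 5.0]
features = {
    "ad_spend": [2.0, 4.1, 5.9, 8.2, 10.0],  # tracks the target closely
    "noise": [3.0, 1.0, 3.0, 1.0, 3.0],      # no linear relationship
}
selected = select_features(features, target)
```

In an interview it is worth noting that a low linear correlation does not prove a feature is useless, since tree-based models can exploit non-linear effects.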

Excel in modeling and feature selection by understanding contexts, optimizing model performance, and employing robust evaluation strategies.

Topic 4: Statistical and Machine Learning Approach

These interviews require proficiency in statistical and machine learning methods for diverse problem-solving. This topic is significant for anyone applying for a machine learning engineer position.

Using Statistical Models: Utilize logistic and linear regression models for effective classification and prediction tasks.

Leveraging Machine Learning Algorithms: Employ models such as support vector machines (SVM), k-nearest neighbors (k-NN), and decision trees for complex pattern recognition and classification.

Exploring Deep Learning Techniques: Consider neural networks, convolutional neural networks (CNN), and recurrent neural networks (RNN) for intricate data patterns.

Experimentation and Model Selection: Experiment with various algorithms to identify the most suitable approach for specific contexts.

Combining statistical and machine learning expertise equips you to systematically tackle varied data challenges, ensuring readiness for case studies and beyond.

Topic 5: Evaluation Metrics and Validation

In data science interviews, understanding evaluation metrics and validation techniques is critical to measuring how well machine learning models perform.

Choosing the Right Metrics: Select metrics like precision, recall (for classification), or R² (for regression) based on the problem type. Picking the right metric defines how you interpret your model’s performance.

Validating Model Accuracy: Use methods like cross-validation and holdout validation to test your model across different data portions. These methods help you detect overfitting and provide a more reliable performance measure.

Importance of Statistical Significance: Evaluate if your model’s performance is due to actual prediction or random chance. Techniques like hypothesis testing and confidence intervals help determine this probability accurately.

Interpreting Results: Be ready to explain model outcomes, spot patterns, and suggest actions based on your analysis. Translating data insights into actionable strategies showcases your skill.
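Two of the ideas in this list, precision and recall for a classifier and k-fold cross-validation, can be sketched as follows. The labels and fold count are hypothetical, and the folds here are contiguous and unshuffled for simplicity; real data is usually shuffled (or split by time) first.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, val
        start += size

# Hypothetical true labels and predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
precision, recall = precision_recall(y_true, y_pred)

folds = list(k_fold_indices(n_samples=10, k=3))
```

Each sample lands in exactly one validation fold, so averaging a metric over the folds uses every observation for validation once.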

Finally, focusing on suitable metrics, using validation methods, understanding statistical significance, and deriving actionable insights from data underline your ability to evaluate model performance.

Also, being well-versed in these topics and having hands-on experience through practice scenarios can significantly enhance your performance in these case study interviews.

Prepare to demonstrate technical expertise and adaptability, problem-solving, and communication skills to excel in these assessments.

Now, let’s talk about how to navigate the interview.

Here is a step-by-step guide to get you through the process.

Step-by-Step Guide Through the Interview

This section discusses what you can expect during the interview process and how to approach case study questions.

Step 1: Problem Statement: You’ll be presented with a problem or scenario, either a hypothetical situation or a real-world challenge, that calls for a data-driven solution.

Step 2: Clarification and Context: Seek clarity by actively engaging with the interviewer. Ask pertinent questions to thoroughly understand the objectives, constraints, and nuances of the problem statement.

Step 3: State your Assumptions: When crucial information is lacking, make reasonable assumptions to proceed with your final solution. Explain these assumptions to your interviewer to ensure transparency in your decision-making process.

Step 4: Gather Context: Consider the broader business landscape surrounding the problem. Factor in external influences such as market trends, customer behaviors, or competitor actions that might impact your solution.

Step 5: Data Exploration: Delve into the provided datasets meticulously. Cleanse, visualize, and analyze the data to derive meaningful and actionable insights crucial for problem-solving.

Step 6: Modeling and Analysis: Leverage statistical or machine learning techniques to address the problem effectively. Implement suitable models to derive insights and solutions aligning with the identified objectives.

Step 7: Results Interpretation: Interpret your findings thoughtfully. Identify patterns, trends, or correlations within the data and present clear, data-backed recommendations relevant to the problem statement.

Step 8: Results Presentation: Articulate your approach, methodologies, and choices clearly and coherently. This step is vital, especially when conveying complex technical concepts to non-technical stakeholders.

Remember to stay flexible throughout the process and be prepared to adapt your approach to each situation.

Now that you have a guide on navigating the interview, let us give you some tips to help you stand out from the crowd.

Top 3 Tips to Master Your Data Science Case Study Interview

Approaching case study interviews in data science requires a blend of technical proficiency and a holistic understanding of business implications.

Here are practical strategies and structured approaches to prepare effectively for these interviews:

1. Comprehensive Preparation Tips

To excel in case study interviews, a blend of technical competence and strategic preparation is key.

Here are concise yet powerful tips to equip yourself for success:

Practice with Mock Case Studies: Familiarize yourself with the process through practice. Online resources offer example questions and solutions, enhancing familiarity and boosting confidence.

Review Your Data Science Toolbox: Ensure a strong foundation in fundamentals like data wrangling, visualization, and machine learning algorithms. Comfort with relevant programming languages is essential.

Simplicity in Problem-solving: Opt for clear and straightforward problem-solving approaches. While advanced techniques can be impressive, interviewers value efficiency and clarity.

Interviewers also highly value someone with great communication skills. Here are some tips to highlight your skills in this area.

2. Communication and Presentation of Results

In case study interviews, communication is vital. Present your findings in a clear, engaging way that connects with the business context. Tips include:

Contextualize results: Relate findings to the initial problem, highlighting key insights for business strategy.

Use visuals: Charts, graphs, or diagrams help convey findings more effectively.

Logical sequence: Structure your presentation for easy understanding, starting with an overview and progressing to specifics.

Simplify ideas: Break down complex concepts into simpler segments using examples or analogies.

Mastering these techniques helps you communicate insights clearly and confidently, setting you apart in interviews.

Lastly, here are some preparation strategies to employ before you walk into the interview room.

3. Structured Preparation Strategy

Prepare meticulously for data science case study interviews by following a structured strategy.

Here’s how:

Practice Regularly: Engage in mock interviews and case studies to enhance critical thinking and familiarity with the interview process. This builds confidence and sharpens problem-solving skills under pressure.

Thorough Review of Concepts: Revisit essential data science concepts and tools, focusing on machine learning algorithms, statistical analysis, and relevant programming languages (Python, R, SQL) for confident handling of technical questions.

Strategic Planning: Develop a structured framework for approaching case study problems. Outline the steps and tools/techniques to deploy, ensuring an organized and systematic interview approach.

Understanding the Context: Analyze business scenarios to identify objectives, variables, and data sources essential for insightful analysis.

Ask for Clarification: Engage with interviewers to clarify any unclear aspects of the case study questions. For example, you may ask ‘What is the business objective?’ This exhibits thoughtfulness and aids in better understanding the problem.

Transparent Problem-solving: Clearly communicate your thought process and reasoning during problem-solving. This showcases analytical skills and approaches to data-driven solutions.

Blend technical skills with business context, communicate clearly, and prepare systematically to ace your case study interviews.

Now, let’s really make this specific.

Each company is different and may need slightly different skills and specializations from data scientists.

However, here is some of what you can expect in a case study interview with some industry giants.

Case Interviews at Top Tech Companies

As you prepare for data science interviews, it’s essential to be aware of the case study interview format utilized by top tech companies.

In this section, we’ll explore case interviews at Facebook, Twitter, and Amazon, and provide insight into what they expect from their data scientists.

Facebook predominantly looks for candidates with strong analytical and problem-solving skills. The case study interviews here usually revolve around assessing the impact of a new feature, analyzing monthly active users, or measuring the effectiveness of a product change.

To excel during a Facebook case interview, you should break down complex problems, formulate a structured approach, and communicate your thought process clearly.

Twitter, similar to Facebook, evaluates your ability to analyze and interpret large datasets to solve business problems. During a Twitter case study interview, you might be asked to analyze user engagement, develop recommendations for increasing ad revenue, or identify trends in user growth.

Be prepared to work with different analytics tools and showcase your knowledge of relevant statistical concepts.

Amazon is known for its customer-centric approach and data-driven decision-making. In Amazon’s case interviews, you may be tasked with optimizing customer experience, analyzing sales trends, or improving the efficiency of a certain process.

Keep in mind Amazon’s leadership principles, especially “Customer Obsession” and “Dive Deep,” as you navigate through the case study.

Remember, practice is key. Familiarize yourself with various case study scenarios and hone your data science skills.

With all this knowledge, it’s time to work through some practice questions.

Mock Case Studies and Practice Questions

To better prepare for your data science case study interviews, it’s important to practice with some mock case studies and questions.

One way to practice is by finding typical case study questions.

Here are a few examples to help you get started:

Customer Segmentation: You have access to a dataset containing customer information, such as demographics and purchase behavior. Your task is to segment the customers into groups that share similar characteristics. How would you approach this problem, and what machine-learning techniques would you consider?

Fraud Detection: Imagine your company processes online transactions. You are asked to develop a model that can identify potentially fraudulent activities. How would you approach the problem and which features would you consider using to build your model? What are the trade-offs between false positives and false negatives?

Demand Forecasting: Your company needs to predict future demand for a particular product. What factors should be taken into account, and how would you build a model to forecast demand? How can you ensure that your model remains up-to-date and accurate as new data becomes available?
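For the fraud detection question, the trade-off between false positives and false negatives can be shown with a small cost calculation. The sketch below is illustrative only: the labels, model scores, and the two cost figures are invented assumptions, and the helper names are hypothetical. It picks the decision threshold that minimizes total cost on the toy data.

```python
def confusion_counts(y_true, scores, threshold):
    """Count (false positives, false negatives) when flagging scores >= threshold."""
    pairs = list(zip(y_true, scores))
    fp = sum(1 for t, s in pairs if t == 0 and s >= threshold)  # legit but flagged
    fn = sum(1 for t, s in pairs if t == 1 and s < threshold)   # fraud but missed
    return fp, fn


def total_cost(y_true, scores, threshold, cost_fp, cost_fn):
    """Total cost of the errors made at a given threshold."""
    fp, fn = confusion_counts(y_true, scores, threshold)
    return fp * cost_fp + fn * cost_fn


# Hypothetical data: 1 = fraudulent transaction, scores = model fraud probabilities.
y_true = [0, 0, 1, 0, 1, 0, 0, 1]
scores = [0.05, 0.20, 0.35, 0.40, 0.60, 0.65, 0.10, 0.90]

COST_FP = 5    # assumed cost of manually reviewing a legitimate transaction
COST_FN = 100  # assumed cost of letting a fraudulent transaction through

thresholds = [0.3, 0.5, 0.7]
costs = {t: total_cost(y_true, scores, t, COST_FP, COST_FN) for t in thresholds}
best_threshold = min(costs, key=costs.get)

for t in thresholds:
    print(f"threshold={t}: cost={costs[t]}")
print("lowest-cost threshold:", best_threshold)
```

Because a missed fraud is assumed to cost far more than a manual review here, the lowest threshold wins. With different cost assumptions the answer changes, which is exactly the point to discuss with the interviewer.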

By practicing case study interview questions, you can sharpen your problem-solving skills and walk into future data science interviews more confidently.

Remember to practice consistently and stay up-to-date with relevant industry trends and techniques.

Final Thoughts

Data science case study interviews are more than just technical assessments; they’re opportunities to showcase your problem-solving skills and practical knowledge.

Furthermore, these interviews demand a blend of technical expertise, clear communication, and adaptability.

Remember, understanding the problem, exploring insights, and presenting coherent potential solutions are key.

By honing these skills, you can demonstrate your capability to solve real-world challenges using data-driven approaches. Good luck on your data science journey!

Frequently Asked Questions

How would you approach identifying and solving a specific business problem using data?

To identify and solve a business problem using data, you should start by clearly defining the problem and identifying the key metrics that will be used to evaluate success.

Next, gather relevant data from various sources and clean, preprocess, and transform it for analysis. Explore the data using descriptive statistics, visualizations, and exploratory data analysis.

Based on your understanding, build appropriate models or algorithms to address the problem, and then evaluate their performance using appropriate metrics. Iterate and refine your models as necessary, and finally, communicate your findings effectively to stakeholders.

Can you describe a time when you used data to make recommendations for optimization or improvement?

Recall a specific data-driven project you have worked on that led to optimization or improvement recommendations. Explain the problem you were trying to solve, the data you used for analysis, the methods and techniques you employed, and the conclusions you drew.

Share the results and how your recommendations were implemented, describing the impact they had on the targeted area of the business.

How would you deal with missing or inconsistent data during a case study?

When dealing with missing or inconsistent data, start by assessing the extent and nature of the problem. Consider applying imputation methods, such as mean, median, or mode imputation, or more advanced techniques like k-NN imputation or regression-based imputation, depending on the type of data and the pattern of missingness.

For inconsistent data, diagnose the issues by checking for typos, duplicates, or erroneous entries, and take appropriate corrective measures. Document your handling process so that stakeholders can understand your approach and the limitations it might impose on the analysis.
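As a rough illustration of the simple imputation strategies mentioned above, here is a sketch using only Python's standard library. The `impute` helper and the two columns are hypothetical; missing values are assumed to be represented as `None`. It also reports the share of missing values, which is worth checking before choosing a strategy.

```python
from statistics import mean, median, mode


def impute(values, strategy="median"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = {"mean": mean, "median": median, "mode": mode}[strategy](observed)
    return [fill if v is None else v for v in values]


# Hypothetical columns with missing entries.
ages = [34, None, 29, 41, None, 29]   # numeric: median is robust to outliers
cities = ["NYC", None, "LA", "NYC"]   # categorical: mode is the usual simple choice

missing_share = sum(v is None for v in ages) / len(ages)
ages_median = impute(ages, "median")
cities_mode = impute(cities, "mode")

print(f"missing share in ages: {missing_share:.0%}")
print(ages_median)
print(cities_mode)
```

Simple fills like these shrink the variance of the column and assume values are missing more or less at random, so it is worth stating that limitation when you document your approach.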

What techniques would you use to validate the results and accuracy of your analysis?

To validate the results and accuracy of your analysis, use techniques like cross-validation or bootstrapping, which can help gauge model performance on unseen data. Employ metrics relevant to your specific problem, such as accuracy, precision, recall, F1-score, or RMSE, to measure performance.

Additionally, validate your findings by conducting sensitivity analyses, sanity checks, and comparing results with existing benchmarks or domain knowledge.
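One way to sketch the bootstrapping idea is a percentile confidence interval around RMSE. The example below uses made-up values and a hypothetical helper, `bootstrap_rmse_interval`; it resamples prediction errors with replacement and reads the interval off the sorted resampled scores. Treat it as an approximation under these assumptions, not a full statistical treatment.

```python
import math
import random


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def bootstrap_rmse_interval(y_true, y_pred, n_resamples=2000, alpha=0.05, seed=0):
    """Approximate percentile bootstrap interval for RMSE (seeded for repeatability)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_resamples):
        # Resample (true, predicted) pairs with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(rmse([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical held-out targets and model predictions.
y_true = [3.0, 5.0, 2.5, 7.0, 4.5, 6.0]
y_pred = [2.5, 5.5, 2.0, 8.0, 4.0, 6.5]

point = rmse(y_true, y_pred)
lower, upper = bootstrap_rmse_interval(y_true, y_pred)
print(f"RMSE = {point:.3f}, approx. 95% interval = ({lower:.3f}, {upper:.3f})")
```

A wide interval on a small test set is itself a finding: it tells stakeholders how much to trust the headline number.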

How would you communicate your findings to both technical and non-technical stakeholders?

To effectively communicate your findings to technical stakeholders, focus on the methodology, algorithms, performance metrics, and potential improvements. For non-technical stakeholders, simplify complex concepts and explain the relevance of your findings, the impact on the business, and actionable insights in plain language.

Use visual aids, like charts and graphs, to illustrate your results and highlight key takeaways. Tailor your communication style to the audience, and be prepared to answer questions and address concerns that may arise.

How do you choose between different machine learning models to solve a particular problem?

When choosing between different machine learning models, first assess the nature of the problem and the data available to identify suitable candidate models. Evaluate models based on their performance, interpretability, complexity, and scalability, using relevant metrics and techniques such as cross-validation, AIC, BIC, or learning curves.

Consider the trade-offs between model accuracy, interpretability, and computation time, and choose a model that best aligns with the problem requirements, project constraints, and stakeholders’ expectations.

Keep in mind that it’s often beneficial to try several models and ensemble methods to see which one performs best for the specific problem at hand.
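To illustrate how AIC and BIC penalize complexity, here is a small sketch for least-squares models under a Gaussian error assumption, where (up to an additive constant) AIC = n·ln(RSS/n) + 2k and BIC = n·ln(RSS/n) + k·ln(n). The residual sums of squares, parameter counts, and sample size below are invented for illustration, and `gaussian_aic_bic` is a hypothetical helper.

```python
import math


def gaussian_aic_bic(rss, n_obs, n_params):
    """Return (AIC, BIC) for a least-squares fit, up to a shared additive constant."""
    fit_term = n_obs * math.log(rss / n_obs)       # rewards lower residual error
    aic = fit_term + 2 * n_params                  # fixed penalty per parameter
    bic = fit_term + n_params * math.log(n_obs)    # penalty grows with sample size
    return aic, bic


# Hypothetical candidates: (name, residual sum of squares, fitted parameters).
N_OBS = 50
candidates = [
    ("linear", 120.0, 2),
    ("quadratic", 95.0, 3),
    ("degree-6 polynomial", 93.0, 7),
]

scores = {name: gaussian_aic_bic(rss, N_OBS, k) for name, rss, k in candidates}
best_by_aic = min(scores, key=lambda name: scores[name][0])
best_by_bic = min(scores, key=lambda name: scores[name][1])

for name, (aic, bic) in scores.items():
    print(f"{name}: AIC={aic:.1f}, BIC={bic:.1f}")
print("lowest AIC:", best_by_aic, "| lowest BIC:", best_by_bic)
```

In this toy setup the degree-6 polynomial fits slightly better than the quadratic, but not by enough to justify four extra parameters, so both criteria prefer the simpler model.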
