Published by Robert Bruce on August 8, 2024. Revised on August 12, 2024.

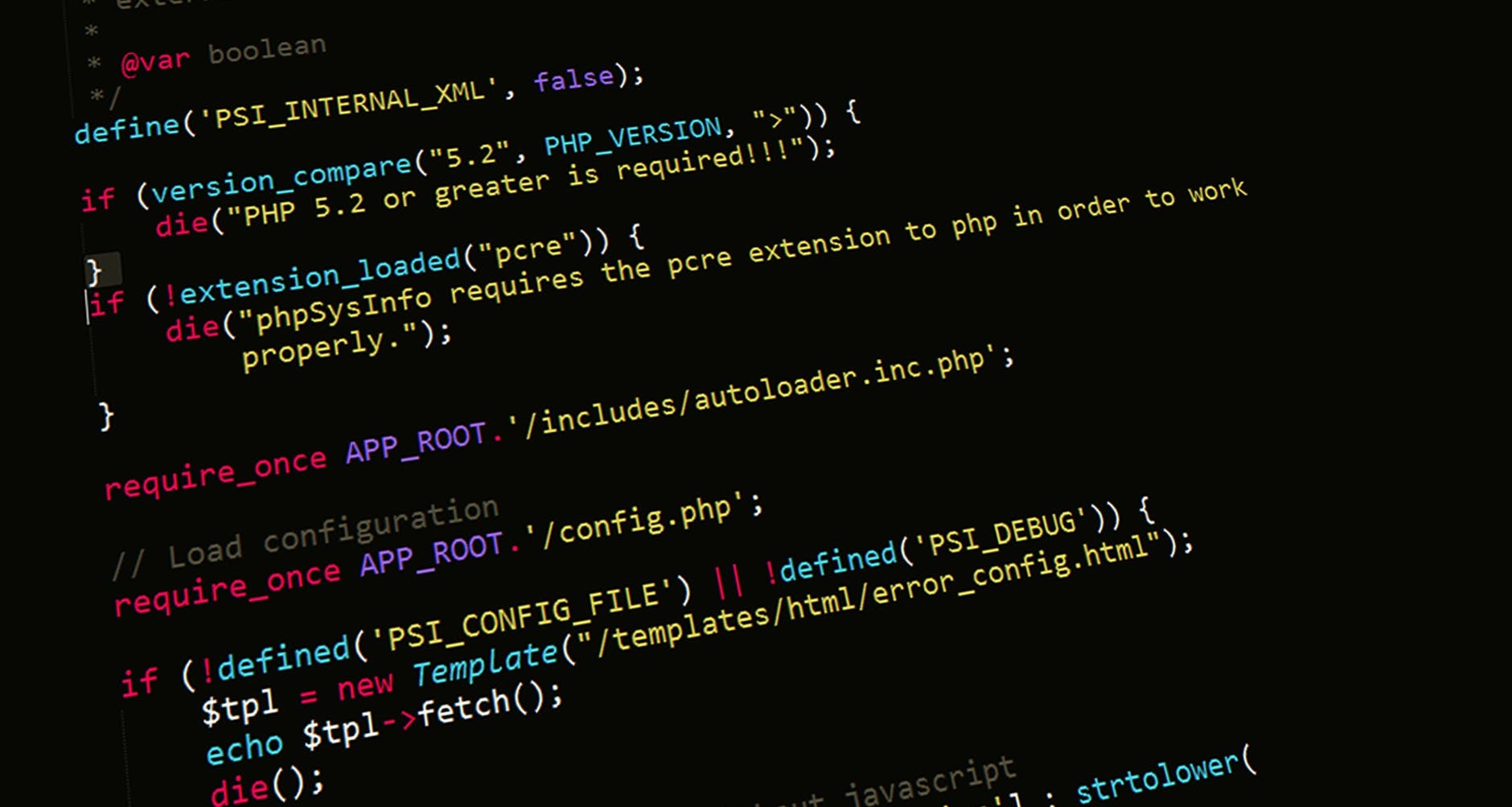

Computer Science Research Topics

The dynamic discipline of computer science drives innovation and technological progress in many areas, including education. Its importance is vast, as it forms the foundation of the modern digital world we live in.

Choosing a computer science research topic is an important step toward completing a thesis or dissertation. Working on the research topics in this article can help students better understand theoretical ideas and gain hands-on experience applying those ideas to create original solutions.

Our comprehensive lists cover a wide range of computer science areas and are designed to help students select meaningful and relevant dissertation topics. All of these topics have been chosen by our team of highly qualified dissertation experts, taking into account both previous research findings and gaps in the field of computer science.

Computer Science Teacher/Professor Research Topics

- The impact of collaborative learning tools on computer science student engagement

- Evaluating the effectiveness of online and traditional computer science courses

- Identifying opportunities and difficulties in incorporating artificial intelligence into the computer science curriculum

- Exploring gamification as a means to improve learning outcomes in computer science education

- How peer instruction helps students perform better in programming courses

Computer Science Research Ideas

- Study of the implications of quantum computing for cryptographic algorithms

- Analysing artificial intelligence methods for real-time fraud detection in financial systems

- Enhancing cybersecurity measures for IoT networks using blockchain technology

- Assessing the efficiency of transfer learning in natural language processing

- Devising privacy-preserving data mining methods for cloud computing environments

Computer Science Thesis Topics

- Examining Artificial Intelligence’s Effect on the Safety of Autonomous Vehicles

- Investigating Deep Learning Models for Diagnostic Imaging in Medicine

- Examining Blockchain’s Potential for Secure Voting Systems

- Improving Cybersecurity with State-of-the-Art Intrusion Detection Technologies

- Comparing Quantum Algorithms’ Effectiveness in Solving Complex Problems

Computer Science Dissertation Topics

- Evaluating Big Data Analytics’ Effect on Business Intelligence Approaches

- Understanding Machine Learning’s Function in Customized Healthcare Systems

- Examining Blockchain’s Potential to Improve Data Security and Privacy

- Improving the User Experience with Cutting-Edge Human-Computer Interaction Strategies

- Assessing Cloud Computing Architectures’ Scalability for High-Demand Uses

Computer Science Topic Examples

- Studying the Potential of AI to Enhance Medical Diagnostics and Therapy

- Examining Cyber-Physical System Applications and Integration Methods

- Exploring Obstacles and Prospects in the Creation of Self-Driving Cars

- Analyzing Artificial Intelligence’s Social Impact and Ethical Consequences

- Building and Evaluating Interactive Virtual Reality User Experiences

Computer Security Research Topics

- Examining Methods for Detecting and Preventing Phishing Attacks in Digital Communications

- Improving Intrusion Detection System Security in Networks

- Cryptographic Protocol Development and Evaluation for Safe Data Transmission

- Evaluating Security Limitations and Possible Solutions in Mobile Computing Settings

- Vulnerability Analysis and Mitigation for Smart Contract Implementations

Cloud Computing Research Topics

- Examining the Security of Cloud Computing: Recognizing Risks and Creating Countermeasures

- Optimizing Resource Distribution Plans in Cloud-Based Environments

- Investigating Cloud-Based Options to Improve Big Data Analytics

- Examining the Effects of Cloud Computing on Enterprise IT Infrastructure

- Formulating and Measuring Optimal Load Distribution Methods for Cloud Computing Services

Computational Biology Research Topics

- Complex Biological System Modeling and Simulation for Predictive Insights

- Implementing Bioinformatics Algorithms for DNA Sequence Analysis

- Predictive Genomics Using Machine Learning Techniques

- Investigating Computational Methods to Accelerate Drug Discovery

- Examining Protein-Protein Interactions Using State-of-the-Art Computational Techniques

Computational Chemistry Research Topics

- Investigating Quantum Chemistry: Computational Techniques and Their Uses

- Molecular Dynamics Models for Examining Chemical Processes

- Using Computational Methods to Advance Materials Science

- Chemical Property Prediction Using Machine Learning Methods

- Evaluating Computational Chemistry’s Contribution to Drug Development and Design

Computational Mathematics Research Topics

- Establishing Numerical Techniques to Solve Partial Differential Equations Effectively

- Investigating Computational Methods in Algebraic Geometry

- Formulating Mathematical Frameworks to Examine Complex System Behavior

- Examining Computational Number Theory’s Use in Contemporary Mathematics

Computational Physics Research Topics

- Comparing Methodologies and Applications for Quantum System Simulation

- Advancing Computational Fluid Dynamics: Methodologies and Real-World Uses

- Simulating and Modeling Phenomena in Solid-State Physics

- Utilizing High-Performance Computing in Astrophysical Research

- Handling Statistical Physics Problems with Computational Approaches

Computational Neuroscience Research Topics

- Investigating the modelling of neural networks using machine learning techniques

- Analysing brain imaging data using computational methods

- Research into the role of computer modelling in understanding cognitive processes

- Simulating synaptic plasticity and learning mechanisms in neural networks

- Advances in the development of brain-computer interfaces through computational approaches

Computer Engineering Research Topics

- Design and implementation of low-power VLSI circuits for energy efficiency

- Advanced embedded systems: design techniques and optimisation strategies

- Exploring the latest advances in microprocessor architecture

- Development and implementation of fault-tolerant systems for increased reliability

- Implementation of real-time operating systems: Challenges and solutions

Computer Graphics Research Topics

- Exploring real-time rendering techniques for interactive graphics

- Comparative study of the advances in 3D modelling and animation technology

- Applications of augmented reality in entertainment and education

- Procedural generation techniques for the creation of virtual environments

- The impact of GPU computing on modern graphics applications

Computer Forensics Research Topics

- Developing advanced techniques for collecting and analysing digital evidence

- Using machine learning to analyse patterns in cybercrime

- Performing forensic analyses of data in cloud-based environments

- Creating and improving tools for network forensics

- Exploring legal and ethical considerations in computer forensics

Computer Hardware Research Topics

- Design and optimisation of energy-efficient computing units for high-performance computers

- Integration of quantum computer components into conventional hardware systems

- Advances in neuromorphic computer hardware for artificial intelligence applications

- Development of reliable hardware solutions for edge computing in IoT environments

- High-density interconnects and packaging techniques for future semiconductor devices

Computer Programming Research Topics

- Design and implementation of new programming languages for high-performance computing: challenges and solutions

- Advances in automated testing tools and their impact on the software development lifecycle

- The impact of functional programming paradigms on the design and architecture of modern software

- Comparative analysis of concurrent and parallel programming models: Performance, scalability and usability

Computer Networking Research Topics

- Advances in wireless communication technologies

- Development of secure protocols for Internet of Things (IoT) networks

- Optimising network performance with software-defined networking (SDN)

- The role of 5G in the design of future communication systems

How to choose a topic in computer science

To choose a computer science topic, students should first identify their interests, then research current trends and the resources available to them. They can also seek advice from subject specialists to make sure the topic has a clear scope.

How Research Prospect Can Help Students with Computer Science Topics and the Dissertation Process

At Research Prospect, we support computer science students throughout the dissertation process. From choosing research topics and drafting research proposals to conducting literature reviews and analysing data, our experts aim to deliver high-quality dissertations.

Research Topics & Ideas: CompSci & IT

50+ Computer Science Research Topic Ideas To Fast-Track Your Project

Finding and choosing a strong research topic is the critical first step when it comes to crafting a high-quality dissertation, thesis or research project. If you’ve landed on this post, chances are you’re looking for a computer science-related research topic but aren’t sure where to start. Here, we’ll explore a variety of CompSci & IT-related research ideas and topic thought-starters, including algorithms, AI, networking, database systems, UX, information security and software engineering.

NB – This is just the start…

The topic ideation and evaluation process has multiple steps. In this post, we’ll kickstart the process by sharing some research topic ideas within the CompSci domain. This is the starting point, but to develop a well-defined research topic, you’ll need to identify a clear and convincing research gap, along with a well-justified plan of action to fill that gap.

If you’re new to the oftentimes perplexing world of research, or if this is your first time undertaking a formal academic research project, be sure to check out our free dissertation mini-course. In it, we cover the process of writing a dissertation or thesis from start to end. Be sure to also sign up for our free webinar that explores how to find a high-quality research topic.

Overview: CompSci Research Topics

- Algorithms & data structures

- Artificial intelligence (AI)

- Computer networking

- Database systems

- Human-computer interaction

- Information security (IS)

- Software engineering

- Examples of CompSci dissertations & theses

Topics/Ideas: Algorithms & Data Structures

- An analysis of neural network algorithms’ accuracy for processing consumer purchase patterns

- A systematic review of the impact of graph algorithms on data analysis and discovery in social media network analysis

- An evaluation of machine learning algorithms used for recommender systems in streaming services

- A review of approximation algorithm approaches for solving NP-hard problems

- An analysis of parallel algorithms for high-performance computing of genomic data

- The influence of data structures on optimal algorithm design and performance in Fintech

- A survey of algorithms applied in Internet of Things (IoT) systems in supply chain management

- A comparison of streaming algorithm performance for the detection of elephant flows

- A systematic review and evaluation of machine learning algorithms used in facial pattern recognition

- Exploring the performance of a decision tree-based approach for optimizing stock purchase decisions

- Assessing the importance of complete and representative training datasets in agricultural machine learning-based decision-making

- A comparison of deep learning algorithm performance on structured and unstructured datasets with “rare cases”

- A systematic review of noise reduction best practices for machine learning algorithms in geoinformatics

- Exploring the feasibility of applying information theory to feature extraction in retail datasets

- Assessing the use case of neural network algorithms for image analysis in biodiversity assessment

Topics & Ideas: Artificial Intelligence (AI)

- Applying deep learning algorithms for speech recognition in speech-impaired children

- A review of the impact of artificial intelligence on decision-making processes in stock valuation

- An evaluation of reinforcement learning algorithms used in the production of video games

- An exploration of key developments in natural language processing and how they impacted the evolution of chatbots

- An analysis of the ethical and social implications of artificial intelligence-based automated marking

- The influence of large-scale GIS datasets on artificial intelligence and machine learning developments

- An examination of the use of artificial intelligence in orthopaedic surgery

- The impact of explainable artificial intelligence (XAI) on transparency and trust in supply chain management

- An evaluation of the role of artificial intelligence in financial forecasting and risk management in cryptocurrency

- A meta-analysis of deep learning algorithm performance in predicting cyber attacks in schools

Topics & Ideas: Networking

- An analysis of the impact of 5G technology on internet penetration in rural Tanzania

- Assessing the role of software-defined networking (SDN) in modern cloud-based computing

- A critical analysis of network security and privacy concerns associated with Industry 4.0 investment in healthcare.

- Exploring the influence of cloud computing on security risks in fintech.

- An examination of the use of network function virtualization (NFV) in telecom networks in South America

- Assessing the impact of edge computing on network architecture and design in IoT-based manufacturing

- An evaluation of the challenges and opportunities in 6G wireless network adoption

- The role of network congestion control algorithms in improving network performance on streaming platforms

- An analysis of network coding-based approaches for data security

- Assessing the impact of network topology on network performance and reliability in IoT-based workspaces

Topics & Ideas: Database Systems

- An analysis of big data management systems and technologies used in B2B marketing

- The impact of NoSQL databases on data management and analysis in smart cities

- An evaluation of the security and privacy concerns of cloud-based databases in financial organisations

- Exploring the role of data warehousing and business intelligence in global consultancies

- An analysis of the use of graph databases for data modelling and analysis in recommendation systems

- The influence of the Internet of Things (IoT) on database design and management in the retail grocery industry

- An examination of the challenges and opportunities of distributed databases in supply chain management

- Assessing the impact of data compression algorithms on database performance and scalability in cloud computing

- An evaluation of the use of in-memory databases for real-time data processing in patient monitoring

- Comparing the effects of database tuning and optimization approaches in improving database performance and efficiency in omnichannel retailing

Topics & Ideas: Human-Computer Interaction

- An analysis of the impact of mobile technology on human-computer interaction prevalence in adolescent men

- An exploration of how artificial intelligence is changing human-computer interaction patterns in children

- An evaluation of the usability and accessibility of web-based systems for CRM in the fast fashion retail sector

- Assessing the influence of virtual and augmented reality on consumer purchasing patterns

- An examination of the use of gesture-based interfaces in architecture

- Exploring the impact of ease of use in wearable technology on geriatric users

- Evaluating the ramifications of gamification in the Metaverse

- A systematic review of user experience (UX) design advances associated with Augmented Reality

- Comparing end-user perceptions of natural language processing algorithms for automated customer response

- Analysing the impact of voice-based interfaces on purchase practices in the fast food industry

Topics & Ideas: Information Security

- A bibliometric review of current trends in cryptography for secure communication

- An analysis of secure multi-party computation protocols and their applications in cloud-based computing

- An investigation of the security of blockchain technology in patient health record tracking

- A comparative study of symmetric and asymmetric encryption algorithms for instant text messaging

- A systematic review of secure data storage solutions used for cloud computing in the fintech industry

- An analysis of intrusion detection and prevention systems used in the healthcare sector

- Assessing security best practices for IoT devices in political offices

- An investigation into the role social media played in shifting regulations related to privacy and the protection of personal data

- A comparative study of digital signature schemes adoption in property transfers

- An assessment of the security of wireless communication systems used in tertiary institutions

Topics & Ideas: Software Engineering

- A study of agile software development methodologies and their impact on project success in pharmacology

- Investigating the impacts of software refactoring techniques and tools in blockchain-based developments

- A study of the impact of DevOps practices on software development and delivery in the healthcare sector

- An analysis of software architecture patterns and their impact on the maintainability and scalability of cloud-based offerings

- A study of the impact of artificial intelligence and machine learning on software engineering practices in the education sector

- An investigation of software testing techniques and methodologies for subscription-based offerings

- A review of software security practices and techniques for protecting against phishing attacks from social media

- An analysis of the impact of cloud computing on the rate of software development and deployment in the manufacturing sector

- Exploring the impact of software development outsourcing on project success in multinational contexts

- An investigation into the effect of poor software documentation on app success in the retail sector

CompSci & IT Dissertations/Theses

While the ideas we’ve presented above are a decent starting point for finding a CompSci-related research topic, they are fairly generic and non-specific. So, it helps to look at actual dissertations and theses to see how this all comes together.

Below, we’ve included a selection of research projects from various CompSci-related degree programs to help refine your thinking. These are actual dissertations and theses, written as part of Master’s and PhD-level programs, so they can provide some useful insight as to what a research topic looks like in practice.

- An array-based optimization framework for query processing and data analytics (Chen, 2021)

- Dynamic Object Partitioning and replication for cooperative cache (Asad, 2021)

- Embedding constructural documentation in unit tests (Nassif, 2019)

- PLASA | Programming Language for Synchronous Agents (Kilaru, 2019)

- Healthcare Data Authentication using Deep Neural Network (Sekar, 2020)

- Virtual Reality System for Planetary Surface Visualization and Analysis (Quach, 2019)

- Artificial neural networks to predict share prices on the Johannesburg stock exchange (Pyon, 2021)

- Predicting household poverty with machine learning methods: the case of Malawi (Chinyama, 2022)

- Investigating user experience and bias mitigation of the multi-modal retrieval of historical data (Singh, 2021)

- Detection of HTTPS malware traffic without decryption (Nyathi, 2022)

- Redefining privacy: case study of smart health applications (Al-Zyoud, 2019)

- A state-based approach to context modeling and computing (Yue, 2019)

- A Novel Cooperative Intrusion Detection System for Mobile Ad Hoc Networks (Solomon, 2019)

- HRSB-Tree for Spatio-Temporal Aggregates over Moving Regions (Paduri, 2019)

Looking at these titles, you can probably pick up that the research topics here are quite specific and narrowly focused, compared to the generic ones presented earlier. This is an important thing to keep in mind as you develop your own research topic. That is to say, to create a top-notch research topic, you must be precise and target a specific context with specific variables of interest. In other words, you need to identify a clear, well-justified research gap.

Fast-Track Your Research Topic

If you’re still feeling a bit unsure about how to find a research topic for your Computer Science dissertation or research project, check out our Topic Kickstarter service.

100 Great Computer Science Research Topic Ideas for 2023

Being a computer science student in 2023 is not easy. Besides studying a constantly evolving subject, you have to come up with great computer science research topics at some point in your academic life. If you’re reading this article, you’re among the many students who have come to this realization.

- Interesting Computer Science Topics

- Awesome Research Topics in Computer Science

- Hot Topics in Computer Science

- Topics to Publish in a Computer Science Journal

- Controversial Topics in Computer Science

- Fun AP Computer Science Topics

- Exciting Computer Science Ph.D. Topics

- Remarkable Computer Science Research Topics for Undergraduates

- Incredible Final Year Computer Science Project Topics

- Advanced Computer Science Topics

- Unique Seminar Topics for Computer Science

- Exceptional Computer Science Master’s Thesis Topics

- Outstanding Computer Science Presentation Topics

- Key Computer Science Essay Topics

- Main Project Topics for Computer Science

- We Can Help You with Computer Science Topics

Whether you’re earnestly searching for a topic or stumbled onto this article by accident, there is no doubt that every student needs excellent computer science-related topics for their paper. A good topic will not only give your essay or research a good direction but will also make it easy to come up with supporting points. Your topic should show all your strengths as well.

Fortunately, this article is for every student who finds it hard to come up with a suitable computer science topic. The following 100+ topics should give you some inspiration when choosing your own. Let’s get into it.

One of the best ways of making your research paper interesting is by coming up with relevant topics in computer science. Here are some topics that will make your paper immersive:

- Evolution of virtual reality

- What is green cloud computing

- Ways of creating a Hopfield neural network in C++

- Developments in graphic systems in computers

- The five principal fields in robotics

- Developments and applications of nanotechnology

- Differences between computer science and applied computing

Your next research topic in computer science shouldn’t be tough to find once you’ve read this section. If you’re looking for simple final year project topics in computer science, you can find some below.

- Applications of the blockchain technology in the banking industry

- Computational thinking and how it influences science

- Ways of preventing phishing

- Uses of artificial intelligence in cyber security

- Define the concepts of a smart city

- Applications of the Internet of Things

- Discuss the applications of face detection

Whenever a topic is described as “hot,” it means that it is trending in computer science. If you’re looking for final year computer science project topics, have a look at some below:

- Applications of the Metaverse in the world today

- Discuss the challenges of machine learning

- Advantages of artificial intelligence

- Applications of nanotechnology in the paint industry

- What is quantum computing?

- Discuss the languages of parallel computing

- What are the applications of computer-assisted studies?

Perhaps you’d like to write a paper that will get published in a journal. If you’re searching for the best project topics for computer science students that will stand out in a journal, check below:

- Developments in human-computer interaction

- Applications of computer science in medicine

- Developments in artificial intelligence in image processing

- Discuss cryptography and its applications

- Discuss methods of ransomware prevention

- Applications of Big Data in the banking industry

- Challenges of cloud storage services in 2023

Controversial Topics in Computer Science

Some of the best computer science final year project topics are those that elicit debates or require you to take a stand. You can find such topics listed below for your inspiration:

- Can robots be too intelligent?

- Should the dark web be shut down?

- Should your data be sold to corporations?

- Will robots completely replace the human workforce one day?

- How safe is the Metaverse for children?

- Will artificial intelligence replace actors in Hollywood?

- Are social media platforms safe anymore?

Are you a computer science student looking for AP topics? You’re in luck because the following topics are suitable for you.

- Standard browser core with CSS support

- Applications of the Gaussian method in C++ development in integrating functions

- Vital conditions of reducing risk through the Newton method

- How to reinforce machine learning algorithms.

- How do artificial neural networks function?

- Discuss the advancements in computer languages in machine learning

- Use of artificial intelligence in automated cars

When studying to get your doctorate in computer science, you need clear and relevant topics that generate the reader’s interest. Here are some Ph.D. topics in computer science you might consider:

- Developments in information technology

- Is machine learning detrimental to the human workforce?

- How to write an algorithm for deep learning

- What is the future of 5G in wireless networks

- Statistical data in Maths modules in Python

- Data retention automation from a website using API

- Application of modern programming languages

Looking for computer science topics for research is not easy for an undergraduate. Fortunately, these computer science project topics should make your research paper easy:

- Ways of using artificial intelligence in real estate

- Discuss reinforcement learning and its applications

- Uses of Big Data in science and medicine

- How to implement sorting algorithms in Haskell

- How to create 3D configurations for a website

- Using inverse interpolation to solve non-linear equations

- Explain the similarities between the Internet of Things and artificial intelligence

Your dissertation is one of the most crucial papers you’ll write in your final year. That’s why selecting the right computer science topic is a crucial part of the process. Here are some final year computer science project topics:

- How to incorporate numerical methods in programming

- Applications of blockchain technology in cloud storage

- How to come up with an automated attendance system

- Using dynamic libraries for site development

- How to create cubic splines

- Applications of artificial intelligence in the stock market

- Uses of quantum computing in financial modeling

Your instructor may want you to challenge yourself with an advanced science project. Thus, you may require computer science topics to learn and research. Here are some that may inspire you:

- Discuss the best cryptographic protocols

- Advancement of artificial intelligence used in smartphones

- Briefly discuss the types of security software available

- Application of liquid robots in 2023

- How to address the decoherence problem in quantum computers

- macOS vs. Windows: discuss their similarities and differences

- Explain the steps taken in a cyber security audit

When searching for computer science topics for a seminar, make sure they are based on current research or events. Below are some of the latest research topics in computer science:

- How to reduce cyber-attacks in 2023

- Steps followed in creating a network

- Discuss the uses of data science

- Discuss ways in which social robots improve human interactions

- Differentiate between supervised and unsupervised machine learning

- Applications of robotics in space exploration

- The contrast between cyber-physical and sensor network systems

Are you looking for computer science thesis topics for your upcoming projects? The topics below are meant to help you write your best paper yet:

- Applications of computer science in sports

- Uses of computer technology in the electoral process

- Using Fibonacci search to find the maximum of a function, and its implementation

- Discuss the advantages of using open-source software

- Expound on advances in computer graphics

- Briefly discuss the uses of mesh generation in computational domains

- How much data is generated from the internet of things?

A computer science presentation requires a topic relevant to current events. Whether your paper is an assignment or a dissertation, you can find your final year computer science project topics below:

- Uses of adaptive learning in the financial industry

- Applications of the transitive closure of a graph

- Using rapid application development (RAD) to build software

- Discuss how to compute the maximum flow in a network

- How to design and implement functional mapping

- Using artificial intelligence in courier tracking and deliveries

- How to make an e-authentication system

Key Computer Science Essay Topics

You may be pressed for time and need master's thesis topics in computer science that are easy to research. Below are some topics that fit this description:

- What are the uses of cloud computing in 2023?

- Discuss server-side web technologies

- Compare and contrast Android and iOS

- How to come up with a face detection algorithm

- What is the future of NFTs?

- How to create an artificial intelligence shopping system

- How to make a software piracy prevention algorithm

One major mistake students make when writing their papers is selecting topics unrelated to their field of study. You can avoid this by choosing topics firmly grounded in computer science, such as the ones below:

- Using blockchain to create a supply chain management system

- How to protect a web app from malicious attacks

- Uses of distributed information processing systems

- Advances in crowd communication software since COVID-19

- Uses of artificial intelligence in online casinos

- Discuss the pillars of mathematical computation

- Discuss the ethical concerns arising from data mining

We Can Help You with Computer Science Topics, Essays, Thesis, and Research Papers

We hope this list of computer science topics helps you out of your sticky situation. We also offer topics in other subjects, as well as professional writing services tailor-made for you.

We understand what students go through when searching the internet for computer science research paper topics, and we know that many students don’t know how to write a research paper to perfection. However, you shouldn’t have to go through all this when we’re here to help.

Don’t waste any more time; get in touch with us today and get your paper done to a high standard.


30+ Good Computer Science Research Paper Topics and Ideas

by Antony W

June 6, 2024

We’ve written enough about computer science to know that choosing a research paper topic in the subject isn’t as easy as flipping a light switch. Brainstorming can take an entire afternoon before you come up with something constructive.

However, looking at prewritten topics is a great way to identify an idea to guide your research.

In this post, we give you a list of 30+ research paper topics in computer science to cut down your ideation time. To use it:

- Scan the list.

- Identify the topic that piques your interest.

- Develop your research question.

- Follow our guide to write a research paper.

Key Takeaways

- Computer science is a broad field, so you can come up with an endless number of topics for your research paper.

- With the freedom to choose the topic you want, consider working on a theme that you’ve always wanted to investigate.

- Focusing your research on a trending topic in computer science can be a plus.

- As long as a topic allows you to complete the steps of the research process with ease, work on it.

Computer Science Research Paper Topics

The following are 30+ research topics and ideas from which you can choose a title for your computer science project:

Artificial Intelligence Topics

AI research dates back to the 1950s; in 1958, Frank Rosenblatt introduced the perceptron, one of the first artificial neural networks that could learn from data. Yet AI has never been as prominent as it is right now. Fascinating and equally controversial, AI opens the door to an array of research opportunities, so there are countless topics you can investigate in a project, including the following:

- Write about the efficacy of deep learning algorithms in forecasting and mitigating cyber-attacks within educational institutions.

- Focus on a study of the transformative impact of recent advances in natural language processing.

- Explain Artificial Intelligence’s influence on stock valuation decision-making, making sure you touch on impacts and implications.

- Write a research project on harnessing deep learning for speech recognition in children with speech impairments.

- Focus your paper on an in-depth evaluation of reinforcement learning algorithms in video game development.

- Write a research project that focuses on the integration of artificial intelligence in orthopedic surgery.

- Examine the social implications and ethical considerations of AI-based automated marking systems.

- Artificial Intelligence’s role in cryptocurrency: Evaluating its impact on financial forecasting and risk management

- The confluence of large-scale GIS datasets with AI and machine learning


Data Structure and Algorithms Topics

Topics on data structures and algorithms focus on the storage, retrieval, and efficient use of data. Here are some ideas that you may find interesting for a research project in this area:

- Do an in-depth investigation of the efficacy of deep learning algorithms on structured and unstructured datasets.

- Conduct a comprehensive survey of approximation algorithms for solving NP-hard problems.

- Analyze the performance of decision tree-based approaches in optimizing stock purchasing decisions.

- Do a critical examination of the accuracy of neural network algorithms in processing consumer purchase patterns.

- Explore parallel algorithms for high-performance computing of genomic data.

- Evaluate machine-learning algorithms in facial pattern recognition.

- Examine the applicability of neural network algorithms for image analysis in biodiversity assessment.

- Investigate the impact of data structures on optimal algorithm design and performance in financial technology.

- Write a research paper surveying algorithm applications in Internet of Things (IoT) systems for supply-chain management.

Networking Topics

Networking research focuses on communication between computing devices. Your project can focus on data transmission, data exchange, or data resources, or on narrower areas such as media access control, network topology design, and packet classification. Here are some ideas to get you started with your research:

- Analyzing the influence of 5G technology on rural internet accessibility in Africa

- The significance of network congestion control algorithms in enhancing streaming platform performance

- Evaluating the role of software-defined networking in contemporary cloud-based computing environments

- Examining the impact of network topology on the performance and reliability of Internet of Things systems

- A comprehensive investigation of the integration of network function virtualization in telecommunication networks across South America

- A critical appraisal of network security and privacy challenges amid industry investments in healthcare

- Assessing the influence of edge computing on network architecture and design within the Internet of Things

- Evaluating challenges and opportunities in the adoption of 6G wireless networks

- Exploring the intersection of cloud computing and security risks in the financial technology sector

- An analysis of network coding-based approaches for enhanced data security

Database Topic Ideas

Computer science relies heavily on data to produce information. To be of any value, this data requires efficient and secure management. Because this area is so broad, your database research topic can be anything you find fascinating to explore. Below are some ideas to get started:

- Examining big data management systems and technologies in business-to-business marketing

- Assessing the use of in-memory databases for real-time data processing in patient monitoring

- An analytical study on the implementation of graph databases for data modeling and analysis in recommendation systems

- Understanding the impact of NoSQL databases on data management and analysis within smart cities

- The evolving dynamics of database design and management in the retail grocery industry under the influence of the Internet of Things

- Evaluating the effects of data compression algorithms on database performance and scalability in cloud computing environments

- An in-depth examination of the challenges and opportunities presented by distributed databases in supply chain management

- Addressing security and privacy concerns of cloud-based databases in financial organizations

- Comparative analysis of database tuning and optimization approaches for enhancing efficiency in omnichannel retailing

- Exploring the nexus of data warehousing and business intelligence in the landscape of global consultancies


About the author

Antony W is a professional writer and coach at Help for Assessment. He spends countless hours every day researching and writing great content filled with expert advice on how to write engaging essays, research papers, and assignments.


Computer science deals with the theory and practice of algorithms, from idealized mathematical procedures to the computer systems deployed by major tech companies to answer billions of user requests per day.

Primary subareas of this field include: theory, which uses rigorous math to test algorithms’ applicability to certain problems; systems, which develops the underlying hardware and software upon which applications can be implemented; and human-computer interaction, which studies how to make computer systems more effectively meet the needs of real people. The products of all three subareas are applied across science, engineering, medicine, and the social sciences. Computer science drives interdisciplinary collaboration both across MIT and beyond, helping users address the critical societal problems of our era, including opportunity access, climate change, disease, inequality and polarization.

Research areas

AI for Healthcare and Life Sciences

Our goal is to develop AI technologies that will change the landscape of healthcare. This includes early diagnostics, drug discovery, care personalization and management. Building on MIT’s pioneering history in artificial intelligence and life sciences, we are working on algorithms suitable for modeling biological and clinical data across a range of modalities including imaging, text and genomics.

Artificial Intelligence and Machine Learning

Our research covers a wide range of topics of this fast-evolving field, advancing how machines learn, predict, and control, while also making them secure, robust and trustworthy. Research covers both the theory and applications of ML. This broad area studies ML theory (algorithms, optimization, …), statistical learning (inference, graphical models, causal analysis, …), deep learning, reinforcement learning, symbolic reasoning, ML systems, as well as diverse hardware implementations of ML.

Communications Systems

We develop the next generation of wired and wireless communications systems, from new physical principles (e.g., light, terahertz waves) to coding and information theory, and everything in between.

Computational Fabrication and Manufacturing

We bring some of the most powerful tools in computation to bear on design problems, including modeling, simulation, processing and fabrication.

Computer Architecture

We design the next generation of computer systems. Working at the intersection of hardware and software, our research studies how to best implement computation in the physical world. We design processors that are faster, more efficient, easier to program, and secure. Our research covers systems of all scales, from tiny Internet-of-Things devices with ultra-low-power consumption to high-performance servers and datacenters that power planet-scale online services. We design both general-purpose processors and accelerators that are specialized to particular application domains, like machine learning and storage. We also design Electronic Design Automation (EDA) tools to facilitate the development of such systems.

Educational Technology

Educational technology combines both hardware and software to enact global change, making education accessible in unprecedented ways to new audiences. We develop the technology that makes better understanding possible.

Graphics and Vision

The shared mission of Visual Computing is to connect images and computation, spanning topics such as image and video generation and analysis, photography, human perception, touch, applied geometry, and more.

Human-Computer Interaction

The focus of our research in Human-Computer Interaction (HCI) is inventing new systems and technology that lie at the interface between people and computation, and understanding their design, implementation, and societal impact.

Programming Languages and Software Engineering

We develop new approaches to programming, whether that takes the form of programming languages, tools, or methodologies to improve many aspects of applications and systems infrastructure.

Quantum Computing, Communication, and Sensing

Our work focuses on developing the next substrate of computing, communication and sensing. We work all the way from new materials to superconducting devices to quantum computers to theory.

Robotics

Our research focuses on robotic hardware and algorithms, from sensing to control to perception to manipulation.

Security and Cryptography

Our research is focused on making future computer systems more secure. We bring together a broad spectrum of cross-cutting techniques for security, from theoretical cryptography and programming-language ideas, to low-level hardware and operating-systems security, to overall system designs and empirical bug-finding. We apply these techniques to a wide range of application domains, such as blockchains, cloud systems, Internet privacy, machine learning, and IoT devices, reflecting the growing importance of security in many contexts.

Systems and Networking

From distributed systems and databases to wireless, the research conducted by the systems and networking group aims to improve the performance, robustness, and ease of management of networks and computing systems.

Theory of Computation

Theory of Computation (TOC) studies the fundamental strengths and limits of computation, how these strengths and limits interact with computer science and mathematics, and how they manifest themselves in society, biology, and the physical world.

Latest news

Enhancing LLM collaboration for smarter, more efficient solutions

“Co-LLM” algorithm helps a general-purpose AI model collaborate with an expert large language model by combining the best parts of both answers, leading to more factual responses.

Method prevents an AI model from being overconfident about wrong answers

More efficient than other approaches, the “Thermometer” technique could help someone know when they should trust a large language model.

A fast and flexible approach to help doctors annotate medical scans

“ScribblePrompt” is an interactive AI framework that can efficiently highlight anatomical structures across different medical scans, assisting medical workers to delineate regions of interest and abnormalities.

Student Spotlight: Krithik Ramesh

Today’s Student Spotlight focuses on Krithik Ramesh, a member of the class of 2025 majoring in 6-4, Artificial Intelligence and Decision-Making.

3Qs: Dirk Englund on the quantum computing track within 6-5, “Electrical Engineering With Computing”.

In the new undergraduate engineering sequence in quantum engineering, students learn the foundations of the quantum computing “stack” before creating their own quantum engineered systems in the lab.

Dirk Englund, Associate Professor in EECS, has been part of a team of instructors developing the quantum course sequence.

Upcoming events

Capital One – Tech Transformation; OpenAI Tech Talk and Recruiting


Computer science articles within Nature

News | 20 September 2024

Do AI models produce more original ideas than researchers?

The concepts were judged by reviewers who were not told who or what had created them.

- Gemma Conroy

Technology Feature | 16 September 2024

Forget ChatGPT: why researchers now run small AIs on their laptops

Artificial-intelligence models are typically used online, but a host of openly available tools is changing that. Here’s how to get started with local AIs.

- Matthew Hutson

Career Guide | 04 September 2024

Guide, don’t hide: reprogramming learning in the wake of AI

As artificial intelligence becomes increasingly integral to the world outside academia, universities face a crucial choice: to use or not to use.

- Monique Brouillette

News Feature | 04 September 2024

A day in the life of the world’s fastest supercomputer

In the hills of eastern Tennessee, a record-breaking machine called Frontier is providing scientists with unprecedented opportunities to study everything from atoms to galaxies.

- Sophia Chen

Research Briefing | 03 September 2024

Holistic approach to carbon capture bridges the ‘Valley of Death’

Carbon-capture technology often founders at the point when basic research is translated into practical applications. A computational modelling platform called PrISMa solves this problem by considering the needs of all stakeholders.

Article 28 August 2024 | Open Access

AI generates covertly racist decisions about people based on their dialect

Despite efforts to remove overt racial prejudice, language models using artificial intelligence still show covert racism against speakers of African American English that is triggered by features of the dialect.

- Valentin Hofmann, Pratyusha Ria Kalluri & Sharese King

News | 23 August 2024

Science treasures from Microsoft mogul up for auction — and researchers are salivating

Spacesuits, historic computers and more from the estate of the late Paul Allen are going on sale.

- Alix Soliman

News | 22 August 2024

AI made of jelly ‘learns’ to play Pong — and improves with practice

Inspired by neurons in a dish playing the classic video game, researchers show that synthetic hydrogels have a basic ‘memory’.

Comment | 21 August 2024

Light bulbs have energy ratings — so why can’t AI chatbots?

The rising energy and environmental cost of the artificial-intelligence boom is fuelling concern. Green policy mechanisms that already exist offer a path towards a solution.

- Sasha Luccioni, Boris Gamazaychikov & Carole-Jean Wu

News & Views | 21 August 2024

Switching between tasks can cause AI to lose the ability to learn

Artificial neural networks become incapable of mastering new skills when they learn them one after the other. Researchers have only scratched the surface of why this phenomenon occurs — and how it can be fixed.

- & Razvan Pascanu

Article 21 August 2024 | Open Access

Loss of plasticity in deep continual learning

The pervasive problem of artificial neural networks losing plasticity in continual-learning settings is demonstrated and a simple solution called the continual backpropagation algorithm is described to prevent this issue.

- Shibhansh Dohare, J. Fernando Hernandez-Garcia & Richard S. Sutton

Nature Video | 09 August 2024

Why ChatGPT can't handle some languages

A test of the chatbot's language abilities shows that it fails at certain languages.

- Nick Petrić Howe

Nature Podcast | 09 August 2024

ChatGPT has a language problem — but science can fix it

The large language models that power chatbots are known to struggle in languages other than English; this podcast explores how this challenge can be overcome.

Article 07 August 2024 | Open Access

Fully forward mode training for optical neural networks

We present fully forward mode learning, which conducts machine learning operations on site, leading to faster learning and promoting advancement in numerous fields.

- , Tiankuang Zhou

- & Lu Fang

Technology Feature | 05 August 2024

Quantum computing aims for diversity, one qubit at a time

The fast-growing discipline needs more scientists from under-represented groups. A raft of initiatives is rising to the challenge.

- Amanda Heidt

Career Feature | 05 August 2024

Slow productivity worked for Marie Curie — here’s why you should adopt it, too

Do fewer things, work at a natural pace and obsess over quality, says computer scientist Cal Newport, in his latest time-management book.

- Anne Gulland

Outlook | 25 July 2024

AI is vulnerable to attack. Can it ever be used safely?

The models that underpin artificial-intelligence systems such as ChatGPT can be subject to attacks that elicit harmful behaviour. Making them safe will not be easy.

- Simon Makin

News & Views | 24 July 2024

AI produces gibberish when trained on too much AI-generated data

Generative AI models are now widely accessible, enabling everyone to create their own machine-made something. But these models can collapse if their training data sets contain too much AI-generated content.

- Emily Wenger

Article 24 July 2024 | Open Access

AI models collapse when trained on recursively generated data

Analysis shows that indiscriminately training generative artificial intelligence on real and generated content, usually done by scraping data from the Internet, can lead to a collapse in the ability of the models to generate diverse high-quality output.

- Ilia Shumailov, Zakhar Shumaylov & Yarin Gal

Technology Feature | 22 July 2024

ChatGPT for science: how to talk to your data

Companies are using artificial intelligence tools to help scientists to query their data without the need for programming skills.

- Julian Nowogrodzki

News | 08 July 2024

Can AI be superhuman? Flaws in top gaming bot cast doubt

Building robust AI systems that always outperform people might be harder than thought, say researchers who studied Go-playing bots.

Technology Feature | 03 July 2024

Inside the maths that drives AI

Loss functions measure algorithmic errors in artificial-intelligence models, but there’s more than one way to do that. Here’s why the right function is so important.

- Michael Brooks
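The feature's point that there is more than one way to measure a model's error can be illustrated with a minimal Python sketch. This is our own example, not taken from the article. It compares two standard regression losses, mean squared error (MSE) and mean absolute error (MAE), on the same targets; the two "models" and all values are hypothetical.

```python
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    # Both losses need paired, non-empty inputs.
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average squared error: large mistakes are penalized quadratically."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average absolute error: every unit of error counts the same."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


targets = [1.0, 2.0, 3.0, 4.0]
model_a = [2.0, 3.0, 4.0, 5.0]  # hypothetical model: off by 1 on every point
model_b = [1.0, 2.0, 3.0, 8.0]  # hypothetical model: exact except one error of 4

for name, preds in (("A", model_a), ("B", model_b)):
    print(name, mean_squared_error(targets, preds), mean_absolute_error(targets, preds))
```

MAE scores both hypothetical models the same (1.0). MSE rates model B four times worse (4.0 vs 1.0) because it squares the single large error. Which model looks "better" therefore depends on the loss you choose.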

World View | 26 June 2024

How I’m using AI tools to help universities maximize research impacts

Artificial-intelligence algorithms could identify scientists who need support with translating their work into real-world applications and more. Leaders must step up.

- Dashun Wang

News & Views | 19 June 2024

‘Fighting fire with fire’ — using LLMs to combat LLM hallucinations

The number of errors produced by an LLM can be reduced by grouping its outputs into semantically similar clusters. Remarkably, this task can be performed by a second LLM, and the method’s efficacy can be evaluated by a third.

- Karin Verspoor

Article 19 June 2024 | Open Access

Detecting hallucinations in large language models using semantic entropy

Hallucinations (confabulations) in large language model systems can be tackled by measuring uncertainty about the meanings of generated responses rather than the text itself to improve question-answering accuracy.

- Sebastian Farquhar, Jannik Kossen
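The idea of measuring uncertainty over meanings rather than over the text itself can be sketched in a few lines of Python. This is a simplified illustration of a discrete, count-based variant, not the authors' implementation. It samples several answers, groups them into clusters of equivalent meaning, and computes the entropy of the cluster frequencies. The `same_meaning` check is a hypothetical stand-in that uses normalized string comparison. The published method instead judges equivalence with a language model that checks entailment in both directions, which this sketch does not implement.

```python
import math
from typing import Callable, List


def same_meaning(a: str, b: str) -> bool:
    """Hypothetical stand-in for a semantic-equivalence check.

    Two answers count as equivalent if they match after lowercasing and
    trimming spaces and full stops. A real system would need a model-based
    test (for example, checking entailment in both directions).
    """
    def normalize(s: str) -> str:
        return s.lower().strip(" .")

    return normalize(a) == normalize(b)


def cluster_by_meaning(
    answers: List[str], equivalent: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily put each answer into the first cluster whose first member it matches."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if equivalent(answer, cluster[0]):
                cluster.append(answer)
                break
        else:  # no existing cluster matched, so start a new one
            clusters.append([answer])
    return clusters


def discrete_semantic_entropy(
    answers: List[str], equivalent: Callable[[str, str], bool] = same_meaning
) -> float:
    """Entropy (in nats) of how often each meaning cluster was sampled."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    n = len(answers)
    probs = [len(c) / n for c in cluster_by_meaning(answers, equivalent)]
    return -sum(p * math.log(p) for p in probs)


samples = ["Paris", "paris.", "Paris", "Lyon"]  # hypothetical sampled answers
print(discrete_semantic_entropy(samples))                       # grouped by meaning
print(discrete_semantic_entropy(samples, lambda a, b: a == b))  # grouped by exact text
```

Grouped by meaning, the four samples form two clusters, giving an entropy of about 0.56. Grouped by exact text, the harmless variant "paris." counts as a separate answer and pushes the entropy to about 1.04. That inflated figure overstates how unsure the model really is about the answer.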

Article | 12 June 2024

Experiment-free exoskeleton assistance via learning in simulation

A learning-in-simulation framework for wearable robots uses dynamics-aware musculoskeletal and exoskeleton models and data-driven reinforcement learning to bridge the gap between simulation and reality without human experiments to assist versatile activities.

- Shuzhen Luo, Menghan Jiang & Hao Su

News & Views | 05 June 2024

Meta’s AI translation model embraces overlooked languages

More than 7,000 languages are in use throughout the world, but popular translation tools cannot deal with most of them. A translation model that was tested on under-represented languages takes a key step towards a solution.

- David I. Adelani

Article 05 June 2024 | Open Access

Scaling neural machine translation to 200 languages

Scaling neural machine translation to 200 languages is achieved by No Language Left Behind, a single massively multilingual model that leverages transfer learning across languages.

- Marta R. Costa-jussà, James Cross & Jeff Wang

News Feature | 04 June 2024

How cutting-edge computer chips are speeding up the AI revolution

Engineers are harnessing the powers of graphics processing units (GPUs) and more, with a bevy of tricks to meet the computational demands of artificial intelligence.

- Dan Garisto

News Explainer | 29 May 2024

Who owns your voice? Scarlett Johansson OpenAI complaint raises questions

In the age of artificial intelligence, situations are emerging that challenge the laws over rights to a persona.

- Nicola Jones

Article 29 May 2024 | Open Access

Low-latency automotive vision with event cameras

Use of a 20 frames per second (fps) RGB camera plus an event camera can achieve the same latency as a 5,000-fps camera with the bandwidth of a 45-fps camera without compromising accuracy.

- Daniel Gehrig & Davide Scaramuzza
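Here is a back-of-the-envelope reading of the latency claim. It rests on our own simplifying assumptions: that worst-case latency is set by the interval between consecutive frames, and that the compared cameras share the same resolution. The article's own latency and bandwidth analysis is more detailed.

```latex
\[
\Delta t = \frac{1}{f}, \qquad
\Delta t_{20\,\mathrm{fps}} = \frac{1}{20\,\mathrm{s}^{-1}} = 50\,\mathrm{ms}, \qquad
\Delta t_{5000\,\mathrm{fps}} = \frac{1}{5000\,\mathrm{s}^{-1}} = 0.2\,\mathrm{ms}, \qquad
\frac{5000\,\mathrm{fps}}{45\,\mathrm{fps}} \approx 111
\]
```

Under these assumptions, matching a 5,000-fps camera means a 250-fold shorter frame interval than the 20-fps RGB camera alone (50 ms / 0.2 ms). Doing so at the bandwidth of a 45-fps camera means using roughly 1/111 of the data rate of an equivalent 5,000-fps camera.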

Correspondence | 28 May 2024

Anglo-American bias could make generative AI an invisible intellectual cage

- Queenie Luo & Michael Puett

Editorial | 22 May 2024

AlphaFold3 — why did Nature publish it without its code?

Criticism of our decision to publish AlphaFold3 raises important questions. We welcome readers’ views.

News | 15 April 2024

AI now beats humans at basic tasks — new benchmarks are needed, says major report

Stanford University’s 2024 AI Index charts the meteoric rise of artificial-intelligence tools.

Article 27 March 2024 | Open Access

High-threshold and low-overhead fault-tolerant quantum memory

An end-to-end quantum error correction protocol that implements fault-tolerant memory on the basis of a family of low-density parity-check codes shows the possibility of low-overhead fault-tolerant quantum memory within the reach of near-term quantum processors.

- Sergey Bravyi, Andrew W. Cross & Theodore J. Yoder

Nature Podcast | 20 March 2024

AI hears hidden X factor in zebra finch love songs

Machine learning detects song differences too subtle for humans to hear, and physicists harness the computing power of the strange skyrmion.

- & Benjamin Thompson

Correspondence | 19 March 2024

Three reasons why AI doesn’t model human language

- Johan J. Bolhuis, Stephen Crain & Andrea Moro

Technology Feature | 19 March 2024

So … you’ve been hacked

Research institutions are under siege from cybercriminals and other digital assailants. How do you make sure you don’t let them in?

Technology Feature | 11 March 2024

No installation required: how WebAssembly is changing scientific computing

Enabling code execution in the web browser, the multilanguage tool is powerful but complicated.

- Jeffrey M. Perkel

Editorial | 06 March 2024

Why scientists trust AI too much — and what to do about it

Some researchers see superhuman qualities in artificial intelligence. All scientists need to be alert to the risks this creates.

News Explainer | 28 February 2024

Is ChatGPT making scientists hyper-productive? The highs and lows of using AI

Large language models are transforming scientific writing and publishing. But the productivity boost that these tools bring could have a downside.

- McKenzie Prillaman

World View | 20 February 2024

Generative AI’s environmental costs are soaring — and mostly secret

First-of-its-kind US bill would address the environmental costs of the technology, but there’s a long way to go.

- Kate Crawford

Editorial | 07 February 2024

Cyberattacks on knowledge institutions are increasing: what can be done?

For months, ransomware attacks have debilitated research at the British Library in London and Berlin’s natural history museum. They show how vulnerable scientific and educational institutions are to this kind of crime.

News | 06 February 2024

AI chatbot shows surprising talent for predicting chemical properties and reactions

Researchers lightly tweak ChatGPT-like system to offer chemistry insight.

- Davide Castelvecchi

Editorial | 31 January 2024

How can scientists make the most of the public’s trust in them?

Researchers have a part to play in addressing concerns about government interference in science.

Correspondence | 30 January 2024

Reaching carbon neutrality requires energy-efficient training of AI

Editorial | 23 January 2024

Computers make mistakes and AI will make things worse — the law must recognize that

A tragic scandal at the UK Post Office highlights the need for legal change, especially as organizations embrace artificial intelligence to enhance decision-making.

News | 23 January 2024

Two-faced AI language models learn to hide deception

‘Sleeper agents’ seem benign during testing but behave differently once deployed. And methods to stop them aren’t working.

News & Views | 17 January 2024

Large language models help computer programs to evolve

A branch of computer science known as genetic programming has been given a boost with the application of large language models that are trained on the combined intuition of the world’s programmers.

- Jean-Baptiste Mouret

Article | 17 January 2024 | Open Access

Solving olympiad geometry without human demonstrations

A new neuro-symbolic theorem prover for Euclidean plane geometry trained from scratch on millions of synthesized theorems and proofs outperforms the previous best method and reaches the performance of an olympiad gold medallist.

- Trieu H. Trinh, Yuhuai Wu & Thang Luong



The Top 10 Most Interesting Computer Science Research Topics

Computer science touches nearly every area of our lives. As technology advances, the field keeps evolving, which gives rise to new research topics. These topics seek to answer open research questions and to show how the answers affect the tech industry and the wider world.

Computer science research topics fall into several categories, such as artificial intelligence, big data and data science, human-computer interaction, security and privacy, and software engineering. If you are a student or researcher looking for computer science research paper topics, this article suggests example topics and questions.


What Makes a Strong Computer Science Research Topic?

A strong computer science research topic is clear, well defined, and easy to understand. It should also reflect the research's purpose, scope, or aim. It should avoid abbreviations that are not widely known. It may, however, include industry terms that are currently in general use.

Tips for Choosing a Computer Science Research Topic

- Brainstorm. Brainstorming helps you develop several ideas and find the best topic for you. Ask yourself some core questions:
  - What are some open questions in computer science?
  - What do you want to learn more about?
  - What are some current trends in computer science?

- Choose a subfield. There are many subfields and career paths in computer science. Before choosing a research topic, identify which area of computer science the research will focus on. That could be theoretical computer science, contemporary computing culture, or distributed computing.

- Aim to answer a question. When choosing a research topic in computer science, always have a question in mind that you'd like to answer. That helps you narrow your research aim to clear, specific goals.

- Do a comprehensive literature review. Before starting a research project, you need a clear idea of the topic you plan to study. That means reviewing the literature thoroughly to understand what is already known about your topic.

- Keep the topic simple and clear. The topic should reflect the scope and aim of the research it addresses. It should also be concise and free of ambiguous words. Some researchers recommend limiting it to 5 to 15 substantive words. It can take the form of a question or a declarative statement.
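The Python sketch below is a rough self-check for the 5-to-15-word guideline. It counts the substantive words in a candidate title. The guideline does not define "substantive," so the stop-word list (`STOPWORDS`) is an assumption for illustration only. The helper names are also hypothetical.

```python
import re

# Assumed list of function words that do not count as "substantive".
STOPWORDS = frozenset({
    "a", "an", "and", "as", "at", "by", "for", "from", "in",
    "into", "of", "on", "or", "the", "to", "with",
})


def substantive_word_count(title: str) -> int:
    """Count the words in a title, ignoring the assumed stop words."""
    words = re.findall(r"[A-Za-z0-9][A-Za-z0-9'-]*", title)
    return sum(1 for word in words if word.lower() not in STOPWORDS)


def within_recommended_length(title: str, low: int = 5, high: int = 15) -> bool:
    """True if the substantive word count falls in the inclusive range [low, high]."""
    return low <= substantive_word_count(title) <= high


if __name__ == "__main__":
    for title in (
        "Data Protection and Blockchain",
        "Potential Strategies to Privacy Issues on the Blockchain",
    ):
        print(f"{title!r}: {substantive_word_count(title)} substantive words, "
              f"within range: {within_recommended_length(title)}")
```

Consider the two example titles used later in this article. By this count, the broad one has 3 substantive words and the more specific one has 5.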

What’s the Difference Between a Research Topic and a Research Question?

A research topic is the subject matter that a researcher chooses to investigate, and it often serves as the title of a research paper. It summarizes the scope of the research and captures the researcher's approach to the research question. It may be broad or specific. For example, a broad topic may read Data Protection and Blockchain, while a more specific variant may read Potential Strategies to Privacy Issues on the Blockchain.

On the other hand, a research question is the fundamental starting point for any research project. It typically reflects various real-world problems and, sometimes, theoretical computer science challenges. As such, it must be clear, concise, and answerable.

How to Create Strong Computer Science Research Questions

To create strong computer science research questions, you must first understand the topic at hand. The research question should also generate new knowledge and advance the field. It could address something that has not been answered before or has been only partially answered. It is also essential to consider whether the question can feasibly be answered with the time, data, and resources available.

Top 10 Computer Science Research Paper Topics

1. Battery Life and Energy Storage for 5G Equipment

5G is the fifth generation of cellular network technology, with much higher data rates and capacity than 4G. According to research published in the European Scientific Institute Journal, one of the main concerns with 5G is the high energy consumption of 5G-enabled devices. Research on this topic can highlight these challenges and propose new solutions for more energy-efficient designs.
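One way to frame this trade-off uses two measures:
- **Energy efficiency**, in bits per joule: throughput divided by power draw.
- **Battery life**: capacity divided by average power.

The sketch below uses made-up figures, not measurements. It shows how a device can be more efficient per bit and still drain its battery faster.

```python
# All figures below are hypothetical, chosen only to illustrate the arithmetic.


def energy_efficiency_bits_per_joule(throughput_bps: float, power_w: float) -> float:
    """Bits delivered per joule: (bit/s) / (J/s) = bit/J."""
    return throughput_bps / power_w


def battery_life_hours(capacity_wh: float, avg_power_w: float) -> float:
    """Runtime of a battery with the given capacity at a constant average power draw."""
    return capacity_wh / avg_power_w


if __name__ == "__main__":
    battery_wh = 15.0  # assumed battery capacity in watt-hours
    modems = {
        "4G (assumed)": (50e6, 2.0),   # 50 Mbit/s at 2 W
        "5G (assumed)": (500e6, 5.0),  # 500 Mbit/s at 5 W
    }
    for name, (rate_bps, power_w) in modems.items():
        ee = energy_efficiency_bits_per_joule(rate_bps, power_w)
        hours = battery_life_hours(battery_wh, power_w)
        print(f"{name}: {ee / 1e6:.0f} Mbit/J, {hours:.1f} h of continuous use")
```

With these assumed inputs, the 5G case delivers four times as many bits per joule. Yet it empties the battery in 3 hours instead of 7.5, because its absolute power draw is higher.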

2. The Influence of Extraction Methods on Big Data Mining

Data mining has drawn the scientific community's attention, especially with the explosive growth of big data. Many studies show that the choice of extraction method significantly affects the outcome of the data mining process. Research on this topic would analyze extraction algorithms. It would also suggest strategies and efficient algorithms that help explain the challenge or point the way to a solution.
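Here is a toy illustration of how extraction choices change mining results. It assumes text tokenization as the extraction step; the topic itself is much broader.

The same three documents give different results depending on extraction:
- With naive whitespace splitting, they yield no term shared by all three documents.
- Once tokens are lowercased and punctuation is stripped, three such terms appear.

The corpus and function names are hypothetical.

```python
import re
from collections import Counter
from typing import Callable, Iterable, List, Set

# Hypothetical toy corpus; real studies would use large datasets.
DOCS = [
    "Big data needs scalable mining.",
    "big Data mining finds patterns.",
    "Mining BIG data is costly!",
]


def extract_raw(doc: str) -> List[str]:
    """Naive extraction: split on whitespace, keeping case and punctuation."""
    return doc.split()


def extract_normalized(doc: str) -> List[str]:
    """Normalized extraction: lowercase and keep only runs of letters."""
    return re.findall(r"[a-z]+", doc.lower())


def frequent_terms(docs: Iterable[str],
                   extract: Callable[[str], List[str]],
                   min_support: int) -> Set[str]:
    """Return terms that appear in at least min_support documents."""
    counts: Counter = Counter()
    for doc in docs:
        counts.update(set(extract(doc)))  # count each term once per document
    return {term for term, count in counts.items() if count >= min_support}


if __name__ == "__main__":
    print(frequent_terms(DOCS, extract_raw, min_support=3))  # set()
    print(sorted(frequent_terms(DOCS, extract_normalized, min_support=3)))  # ['big', 'data', 'mining']
```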

3. Integration of 5G with Analytics and Artificial Intelligence

According to the International Finance Corporation, 5G and AI technologies are defining emerging markets and our world. This research aims to find novel ways to integrate these powerful tools to produce better results. Subjects like this often spark discoveries that open new areas of research and innovation. A breakthrough could influence educational technology, virtual reality, the metaverse, and medical imaging.

4. Leveraging Asynchronous FPGAs for Crypto Acceleration

The growing cryptocurrency industry needs new ways to accelerate transaction processing. This project aims to use asynchronous field-programmable gate arrays (FPGAs) to accelerate cryptocurrency transaction processing. It explores how various distributed computing technologies, combined with FPGAs, can speed up both cryptocurrency mining and transaction throughput.
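The sketch below gives context on what mining hardware accelerates. It is a simplified proof-of-work loop: repeatedly double-hash a header with SHA-256, varying a nonce, until the hash falls below a target.

This is a simplification for illustration only. Bitcoin, for example, double-hashes an 80-byte header with its own field layout and target encoding. The code is the kind of inner loop that FPGAs and ASICs parallelize in hardware, not an FPGA design. The names `mine` and `difficulty_bits` are hypothetical.

```python
import hashlib
from typing import Optional, Tuple


def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice, as in Bitcoin-style proof of work."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header: bytes, difficulty_bits: int,
         max_nonce: int = 2**32) -> Optional[Tuple[int, bytes]]:
    """Search for a 4-byte nonce whose double-SHA-256 hash is below the target.

    A larger difficulty_bits gives a smaller target, so about
    2**difficulty_bits attempts are needed on average.
    """
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest
    return None  # no valid nonce in range; real miners then vary other header fields


if __name__ == "__main__":
    result = mine(b"hypothetical block header", difficulty_bits=16)
    if result is not None:
        nonce, digest = result
        print(f"nonce={nonce} hash={digest.hex()}")
```

Each additional difficulty bit doubles the expected number of hashes. That is why miners move this loop into parallel hardware.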

5. Cyber Security Future Technologies

Cyber security is a trending topic among businesses and individuals, especially as more teams work remotely. Depending on the researcher's interests, research on this topic can span the full breadth of the cyber security and cloud security industries and project future innovations. Another angle is to analyze existing or emerging solutions and present findings that can aid future research.

6. Exploring the Boundaries Between Art, Media, and Information Technology

Computing and media are vast, complex fields that intersect in many ways. Practitioners create images or animations using design technologies and methods such as algorithmic mechanism design, design thinking, design theory, digital fabrication systems, and electronic design automation. This paper aims to define how the two fields exist both independently and symbiotically.

7. Evolution of Future Wireless Networks Using Cognitive Radio Networks

This research project aims to study how cognitive radio technology can drive the evolution of future wireless networks. It will analyze the performance of cognitive radio-based wireless networks in different scenarios and measure their impact on spectral efficiency and network capacity. The project will involve developing a simulation model to study the performance of cognitive radios in those scenarios.
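A common starting point for such a simulation is spectrum sensing by energy detection. The cognitive radio sums the energy of the received samples and declares the channel busy if that energy exceeds a threshold.

The Monte Carlo sketch below rests on three assumptions:
- unit-power Gaussian noise;
- a +/-1 (BPSK-like) primary-user signal;
- a threshold from a Gaussian approximation that targets about 5% false alarms.

These are modelling assumptions, not details of the project described above.

```python
import math
import random
from typing import Iterable, Tuple


def energy(samples: Iterable[float]) -> float:
    """Energy-detector test statistic: sum of squared sample values."""
    return sum(x * x for x in samples)


def simulate_detector(snr_db: float, n_samples: int = 100, trials: int = 1000,
                      z: float = 1.645, seed: int = 0) -> Tuple[float, float]:
    """Estimate (false-alarm probability, detection probability) by Monte Carlo.

    With unit-variance real Gaussian noise, the idle-channel energy is
    chi-square with n_samples degrees of freedom (mean n, variance 2n).
    The threshold n + z*sqrt(2n) is a Gaussian approximation; z = 1.645
    targets roughly a 5% false-alarm rate.
    """
    rng = random.Random(seed)
    amplitude = math.sqrt(10 ** (snr_db / 10))  # signal power relative to unit noise power
    threshold = n_samples + z * math.sqrt(2 * n_samples)
    false_alarms = 0
    detections = 0
    for _ in range(trials):
        # Channel idle: noise only.
        idle = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
        if energy(idle) > threshold:
            false_alarms += 1
        # Primary user transmitting +/-1 symbols plus noise.
        busy = [amplitude * rng.choice((-1.0, 1.0)) + rng.gauss(0.0, 1.0)
                for _ in range(n_samples)]
        if energy(busy) > threshold:
            detections += 1
    return false_alarms / trials, detections / trials


if __name__ == "__main__":
    for snr_db in (0.0, -5.0, -10.0):
        pfa, pd = simulate_detector(snr_db)
        print(f"SNR {snr_db:+.0f} dB: P_fa ~ {pfa:.3f}, P_d ~ {pd:.3f}")
```

Run the simulation at 0 dB and at -10 dB. The detection probability drops sharply as the primary signal weakens, which is the sensing-reliability problem that cognitive radio research tries to address.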

8. The Role of Quantum Computing and Machine Learning in Advancing Medical Predictive Systems

In a paper titled Exploring Quantum Computing Use Cases for Healthcare, experts at IBM highlighted precision medicine and diagnostics as areas that could benefit from quantum computing. Researchers can draw on biomedical imaging, machine learning, computational biology, and data-intensive computing systems. With these, they can build more accurate systems for disease progression prediction, disease severity classification, and 3D image reconstruction, all vital for treating chronic diseases.

9. Implementing Privacy and Security in Wireless Networks

Wireless networks are prone to attacks, which is a major concern for both individual users and organizations. According to the Cybersecurity and Infrastructure Security Agency (CISA), cyber security specialists are working to find reliable methods of securing wireless networks. This research aims to develop a secure, privacy-preserving framework for wireless communication and social networks.
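The sketch below shows one small building block of such a framework. It authenticates each wireless frame with HMAC-SHA256 and rejects replayed frames using a counter that only increases. It covers integrity, authenticity, and replay protection only. Confidentiality would also require encryption, for example an AEAD cipher such as AES-GCM from a third-party library, which is not shown. The frame layout and function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

COUNTER_LEN = 8  # bytes reserved for the frame counter (hypothetical layout)
TAG_LEN = 32     # HMAC-SHA256 output size in bytes


def protect(key: bytes, counter: int, payload: bytes) -> bytes:
    """Build a frame: counter || payload || HMAC-SHA256(key, counter || payload)."""
    header = counter.to_bytes(COUNTER_LEN, "big")
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag


def verify(key: bytes, frame: bytes, last_counter: int) -> Optional[Tuple[int, bytes]]:
    """Return (counter, payload) if the frame is authentic and fresh, else None."""
    if len(frame) < COUNTER_LEN + TAG_LEN:
        return None  # too short to hold a counter and a tag
    header = frame[:COUNTER_LEN]
    payload = frame[COUNTER_LEN:-TAG_LEN]
    tag = frame[-TAG_LEN:]
    expected = hmac.new(key, header + payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        return None  # tampered frame or wrong key
    counter = int.from_bytes(header, "big")
    if counter <= last_counter:
        return None  # replayed or stale frame
    return counter, payload


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # shared key; key exchange is out of scope here
    frame = protect(key, 1, b"sensor reading: 21.5 C")
    print(verify(key, frame, last_counter=0))  # accepted: (1, b'sensor reading: 21.5 C')
    print(verify(key, frame, last_counter=1))  # rejected as a replay: None
```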

10. Exploring the Challenges and Potentials of Biometric Systems Using Computational Techniques