Mastering Python’s Set Difference: A Game-Changer for Data Wrangling


An Introduction to Problem-Solving Using Search Algorithms for Beginners

- Introduction

In computer science, problem-solving refers to artificial intelligence techniques, which include forming efficient algorithms, using heuristics, and performing root cause analysis to find desirable solutions. Search algorithms are fundamental tools for solving a wide range of problems in computer science. They provide a systematic approach to finding solutions by exploring a set of possible alternatives. These algorithms are used in various applications such as pathfinding, scheduling, and information retrieval.

In this article, we will look into the problem-solving techniques used by various types of search algorithms.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Examples of problems in artificial intelligence

- Problem-solving techniques

- Properties of search algorithms

- Types of search algorithms

- Uninformed search algorithms

- Comparison of various uninformed search algorithms

- Informed search algorithms

- Comparison of uninformed and informed search algorithms

In today’s fast-paced digitized world, artificial intelligence techniques are widely used to automate systems so that they use resources and time efficiently. Some well-known problems encountered in everyday life are games and puzzles, and AI techniques can solve them efficiently. Some of the most common problems solved with AI are:

- Travelling Salesman Problem

- Tower of Hanoi Problem

- Water-Jug Problem

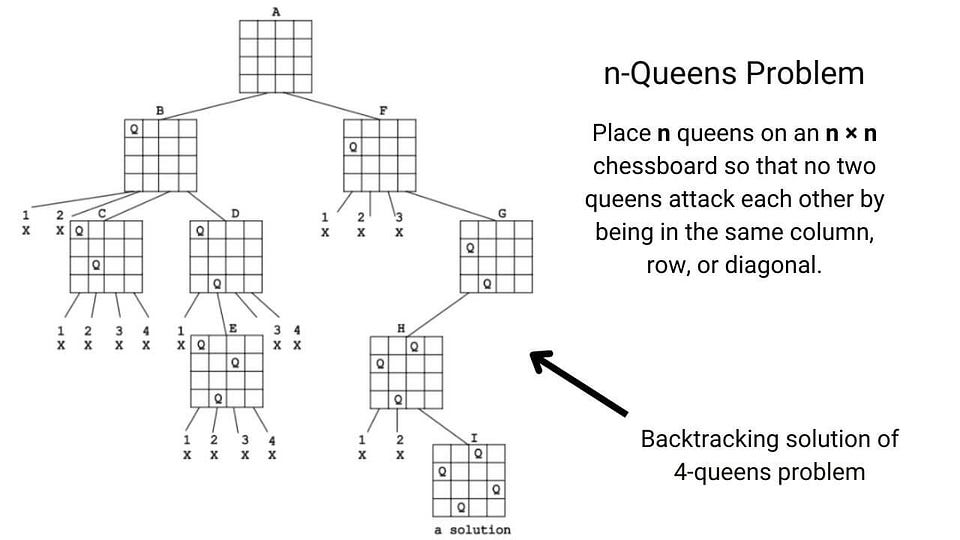

- N-Queen Problem

- Crypt-arithmetic Problems

- Magic Squares

- Logical Puzzles and so on.

In artificial intelligence, problems can be solved by using searching algorithms, evolutionary computations, knowledge representations, etc.

In this article, I am going to discuss the various searching techniques that are used to solve a problem.

In general, searching is referred to as finding information one needs.

The process of problem-solving using searching consists of the following steps.

- Define the problem

- Analyze the problem

- Identification of possible solutions

- Choosing the optimal solution

- Implementation

Let’s discuss some of the essential properties of search algorithms.

Completeness

A search algorithm is said to be complete if it is guaranteed to return a solution whenever at least one exists for the given input.

Optimality

If the solution found is the best (lowest path cost) among all the solutions, then it is said to be an optimal solution.

Time complexity

The time taken by an algorithm to complete its task is called time complexity. An algorithm that completes a task in less time is more efficient.

Space complexity

It is the maximum storage or memory taken by the algorithm at any time while searching.

These properties are also used to compare the efficiency of the different types of searching algorithms.

Now let’s see the types of the search algorithm.

Based on the search problems, we can classify the search algorithm as

- Uninformed search

- Informed search

The uninformed search algorithm does not have any domain knowledge, such as the closeness or location of the goal state, so it behaves in a brute-force way. It only knows how to traverse the given tree and how to recognize the goal state. This algorithm is also known as the blind search algorithm or brute-force algorithm. The uninformed search strategies are of six types.

- Breadth-first search

- Depth-first search

- Depth-limited search

- Iterative deepening depth-first search

- Bidirectional search

- Uniform cost search

Let’s discuss these six strategies one by one.

1. Breadth-first search

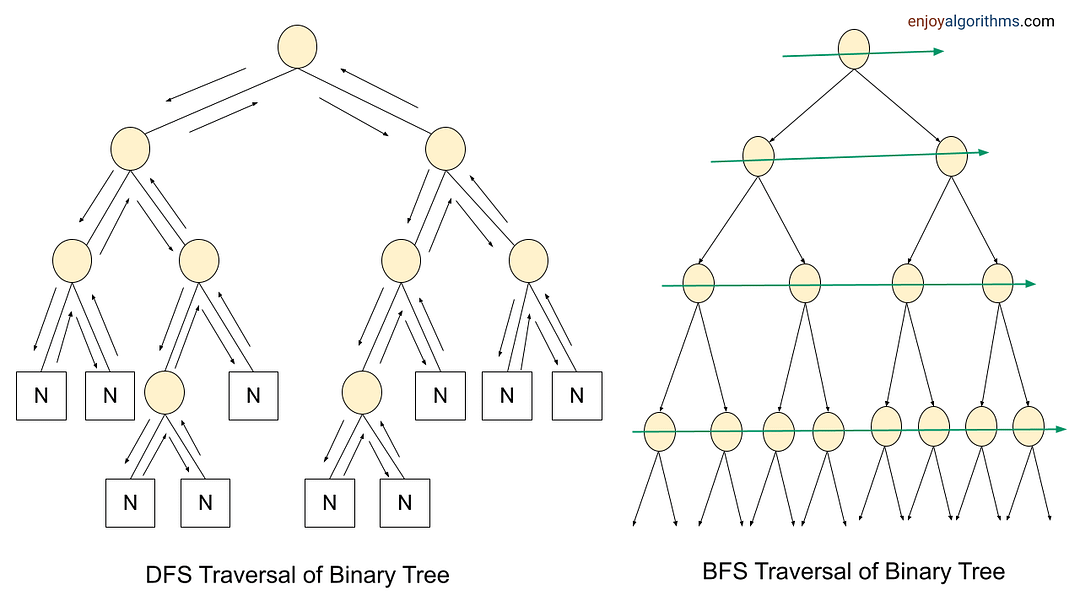

It is one of the most common search strategies. It starts from the root node, examines all the neighbor nodes at the current level, and then moves to the next level. It uses a First-in First-out (FIFO) queue, so it reaches the goal along a path with the fewest edges.

BFS is used where the given problem is very small and space complexity is not a concern.

Now, consider the following tree.

Source: Author

Here, let’s take node A as the start state and node F as the goal state.

The BFS algorithm starts with the start state, then goes to the next level and visits the nodes there, and continues level by level until it reaches the goal state.

In this example, it starts from A, then travels to the next level and visits B and C, and then travels to the next level and visits D, E, F and G. Here, the goal state is defined as F, so the traversal stops at F.

The path of traversal is:

A -> B -> C -> D -> E -> F

Let’s implement the same in python programming.

Python Code:
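A minimal sketch of BFS on the example tree could look like the following. The dictionary `tree` and the function name `bfs` are illustrative assumptions; the tree shape (A has children B and C, B has D and E, C has F and G) is read off the traversal described above.

```python
from collections import deque

# Example tree as an adjacency dict (assumed encoding of the figure).
tree = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}

def bfs(graph, start, goal):
    """Return the nodes in the order BFS visits them, stopping at the goal."""
    order = []                  # visit order
    seen = {start}              # nodes already placed on the queue
    queue = deque([start])      # FIFO frontier
    while queue:
        node = queue.popleft()
        order.append(node)
        if node == goal:
            return order
        for child in graph[node]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return None                 # goal not reachable

print(" -> ".join(bfs(tree, "A", "F")))  # A -> B -> C -> D -> E -> F
```

The `deque` gives constant-time removal from the front, which is what makes it a natural FIFO frontier here.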

Advantages of BFS

- BFS will never be trapped in any unwanted nodes.

- If the graph has more than one solution, BFS returns the one with the fewest steps, which is the optimal solution when every step has the same cost.

Disadvantages of BFS

- BFS stores all the nodes in the current level before going to the next level, so it requires a lot of memory.

- BFS takes more time to reach a goal state that is far from the start.

2. Depth-first search

The depth-first search uses a Last-in, First-out (LIFO) strategy and hence can be implemented using a stack. DFS uses backtracking: it starts from the initial state and explores each path to its greatest depth before it moves to the next path.

DFS will follow the order

Root node -> Left node -> Right node

Now, consider the same example tree mentioned above.

Here, DFS starts from the start state A, travels to B, and then goes to D. After reaching D, it backtracks to B. Since B is already visited, it goes to B's next child, E, and then backtracks to B again. With both children of B explored, it goes back to A. A is already visited, so it goes to C and then to F. F is our goal state, so the search stops there.

A -> B -> D -> E -> C -> F
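The traversal above can be sketched with an explicit stack. This is a minimal illustration, assuming the same tree encoded as a dictionary; the names `tree` and `dfs` are not from the original article.

```python
# Example tree as an adjacency dict (assumed encoding of the figure).
tree = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}

def dfs(graph, start, goal):
    """Return the nodes in the order DFS visits them, stopping at the goal."""
    order = []
    seen = set()
    stack = [start]             # LIFO frontier
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        if node == goal:
            return order
        # Push children in reverse so the leftmost child is expanded first.
        stack.extend(reversed(graph[node]))
    return None                 # goal not reachable

print(" -> ".join(dfs(tree, "A", "F")))  # A -> B -> D -> E -> C -> F
```

Backtracking is implicit here: when a leaf such as D has no children to push, the next `pop` returns to the most recently deferred sibling.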

The output path is A, B, D, E, C, F.

Advantages of DFS

- It takes less memory than BFS.

- It can take less time than BFS when the goal lies deep on one of the first branches explored.

- DFS may reach a solution without examining much of the search space.

Disadvantages of DFS

- DFS is not guaranteed to find a solution.

- As DFS goes deep down, it may get trapped in an infinite loop.

3. Depth-limited search

Depth-limited search works similarly to depth-first search. The difference is that depth-limited search has a pre-defined limit up to which it can traverse the nodes. Depth-limited search solves one of the drawbacks of DFS, as it cannot follow an infinite path.

DLS ends its traversal if either of the following conditions occurs.

Standard Failure

It denotes that the given problem does not have any solutions.

Cut off Failure Value

It indicates that there is no solution for the problem within the given limit.

Now, consider the same example.

Let’s take A as the start node and C as the goal state and limit as 1.

The traversal first starts with node A and then goes to the next level 1 and the goal state C is there. It stops the traversal.

A -> C

If we give C as the goal node and the limit as 0, the algorithm will not return any path as the goal node is not available within the given limit.

If we give the goal node as F and limit as 2, the path will be A, C, F.

Let’s implement DLS.
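One possible recursive sketch is shown below. It assumes the same example tree as a dictionary, and it uses a hypothetical sentinel `CUTOFF` to tell a cutoff failure (limit reached) apart from a standard failure (`None`, no solution at all).

```python
# Example tree as an adjacency dict (assumed encoding of the figure).
tree = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}

CUTOFF = "cutoff"   # sentinel: the depth limit was hit before the search finished

def dls(graph, node, goal, limit):
    """Return a path to goal, CUTOFF (cutoff failure), or None (standard failure)."""
    if node == goal:
        return [node]
    if limit == 0:
        return CUTOFF
    cutoff_hit = False
    for child in graph[node]:
        result = dls(graph, child, goal, limit - 1)
        if result == CUTOFF:
            cutoff_hit = True
        elif result is not None:
            return [node] + result
    return CUTOFF if cutoff_hit else None

print(dls(tree, "A", "C", 1))  # ['A', 'C']
print(dls(tree, "A", "C", 0))  # cutoff
print(dls(tree, "A", "F", 2))  # ['A', 'C', 'F']
```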

When we give C as the goal node and 1 as the limit, the path is A, C.

Advantages of DLS

- It takes less memory when compared to other search techniques.

Disadvantages of DLS

- DLS may not return an optimal solution if the problem has more than one solution.

- DLS is incomplete: it fails to find a goal that lies beyond the depth limit.

4. Iterative deepening depth-first search

Iterative deepening depth-first search is a combination of depth-first search and breadth-first search. IDDFS finds the best depth limit by gradually increasing the limit until the defined goal state is reached.

Let me try to explain this with the same example tree.

Consider A as the start node and E as the goal node. Let the maximum depth be 2.

The algorithm starts with A, goes to the next level, and searches for E. Since E is not found there, it goes to the next level and finds E.

The path of traversal is

A -> B -> E

Let’s try to implement this.
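A minimal sketch, assuming the same example tree as a dictionary: a depth-limited helper is called with limits 0, 1, 2, and so on until a path is found or the maximum depth is exceeded. The function names are illustrative.

```python
# Example tree as an adjacency dict (assumed encoding of the figure).
tree = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}

def depth_limited(graph, node, goal, limit):
    """Return a path to goal using at most `limit` edges, else None."""
    if node == goal:
        return [node]
    if limit == 0:
        return None
    for child in graph[node]:
        path = depth_limited(graph, child, goal, limit - 1)
        if path is not None:
            return [node] + path
    return None

def iddfs(graph, start, goal, max_depth):
    """Try limits 0..max_depth; return (path, limit used) or (None, None)."""
    for limit in range(max_depth + 1):
        path = depth_limited(graph, start, goal, limit)
        if path is not None:
            return path, limit
    return None, None

print(iddfs(tree, "A", "E", 2))  # (['A', 'B', 'E'], 2)
```

Each iteration restarts from the root, which is the repeated work listed among the disadvantages below.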

The path generated is A, B, E.

Advantages of IDDFS

- IDDFS has the advantages of both BFS and DFS.

- It offers fast search and uses memory efficiently.

Disadvantages of IDDFS

- It repeats all the work of the previous iterations each time the limit is increased.

5. Bidirectional search

The bidirectional search algorithm differs from the other search strategies. It runs two simultaneous searches, called forward search and backward search, to reach the goal state. Here, the graph is divided into two smaller sub-graphs. In one sub-graph, the search starts from the initial state, and in the other, the search starts from the goal state. When the two searches meet at a common node, the search terminates.

Bidirectional search requires both the start and goal states to be well defined and the branching factor to be the same in the two directions.

Consider the below graph.

Here, the start state is E and the goal state is G. In one sub-graph, the search starts from E, and in the other, the search starts from G. E goes to B and then A. G goes to C and then A. Both traversals meet at A, and hence the search ends.

E -> B -> A -> C -> G

Let’s implement the same in Python.
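The sketch below is one way to do it, not a definitive implementation. It assumes the graph is the undirected version of the earlier example tree (the figure is not reproduced here, so this is an assumption), and it alternates one node expansion from each side until the two searches touch.

```python
from collections import deque

# Undirected version of the example tree (assumed shape of the figure).
graph = {
    "A": ["B", "C"],
    "B": ["A", "D", "E"],
    "C": ["A", "F", "G"],
    "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"],
}

def bidirectional_search(graph, start, goal):
    if start == goal:
        return [start]
    # Parent maps double as the visited sets of each direction.
    parents_fwd, parents_bwd = {start: None}, {goal: None}
    queue_fwd, queue_bwd = deque([start]), deque([goal])

    def expand(queue, parents, other_parents):
        """Expand one node; return the meeting node if the searches touch."""
        node = queue.popleft()
        for nbr in graph[node]:
            if nbr not in parents:
                parents[nbr] = node
                queue.append(nbr)
                if nbr in other_parents:
                    return nbr
        return None

    while queue_fwd and queue_bwd:
        meet = expand(queue_fwd, parents_fwd, parents_bwd)
        if meet is None:
            meet = expand(queue_bwd, parents_bwd, parents_fwd)
        if meet is not None:
            # Stitch the two half-paths together at the meeting node.
            path, n = [], meet
            while n is not None:
                path.append(n)
                n = parents_fwd[n]
            path.reverse()
            n = parents_bwd[meet]
            while n is not None:
                path.append(n)
                n = parents_bwd[n]
            return path
    return None

print(" -> ".join(bidirectional_search(graph, "E", "G")))  # E -> B -> A -> C -> G
```

In a tree the connecting path is unique, so stopping at the first meeting node is enough. On general graphs a more careful termination check may be needed to guarantee the shortest path.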

The generated path is E, B, A, C, G.

Advantages of bidirectional search

- This algorithm searches the graph fast.

- It requires less memory to complete its action.

Disadvantages of bidirectional search

- The goal state should be pre-defined.

- The algorithm is quite difficult to implement.

6. Uniform cost search

Uniform cost search is considered the best search algorithm for a weighted graph or graph with costs. It searches the graph by giving maximum priority to the lowest cumulative cost. Uniform cost search can be implemented using a priority queue.

Consider the below graph where each node has a pre-defined cost.

Here, S is the start node and G is the goal node.

From S, G can be reached in the following ways.

S, A, E, F, G -> 19

S, B, E, F, G -> 18

S, B, D, F, G -> 19

S, C, D, F, G -> 23

Here, the path with the least cost is S, B, E, F, G.

Let’s implement UCS in Python.
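A minimal sketch using `heapq` as the priority queue follows. The individual edge costs are not given in the text, so the weights below are hypothetical values chosen only so that the four path totals listed above (19, 18, 19, 23) come out the same. The function name is also an assumption.

```python
import heapq

# HYPOTHETICAL edge costs: picked only to reproduce the path totals in the text.
graph = {
    "S": {"A": 5, "B": 4, "C": 7},
    "A": {"E": 5},
    "B": {"E": 5, "D": 4},
    "C": {"D": 5},
    "D": {"F": 6},
    "E": {"F": 4},
    "F": {"G": 5},
    "G": {},
}

def uniform_cost_search(graph, start, goal):
    """Return (path, cost) of a cheapest path, or (None, inf) if unreachable."""
    frontier = [(0, start, [start])]      # (cumulative cost, node, path)
    best = {start: 0}                     # cheapest known cost per node
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, cost
        if cost > best.get(node, float("inf")):
            continue                      # stale queue entry, a cheaper one exists
        for nbr, step in graph[node].items():
            new_cost = cost + step
            if new_cost < best.get(nbr, float("inf")):
                best[nbr] = new_cost
                heapq.heappush(frontier, (new_cost, nbr, path + [nbr]))
    return None, float("inf")

print(uniform_cost_search(graph, "S", "G"))  # (['S', 'B', 'E', 'F', 'G'], 18)
```

The goal test happens when a node is popped, not when it is pushed, which is what lets the cheaper route to F through E replace the one through D.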

The optimal output path is generated.

Advantages of UCS

- This algorithm is optimal as the selection of paths is based on the lowest cost.

Disadvantages of UCS

- The algorithm considers only path cost, not the number of steps taken. If some actions have zero cost, this may result in an infinite loop.

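Now, let me compare the six uninformed search strategies on time complexity, space complexity, completeness, and optimality. Here b is the branching factor, d is the depth of the shallowest solution, m is the maximum depth of the tree, l is the depth limit, C* is the cost of the optimal solution, and epsilon is the smallest step cost.

| Algorithm | Time | Space | Complete | Optimal |
| --- | --- | --- | --- | --- |
| Breadth First | O(b^d) | O(b^d) | Yes | Yes |
| Depth First | O(b^m) | O(bm) | No | No |
| Depth Limited | O(b^l) | O(bl) | No | No |
| Iterative Deepening | O(b^d) | O(bd) | Yes | Yes |
| Bidirectional | O(b^(d/2)) | O(b^(d/2)) | Yes | Yes |
| Uniform Cost | O(b^(1+floor(C*/epsilon))) | O(b^(1+floor(C*/epsilon))) | Yes | Yes |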

This is all about uninformed search algorithms.

Let’s take a look at informed search algorithms.

The informed search algorithm is also called heuristic search or directed search. In contrast to uninformed search algorithms, informed search algorithms use details such as the distance to the goal, the steps needed to reach the goal, and the cost of the paths, which makes them more efficient.

Here, the goal state can be achieved by using the heuristic function.

The heuristic function is used to achieve the goal state with the lowest cost possible. This function estimates how close a state is to the goal.

Let’s discuss some of the informed search strategies.

1. Greedy best-first search algorithm

Greedy best-first search uses the properties of both depth-first search and breadth-first search. Greedy best-first search traverses the node by selecting the path which appears best at the moment. The closest path is selected by using the heuristic function.

Consider the below graph with the heuristic values.

Here, A is the start node and H is the goal node.

Greedy best-first search starts with A and then examines its neighbours B and C. Here, the heuristic of B is 12 and that of C is 4. The best path at the moment is through C, and hence it goes to C. From C, it explores the neighbours F and G. The heuristic of F is 8 and that of G is 2. Hence it goes to G. From G, it goes to H, whose heuristic is 0 and which is also our goal state.

A -> C -> G -> H

Let’s try this with Python.
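A minimal sketch follows, assuming only the edges and heuristic values mentioned in the walkthrough above. Any other edges in the figure are omitted, and the heuristic of the start node A is not given in the text, so the start node is queued with a placeholder priority. The names are illustrative.

```python
import heapq

# Only the edges named in the walkthrough; other edges in the figure are omitted.
graph = {
    "A": ["B", "C"],
    "B": [],
    "C": ["F", "G"],
    "F": [],
    "G": ["H"],
    "H": [],
}
# Heuristic values quoted in the text (A's value is not given and not needed).
h = {"B": 12, "C": 4, "F": 8, "G": 2, "H": 0}

def greedy_best_first(graph, h, start, goal):
    """Always expand the frontier node with the smallest heuristic value."""
    frontier = [(0, start, [start])]   # placeholder priority: start is popped first anyway
    visited = set()
    while frontier:
        _, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for nbr in graph[node]:
            if nbr not in visited:
                heapq.heappush(frontier, (h[nbr], nbr, path + [nbr]))
    return None

print(" -> ".join(greedy_best_first(graph, h, "A", "H")))  # A -> C -> G -> H
```

Note that the priority is `h` alone: the cost already paid to reach a node is ignored, which is why this strategy is not optimal in general.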

The output path with the lowest cost is generated.

The time complexity of Greedy best-first search is O(b^m) in the worst case.

Advantages of Greedy best-first search

- Greedy best-first search is more efficient compared with breadth-first search and depth-first search.

Disadvantages of Greedy best-first search

- In the worst-case scenario, the greedy best-first search algorithm may behave like an unguided DFS.

- There are some possibilities for greedy best-first to get trapped in an infinite loop.

- The algorithm is not an optimal one.

Next, let’s discuss the other informed search algorithm called the A* search algorithm.

2. A* search Algorithm

A* search algorithm is a combination of both uniform cost search and greedy best-first search algorithms. It uses the advantages of both with better memory usage. It uses a heuristic function to find the shortest path. A* search algorithm uses the sum of both the cost and heuristic of the node to find the best path.

Consider the following graph with the heuristics values as follows.

Let A be the start node and H be the goal node.

First, the algorithm will start with A. From A, it can go to B, C, H.

Note the point that A* search uses the sum of path cost and heuristics value to determine the path.

Here, from A to B, the sum of cost and heuristics is 1 + 3 = 4.

From A to C, it is 2 + 4 = 6.

From A to H, it is 7 + 0 = 7.

Here, the lowest cost is 4 and the path A to B is chosen. The other paths will be on hold.

Now, from B, it can go to D or E.

From A to B to D, the cost is 1 + 4 + 2 = 7.

From A to B to E, it is 1 + 6 + 6 = 13.

The lowest cost is 7. Path A to B to D is chosen and compared with other paths which are on hold.

Here, path A to C is of less cost. That is 6.

Hence, A to C is chosen and other paths are kept on hold.

From C, it can now go to F or G.

From A to C to F, the cost is 2 + 3 + 3 = 8.

From A to C to G, the cost is 2 + 2 + 1 = 5.

The lowest cost is 5, which is also less than the costs of the other paths on hold. Hence, path A to C to G is chosen.

From G, it can go to H, whose cost is 2 + 2 + 2 + 0 = 6.

Here, 6 is less than the costs of the other paths on hold.

Also, H is our goal state. The algorithm will terminate here.

Let’s try this in Python.
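A minimal sketch using the edge costs and heuristic values quoted in the walkthrough above. The heuristic of the start node A is not given in the text, so the start is queued with a placeholder priority; the function and variable names are assumptions.

```python
import heapq

# Edge costs taken from the walkthrough: e.g. A->B costs 1, G->H costs 2.
graph = {
    "A": {"B": 1, "C": 2, "H": 7},
    "B": {"D": 4, "E": 6},
    "C": {"F": 3, "G": 2},
    "D": {}, "E": {}, "F": {},
    "G": {"H": 2},
    "H": {},
}
# Heuristic values from the walkthrough (A's value is not given and not needed).
h = {"B": 3, "C": 4, "D": 2, "E": 6, "F": 3, "G": 1, "H": 0}

def a_star(graph, h, start, goal):
    """Expand the node with the smallest f = g (cost so far) + h (estimate)."""
    frontier = [(0, 0, start, [start])]   # (f, g, node, path); start f is a placeholder
    best_g = {start: 0}
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, g
        if g > best_g.get(node, float("inf")):
            continue                      # stale entry
        for nbr, step in graph[node].items():
            new_g = g + step
            if new_g < best_g.get(nbr, float("inf")):
                best_g[nbr] = new_g
                heapq.heappush(frontier, (new_g + h[nbr], new_g, nbr, path + [nbr]))
    return None, float("inf")

print(a_star(graph, h, "A", "H"))  # (['A', 'C', 'G', 'H'], 6)
```

The direct edge A to H (f = 7) is queued early but never chosen, because the route through C and G reaches H with f = 6 first.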

The output path is A, C, G, H, with a total cost of 6.

The time complexity of the A* search is O(b^d), where b is the branching factor and d is the depth of the solution.

Advantages of A* search algorithm

- This algorithm generally performs better than the other search algorithms discussed here.

- This algorithm can solve very complex problems, and it is optimal when the heuristic never overestimates the true cost.

Disadvantages of A* search algorithm

- The A* search is based on heuristics and cost. If the heuristic overestimates the true cost, it may not produce the shortest path.

- It uses more memory, as it keeps all the generated nodes in memory.

Now, let’s compare uninformed and informed search strategies.

Uninformed search is also known as blind search, whereas informed search is also called heuristic search. Uninformed search does not require much information, while informed search requires domain-specific details. Compared to uninformed search, informed search strategies are more efficient, and the time complexity of uninformed search strategies is higher. Informed search handles the problem better than blind search.

Search algorithms are used in games, stored databases, virtual search spaces, quantum computers, and so on. In this article, we have discussed some of the important search strategies and how to use them to solve the problems in AI and this is not the end. There are several algorithms to solve any problem. Nowadays, AI is growing rapidly and applies to many real-life problems. Keep learning! Keep practicing!


Chapter 3 Solving Problems by Searching

When the correct action to take is not immediately obvious, an agent may need to plan ahead: to consider a sequence of actions that form a path to a goal state. Such an agent is called a problem-solving agent, and the computational process it undertakes is called search.

Problem-solving agents use atomic representations, that is, states of the world are considered as wholes, with no internal structure visible to the problem-solving algorithms. Agents that use factored or structured representations of states are called planning agents.

We distinguish between informed algorithms, in which the agent can estimate how far it is from the goal, and uninformed algorithms, where no such estimate is available.

3.1 Problem-Solving Agents

If the agent has no additional information, that is, if the environment is unknown, then the agent can do no better than to execute one of the actions at random. For now, we assume that our agents always have access to information about the world. With that information, the agent can follow this four-phase problem-solving process:

GOAL FORMULATION: Goals organize behavior by limiting the objectives and hence the actions to be considered.

PROBLEM FORMULATION: The agent devises a description of the states and actions necessary to reach the goal: an abstract model of the relevant part of the world.

SEARCH: Before taking any action in the real world, the agent simulates sequences of actions in its model, searching until it finds a sequence of actions that reaches the goal. Such a sequence is called a solution.

EXECUTION: The agent can now execute the actions in the solution, one at a time.

It is an important property that in a fully observable, deterministic, known environment, the solution to any problem is a fixed sequence of actions. The agent can therefore ignore its percepts while executing the solution. This is called an open-loop system, because ignoring the percepts breaks the loop between agent and environment. If there is a chance that the model is incorrect, or the environment is nondeterministic, then the agent would be safer using a closed-loop approach that monitors the percepts.

In partially observable or nondeterministic environments, a solution would be a branching strategy that recommends different future actions depending on what percepts arrive.

3.1.1 Search problems and solutions

A search problem can be defined formally as follows:

A set of possible states that the environment can be in. We call this the state space.

The initial state that the agent starts in.

A set of one or more goal states. Sometimes there is one goal state, sometimes there is a small set of alternative goal states, and sometimes the goal is defined by a property that applies to many states. We can account for all three of these possibilities by specifying an \(Is\-Goal\) method for a problem.

The actions available to the agent. Given a state \(s\), \(Actions(s)\) returns a finite set of actions that can be executed in \(s\). We say that each of these actions is applicable in \(s\).

A transition model, which describes what each action does. \(Result(s,a)\) returns the state that results from doing action \(a\) in state \(s\).

An action cost function, denoted by \(Action\-Cost(s,a,s\pr)\) when we are programming or \(c(s,a,s\pr)\) when we are doing math, that gives the numeric cost of applying action \(a\) in state \(s\) to reach state \(s\pr\).

A sequence of actions forms a path, and a solution is a path from the initial state to a goal state. We assume that action costs are additive; that is, the total cost of a path is the sum of the individual action costs. An optimal solution has the lowest path cost among all solutions.

The state space can be represented as a graph in which the vertices are states and the directed edges between them are actions.
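As a rough illustration, the components above can be mapped onto a small Python class. The class `GraphProblem`, the helper `path_cost`, and the three-state graph are all hypothetical; here an action is simply named after the state it leads to.

```python
class GraphProblem:
    """A search problem over an explicit weighted graph (illustrative only)."""

    def __init__(self, graph, initial, goals):
        self.graph = graph            # state -> {next_state: cost}
        self.initial = initial        # initial state
        self.goals = set(goals)       # one or more goal states

    def is_goal(self, state):
        return state in self.goals

    def actions(self, state):
        # Applicable actions in `state`; each is named by its destination.
        return list(self.graph[state])

    def result(self, state, action):
        # Transition model: the action's name is the resulting state.
        return action

    def action_cost(self, s, a, s_next):
        return self.graph[s][s_next]


def path_cost(problem, actions):
    """Execute `actions` from the initial state; costs are additive."""
    state, total = problem.initial, 0
    for a in actions:
        nxt = problem.result(state, a)
        total += problem.action_cost(state, a, nxt)
        state = nxt
    return total, state


# Hypothetical three-state example.
problem = GraphProblem({"X": {"Y": 2, "Z": 5}, "Y": {"Z": 1}, "Z": {}}, "X", ["Z"])
print(path_cost(problem, ["Y", "Z"]))  # (3, 'Z')
print(path_cost(problem, ["Z"]))       # (5, 'Z')
```

Both action sequences are solutions because they end in a goal state; the first is the optimal one because its path cost is lower.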

3.1.2 Formulating problems

The process of removing detail from a representation is called abstraction. The abstraction is valid if we can elaborate any abstract solution into a solution in the more detailed world. The abstraction is useful if carrying out each of the actions in the solution is easier than the original problem.

3.2 Example Problems

A standardized problem is intended to illustrate or exercise various problem-solving methods. It can be given a concise, exact description and hence is suitable as a benchmark for researchers to compare the performance of algorithms. A real-world problem, such as robot navigation, is one whose solutions people actually use, and whose formulation is idiosyncratic, not standardized, because, for example, each robot has different sensors that produce different data.

3.2.1 Standardized problems

A grid world problem is a two-dimensional rectangular array of square cells in which agents can move from cell to cell.

Vacuum world

Sokoban puzzle

Sliding-tile puzzle

3.2.2 Real-world problems

Route-finding problem

Touring problems

Traveling salesperson problem (TSP)

VLSI layout problem

Robot navigation

Automatic assembly sequencing

3.3 Search Algorithms

A search algorithm takes a search problem as input and returns a solution, or an indication of failure. We consider algorithms that superimpose a search tree over the state-space graph, forming various paths from the initial state, trying to find a path that reaches a goal state. Each node in the search tree corresponds to a state in the state space and the edges in the search tree correspond to actions. The root of the tree corresponds to the initial state of the problem.

The state space describes the (possibly infinite) set of states in the world, and the actions that allow transitions from one state to another. The search tree describes paths between these states, reaching towards the goal. The search tree may have multiple paths to (and thus multiple nodes for) any given state, but each node in the tree has a unique path back to the root (as in all trees).

The frontier separates two regions of the state-space graph: an interior region where every state has been expanded, and an exterior region of states that have not yet been reached.

3.3.1 Best-first search

In best-first search we choose a node, \(n\), with the minimum value of some evaluation function, \(f(n)\).

3.3.2 Search data structures

A node in the tree is represented by a data structure with four components:

\(node.State\) : the state to which the node corresponds;

\(node.Parent\) : the node in the tree that generated this node;

\(node.Action\) : the action that was applied to the parent’s state to generate this node;

\(node.Path\-Cost\): the total cost of the path from the initial state to this node. In mathematical formulas, we use \(g(node)\) as a synonym for \(Path\-Cost\).

Following the \(PARENT\) pointers back from a node allows us to recover the states and actions along the path to that node. Doing this from a goal node gives us the solution.
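The four components and the parent-pointer walk can be sketched as follows. This is a minimal illustration; the `Node` dataclass, the `solution` helper, and the action labels are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    state: Any                       # the state this node corresponds to
    parent: Optional["Node"] = None  # the node that generated this node
    action: Any = None               # action applied to the parent's state
    path_cost: float = 0.0           # g(node): cost from the initial state


def solution(node):
    """Follow parent pointers back to the root and return the actions in order."""
    actions = []
    while node.parent is not None:
        actions.append(node.action)
        node = node.parent
    return list(reversed(actions))


root = Node("A")
n1 = Node("B", parent=root, action="go-B", path_cost=1.0)
n2 = Node("C", parent=n1, action="go-C", path_cost=3.0)
print(solution(n2))  # ['go-B', 'go-C']
```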

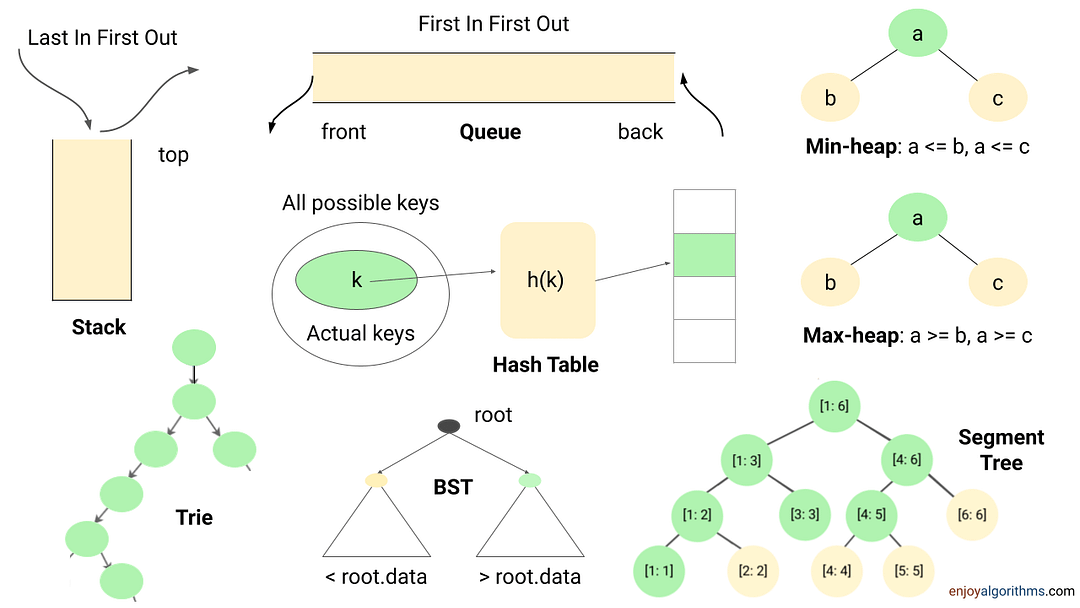

We need a data structure to store the frontier. The appropriate choice is a queue of some kind, because the operations on a frontier are:

\(Is\-Empty(frontier)\) returns true only if there are no nodes in the frontier.

\(Pop(frontier)\) removes the top node from the frontier and returns it.

\(Top(frontier)\) returns (but does not remove) the top node of the frontier.

\(Add(node, frontier)\) inserts node into its proper place in the queue.

Three kinds of queues are used in search algorithms:

A priority queue first pops the node with the minimum cost according to some evaluation function, \(f\). It is used in best-first search.

A FIFO queue or first-in-first-out queue first pops the node that was added to the queue first; we shall see it is used in breadth-first search.

A LIFO queue or last-in-first-out queue (also known as a stack) pops first the most recently added node; we shall see it is used in depth-first search.
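One way to see the difference between the three queues is to load the same items into each and compare the pop order. The sketch below uses Python's standard `heapq` module and `collections.deque`; the item names and \(f\) values are made up for the example.

```python
import heapq
from collections import deque

# (name, f-value) pairs, listed in the order they are added to each queue.
items = [("c", 3), ("a", 1), ("b", 2)]

# Priority queue: always pops the entry with the minimum f-value.
pq = []
for name, f in items:
    heapq.heappush(pq, (f, name))
priority_order = [heapq.heappop(pq)[1] for _ in range(len(items))]

# FIFO queue: pops the entry that was added first.
fifo = deque(name for name, _ in items)
fifo_order = [fifo.popleft() for _ in range(len(items))]

# LIFO queue (stack): pops the most recently added entry.
lifo = [name for name, _ in items]
lifo_order = [lifo.pop() for _ in range(len(items))]

print(priority_order)  # ['a', 'b', 'c']
print(fifo_order)      # ['c', 'a', 'b']
print(lifo_order)      # ['b', 'a', 'c']
```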

3.3.3 Redundant paths

A cycle is a special case of a redundant path.

As the saying goes, algorithms that cannot remember the past are doomed to repeat it. There are three approaches to this issue.

First, we can remember all previously reached states (as best-first search does), allowing us to detect all redundant paths, and keep only the best path to each state.

Second, we can choose not to worry about repeating the past. We call a search algorithm a graph search if it checks for redundant paths and a tree-like search if it does not check.

Third, we can compromise and check for cycles, but not for redundant paths in general.
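The first approach can be sketched as a best-first graph search that keeps a table of reached states and the cheapest known cost to each. This is an illustrative version under assumptions: the graph is a dictionary of `{state: {successor: step_cost}}`, and the evaluation function `f` takes a state and its path cost. Neither convention comes from the source.

```python
import heapq


def best_first_search(graph, start, goal, f):
    """Best-first graph search that keeps only the best path to each state."""
    frontier = [(f(start, 0), start, [start], 0)]
    reached = {start: 0}  # cheapest path cost found so far for each state
    while frontier:
        _, state, path, g = heapq.heappop(frontier)
        if state == goal:
            return path, g
        if g > reached[state]:
            continue  # stale entry: a cheaper path to this state was found later
        for nxt, cost in graph[state].items():
            g2 = g + cost
            if nxt not in reached or g2 < reached[nxt]:
                reached[nxt] = g2
                heapq.heappush(frontier, (f(nxt, g2), nxt, path + [nxt], g2))
    return None  # failure: the goal is not reachable


graph = {
    "A": {"B": 1, "C": 4},
    "B": {"C": 2, "D": 5},
    "C": {"D": 1},
    "D": {},
}
# Using the path cost itself as the evaluation function.
result = best_first_search(graph, "A", "D", f=lambda state, g: g)
print(result)  # (['A', 'B', 'C', 'D'], 4)
```

Here state "C" is reached twice (directly from "A" at cost 4, and through "B" at cost 3); the `reached` table is what lets the search discard the more expensive redundant path.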

3.3.4 Measuring problem-solving performance

COMPLETENESS : Is the algorithm guaranteed to find a solution when there is one, and to correctly report failure when there is not?

COST OPTIMALITY : Does it find a solution with the lowest path cost of all solutions?

TIME COMPLEXITY : How long does it take to find a solution?

SPACE COMPLEXITY : How much memory is needed to perform the search?

To be complete, a search algorithm must be systematic in the way it explores an infinite state space, making sure it can eventually reach any state that is connected to the initial state.

In theoretical computer science, the typical measure of time and space complexity is the size of the state-space graph, \(|V|+|E|\), where \(|V|\) is the number of vertices (state nodes) of the graph and \(|E|\) is the number of edges (distinct state/action pairs). For an implicit state space, complexity can be measured in terms of \(d\), the depth or number of actions in an optimal solution; \(m\), the maximum number of actions in any path; and \(b\), the branching factor or number of successors of a node that need to be considered.

3.4 Uninformed Search Strategies

3.4.1 Breadth-first search

When all actions have the same cost, an appropriate strategy is breadth-first search, in which the root node is expanded first, then all the successors of the root node are expanded next, then their successors, and so on.

Breadth-first search always finds a solution with a minimal number of actions, because when it is generating nodes at depth \(d\), it has already generated all the nodes at depth \(d-1\), so if one of them were a solution, it would have been found.

All the nodes remain in memory, so both time and space complexity are \(O(b^d)\). The memory requirements are a bigger problem for breadth-first search than the execution time. In general, exponential-complexity search problems cannot be solved by uninformed search for any but the smallest instances.
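A minimal breadth-first search sketch, assuming the state space is given as an adjacency-list dictionary (a convention chosen here for illustration). It uses a FIFO queue for the frontier and tests for the goal when a node is generated, which is safe because all actions cost the same.

```python
from collections import deque


def breadth_first_search(graph, start, goal):
    """Return a path with the fewest actions from start to goal, or None."""
    if start == goal:
        return [start]
    frontier = deque([[start]])  # FIFO queue of paths
    reached = {start}
    while frontier:
        path = frontier.popleft()
        for nxt in graph[path[-1]]:
            if nxt == goal:
                return path + [nxt]  # early goal test on generation
            if nxt not in reached:
                reached.add(nxt)
                frontier.append(path + [nxt])
    return None


graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}
print(breadth_first_search(graph, "A", "F"))  # ['A', 'B', 'D', 'F']
```

Storing whole paths in the frontier keeps the sketch short; a fuller implementation would usually store nodes with parent pointers instead.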

3.4.2 Dijkstra’s algorithm or uniform-cost search

When actions have different costs, an obvious choice is to use best-first search where the evaluation function is the cost of the path from the root to the current node. This is called Dijkstra’s algorithm by the theoretical computer science community, and uniform-cost search by the AI community.

The complexity of uniform-cost search is characterized in terms of \(C^*\), the cost of the optimal solution, and \(\epsilon\), a lower bound on the cost of each action, with \(\epsilon>0\). Then the algorithm’s worst-case time and space complexity is \(O(b^{1+\lfloor C^*/\epsilon\rfloor})\), which can be much greater than \(b^d\).

When all action costs are equal, \(b^{1+\lfloor C^*/\epsilon\rfloor}\) is just \(b^{d+1}\), and uniform-cost search is similar to breadth-first search.
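The step behind the equal-cost case can be spelled out, assuming every action costs exactly \(\epsilon\) and the optimal solution consists of \(d\) actions:

\[
C^* = d\,\epsilon
\quad\Longrightarrow\quad
1 + \left\lfloor \frac{C^*}{\epsilon} \right\rfloor = 1 + d
\quad\Longrightarrow\quad
O\!\left(b^{1+\lfloor C^*/\epsilon\rfloor}\right) = O\!\left(b^{d+1}\right).
\]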

3.4.3 Depth-first search and the problem of memory

Depth-first search always expands the deepest node in the frontier first. It could be implemented as a call to \(Best\-First\-Search\) where the evaluation function \(f\) is the negative of the depth.

For problems where a tree-like search is feasible, depth-first search has much smaller needs for memory. A depth-first tree-like search takes time proportional to the number of states, and has memory complexity of only \(O(bm)\), where \(b\) is the branching factor and \(m\) is the maximum depth of the tree.

A variant of depth-first search called backtracking search uses even less memory.

3.4.4 Depth-limited and iterative deepening search

To keep depth-first search from wandering down an infinite path, we can use depth-limited search, a version of depth-first search in which we supply a depth limit, \(l\), and treat all nodes at depth \(l\) as if they had no successors. The time complexity is \(O(b^l)\) and the space complexity is \(O(bl)\).

Iterative deepening search solves the problem of picking a good value for \(l\) by trying all values: first 0, then 1, then 2, and so on, until either a solution is found or the depth-limited search returns the failure value rather than the cutoff value.

Its memory requirements are modest: \(O(bd)\) when there is a solution, or \(O(bm)\) on finite state spaces with no solution. The time complexity is \(O(b^d)\) when there is a solution, or \(O(b^m)\) when there is none.
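The distinction between the cutoff value and the failure value can be sketched as below. This is an illustrative recursive version, not the textbook pseudocode: the `CUTOFF` sentinel, the use of `None` for failure, and the adjacency-list graph are assumptions made for the example. It checks for cycles along the current path only.

```python
CUTOFF = "cutoff"  # sentinel: the depth limit was hit somewhere below


def depth_limited_search(graph, state, goal, limit, path=None):
    """Return a path to goal, CUTOFF if the limit was hit, or None on failure."""
    path = (path or []) + [state]
    if state == goal:
        return path
    if limit == 0:
        return CUTOFF  # treat this node as if it had no successors
    cutoff_seen = False
    for nxt in graph[state]:
        if nxt in path:
            continue  # cycle check along the current path only
        result = depth_limited_search(graph, nxt, goal, limit - 1, path)
        if result == CUTOFF:
            cutoff_seen = True
        elif result is not None:
            return result
    return CUTOFF if cutoff_seen else None


def iterative_deepening_search(graph, start, goal):
    """Try depth limits 0, 1, 2, ... until a solution or a true failure."""
    limit = 0
    while True:
        result = depth_limited_search(graph, start, goal, limit)
        if result != CUTOFF:
            return result
        limit += 1


graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}
print(iterative_deepening_search(graph, "A", "F"))  # ['A', 'B', 'D', 'F']
```

On a finite graph the loop terminates even when no solution exists, because once the limit exceeds the longest cycle-free path no call returns `CUTOFF`.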

In general, iterative deepening is the preferred uninformed search method when the search state space is larger than can fit in memory and the depth of the solution is not known.

3.4.5 Bidirectional search

An alternative approach called bidirectional search simultaneously searches forward from the initial state and backwards from the goal state(s), hoping that the two searches will meet.

3.4.6 Comparing uninformed search algorithms

3.5 Informed (Heuristic) Search Strategies

An informed search strategy uses domain-specific hints about the location of goals to find solutions more efficiently than an uninformed strategy. The hints come in the form of a heuristic function, denoted \(h(n)\):

\(h(n)\) = estimated cost of the cheapest path from the state at node \(n\) to a goal state.

3.5.1 Greedy best-first search

Greedy best-first search is a form of best-first search that expands first the node with the lowest \(h(n)\) value (the node that appears to be closest to the goal) on the grounds that this is likely to lead to a solution quickly. So the evaluation function is \(f(n)=h(n)\).
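A small sketch of greedy best-first search, ordering the frontier purely by \(h(n)\). The graph and the heuristic table `h` are invented values for illustration, and the function name is a hypothetical choice.

```python
import heapq


def greedy_best_first_search(graph, h, start, goal):
    """Expand the node with the lowest heuristic value first: f(n) = h(n)."""
    frontier = [(h[start], start, [start])]
    reached = {start}
    while frontier:
        _, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        for nxt in graph[state]:
            if nxt not in reached:
                reached.add(nxt)
                heapq.heappush(frontier, (h[nxt], nxt, path + [nxt]))
    return None


graph = {"S": ["A", "B"], "A": ["G"], "B": ["G"], "G": []}
h = {"S": 5, "A": 2, "B": 4, "G": 0}  # made-up estimates of distance to G
print(greedy_best_first_search(graph, h, "S", "G"))  # ['S', 'A', 'G']
```

Because path cost is ignored entirely, the path returned is the one that looked closest at each step, which is not necessarily the cheapest.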

Search Algorithms

A search algorithm is a type of algorithm used in artificial intelligence to find the best solution to a problem by exploring a set of possible solutions, also called a search space. A search algorithm filters through a large number of possibilities to find a solution that works best for a given set of constraints.

Search algorithms typically operate by organizing the search space into a particular type of graph, commonly a tree, and evaluating the score, or cost, of traversing each branch of the tree. A solution is a path from the start state to the goal state that optimizes the cost given the parameters of the implemented algorithm.

Search algorithms are typically organized into two categories:

Uninformed Search: Algorithms that are general purpose traversals of the state space or search tree without any information about how good a state is. These are also referred to as blind search algorithms.

Informed Search: Algorithms that have information about the goal during the traversal, allowing the search to prioritize its expansion toward the goal state instead of exploring directions that may yield a favorable cost but don’t lead to the goal, or global optimum. By including extra rules that aid in estimating the location of the goal (known as heuristics) informed search algorithms can be more computationally efficient when searching for a path to the goal state.

Types of Search Algorithms

There are many types of search algorithms used in artificial intelligence, each with their own strengths and weaknesses. Some of the most common types of search algorithms include:

Depth-First Search (DFS)

This algorithm explores as far as possible along each branch before backtracking. DFS is often used in combination with other search algorithms, such as iterative deepening search, to find the optimal solution. Think of DFS as a traversal pattern that focuses on digging as deep as possible before exploring other paths.

Breadth-First Search (BFS)

This algorithm explores all the neighbor nodes at the current level before moving on to the nodes at the next level. Think of BFS as a traversal pattern that tries to explore broadly across many different paths at the same time.

Uniform Cost Search (UCS)

This algorithm expands the lowest cumulative cost from the start, continuing to explore all possible paths in order of increasing cost. UCS is guaranteed to find the optimal path between the start and goal nodes, as long as the cost of each edge is non-negative. However, it can be computationally expensive when exploring a large search space, as it explores all possible paths in order of increasing cost.

Heuristic Search

This algorithm uses a heuristic function to guide the search towards the optimal solution. A* search, one of the most popular heuristic search algorithms, uses both the actual cost of getting to a node and an estimate of the cost to reach the goal from that node.
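To make the combination of actual cost and estimate concrete, here is a hedged A* sketch in which the priority of a node is \(g + h\): the cost so far plus the heuristic estimate. The weighted graph and the heuristic table are invented for the example; the heuristic values were chosen so that they never overestimate the true remaining cost.

```python
import heapq


def a_star_search(graph, h, start, goal):
    """A* search: order the frontier by g (cost so far) + h (estimate to goal)."""
    frontier = [(h[start], 0, start, [start])]
    best_g = {start: 0}
    while frontier:
        _, g, state, path = heapq.heappop(frontier)
        if state == goal:
            return path, g
        if g > best_g[state]:
            continue  # stale entry: a cheaper route to this state exists
        for nxt, cost in graph[state].items():
            g2 = g + cost
            if g2 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g2
                heapq.heappush(frontier, (g2 + h[nxt], g2, nxt, path + [nxt]))
    return None


graph = {
    "S": {"A": 1, "B": 4},
    "A": {"B": 2, "G": 6},
    "B": {"G": 1},
    "G": {},
}
h = {"S": 4, "A": 3, "B": 1, "G": 0}  # illustrative, non-overestimating values
print(a_star_search(graph, h, "S", "G"))  # (['S', 'A', 'B', 'G'], 4)
```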

Application of Search Algorithms

Search algorithms are used in various fields of artificial intelligence, including:

Pathfinding

Pathfinding problems involve finding the shortest path between two points in a given graph or network. BFS or A* search can be used to explore a graph and find the optimal path.

Optimization

In optimization problems, the goal is to find the minimum or maximum value of a function, subject to some constraints. Search algorithms such as hill climbing or simulated annealing are often used in optimization cases.

Game Playing

In game playing, search algorithms are used to evaluate all possible moves and choose the one that is most likely to lead to a win, or the best possible outcome. This is done by constructing a search tree where each node represents a game state and the edges represent the moves that can be taken to reach the associated new game state.



Searching Algorithms

Searching algorithms are essential tools in computer science used to locate specific items within a collection of data. These algorithms are designed to efficiently navigate through data structures to find the desired information, making them fundamental in various applications such as databases, web search engines, and more.

Table of Content

What is Searching?

- Searching terminologies

- Importance of Searching in DSA

- Applications of Searching

- Basics of Searching Algorithms

- Comparisons Between Different Searching Algorithms

- Library Implementations of Searching Algorithms

- Easy Problems on Searching

- Medium Problems on Searching

- Hard Problems on Searching

Searching is the fundamental process of locating a specific element or item within a collection of data. This collection of data can take various forms, such as arrays, lists, trees, or other structured representations. The primary objective of searching is to determine whether the desired element exists within the data, and if so, to identify its precise location or retrieve it. It plays an important role in various computational tasks and real-world applications, including information retrieval, data analysis, decision-making processes, and more.

Searching terminologies:

Target element:

In searching, there is always a specific target element or item that you want to find within the data collection. This target could be a value, a record, a key, or any other data entity of interest.

Search Space:

The search space refers to the entire collection of data within which you are looking for the target element. Depending on the data structure used, the search space may vary in size and organization.

Complexity:

Searching can have different levels of complexity depending on the data structure and the algorithm used. The complexity is often measured in terms of time and space requirements.

Deterministic vs. Non-deterministic:

Searching algorithms such as binary search and linear search are deterministic: for a given input they follow the same clear, systematic sequence of steps, although linear search may need to examine the entire search space in the worst case. Non-deterministic (for example, randomized) searches may follow different steps on different runs.
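The terms above can be illustrated with two classic searches over the same data. This is a sketch only: the function names and sample list are chosen here for the example, and the binary search relies on `bisect_left` from Python's standard `bisect` module, which requires sorted input.

```python
from bisect import bisect_left


def linear_search(items, target):
    """Scan the search space front to back; O(n) comparisons in the worst case."""
    for i, value in enumerate(items):
        if value == target:
            return i
    return -1


def binary_search(sorted_items, target):
    """Halve the search space each step; O(log n), but the input must be sorted."""
    i = bisect_left(sorted_items, target)
    if i < len(sorted_items) and sorted_items[i] == target:
        return i
    return -1


data = [2, 3, 5, 7, 11, 13]    # the search space (already sorted)
print(linear_search(data, 7))  # 3
print(binary_search(data, 7))  # 3
print(binary_search(data, 4))  # -1 (target not present)
```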

Importance of Searching in DSA:

- Efficiency: Efficient searching algorithms improve program performance.

- Data Retrieval: Quickly find and retrieve specific data from large datasets.

- Database Systems: Enables fast querying of databases.

- Problem Solving: Used in a wide range of problem-solving tasks.

Applications of Searching:

Searching algorithms have numerous applications across various fields. Here are some common applications:

- Information Retrieval: Search engines like Google, Bing, and Yahoo use sophisticated searching algorithms to retrieve relevant information from vast amounts of data on the web.

- Database Systems: Searching is fundamental in database systems for retrieving specific data records based on user queries, improving efficiency in data retrieval.

- E-commerce: Searching is crucial in e-commerce platforms for users to find products quickly based on their preferences, specifications, or keywords.

- Networking: In networking, searching algorithms are used for routing packets efficiently through networks, finding optimal paths, and managing network resources.

- Artificial Intelligence: Searching algorithms play a vital role in AI applications, such as problem-solving, game playing (e.g., chess), and decision-making processes.

- Pattern Recognition: Searching algorithms are used in pattern matching tasks, such as image recognition, speech recognition, and handwriting recognition.

Basics of Searching Algorithms:

- Introduction to Searching – Data Structure and Algorithm Tutorial

- Importance of searching in Data Structure

- What is the purpose of the search algorithm?

Searching Algorithms:

- Meta Binary Search | One-Sided Binary Search

- The Ubiquitous Binary Search

Comparisons Between Different Searching Algorithms:

- Linear Search vs Binary Search

- Interpolation search vs Binary search

- Why is Binary Search preferred over Ternary Search?

- Is Sentinel Linear Search better than normal Linear Search?

Library Implementations of Searching Algorithms:

- Binary Search functions in C++ STL (binary_search, lower_bound and upper_bound)

- Arrays.binarySearch() in Java with examples | Set 1

- Arrays.binarySearch() in Java with examples | Set 2 (Search in subarray)

- Collections.binarySearch() in Java with Examples

Easy Problems on Searching:

- Find the largest three elements in an array

- Find the Missing Number

- Find the first repeating element in an array of integers

- Find the missing and repeating number

- Search, insert and delete in a sorted array

- Count 1’s in a sorted binary array

- Two elements whose sum is closest to zero

- Find a pair with the given difference

- k largest(or smallest) elements in an array

- Kth smallest element in a row-wise and column-wise sorted 2D array

- Find common elements in three sorted arrays

- Ceiling in a sorted array

- Floor in a Sorted Array

- Find the maximum element in an array which is first increasing and then decreasing

- Given an array of size n and a number k, find all elements that appear more than n/k times

Medium Problems on Searching:

- Find all triplets with zero sum

- Find the element before which all the elements are smaller than it, and after which all are greater

- Find the largest pair sum in an unsorted array

- K’th Smallest/Largest Element in Unsorted Array

- Search an element in a sorted and rotated array

- Find the minimum element in a sorted and rotated array

- Find a peak element

- Maximum and minimum of an array using minimum number of comparisons

- Find a Fixed Point in a given array

- Find the k most frequent words from a file

- Find k closest elements to a given value

- Given a sorted array and a number x, find the pair in array whose sum is closest to x

- Find the closest pair from two sorted arrays

- Find three closest elements from given three sorted arrays

- Binary Search for Rational Numbers without using floating point arithmetic

Hard Problems on Searching:

- Median of two sorted arrays

- Median of two sorted arrays of different sizes

- Search in an almost sorted array

- Find position of an element in a sorted array of infinite numbers

- Given a sorted and rotated array, find if there is a pair with a given sum

- K’th Smallest/Largest Element in Unsorted Array | Worst case Linear Time

- K’th largest element in a stream

- Best First Search (Informed Search)


| Search algorithms in AI are the algorithms that are created to aid the searchers in getting the right solution. The search issue contains search space, first start and end point. Now by performing simulation of scenarios and alternatives, searching algorithms help AI agents find the optimal state for the task. Logic used in algorithms processes the initial state and tries to get the expected state as the solution. Because of this, AI machines and applications just functioning using search engines and solutions that come from these algorithms can only be as effective as the algorithms. AI agents can make the AI interfaces usable without any software literacy. The agents that carry out such activities do so with the aim of reaching an end goal and develop action plans that in the end will bring the mission to an end. Completion of the action is gained after the steps of these different actions. The AI-agents finds the best way through the process by evaluating all the alternatives which are present. Search systems are a common task in artificial intelligence by which you are going to find the optimum solution for the AI agents. In Artificial Intelligence, Search techniques are universal problem-solving methods. or in AI mostly used these search strategies or algorithms to solve a specific problem and provide the best result. Problem-solving agents are the goal-based agents and use atomic representation. In this topic, we will learn various problem-solving search algorithms. Searchingis a step by step procedure to solve a search-problem in a given search space. A search problem can have three main factors: Search space represents a set of possible solutions, which a system may have. It is a state from where agent begins . It is a function which observe the current state and returns whether the goal state is achieved or not. A tree representation of search problem is called Search tree. 
The root of the search tree is the root node which is corresponding to the initial state. It gives the description of all the available actions to the agent. A description of what each action do, can be represented as a transition model. It is a function which assigns a numeric cost to each path. It is an action sequence which leads from the start node to the goal node. If a solution has the lowest cost among all solutions.Following are the four essential properties of search algorithms to compare the efficiency of these algorithms: A search algorithm is said to be complete if it guarantees to return a solution if at least any solution exists for any random input. If a solution found for an algorithm is guaranteed to be the best solution (lowest path cost) among all other solutions, then such a solution for is said to be an optimal solution. Time complexity is a measure of time for an algorithm to complete its task. It is the maximum storage space required at any point during the search, as the complexity of the problem. Here, are some important factors of role of search algorithms used AI are as follow.

"Workflow" logical search methods like describing the issue, getting the necessary steps together, and specifying an area to search help AI search algorithms getting better in solving problems. Take for instance the development of AI search algorithms which support applications like Google Maps by finding the fastest way or shortest route between given destinations. These programs basically conduct the search through various options to find the best solution possible.

Many AI functions can be designed as search oscillations, which thus specify what to look for in formulating the solution of the given problem.

Instead, the goal-directed and high-performance systems use a wide range of search algorithms to improve the efficiency of AI. Though they are not robots, these agents look for the ideal route for action dispersion so as to avoid the most impacting steps that can be used to solve a problem. It is their main aims to come up with an optimal solution which takes into account all possible factors.

AI Algorithms in search engines for systems manufacturing help them run faster. These programmable systems assist AI applications with applying rules and methods, thus making an effective implementation possible. Production systems involve learning of artificial intelligence systems and their search for canned rules that lead to the wanted action.

Beyond this, employing neural network algorithms is also of importance of the neural network systems. The systems are composed of these structures: a hidden layer, and an input layer, an output layer, and nodes that are interconnected. One of the most important functions offered by neural networks is to address the challenges of AI within any given scenarios. AI is somehow able to navigate the search space to find the connection weights that will be required in the mapping of inputs to outputs. This is made better by search algorithms in AI.

The uninformed search does not contain any domain knowledge such as closeness, the location of the goal. It operates in a brute-force way as it only includes information about how to traverse the tree and how to identify leaf and goal nodes. Uninformed search applies a way in which search tree is searched without any information about the search space like initial state operators and test for the goal, so it is also called blind search.It examines each node of the tree until it achieves the goal node.

Informed search algorithms use domain knowledge. In an informed search, problem information is available that can guide the search, so informed strategies can find a solution more efficiently than uninformed ones. Informed search is also called heuristic search. A heuristic is a technique that is not guaranteed to find the best solution but aims to find a good solution in reasonable time. Informed search can solve much more complex problems that could not be solved otherwise. The traveling salesman problem is an example of a problem commonly tackled with informed search.


Using Uninformed & Informed Search Algorithms to Solve 8-Puzzle (n-Puzzle) in Python

- July 6, 2017 at 3:30 am

This problem appeared as a project in the edX course ColumbiaX: CSMM.101x Artificial Intelligence (AI). In this assignment, an agent is implemented to solve the 8-puzzle game (and the game generalized to an n × n array).

The following description of the problem is taken from the course:

I. Introduction

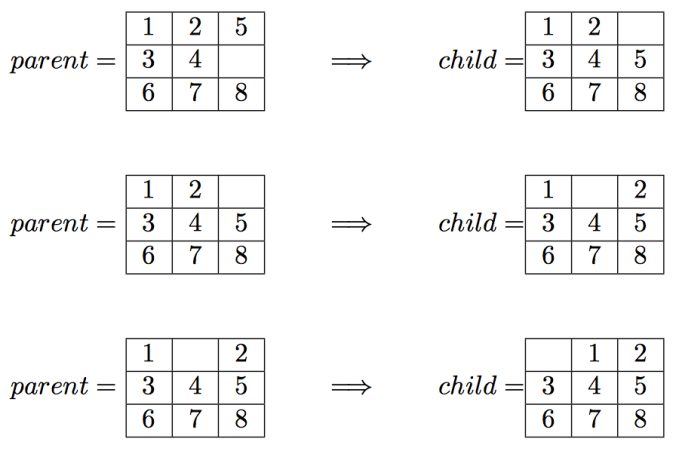

An instance of the n-puzzle game consists of a board holding n^2−1 distinct movable tiles, plus an empty space. The tiles are numbered from the set {1, …, n^2−1}. For any such board, the empty space may be legally swapped with any tile horizontally or vertically adjacent to it. In this assignment, the blank space is represented by the number 0. Given an initial state of the board, the combinatorial search problem is to find a sequence of moves that transitions this state to the goal state; that is, the configuration with all tiles arranged in ascending order 0, 1, …, n^2−1. The search space is the set of all possible states reachable from the initial state. The blank space may be swapped with a tile in one of the four directions {'Up', 'Down', 'Left', 'Right'}, one move at a time. Every move from one configuration of the board to another has the same cost, equal to one. Thus, the total cost of a path is equal to the number of moves made from the initial state to the goal state.
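The board and its legal moves can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the board is stored as a row-major tuple, and the names `goal_state`, `neighbors`, and `MOVES` are hypothetical helpers rather than part of the course material.

```python
from typing import Iterator, Tuple

State = Tuple[int, ...]  # row-major board; 0 marks the blank space

# (move name, row delta, column delta) applied to the blank.
# The ordering of the moves is an assumption made for this sketch.
MOVES = (("Up", -1, 0), ("Down", 1, 0), ("Left", 0, -1), ("Right", 0, 1))


def goal_state(n: int) -> State:
    """Goal configuration 0, 1, ..., n*n - 1 in row-major order."""
    return tuple(range(n * n))


def neighbors(state: State, n: int) -> Iterator[Tuple[str, State]]:
    """Yield (move, next_state) for every legal swap of the blank."""
    blank = state.index(0)
    row, col = divmod(blank, n)
    for name, d_row, d_col in MOVES:
        r, c = row + d_row, col + d_col
        if 0 <= r < n and 0 <= c < n:  # stay on the board
            target = r * n + c
            board = list(state)
            board[blank], board[target] = board[target], board[blank]
            yield name, tuple(board)


if __name__ == "__main__":
    start = (1, 2, 5, 3, 4, 0, 6, 7, 8)
    for move, nxt in neighbors(start, 3):
        print(move, nxt)
```

Storing states as tuples keeps them hashable, so they can later be placed in sets and dictionaries when tracking visited states.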

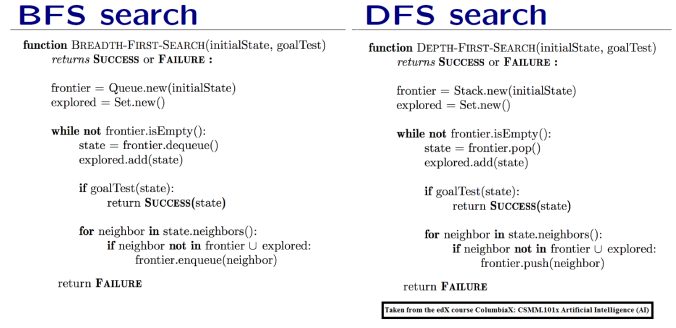

II. Algorithm Review

The searches begin by visiting the root node of the search tree, given by the initial state. Among other book-keeping details, three major things happen in sequence in order to visit a node:

- First, we remove a node from the frontier set.

- Second, we check the state against the goal state to determine if a solution has been found.

- Finally, if the result of the check is negative, we then expand the node. To expand a given node, we generate successor nodes adjacent to the current node, and add them to the frontier set. Note that if these successor nodes are already in the frontier, or have already been visited, then they should not be added to the frontier again.

This describes the life-cycle of a visit, and is the basic order of operations for search agents in this assignment—(1) remove, (2) check, and (3) expand. In this assignment, we will implement algorithms as described here.
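The remove, check, expand cycle can be sketched for breadth-first search as follows. This is a minimal sketch, not the course's reference solution: the function names `successors` and `bfs` are hypothetical, the goal is assumed to be 0, 1, …, n^2−1, and the number of expanded nodes depends on the move ordering chosen here.

```python
from collections import deque


def successors(state, n):
    """Yield (move, next_state) pairs for sliding the blank (0) on an n x n board."""
    blank = state.index(0)
    row, col = divmod(blank, n)
    for name, d_row, d_col in (("Up", -1, 0), ("Down", 1, 0),
                               ("Left", 0, -1), ("Right", 0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < n and 0 <= c < n:
            board = list(state)
            board[blank], board[r * n + c] = board[r * n + c], board[blank]
            yield name, tuple(board)


def bfs(start, n=3):
    """Return (path_to_goal, nodes_expanded), or (None, nodes_expanded) if unsolvable."""
    goal = tuple(range(n * n))
    frontier = deque([(start, [])])
    seen = {start}  # states that are in the frontier or were already visited
    nodes_expanded = 0
    while frontier:
        state, path = frontier.popleft()        # (1) remove
        if state == goal:                       # (2) check
            return path, nodes_expanded
        nodes_expanded += 1                     # (3) expand
        for move, nxt in successors(state, n):
            if nxt not in seen:                 # never re-add a known state
                seen.add(nxt)
                frontier.append((nxt, path + [move]))
    return None, nodes_expanded


if __name__ == "__main__":
    path, expanded = bfs((1, 2, 5, 3, 4, 0, 6, 7, 8))
    print("path_to_goal:", path)
    print("cost_of_path:", len(path))
```

Swapping the `deque` for a stack (popping from the right) would turn the same loop into depth-first search, which is why the three-step cycle is a useful common skeleton.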

III. What the Program Needs to Output

Example: breadth-first search.

The output file should contain exactly the following lines:

path_to_goal: ['Up', 'Left', 'Left']

cost_of_path: 3

nodes_expanded: 10

fringe_size: 11

max_fringe_size: 12

search_depth: 3

max_search_depth: 4

running_time: 0.00188088

max_ram_usage: 0.07812500

The following algorithms, taken from the lecture slides of the same course, are going to be implemented.

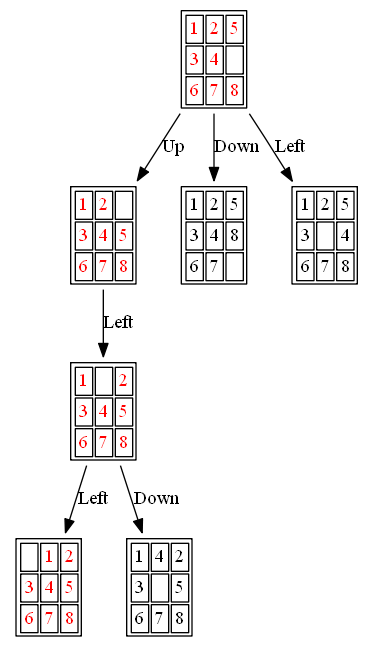

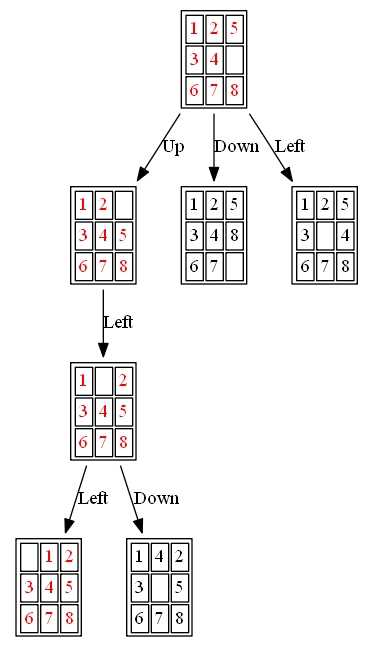

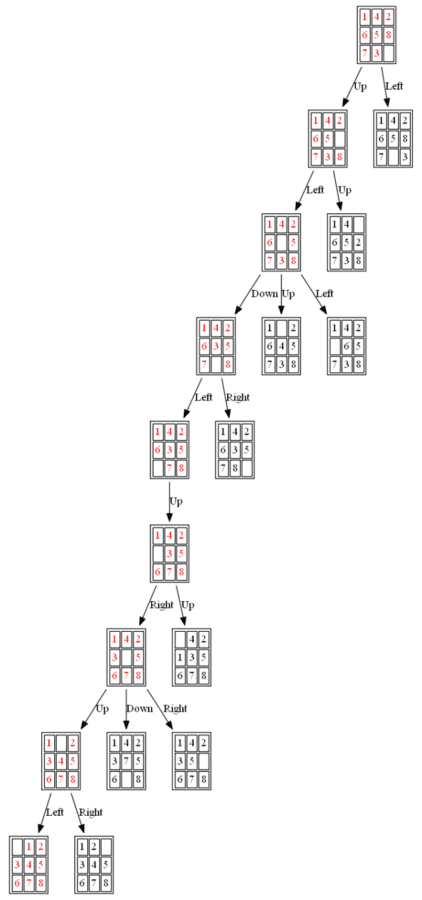

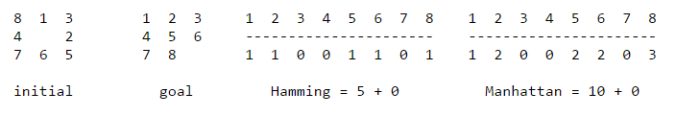

The following figures and animations show how the 8-puzzle was solved starting from different initial states with different algorithms. For A* and ID-A* search we are going to use the Manhattan heuristic, which is an admissible heuristic for this problem. The figures also display the search paths from the starting state to the goal node (the states with red text denote the path chosen). Let's start with a very simple example. As can be seen, with this simple example all the algorithms find the same path to the goal node from the initial state.
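As a rough illustration of how the Manhattan heuristic plugs into A*, here is a minimal sketch. It assumes the goal state 0, 1, …, n^2−1 from the problem description; the function names `manhattan`, `successors`, and `astar` are hypothetical, and the tie-breaking rule is an arbitrary choice, so the set of expanded nodes may differ from the figures.

```python
import heapq
from itertools import count


def manhattan(state, n):
    """Sum of row and column distances of each tile from its goal cell.

    With the goal 0, 1, ..., n*n - 1, tile t belongs at index t.
    The blank (0) is not counted.
    """
    total = 0
    for index, tile in enumerate(state):
        if tile != 0:
            row, col = divmod(index, n)
            goal_row, goal_col = divmod(tile, n)
            total += abs(row - goal_row) + abs(col - goal_col)
    return total


def successors(state, n):
    """Yield (move, next_state) pairs for sliding the blank (0)."""
    blank = state.index(0)
    row, col = divmod(blank, n)
    for name, d_row, d_col in (("Up", -1, 0), ("Down", 1, 0),
                               ("Left", 0, -1), ("Right", 0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < n and 0 <= c < n:
            board = list(state)
            board[blank], board[r * n + c] = board[r * n + c], board[blank]
            yield name, tuple(board)


def astar(start, n=3):
    """Return a shortest list of moves to the goal, or None if unreachable."""
    goal = tuple(range(n * n))
    tie = count()  # unique counter so the heap never compares states or paths
    frontier = [(manhattan(start, n), next(tie), 0, start, [])]
    best_cost = {start: 0}
    while frontier:
        _, _, cost, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        if cost > best_cost[state]:
            continue  # stale entry: a cheaper route to this state was found
        for move, nxt in successors(state, n):
            new_cost = cost + 1  # every move costs one
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                priority = new_cost + manhattan(nxt, n)
                heapq.heappush(frontier,
                               (priority, next(tie), new_cost, nxt, path + [move]))
    return None


if __name__ == "__main__":
    print(astar((1, 2, 5, 3, 4, 0, 6, 7, 8)))
```

For the initial state of Example 1 the heuristic value is 3, which equals the true solution length, so A* can head almost straight for the goal.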

Example 1: Initial State: 1,2,5,3,4,0,6,7,8

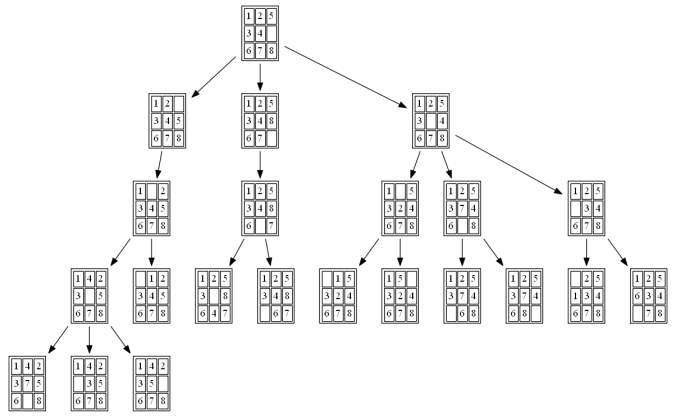

The nodes expanded by BFS (also the nodes that are in the fringe / frontier of the queue) are shown in the following figure:

The path to the goal node (as well as the nodes expanded) with ID-A* is shown in the following figure:

Now let's try some slightly more complex examples:

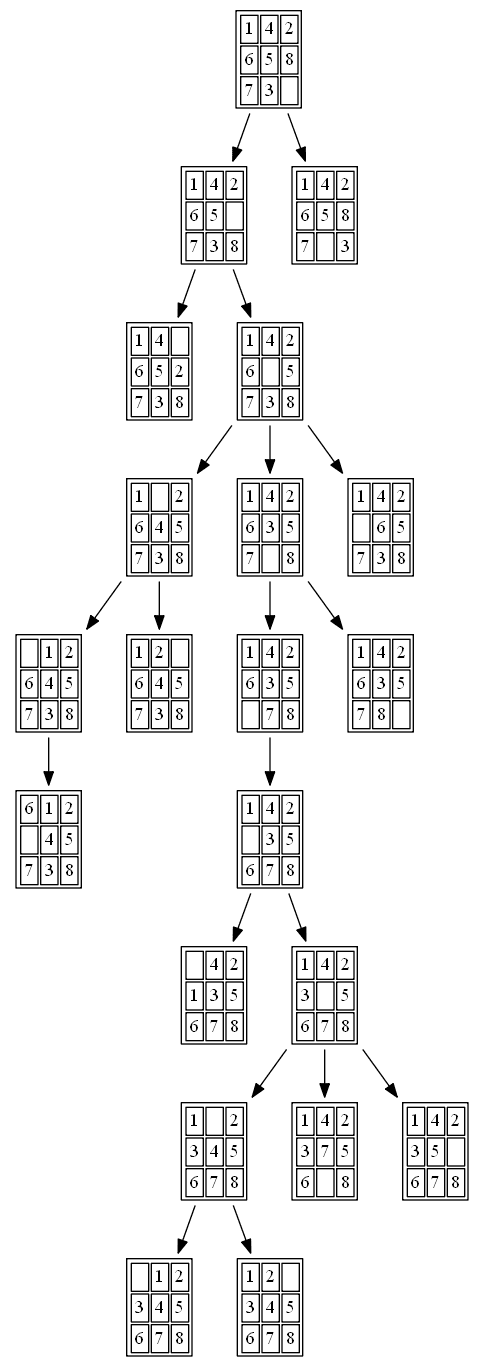

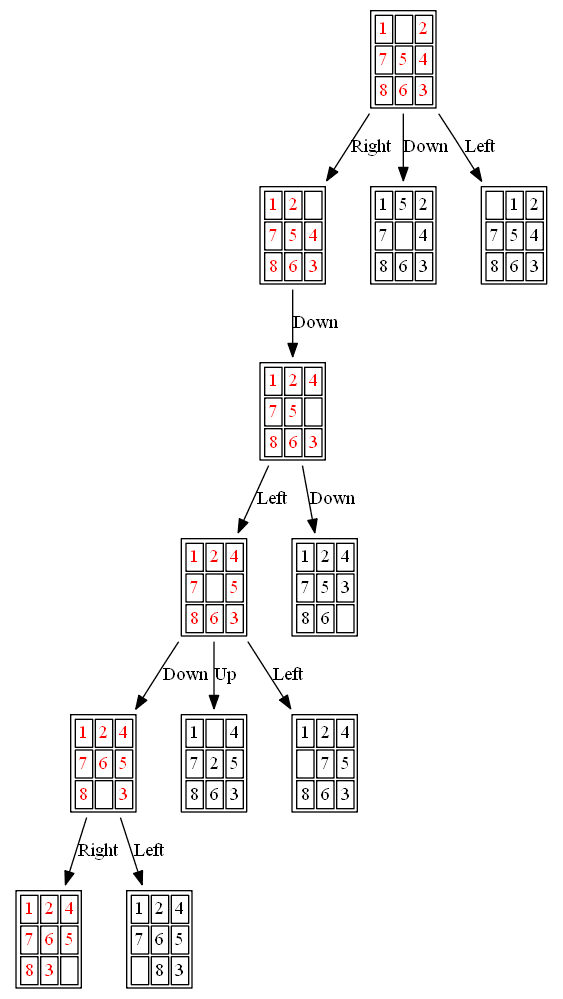

Example 2: Initial State: 1,4,2,6,5,8,7,3,0

The path to the goal node with A* is shown in the following figure:

All the nodes expanded by A* (also the nodes that are in the fringe / frontier of the queue) are shown in the following figure:

The path to the goal node with BFS is shown in the following figure:

All the nodes expanded by BFS are shown in the following figure:

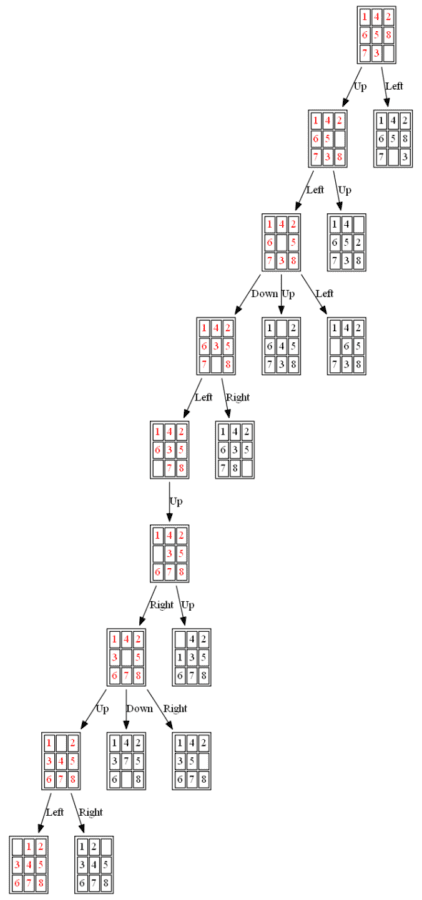

Example 3: Initial State: 1,0,2,7,5,4,8,6,3

The path to the goal node with A* is shown in the following figures:

The nodes expanded by A* (also the nodes that are in the fringe / frontier of the priority queue) are shown in the following figure (the tree is huge, use zoom to view it properly):

The nodes expanded by ID-A* are shown in the following figure (again the tree is huge, use zoom to view it properly):

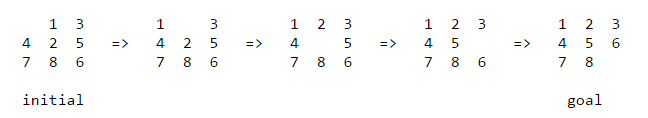

The same problem (with a little variation) also appeared as a programming exercise in the Coursera course Algorithms, Part I (by Prof. Robert Sedgewick, Princeton). The description of the problem taken from the assignment is shown below (notice that the goal state is different in this version of the same problem):

Write a program to solve the 8-puzzle problem (and its natural generalizations) using the A* search algorithm.

- Hamming priority function. The number of blocks in the wrong position, plus the number of moves made so far to get to the state. Intuitively, a state with a small number of blocks in the wrong position is close to the goal state, and we prefer a state that has been reached using a small number of moves.

- Manhattan priority function. The sum of the distances (sum of the vertical and horizontal distance) from the blocks to their goal positions, plus the number of moves made so far to get to the state.
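The two priority functions can be sketched as below. Note the assumption: in this version the goal is taken to be 1, 2, …, n^2−1 followed by the blank (0) in the last cell, which is how the different goal state mentioned above is interpreted here. The function names are hypothetical.

```python
def hamming(state, n):
    """Number of blocks (blank excluded) that are not in their goal cell.

    Assumes the goal 1, 2, ..., n*n - 1, 0, so block t belongs at index t - 1.
    The parameter n is unused but kept so both heuristics share a signature.
    """
    return sum(1 for index, tile in enumerate(state)
               if tile != 0 and tile != index + 1)


def manhattan(state, n):
    """Sum of vertical and horizontal distances of blocks to their goal cells."""
    total = 0
    for index, tile in enumerate(state):
        if tile != 0:
            row, col = divmod(index, n)
            goal_row, goal_col = divmod(tile - 1, n)
            total += abs(row - goal_row) + abs(col - goal_col)
    return total


def priority(state, n, moves_so_far, heuristic):
    """Priority of a search node: heuristic value plus moves made so far."""
    return heuristic(state, n) + moves_so_far


if __name__ == "__main__":
    board = (8, 1, 3,
             4, 0, 2,
             7, 6, 5)
    print(priority(board, 3, 0, hamming))    # 5
    print(priority(board, 3, 0, manhattan))  # 10
```

On the sample board, blocks 8, 1, 2, 6, and 5 are out of place, which gives a Hamming value of 5, while their individual distances 3 + 1 + 2 + 2 + 2 add up to a Manhattan value of 10.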

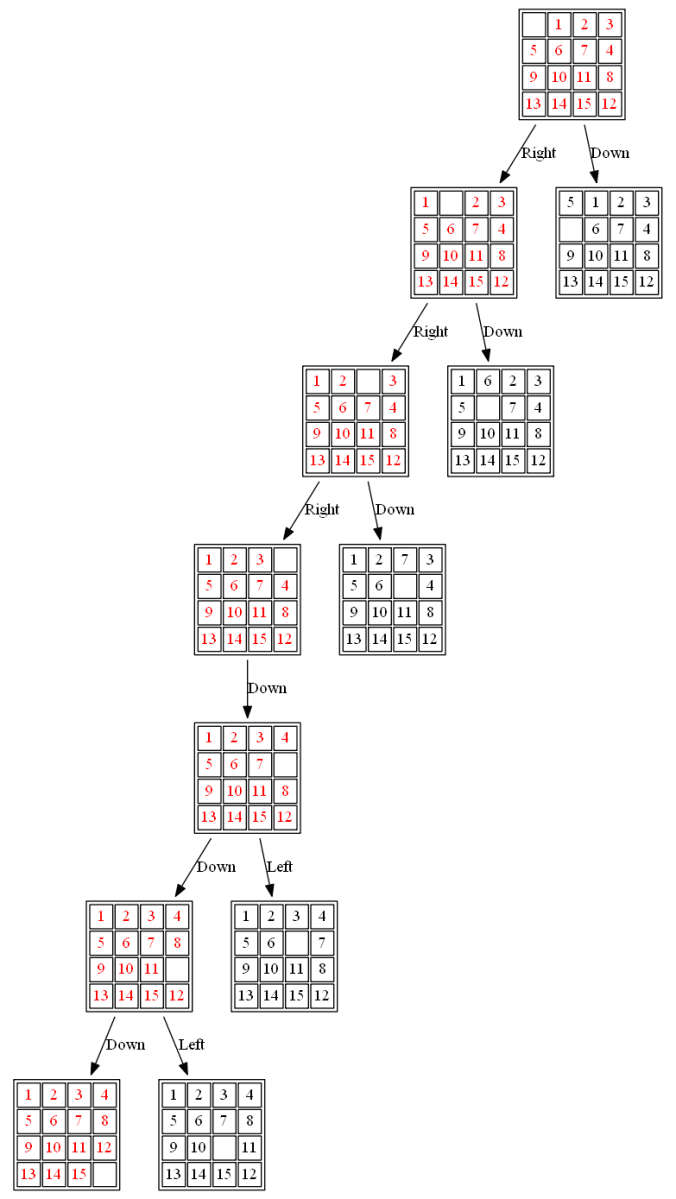

(2) The following 15-puzzle is solvable in 6 steps, as shown below:


EnjoyMathematics

Problem-Solving Approaches in Data Structures and Algorithms

This blog highlights some popular problem-solving strategies for solving problems in DSA. Learning to apply these strategies could be one of the best milestones for the learners in mastering data structure and algorithms.

An Incremental approach using Single and Nested loops

One of the simplest ideas in everyday problem-solving is to build a partial solution step by step using a loop. There are different variations of this idea:

- Input-centric strategy: At each iteration step, we process one input and build the partial solution.

- Output-centric strategy: At each iteration step, we add one output to the solution and build the partial solution.

- Iterative improvement strategy: Here, we start with some easily available approximations of a solution and continuously improve upon it to reach the final solution.

Here are some loop-based approaches: using a single loop and variables, using nested loops and variables, incrementing the loop by a constant (more than 1), using the loop twice (double traversal), using a single loop and a prefix array (or extra memory), etc.

Example problems: Insertion Sort, Finding max and min in an array, Valid mountain array, Find equilibrium index of an array, Dutch national flag problem, Sort an array in a waveform.
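Insertion sort is a convenient illustration of the input-centric strategy with nested loops: at each step one more input element is processed and the sorted prefix (the partial solution) grows by one. The sketch below is one common way to write it; the function name is an arbitrary choice.

```python
def insertion_sort(values):
    """Return a sorted copy of values, growing a sorted prefix one element at a time."""
    result = list(values)
    for i in range(1, len(result)):      # outer loop: process one input per step
        key = result[i]
        j = i - 1
        # Inner loop: shift larger elements of the sorted prefix to the right.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key              # prefix result[0..i] is now sorted
    return result


if __name__ == "__main__":
    print(insertion_sort([5, 2, 4, 6, 1, 3]))  # [1, 2, 3, 4, 5, 6]
```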

Decrease and Conquer Approach

This strategy is based on finding the solution to a given problem via the solution to one of its sub-problems. Such an approach leads naturally to a recursive algorithm, which reduces the problem to a sequence of smaller input sizes until the input becomes small enough to be solved directly, i.e., it reaches the recursion's base case.

Example problems: Euclid's algorithm for finding the GCD, Binary Search, Josephus problem
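Euclid's algorithm shows the pattern compactly: the problem gcd(a, b) is reduced to the single smaller sub-problem gcd(b, a mod b) until the base case b = 0 is reached. A minimal sketch, assuming non-negative integer inputs:

```python
def gcd(a, b):
    """Greatest common divisor of two non-negative integers (Euclid's algorithm)."""
    if b == 0:                 # base case: gcd(a, 0) = a
        return a
    return gcd(b, a % b)       # one smaller sub-problem


if __name__ == "__main__":
    print(gcd(48, 18))  # 6
```

Each call replaces the pair (a, b) with (b, a mod b), and the second argument strictly decreases, so the recursion always terminates.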

Problem-solving using Binary Search

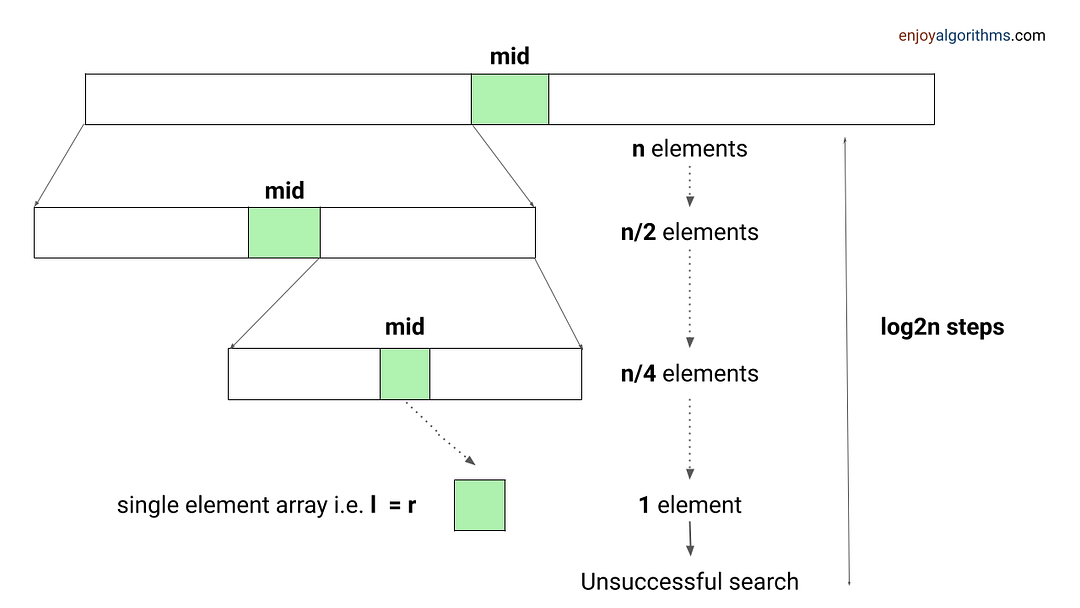

When an array has some order property similar to that of a sorted array, we can use the binary search idea to solve several searching problems efficiently in O(log n) time. To do this, we need to modify the standard binary search algorithm based on the conditions given in the problem. The core idea is simple: calculate the mid-index and continue the search in either the left or the right half of the array.

Example problems: Find Peak Element, Search a sorted 2D matrix, Find the square root of an integer, Search in Rotated Sorted Array
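As an example of adapting binary search to a problem-specific condition, the integer square root can be found by searching the range 0..x for the largest value whose square does not exceed x. This is a sketch under the assumption that the input is a non-negative integer; the function name is illustrative.

```python
def integer_sqrt(x):
    """Largest integer r with r * r <= x, found by binary search over 0..x."""
    if x < 0:
        raise ValueError("x must be non-negative")
    low, high = 0, x
    answer = 0
    while low <= high:
        mid = (low + high) // 2
        if mid * mid <= x:     # mid is feasible: remember it, try the right half
            answer = mid
            low = mid + 1
        else:                  # mid is too large: continue in the left half
            high = mid - 1
    return answer


if __name__ == "__main__":
    print(integer_sqrt(17))  # 4
```

The condition `mid * mid <= x` is true for every value up to the answer and false afterwards, which is the order property that makes halving the search range valid.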

Divide and Conquer Approach

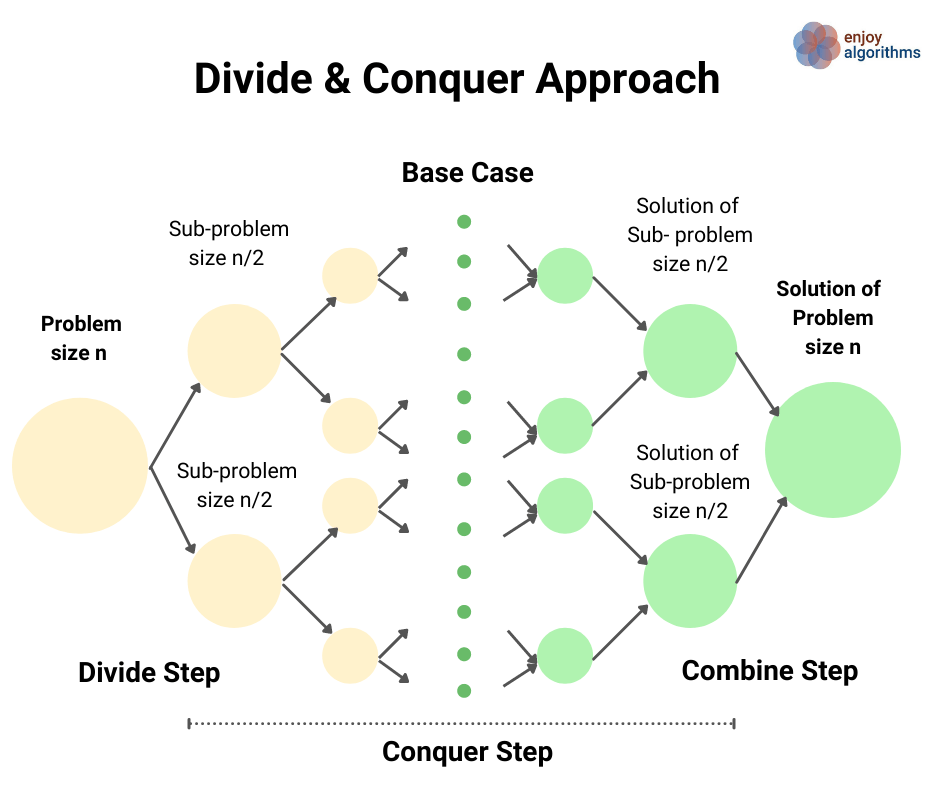

This strategy is about dividing a problem into two or more subproblems, solving each of them, and then, if necessary, combining their solutions to get a solution to the original problem. Many fundamental problems in computer science are solved efficiently using this strategy.

Example problems: Merge Sort, Quick Sort, Median of two sorted arrays
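Merge sort follows the three steps directly: divide the list into two halves, sort each half recursively, and combine the two sorted halves by merging. The following is a minimal sketch that returns a new list rather than sorting in place, which is a design choice made here for clarity.

```python
def merge_sort(values):
    """Return a sorted copy of values using divide and conquer."""
    if len(values) <= 1:                 # base case: already sorted
        return list(values)
    mid = len(values) // 2
    left = merge_sort(values[:mid])      # divide and solve the left half
    right = merge_sort(values[mid:])     # divide and solve the right half

    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])              # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


if __name__ == "__main__":
    print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```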

Two Pointers Approach