User Interviews 101

September 17, 2023

A user interview is a popular UX research method often used in the discovery phase.

User interview: A research method where the interviewer asks participants questions about a topic, listens to their responses, and follows up with further questions to learn more.

The term “user interview” is unique to the UX field. In other areas, like market research or social science, the same method is called an in-depth interview, a semi-structured interview, or a qualitative interview.

In This Article:

- Why Conduct User Interviews

- User Interviews vs. Usability Tests

- How to Do a User Interview

- Can Interviews Be Used with Other Methods?

- Limitations of Interviews

Why Conduct User Interviews

When performed well, user interviews provide in-depth insight into who your users are and into their lives, experiences, and challenges. Learning these things helps teams identify solutions that make users’ lives easier. As a result, user interviews are an excellent tool to use in discovery.

Here are some of the many things you can learn by interviewing your users:

- What users’ experiences were like, what was memorable, and why

- Users’ pain points during an experience

- How users think or feel about a topic, event, or experience

- What users care about

- Users’ mental models

- Users’ motivations, aspirations, and desires

User interviews help teams build empathy for their users. When teams watch interviews, they can put themselves in their users’ shoes.

Data gathered from user interviews can be used to construct various UX artifacts, including:

- User-need statements

- Empathy maps

- Customer-journey maps

Interviews are versatile; they can be used to learn about human experiences or about a customer's experience with one of your existing products. For example, imagine you are working on an app used to track calorie intake. You could interview people about their experiences using the app or about their journey to become healthier (or both).

User Interviews vs. Usability Tests

User interviews are often confused with usability tests. While the two sound similar and are both typically conducted one-on-one, they are very different methods and should be used for different purposes.

How to Do a User Interview

1. Identify What You’d Like to Learn

Think of an interview as a research study, not a sales session or an informal conversation. Like any research study, an interview should have research goals (or research questions). Goals that are too broad (like “learning about users”) can result in interviews that fail to produce useful or actionable insights. Examples of reasonable research goals include:

- What causes customers to consider and try our product?

- What are the highs and lows of their experience?

- How much knowledge of the process do users have?

- What makes users abandon the product?

A concise, concrete set of goals that relate to your target users’ specific behaviors or attitudes can help you gather helpful and actionable insights. These goals will influence the questions you’ll ask in your interviews.

2. Prepare a Guide

An interview guide is used to direct the conversation and help you answer your research goals. An interview guide should contain a few well-designed, open-ended questions that get participants talking and sharing their experiences.

Examples of good open-ended questions include:

- Walk me through a typical day for you.

- Tell me about the last time you [did something].

- Tell me about a time when you [had a particular experience].

Jog the memory by asking about specific events rather than about general processes. Remembering a particular incident will nudge the user’s memory and enable them to give you meaningful details.

An interview will also contain followup questions to gather more-detailed information. When constructing the guide, these questions are often nested underneath the main questions. Construct followup questions based on your research goals. Anticipating different responses can help you identify what followup questions to ask. Examples include:

- When did this happen?

- How long did it take you?

- Has this happened to you before?

- How did you feel during this experience?

An interview guide can be used flexibly: interviewers don’t need to move through questions linearly. They can skip questions, spend longer on some questions, or ask questions not in the guide. (See our interview-guide article for an example guide.)

3. Pilot Your Guide

Even the best guide may need to be tweaked after the first interview. Piloting allows you to identify what tweaks are needed before running all your interviews. You can pilot your guide with a friend or colleague if the interview topic isn’t too specialized. Or, you can recruit a target user (or two).

Piloting helps you learn:

- Whether any questions were misunderstood or caused confusion

- If there are questions that should be added to the guide

- If any questions should be removed because they aren’t likely to provide helpful information

- Whether the order of the questions felt natural

Since interviews are a qualitative research method, it’s okay to continue making minor tweaks to your guide as you complete your interviews. However, avoid changing your research goals partway through the study: if the goals keep shifting, you may not collect enough relevant data to answer any of them.

4. Start Easy

Some participants can feel nervous at the beginning of the interview, especially if they’re not sure what to expect. Start by talking through the purpose of the interview, what kinds of questions will be asked, and how the information will be used. Slow down your pace of speech. Talking slowly has a calming effect and indicates that you are not anxious and have time to listen.

Start with questions that are easy to answer, such as “Tell me a bit about yourself” or “What do you like to do in your spare time?” These questions can get participants comfortable talking. Avoid asking questions likely to be interpreted as personal or judgmental, such as “What was the last book you read?” This question assumes the participant read a book recently; if they didn’t, they might feel stupid.

5. Build Rapport

People are more likely to remember, talk, and let their guard down if they feel relaxed and trust the interviewer and the process. Keep in mind that there’s a big difference between rapport and friendship. The user does not have to like you, think you’re funny, or want to invite you out for a cup of coffee in order to trust you enough to be interviewed.

Build rapport by showing you’re listening and by asking related questions. You can show you’re listening by using verbal and nonverbal cues. Verbal cues include:

- Neutral acknowledgments, like “I understand,” “okay,” or “I see”

- Making noises like “mmmh”

- Echoing what the participant has said

- Adjusting the speed, volume, and intonation of your questions

Nonverbal cues include:

- Frequent eye contact

- Raising eyebrows occasionally

- Smiling when the participant smiles (also known as mirroring)

Avoid interrupting or rushing participants, as these behaviors will harm your ability to build rapport.

6. Follow Up and Probe

Ask your prepared followup questions if the participant did not cover them when sharing their experiences. Additionally, ask further questions that probe into your participant’s responses. These questions help you to uncover those important motivations, mental models, perceptions, and attitudes.

Probing questions include:

- Tell me more about that.

- Can you expand on that?

- What do you think about that?

- How do you feel about that?

- Why is that important to you?

Since you won’t know when you might use them, probing questions are not usually prepared in advance. However, if you know you might forget to use them, write them at the top of your guide or on an index card.

Can Interviews Be Used with Other Methods?

Yes. You can mix interviews with other methods, such as:

- With a usability test. You might want to learn about the participant before giving them tasks on a design. Your session might begin with a short interview before transitioning to the test. When usability tests and interviews are combined, sessions are usually longer (for example, 90 minutes rather than 60 minutes). If you’re combining these methods, the questions you ask in the interview shouldn’t prime users to pay more attention to certain things in the design.

- With a field study or some kind of observational study. You could perform an interview before or after observing a study participant work on their tasks. An interview before an observational study can give you context into what you will observe. An interview after a field study allows you to follow up on interesting things you observed.

- With a diary study. In longer diary studies, it may be beneficial to have an initial interview before the logging period to learn about the participant and prepare them for the diary study. After the logging period ends, a wrapup interview is often conducted to learn more about the participant’s diary entries.

Limitations of Interviews

Since interviews are an attitudinal method, they collect reported behaviors (rather than observed behavior). Some limitations of self-reported data include:

- Poor or faulty recollection: Human memory is flawed, so people don’t recall events fully. If the event in question occurred in the distant past, your participants might not recall it accurately.

- Missing details: Participants don’t know precisely what is relevant to the interviewer, so they sometimes leave out important details.

- Social-desirability bias: Some people are very conscious of how they’re perceived and may withhold information or present themselves and their behaviors in a certain light.

If we want to know what users actually do, we need to observe them or collect data about their behavior (such as through analytics and other behavioral metrics).

Another limitation of interviews is that the quality of the data collected is very much dependent on the interviewer's skill. If the interviewer asks many leading questions, the validity of the data will be compromised.

Interviews are a popular method to learn about users: what they think, do, and even need. Treat user interviews like a research study, not an informal chat. Compose research goals before crafting a guide. During your interviews, be careful not to lead participants, and make sure to follow up with further questions. Finally, complement interviews with observation-based research to attain a more accurate and richer picture of users' experiences.

How to conduct effective user interviews for UX research

User interviews are a popular UX research technique, providing valuable insight into how your users think and feel. Learn about the different types of user interviews and how to conduct your own in this guide.

User research is fundamental for good UX. It helps you get to know your users and design products that meet their needs and solve their pain-points.

One of the most popular UX research methods is user interviews. With this technique, you get to hear from your users first-hand, learning about their needs, goals, expectations, and frustrations—anything they think and feel in relation to the problem space.

But when should you conduct user interviews and how do you make sure they yield valuable results?

Follow this guide and you’ll be a user interview pro. We explain:

- What are user interviews in UX research?

- What are the different types of user interviews?

- When should you conduct user interviews?

- What data and insights do you get from user interviews?

- How to conduct effective user interviews for UX research: A step-by-step guide

- What happens next? How to analyse your user interview data

First things first: What are user interviews?

Interviews are one of the most popular UX research methods. They provide valuable insight into how your users think, feel, and talk about a particular topic or scenario—allowing you to paint a rich and detailed picture of their needs and goals.

User interviews typically take place on a one-to-one basis, with a UX designer or UX researcher asking the user questions and recording their answers. They can last anywhere between 30 minutes and an hour, and they can be done at various stages of a UX design project.

What are the different types of user interviews?

There are several different types of user interviews. They can be:

- Structured, semi-structured, or unstructured

- Generative, contextual, or continuous

- Remote or in-person

Let’s explore these in more detail.

Structured vs. semi-structured vs. unstructured user interviews

Structured interviews follow a set list of questions in a set order. The questions are usually closed—i.e. there’s a limit to how participants can respond (e.g. “Yes” or “No”). Structured interviews ensure that all research participants get exactly the same questions, and are most appropriate when you already have a good understanding of the topic/area you’re researching.

Structured interviews also make it easier to compare the data gathered from each interview. However, a disadvantage is that they are rather restrictive; they don’t invite much elaboration or nuance.

Semi-structured interviews are based on an interview guide rather than a full script, providing some pre-written questions. These tend to be open-ended questions, allowing the user to answer freely. The interviewer will then ask follow-up questions to gain a deeper understanding of the user’s answers. Semi-structured interviews are great for eliciting rich user insights—but, without a set script of questions, there’s a high risk of researcher bias (for example, asking questions that unintentionally lead the participant in a certain direction).

Unstructured user interviews are completely unscripted. It’s up to the interviewer to come up with questions on the spot, based on the user’s previous answers. These are some of the trickiest types of user interviews—you’re under pressure to think fast while avoiding questions that might bias the user’s answer. Still, if done well, unstructured interviews are great if you have very little knowledge or data about the domain and want to explore it openly.

Generative vs. contextual vs. continuous user interviews

Generative user interviews are ideal for early-stage exploration and discovery. They help you to uncover what you don’t know—in other words, what insights are you missing? What user problem should you be trying to solve? Which areas and topics can you identify for further user research? Generative interviews are usually unstructured or semi-structured.

Contextual user interviews take place in a specific context—while the user is carrying out a certain task, for example. This allows you to not only observe the user’s actions/behaviour first-hand, but also to ask questions and learn more about why the user takes certain actions and how they feel in the process. Contextual interviews tend to be semi-structured.

Continuous user interviews are conducted as part of continuous UX research. While traditional user research is done within the scope of a specific project, continuous UX research is ongoing, conducted at regular intervals (e.g. weekly or monthly) with the goal of continuous product improvement. Continuous interviews are like regular check-ins with your users, giving you ongoing insight into their needs, goals, and pain-points.

Remote vs. in-person user interviews

A final distinction to make is between remote and in-person interviews.

In-person user interviews take place with the user and researcher in the same room. A big advantage of in-person interviews is that you’re privy to the user’s body language—an additional insight into how they feel.

Remote user interviews take place via video call. Like any kind of remote work, they’re more flexible and may be more accessible for research participants as they don’t require any travel.

When should you conduct user interviews?

User interviews provide value at various stages of a design project. You can use them for:

- Discovery and ideation: when you want to learn more about your target users and the problems they need you to solve.

- UX testing and product improvement: when you want to get user feedback on an existing design concept or solution.

- Continuous UX research: you can run regular interviews as part of a continuous UX research framework.

Let’s take a closer look.

User interviews for discovery and ideation

User interviews can be useful right at the beginning of a UX project, when you don’t know much (or anything) about the domain and don’t yet have a design direction. At this stage, everything is pretty open and your user interviews will be exploratory.

Conducting user interviews early in the process will help you to answer questions such as “Who are our target users?”, “What problems do they need us to solve?” and “What are their goals and expectations in relation to the problem space?”

Here you’ll be focusing on generative user interviews (i.e. finding out what you don’t know), and they’ll likely be unstructured or semi-structured.

User interviews as part of UX testing and product improvement

User interviews also come in handy when you have an idea or concept you want to evaluate, or even a working product you want to test.

At this stage, you might present the user with a prototype and ask them questions about it. If you’re further along in the design process, you can run user interviews as an add-on to UX testing, having the user interact with a working prototype (or the product itself) while asking them questions. These are the contextual interviews we described earlier.

Conducting user interviews at this stage will help you gain insight into how your users feel about a concept/product/experience and to identify pain-points or usability issues within the existing design.

User interviews as part of continuous UX research

User interviews are also valuable as part of a continuous UX research framework. Here, there is no project-specific goal—rather, you’re interviewing users regularly to gain ongoing user insights. This enables you to maintain a user-centric design process and to evolve your product continuously as you learn more about your users.

What data and insights do you get from user interviews?

User interviews allow you to hear from the user, in their own words, how they think and feel about a particular problem space/experience/task. This provides rich insights into their thoughts, beliefs, experiences, problems, goals, desires, motivations, and expectations, as well as the rationale or thought process behind certain actions.

As such, user interviews generate qualitative data: data that tells you about a person’s thoughts, feelings, and subjective experiences. This contrasts with quantitative data, which is numerical and measurable.

Note that user interviews generate self-reported data. Self-reported data is based on what the user chooses to share with you (you’re not observing it; rather, you’re hearing it from the user). It reflects how they report feeling or thinking.

If you conduct contextual user interviews, you’ll gather a mixture of observational data (based on what you observe the user doing) and self-reported data.

After conducting user interviews, you’ll end up with lots of data in the form of interview transcripts, audio or video recordings, and your own notes. We’ll look at how to analyse your user interview data in the final section of this guide.

First, though, here’s a step-by-step plan you can follow to conduct effective user interviews.

How to conduct effective user interviews for UX research: A step-by-step guide

Ready to conduct your own user interviews? Follow our step-by-step guide to get started.

- Determine what type of user interviews you’ll conduct

- Write your user interview script (or guide)

- Recruit your interview participants

- Set up the necessary tools

- Perfect your interview technique

Let’s walk through our plan step by step.

1. Determine what type of user interviews you’ll conduct

Earlier in this guide, we outlined the different types of user interviews: Structured, semi-structured, and unstructured; generative, contextual, and continuous; and remote and in-person.

The first step is to determine what format your user interviews will take. This depends on:

- What stage you’re at in the project/process

- What your research goals are

If you’re at the very early stages of a design project, you’ll likely want to keep your user interviews open and exploratory—opting for unstructured or semi-structured interviews.

Perhaps you’ve already got a design underway and want to interview your users as they interact with it. In that case, structured or semi-structured contextual interviews may work best.

Consider what you want to learn from your user interviews and go from there.

2. Write your user interview script (or guide)

How you approach this step will depend on whether you’re conducting structured, semi-structured, or unstructured user interviews.

For structured interviews, you’ll need to write a full interview script—paying attention to the order of the questions. The script should also incorporate follow-up questions; you won’t have the freedom to improvise or ask additional questions outside of your script, so make sure you’re covering all possible ground.

For semi-structured interviews, you’ll write an interview guide rather than a rigid script. Come up with a set list of questions you definitely want to ask and use these—and your users’ answers—as a springboard for follow-up questions during the interview itself.

For unstructured user interviews, you can go in without a script. However, it’s useful to at least brainstorm some questions you might ask to get the interview started.

Regardless of whether you’re conducting structured, semi-structured, or unstructured interviews, it’s essential that your questions are:

- Open-ended. These are questions that cannot be answered with a simple “yes” or “no”. They require more elaboration from the user, providing you with much more insightful answers. An example of an open question could be “Can you tell me about your experience of using mobile apps to book train tickets?” versus a closed question such as “Have you ever used a mobile app to book train tickets?”

- Unbiased and non-leading. You want to be very careful about how you word your questions. It’s important that you don’t unintentionally lead the user or bias their answer in any way. For example, if you ask “How often do you practise app-based meditation?”, you’re assuming that the user practises meditation at all. A better question would be “What are your thoughts on app-based meditation?”

It’s worth having someone else check your questions before you use them in a user interview. This will help you to remove any unintentionally biased or leading questions which may compromise the quality of your research data.

3. Recruit your interview participants

Your user interviews should involve people who represent your target users. This might be existing customers and/or representative users who fit the persona you would be designing for.

Some common methods for recruiting user research participants include:

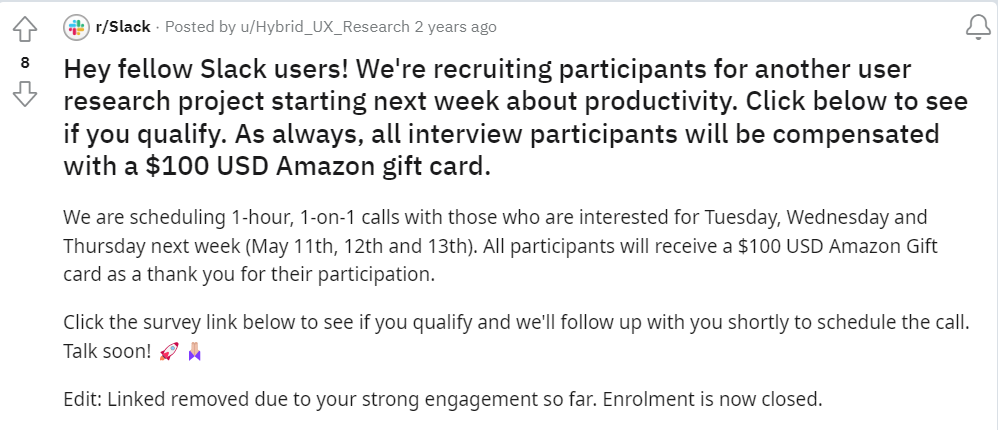

- Posting on social media

- Working with a dedicated agency or platform which will connect you with suitable participants

- Recruiting from your own customer or user database

The good thing about user interviews is that you don’t need loads of participants to gather valuable data. Focus on quality over quantity, recruiting between five and ten interviewees who closely match your target group.

4. Set up the necessary tools

Now for the practical matter of getting your user interviews underway. If you’re conducting in-person user interviews, you’ll need to choose an appropriate setting—ideally somewhere quiet and neutral where the user will feel relaxed.

For remote user interviews, you’ll need to set up the necessary software, such as Zoom, dscout, or Lookback.

You’ll also need to consider how you’re going to record the user’s answers. Will you use good old-fashioned pen and paper, a simple note-taking app, or recording and transcription software?

Make a list of all the tools you’ll need for a seamless user interview and get everything set up in advance.

5. Perfect your interview technique

As the interviewer, you have an important role to play in ensuring the success of your user interviews. So what makes a good interviewer? Here are some tips to help you perfect your interview technique:

- Practise active listening. Show the user that you’re listening to them: maintain eye contact (try not to be too distracted by taking notes), let them speak without rushing, and don’t give any verbal or non-verbal cues that you’re judging their responses.

- Get comfortable with silence. In everyday conversations, it can be tempting to fill silences. But in an interview situation, it’s important to lean into the power of the pause. Let the user think and speak when they’re ready; this is usually when you elicit the most interesting insights.

- Speak the user’s language. Communication is everything in user interviews. Don’t alienate the user by speaking “UX speak”. They may not be familiar with industry-specific terms, and jargon can add unnecessary friction to the experience. Keep it simple, conversational, and accessible.

Ultimately, the key is to put your users at ease and create a space where they can talk openly and honestly. Perfect your interview technique and you’ll find it much easier to build a rapport with your research participants and uncover valuable, candid insights.

What happens next? How to analyse your user interview data

You’ve conducted your user interviews. Now you’re left with lots of unstructured, unorganised qualitative data—i.e. reams of notes. So how do you turn all those interview answers into useful, actionable insights?

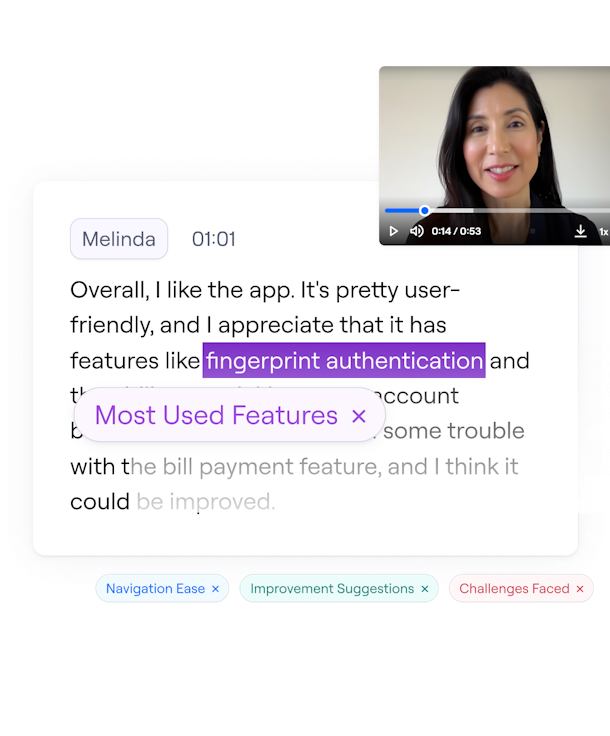

The most common technique for analysing qualitative data is thematic analysis. This is where you read through all the data you’ve gathered (in this case, your notes and transcripts) and use ‘codes’ to denote different patterns that emerge across the dataset.

You’ll then ‘code’ different excerpts within your interview notes and transcripts, eventually sorting the coded data into a set of overarching themes.

At this stage, you can create an affinity diagram: write all relevant findings and data points onto Post-it notes and ‘map’ them into topic clusters on a board. This is a great technique for physically working through your data and creating a visualisation of your themes, allowing you to step back and spot important patterns.
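Coding and theming are interpretive work the researcher does by reading the data, but the bookkeeping behind them can be sketched in code. The minimal Python example below assumes the excerpts have already been coded by hand; the participant IDs, excerpts, code names, theme names, and the `group_by_theme` helper are all hypothetical. It sorts coded excerpts under themes and counts how many distinct participants raised each theme, which helps separate patterns that recur across interviews from a point made by a single person.

```python
from collections import defaultdict

# Hypothetical coded excerpts: (participant ID, excerpt, codes assigned by the researcher)
excerpts = [
    ("P1", "I gave up when the form asked for my ID again.", ["repeated input", "abandonment"]),
    ("P2", "I never know if my booking went through.", ["missing feedback"]),
    ("P3", "Typing my card details twice was annoying.", ["repeated input"]),
    ("P3", "I closed the app and called instead.", ["abandonment"]),
]

# Hypothetical mapping from low-level codes to overarching themes
code_to_theme = {
    "repeated input": "Unnecessary effort",
    "missing feedback": "Uncertainty about status",
    "abandonment": "Drop-off points",
}


def group_by_theme(excerpts, code_to_theme):
    """Group coded excerpts under themes and track which participants raised each theme."""
    themes = defaultdict(lambda: {"excerpts": [], "participants": set()})
    for participant, text, codes in excerpts:
        # Map each code to its theme (unmapped codes go to "Uncategorized"),
        # de-duplicating so an excerpt is filed only once per theme.
        for theme in dict.fromkeys(code_to_theme.get(c, "Uncategorized") for c in codes):
            themes[theme]["excerpts"].append((participant, text))
            themes[theme]["participants"].add(participant)
    return dict(themes)


themes = group_by_theme(excerpts, code_to_theme)

# List themes by how many distinct participants raised them, most widespread first
for theme, data in sorted(themes.items(), key=lambda kv: len(kv[1]["participants"]), reverse=True):
    print(f"{theme}: {len(data['participants'])} participant(s)")
    for participant, text in data["excerpts"]:
        print(f"  {participant}: {text}")
```

Counting distinct participants rather than raw mentions is a deliberate choice here: one talkative participant could otherwise make a theme look more widespread than it is. Treat the counts as a prompt for interpretation, not as quantitative findings.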

With your research data organised and categorised, you can review your findings in relation to your original research objectives. What do the themes and patterns tell you? What actions can you take from your findings? What gaps still need to be filled with further UX research?

As a final step, you might write up a UX research report and present your findings to relevant stakeholders.

We hope you now have a clear understanding of what user interviews are, why they’re such a valuable UX research method, and how to conduct your own user interviews.

5 November 2024


User Interviews

What Are User Interviews?

User interviews are a qualitative research method where researchers engage in a dialogue with participants to understand their mental models, motivations, pain points, and latent needs.

“To find ideas, find problems, to find problems, talk to people.” – Julie Zhou, former VP, Product Design at Facebook, author of The Making of a Manager

Research is the initial step in the design process. It helps you understand what your users feel, want, and appreciate. It also helps you gain insights for future designs and identify the pain points in the current solution. User interviews help you structure your design process and deliver optimized solutions that resonate with users.

User Interviews: What and How?

Design teams typically perform user interviews with the potential users of a design as part of the empathize phase of the design thinking process. User interviews follow a structured methodology whereby the interviewer prepares several topics to cover, records what is said, and systematically analyzes the conversation after the interview.

User interviews are one of the most commonly used methods in user research. They can cover almost all user-related topics and be used, for example, to gather information on users’ feelings, motivations, daily routines, or how they use various products.

The interviews often follow the same methodology as qualitative interviews in other fields but with the specific purpose of informing a design project. Because user interviews typically have to fit into a design or development process, practical concerns such as limited time or resources often play a role when deciding how to conduct such interviews. For instance, user interviews can be conducted over a video or voice call if time is restricted. On the other hand, in projects with sufficient time and resources, researchers can perform the interview in the user’s home, and designers might even be flown overseas if the users reside in another country.

While many interview methods used in design projects are borrowed from other fields, such as ethnography and psychology, some have been created specifically for use in design contexts. One example is the contextual interview, conducted in the participant’s everyday environment. Contextual interviews can provide more insight into the environment in which a design will be used. As such, a contextual interview might uncover flaws in a product’s design (e.g., the product is too heavy for the user to carry around the house) that a typical user interview might not.

User interviews can be used in different stages of product development, from discovery to usability testing. Conducting a user interview is simply a question of choosing the right user or users to interview, asking them pre-determined questions (or free-form questions if used following an observation), and then reporting on their answers to enable further decision-making. Ann Blandford, Professor of Human-Computer Interaction at University College London, emphasizes the need to keep an open mind when approaching a user interview.


8 Types of User Interviews

There are eight types of user interviews, including structured, unstructured, contextual, and group interviews.

© Interaction Design Foundation, CC BY-SA 4.0

User interviews are a versatile design research tool and a rich source of insights that shape user-centric solutions. Depending on your goals and who the participants are, user interviews can be classified into eight categories.

1. Structured Interviews

Structured interviews follow a carefully planned, fixed set of questions, providing a systematic approach to gathering information. Their rigidity ensures a standardized process, which makes it easy to compare participants. Designers opt for structured interviews when seeking specific, targeted information, and because every participant answers the same questions, this format is well suited to collecting quantitative data in an organized way.

2. Unstructured Interviews

In contrast, unstructured interviews embrace a more open-ended and flexible approach. Participants are encouraged to express themselves freely, leading to a qualitative exploration of their thoughts and experiences. This type is favored when the goal is to uncover niche insights that may not emerge through predefined questions. This allows for a deeper understanding of user perspectives and motivations.

3. Semi-Structured Interviews

Semi-structured interviews balance the rigidity of structured interviews and the flexibility of unstructured ones. Designers prepare a set of predetermined questions but allow room for participants to elaborate on their responses. This format combines the benefits of both worlds, offering depth and consistency while accommodating the richness of qualitative data.

Here’s Ann Blandford with more on semi-structured interviews and how they differ from structured and unstructured ones.

4. Contextual Interviews

Contextual interviews unfold in the user’s natural environment, providing a unique perspective on how they interact with products in their day-to-day lives. This type ventures beyond the controlled setting, uncovering insights that might be missed in a traditional interview setup. Observing users in their context allows designers to identify specific pain points, preferences, and behaviors that influence the user experience in a real-world scenario.

5. Expert Interviews

Expert interviews involve engaging with individuals possessing specialized knowledge or experience relevant to the design context. These individuals could be industry experts or professionals with specific domain expertise. Their insights contribute a layer of knowledge to the design process. These interviews help refine solutions with experienced perspectives that are not apparent through user interviews alone.

6. Remote Interviews

In the era of digital connectivity, remote interviews overcome geographical constraints. They leverage technology to facilitate conversations between designers and participants. This type is particularly convenient when engaging with users located in different regions. Remote interviews ensure accessibility and flexibility, allowing designers to gather insights without the limitations of physical proximity.

7. Group Interviews

Group interviews involve the simultaneous participation of multiple individuals, fostering dynamic interactions. This format encourages participants to build on each other's responses, unveiling shared experiences and diverse perspectives within the group. Group interviews are beneficial when exploring collective opinions and group dynamics or seeking insights into how individuals influence each other's perspectives.

8. Stakeholder Interviews

Stakeholder interviews extend beyond end users to include individuals with a vested interest in the project's success. These could be internal stakeholders, decision-makers, or individuals representing different organizational departments. Engaging with stakeholders ensures alignment between design goals and broader organizational objectives. This also helps foster a holistic approach that considers the overall impact of the design solution.

Understanding the applications of these eight types of user interviews empowers designers to strategically choose the most fitting approach based on project goals and the specific information sought. Each type brings a unique flavor to the user research process and contributes to creating designs that resonate with user needs and expectations.

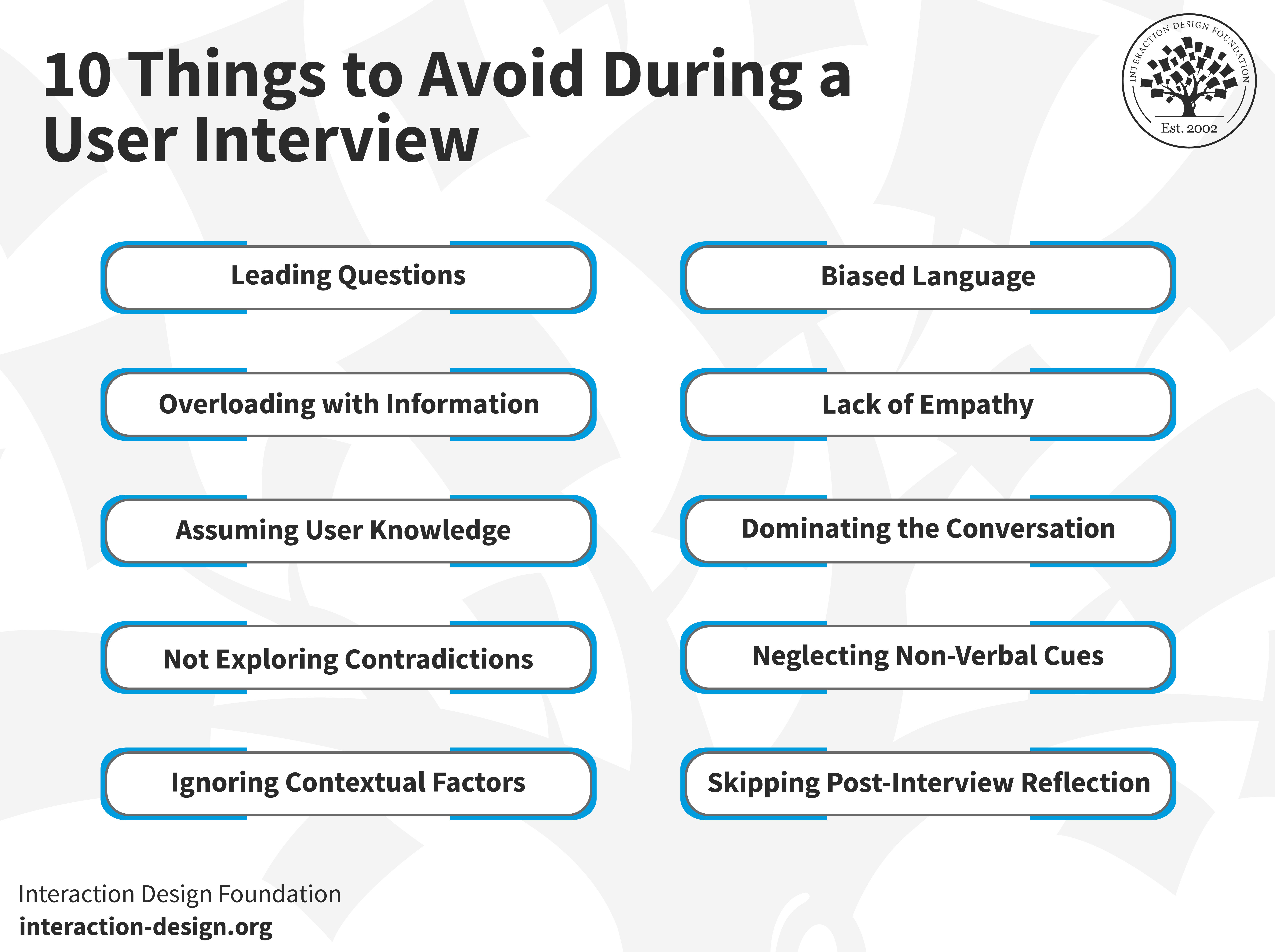

Common Mistakes to Avoid During a User Interview

Practices such as asking leading questions, using biased language, and showing a lack of empathy should be avoided during a user interview.

User interviews are powerful for extracting meaningful insights, but their success depends on careful planning and execution. To ensure a fruitful user research process, designers must navigate potential hindrances that could compromise the authenticity and depth of the gathered information.

1. Leading Questions

Avoid steering participants toward specific responses with leading questions. Instead, try to craft questions that are neutral and open-ended. This encourages genuine and unbiased insights. Leading questions can unknowingly influence participants, compromising the integrity of the data collected.

2. Biased Language

Be vigilant about using language that may introduce bias into the interview. Phrasing questions in a way that favors a particular response can distort the authenticity of participants' answers. Designers should aim for neutrality and clarity to ensure participants feel comfortable expressing their thoughts.

3. Overloading with Information

Resist the temptation to overwhelm participants with excessive information before or during the interview. Providing too much context leads participants to tailor their responses based on what they think the interviewer wants to hear. In contrast, user interviews aim to make participants express their natural thoughts and experiences.

4. Lack of Empathy

Build rapport and create a comfortable environment in a user interview. Refrain from rushing through questions without allowing participants the space to share their thoughts. Acknowledge their experiences and be genuinely interested in their perspective to foster open communication. Ann Blandford offers practical tips on how to build rapport with interviewees through the structure of questions.

5. Assuming User Knowledge

Steer clear of assumptions about the user's knowledge of or familiarity with the product or topic. Key pointers include clarifying terms, avoiding industry jargon, and ensuring that participants fully understand the context. This prevents misunderstandings that could affect the accuracy of their feedback.

6. Dominating the Conversation

It is vital to balance guiding the conversation and allowing participants to express themselves freely. Avoid dominating the dialogue or interrupting excessively. You should create an environment where participants feel valued, listened to, and are encouraged to share their experiences without interruption.

7. Not Exploring Contradictions

Explore contradictions or inconsistencies in participants' responses with appropriate sensitivity. There may be occasions where people contradict their statements. These variations can offer valuable insights into the complexity of user experiences. However, when you question them on such discrepancies, they might get defensive or uncomfortable. Approach these contradictions carefully and delve deeper to uncover the nuances that might not be immediately apparent.

8. Neglecting Non-Verbal Cues

User interviews extend beyond verbal communication. Pay attention to non-verbal cues such as body language, facial expressions, and tone of voice. Neglecting these cues may result in overlooking subtle yet significant indicators of a participant's sentiments or attitudes.

9. Ignoring Contextual Factors

Avoid separating user insights from their broader context. Consider external factors influencing participants' responses, such as cultural differences or situational circumstances. It is crucial to account for these factors to avoid misinterpretations and incomplete understandings of the user experience .

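10. Skipping Post-Interview Reflection

After each interview, set aside time to review your notes and reflect on what you heard while the conversation is still fresh.

By avoiding these pitfalls, designers can raise the quality of their user interviews and ensure the data collected is authentic, unbiased, and representative of users' experiences.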

User Interview Alternatives

While user interviews are a go-to method in user research, time or budgetary constraints may make it difficult to conduct interviews. Here are some other research methods to consider under such circumstances.


Methods such as focus groups and usability tests can be used as alternatives to user interviews.

1. Focus Groups

Focus groups offer a dynamic alternative to one-on-one interviews. Bringing together a small group of participants fosters group dynamics, allowing researchers to observe interactions and gather collective insights. This method is particularly effective for exploring diverse perspectives, uncovering shared experiences, and understanding group dynamics that might not surface in individual interviews. Remember that your designs will be used by many people who differ from one another.

2. Usability Testing and Prototypes

Usability testing involves evaluating the effectiveness of a product's interface through real-time user interaction. This alternative method employs prototypes or actual product versions, allowing researchers to observe users navigating the system. Usability testing provides insights into user interactions, pain points, and preferences in a controlled environment. By incorporating prototypes, designers can assess the functionality of specific features, ensuring a user-friendly design. This method is especially valuable for refining the user experience iteratively, based on direct user feedback, ultimately leading to more robust and user-centric design solutions.

3. In-Person Observation

In-person observation involves directly witnessing users' behaviors and actions in their natural environment. By immersing researchers in the users' context, this method unveils nuances that may be missed in a controlled setting. The in-person approach provides a holistic understanding of how users integrate products or services into their daily lives. Designers should carry out the observation without influencing the user’s behavior.

4. Market Research

Market research extends the scope beyond individual user experiences to broader market trends and preferences. This alternative leverages quantitative data, surveys, and statistical analysis to uncover patterns at a larger scale. Market research complements user interviews by providing a macro-level understanding that informs strategic decisions and market positioning.

Discovery research focuses on the initial exploration of a problem space or a new product idea. It involves gathering insights from various sources, including user interviews, surveys, and secondary research. By combining diverse methods, discovery research lays the foundation for understanding the landscape before diving into more targeted investigations.

Rather than relying solely on one method, integrate various user research methods to understand user needs comprehensively. You can get insights from different angles by combining interviews, usability testing, and surveys. This eventually results in more informed and user-centric design decisions.

Learn More about User Interviews

Take the IxDF course User Research – Methods and Best Practices to learn more about interviews and other qualitative research methods.

For a concise overview of user interviews, watch How To Conduct Effective User Interviews, a Master Class webinar with Joshua Seiden, Co-Author of Lean UX and Founder of Seiden Consulting.

User interviews for UX research: Refer to this article to learn how user interviews are incorporated into UX research.

User Interviews 101: Learn about the do’s and don’ts of user interviews.

To learn how to make sense of all the qualitative data, see How to Do a Thematic Analysis of User Interviews.

Questions related to User Interviews

Successful research interviews hinge on a systematic approach. The basic steps include defining clear research objectives, selecting appropriate participants, crafting well-structured and open-ended questions, ensuring a comfortable environment, actively listening to participants, documenting findings accurately, and conducting thoughtful follow-up analysis. Together, these steps foster genuine engagement, extract meaningful insights, and lay the groundwork for informed design decisions. Designers should also carefully select the research methodology that best suits their objectives, and choosing the right sample groups to interview is vital for keeping the research in context.

Watch this video to learn about the interview analysis process.

A user interview focus framework is a practical plan that ensures we create things people genuinely need. It aligns with the idea that people don't just buy products; they buy solutions to their problems. This framework consists of five straightforward steps: find the right people looking for a solution, share your idea with them, check if they're willing to pay, make sure your solution works, and, if successful, grow and automate. It's a smart way to use user interviews to build things that truly solve real problems.

Conducting user interviews involves a systematic approach. Begin by defining clear research objectives and identifying the target audience. Craft open-ended questions to encourage participants to share their experiences openly. The next step is to choose a suitable interview format. Depending on the research goals, it could be structured, unstructured, or semi-structured. Ensure a comfortable environment for participants, whether in-person or remote. Listen to responses, ask follow-up questions for depth, and document findings meticulously. Post-interview, analyze the data, identify patterns and iterate the design process based on the insights gathered. By following this structured approach, you can conduct an insightful user interview.

The number of user interviews needed depends on the research goals and the project’s complexity. While there's no one-size-fits-all answer, a common rule of thumb is to conduct at least five to eight interviews per user segment. This typically uncovers recurring patterns and provides a solid foundation for decision-making. However, the ideal number may vary based on the project's scope, the diversity of the user base, and the level of detail required. Iterative testing and continuous feedback loops may prompt additional interviews as the design evolves. If you continuously upgrade your designs based on your research insights, consider conducting another round of interviews after every significant update.
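As a worked example of this rule of thumb: with three user segments, five to eight interviews per segment means budgeting roughly 15 to 24 interviews in total. The helper below is a hypothetical sketch that simply performs that multiplication (the segment names are invented for illustration); treat the result as a starting estimate rather than a fixed quota, since the ideal number depends on the factors above.

```python
def interview_range(segments, low=5, high=8):
    """Return (minimum, maximum) interview counts for a list of user segments.

    Defaults follow the five-to-eight-per-segment rule of thumb; adjust them
    to suit the project's scope and the diversity of the user base.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    count = len(segments)
    return count * low, count * high


# Hypothetical segments, for illustration only
segments = ["first-time users", "power users", "lapsed users"]
print(interview_range(segments))  # (15, 24)
```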

User interviews are generally safe when conducted ethically and with the well-being of participants in mind. Researchers should prioritize informed consent, clearly communicate the purpose of the interview, and ensure participants' anonymity when necessary. Protect sensitive information and adhere to data privacy regulations. Remote interviews should be conducted on secure platforms, and any incentives offered should be reasonable and ethical. By following ethical guidelines, user interviews can provide valuable insights while respecting participants' rights and privacy. Questions asked in the interviews should be carefully curated to avoid hurting any participant’s personal, emotional, or cultural sentiments. After considering the above factors, user interviews can be safely used for design research.

User interviews offer a personalized and in-depth understanding of user experiences, allowing designers to uncover insights beyond quantitative data. They facilitate empathy by connecting directly with users, leading to more human-centered designs. The qualitative nature of user interviews is invaluable for exploring motivations, pain points, and emotions. Additionally, their adaptability (they work in person or remotely) and their ability to uncover contextual insights make them a versatile tool for various stages and types of design research. Choosing user interviews empowers designers to create solutions that authentically resonate with user needs.




Unpacking User Interviews: Turning Conversations into Insights


How to conduct user interviews: Step by step + best practices

It’s time to sit down and listen up to what users have to say about your product.

Now that you’ve made the decision to run user interviews, there’s likely one big question on your mind: how can you conduct user interviews in a way that guarantees the in-depth insights you need?

While interviews may seem like sitting down for a chat, there’s more to this UX research method than meets the eye. Pushing beyond a simple conversation can garner you rich, descriptive feedback to inform practical UX decisions.

In this chapter, we’ll share how to conduct user interviews in six key steps, so you make the most of your users’ time and responses. We’ll also cover some industry best practices for eliciting the most valuable, actionable responses from your participants.


How to conduct user interviews: step-by-step

Interviewing users is a lot like ice skating—it looks easy, but requires careful planning, practice, and balance.

From recruiting the right participants, preparing interview questions, navigating the conversation, and, perhaps most importantly, analyzing the responses—conducting interviews is a detailed process.

We’ve outlined six key steps for structuring user interviews so you get the insights you need.

1. Set your goals

Start by defining what you want to achieve from this research process. Are you launching a product and want to understand user expectations? Are you validating a new functionality or feature for your product? Are you collecting customer feedback to optimize the user onboarding experience?

These research objectives will guide your research in the right direction and ensure everyone’s on the same page. What’s more, all other aspects of planning this research—like the type of users you interview and the questions you ask—are closely tied to your objectives.

Hillary Omitogun, UX Research Consultant and Founder of HerSynergy Tribe, shares how she prepares for user interviews:

“I always start with a research plan. This has clear goals that align with the business's north star. I also create an interview guide. This includes questions I plan to ask, which are typically gathered from myself and team members, like a PM or designer. It also outlines how many people I'll be interviewing and who they are.”

Let’s look at a quick example of a good research goal:

Imagine you're building an AI-powered design tool to simplify and accelerate the UX design process. You want to test your assumptions about user behavior and refine your foundational ideas for this app with user interviews. So, the objectives of your user research process will be to identify:

- The shortcomings of current design tools

- Primary use cases for AI-powered design features

- Users’ expectations from an AI design solution

With goals like these, you’re looking at holding semi-structured, generative user interviews to find out more about user experiences and expectations.

💡 Head to Chapter 1 to learn more about the different types of user interviews and which is right for your research project.

2. Prepare your interview questions

Once you're clear on the research project's purpose, you can start outlining your interview scenario. This will include a basic script for how you want an interview to pan out. Divide this plan into segments for every theme or topic you want to cover.

Coming up with the right research questions involves a lot of effort—and your first set of questions likely won’t be the same as the questions you ask your last interviewee. You have to constantly refine the questions based on people's responses to get valuable insights, so consider question creation a continuous effort.

Haley Stracher, Design Director and CEO of Iris Design Collaborative, notes what to be wary of when you’re drafting user interview questions:

“Avoid asking leading questions at all costs. I use a lot of unbiased and open-ended questions like ‘how did this feel to you?’, instead of ‘did you like this process?’ This will get you the best data you’re looking for and avoid any cognitive biases creeping in.”

✨ Not sure where to start with user interview questions? Check out the Maze Question Bank for over 350 ready-to-go research questions, tried and tested by our own research team.

3. Recruit participants for your user research

When you’ve done this initial legwork, it’s time to find the right candidates for your interviews. Before you start searching for and recruiting research participants, study your target audience and create ideal user personas for your product.

These personas outline the characteristics to look for when shortlisting candidates. They also help you filter out those who don't fit the bill, saving you the effort of interviewing the wrong users.

You can also go back to your goals and define the types of target users you want to interview. For example, if you want to introduce your product to a new market, you can interview prospective customers who fit your personas to understand their expectations for your product. However, if your goal is to assess the effectiveness of your self-serve support setup, you need to talk to your existing users.

Here's the tricky part: finding the right users who match your defined personas. There are many ways to recruit participants for UX research, including:

- Spreading the word in relevant communities with project details and incentives

- Amplifying the message on social media channels and among your network

- Leveraging guerrilla testing tactics to approach your target audience

- Checking out participant databases or working with recruitment agencies

An example post calling for research participants

For example, the Maze Panel offers a quicker and more convenient option to find testers for research. You can browse a massive pool of over 280 million participants and narrow your search based on demographic and campaign engagement metrics.

What’s more, with Reach, you can go beyond recruitment to implement your complete outreach workflow from start to finish. Analyze individual profiles, create and send custom recruitment campaigns, and make your research process a breeze.

4. Make introductions and build rapport

Before conducting your interviews, ask participants to fill out a research consent form. UX research ethics and best practices require informed consent to ensure participants have a complete understanding of their role in the study, its expected outcomes, and how their contributions will be used.

Once you’ve obtained this written consent, you can start the interview by introducing yourself and sharing more context about the conversation.

Without delving into too much detail, briefly explain what the interview is for and your role in this project. Then, start with some lighter questions to learn more about each participant before moving on to your main points.

Pay attention to your body language, whether it's an in-person interview or a remote one. Your actions can make a huge difference in the interviewee's comfort and confidence. Consider these pointers when chatting with interviewees:

- Open with a smile and shake hands with interviewees (if in person, of course)

- Call interviewees by their names to build a connection

- Share some information about yourself, e.g., your job role, to put them at ease

- Be mindful of your body language and how it influences their perception of you

Overall, you want to maintain relaxed eye contact, nod frequently, and use empathetic, person-centered language.

5. Conduct the interview

This is what you’ve been working toward: it’s interview time. Both you and your participant should be ready to move into the interview stage after some introductions and chit-chat.

Switching from small talk to the interview should be seamless—you don’t want participants to suddenly feel like they’re under pressure.

Ease into the interview; it’s even worth considering whether there’s a way you can link your first question to some expected small talk. This way, you know you’re consciously transitioning into the interview, but the interviewee feels it’s a natural progression of the conversation.

How your interview will pan out depends on the type of interview you’re conducting. If it’s structured, you’ll likely work through the questions one at a time, but if it’s unstructured, it’ll feel more like an open conversation. If it’s semi-structured, you’ll find yourself somewhere between these two.

Before starting, I make it a point to set clear expectations and answer any questions the participants might have.

Hillary Omitogun, UX Research Consultant

It’s a good idea to keep your interview goals front and center when conducting interviews. You can’t get interview moments back—and you don’t want to find yourself wishing you’d asked an insight-packed follow-up question once you’ve completed the interview.

As with the introductions, keep your body language and tone positive and open. Keep participants engaged by using their name from time to time, and try to keep the interview as conversational as possible.

🛑 Remember to hit record

Taking notes is a great way to ensure you don’t miss anything during the interview, but don’t overdo it. You don’t want interviewees to feel like they’re under a microscope, and getting too stuck on note-taking can mean you lose rapport or miss important follow-up questions.

Recording your interview is an easy way to keep track of everything that’s said. It lets you stay present in the moment and then go over the participant’s answers with a fine-tooth comb after the interview. It also gives you a permanent record of the interview that you can share with colleagues and analyze faster using user interview tools.

Alternatively, if you prefer pen and paper, Hillary suggests inviting a colleague to take notes during the interview: “In the past, I’ve had a PM or designer join user interviews to help take notes. I have a note-taking guide and template I share ahead of time—note-taking isn’t as simple as just relaying what the user said in the interview.”

6. Wrap up

As a final step, debrief participants about the interview and thank them for participating. You can also invite them to ask follow-up questions about the project or request additional information.

This is also a good time to confirm the participant’s continued consent. Although they gave it at the start, they now know the full context of the interview and are in a better position to give complete, informed consent.

You should also discuss the next steps at this stage—like the incentive you promised or the project timeline. This quick debriefing round aims to keep them informed about the study and end on a positive note.

💡 What’s next?

We cover user interview analysis in Chapter 4. Jump ahead to learn how you can turn your user interview data into actionable insights.

Six best practices for conducting user interviews in UX research

The steps above show how to conduct user interviews, but running them well isn’t just about going through the motions. You’ll also need to work within a framework of UX interview best practices to ensure you’re getting quality insights.

Think of the six steps to conduct user interviews as the seeds you plant, and these six best practices as the fertilizer sprinkled on top to enhance growth and make sure those plants are as vibrant as possible!

Here’s how to fertilize your user interviews to ensure they produce the in-depth, focused customer insights you’re looking for.

1. Get consent

Getting participants' consent is non-negotiable in UX research interviews. This written consent serves two purposes: first, it informs users what the interview is for and how their data will be used; second, it ensures researcher accountability when it comes to protecting participants.

Researchers of any kind have a duty to protect research participants from any type of psychological or physical harm. During user interviews, you’re held to the same ethical standards as any other researcher; obtaining informed consent is a key step in the user interview process.

2. Prepare, prepare, prepare

Nobody wants user interviews to fall flat; they’re a time and energy investment for both researchers and participants. Whether it’s clear notes to yourself, prepared interview questions, or visual aids, being fully prepared is crucial for conducting successful user interviews.

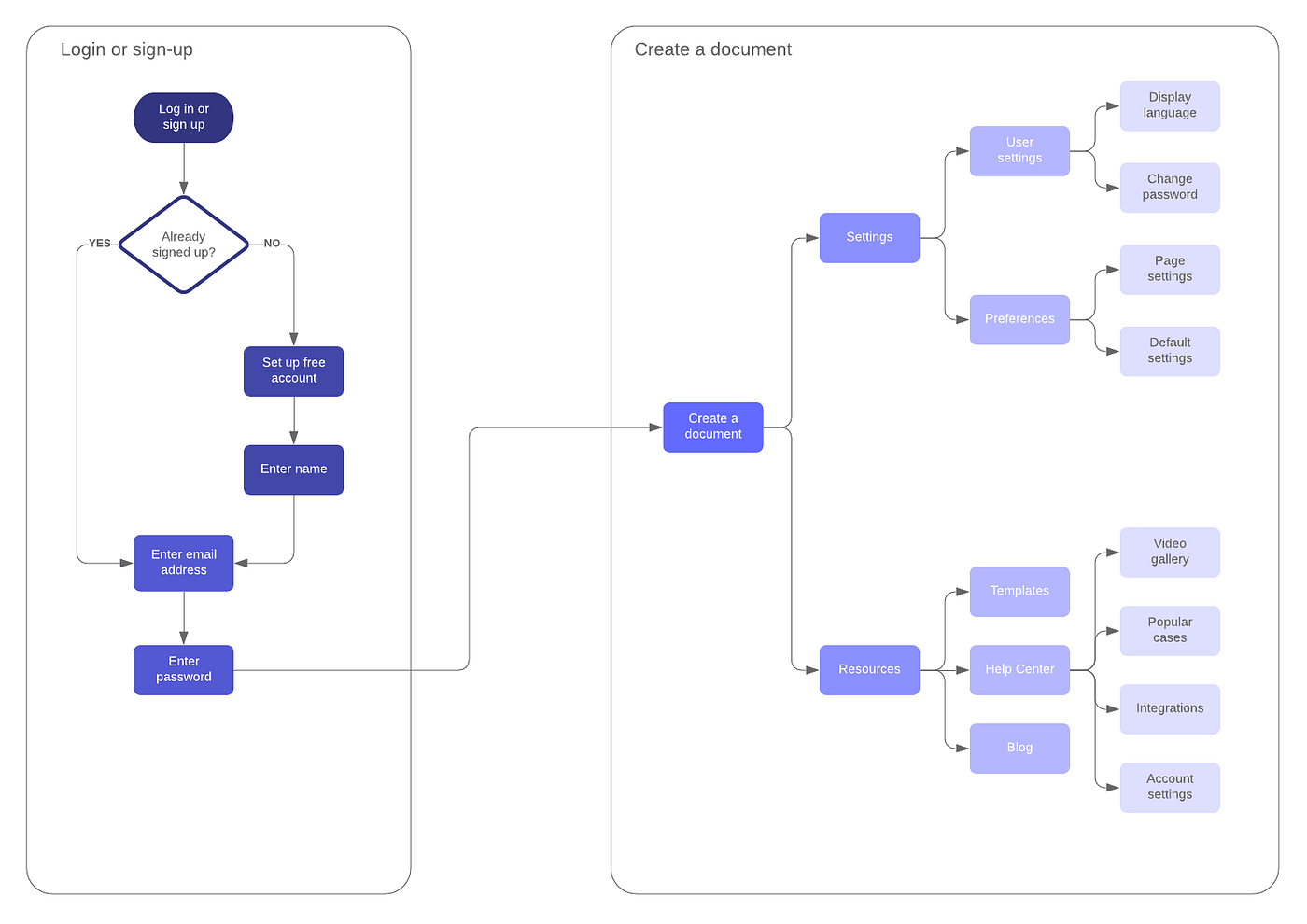

One example is focusing your interview around a journey flow. Journey flows are visualizations of the process users go through to complete a task with your product, service, or platform. Angling your user interview around a journey flow gives the interview structure and helps you pinpoint each decision a user makes, from initial product contact to feature interactions.

An example journey flow

3. Respect your user’s time

Interviews require considerable time and effort from both sides. Even after participants have agreed to the interview, make sure to confirm specifics like the topic of the interview, where it will be hosted (online or in-person), the expected time commitment, interview process, and any other details.

This does two things:

- It makes your interviewee feel respected and at ease, increasing the honesty and openness of their answers

- It increases the chance of your interviewee participating in follow-ups and future UX research projects

4. Make your interviewee comfortable

A good way to elicit authentic responses is to make users feel relaxed during the interview. Instead of hosting the session as a strict interview, carry it out like a relaxed conversation. Acknowledge user responses to help them feel confident and comfortable. Interviews can put participants under pressure—do your best to put them at ease.

That being said, Hillary highlights the need to balance warmth with impartiality:

“At the start of my career, I tried to be friendly by offering positive feedback like 'Oh, good information.' I realized this could unintentionally lead or bias the participants' responses, encouraging them to just repeat what they think I want to hear.”

5. Watch participant body language

While users answer your questions verbally, pay close attention to the subtle cues they communicate through body language. You want to understand what users do, not just what they say.

Reading body language complements verbal responses, giving you a clearer picture of your interviewee’s experience. It helps you identify the emotions that accompany the user's answers and gauge how comfortable or receptive they are during the interview.

Here are a few body language signs, and what they can mean:

- Averted eye contact: Can indicate that your interviewee feels bored or uninterested, or is being evasive

- Raised eyebrows: Often reflect surprise, uncertainty, or fear

- Mirroring: If an interviewee mimics your body language, it can be a sign of rapport and agreement, suggesting the interviewee is comfortable and engaged

⚠️ Read social cues with caution

Being mindful of nonverbal cues is helpful, but it's crucial not to over-rely on them. Our stereotypical understanding of body language doesn’t take into account neurodiversity, cultural differences, or even simple nerves.

For example, many neurodivergent people may avoid direct eye contact or fidget, but this doesn’t mean they aren’t listening or are bored. An anxious user may stumble over their words, but this isn’t necessarily a reflection of their feelings about the product.

6. Offer incentives to participants

For many UX teams, preparing a question list and conducting a user interview won’t be the most challenging part of the process. The real challenge is recruiting the right participants for the job. Offering an incentive can encourage users to take part in your interviews.