NPTEL Introduction to Machine Learning Assignment 1 Answers 2023

In this post, we have provided answers to NPTEL Introduction to Machine Learning Assignment 1. These answers are for reference only; please do your assignment on your own.

NPTEL Introduction To Machine Learning Week 1 Assignment Answer 2023

1. Which of the following is a supervised learning problem?

- Grouping related documents from an unannotated corpus.

- Predicting credit approval based on historical data.

- Predicting if a new image has cat or dog based on the historical data of other images of cats and dogs, where you are supplied the information about which image is cat or dog.

- Fingerprint recognition of a particular person used in biometric attendance from the fingerprint data of various other people and that particular person.

2. Which of the following are classification problems?

- Predict the runs a cricketer will score in a particular match.

- Predict which team will win a tournament.

- Predict whether it will rain today.

- Predict your mood tomorrow.

3. Which of the following is a regression task?

- Predicting the monthly sales of a cloth store in rupees.

- Predicting if a user would like to listen to a newly released song or not based on historical data.

- Predicting the confirmation probability (in fraction) of your train ticket whose current status is waiting list based on historical data.

- Predicting if a patient has diabetes or not based on historical medical records.

- Predicting if a customer is satisfied or unsatisfied with the product purchased from an e-commerce website using the reviews he/she wrote for the purchased product.

4. Which of the following is an unsupervised learning task?

- Group audio files based on language of the speakers.

- Group applicants to a university based on their nationality.

- Predict a student’s performance in the final exams.

- Predict the trajectory of a meteorite.

5. Which of the following is a categorical feature?

- Number of rooms in a hostel.

- Gender of a person

- Your weekly expenditure in rupees.

- Ethnicity of a person

- Area (in sq. centimeter) of your laptop screen.

- The color of the curtains in your room.

- Number of legs of an animal.

- Minimum RAM requirement (in GB) of a system to play a game like FIFA, DOTA.

6. Which of the following is a reinforcement learning task?

- Learning to drive a cycle

- Learning to predict stock prices

- Learning to play chess

- Learning to predict spam labels for e-mails

7. Let X and Y be uniformly distributed random variables over the intervals [0, 4] and [0, 6] respectively. If X and Y are independent, compute the probability P(max(X, Y) > 3).

- None of the above

9. Which of the following statements are true? Check all that apply.

- A model with more parameters is more prone to overfitting and typically has higher variance.

- If a learning algorithm is suffering from high bias, only adding more training examples may not improve the test error significantly.

- When debugging learning algorithms, it is useful to plot a learning curve to understand if there is a high bias or high variance problem.

- If a neural network has much lower training error than test error, then adding more layers will help bring the test error down because we can fit the test set better.

10. Bias and variance are given by :

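- \(E[\hat{f}(x)] - f(x),\; E[(E[\hat{f}(x)] - \hat{f}(x))^2]\)

- \(E[\hat{f}(x)] - f(x),\; \left(E[E[\hat{f}(x)] - \hat{f}(x)]\right)^2\)

- \((E[\hat{f}(x)] - f(x))^2,\; E[(E[\hat{f}(x)] - \hat{f}(x))^2]\)

- \((E[\hat{f}(x)] - f(x))^2,\; \left(E[E[\hat{f}(x)] - \hat{f}(x)]\right)^2\)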
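Under the usual definitions, bias is \(E[\hat{f}(x)] - f(x)\) and variance is \(E[(E[\hat{f}(x)] - \hat{f}(x))^2]\). These can be estimated numerically by retraining an estimator on many freshly drawn datasets. The sketch below is an illustration only. The setup is hypothetical: a true value \(f(x_0) = 2\), noisy observations, and an estimator that shrinks the sample mean by a factor `C`. In this setup the theoretical bias is \((C - 1) f(x_0)\) and the theoretical variance is \(C^2\sigma^2/N\).

```python
import numpy as np

def bias_variance(estimator, f_x0, n_trials=20000, seed=0):
    """Monte Carlo estimate of the bias and variance of an estimator at one point x0.

    `estimator(rng)` must draw a fresh training set and return f_hat(x0).
    """
    rng = np.random.default_rng(seed)
    preds = np.array([estimator(rng) for _ in range(n_trials)])
    mean_pred = preds.mean()                       # E[f_hat(x0)]
    bias = mean_pred - f_x0                        # E[f_hat(x0)] - f(x0)
    variance = np.mean((mean_pred - preds) ** 2)   # E[(E[f_hat(x0)] - f_hat(x0))^2]
    return bias, variance

# Hypothetical setup: true value f(x0), noise std, samples per training set, shrinkage
F_X0, SIGMA, N, C = 2.0, 1.0, 10, 0.8

def shrunk_mean(rng):
    """Draw N noisy observations of f(x0) and return C times their mean."""
    y = F_X0 + SIGMA * rng.standard_normal(N)
    return C * y.mean()

bias, variance = bias_variance(shrunk_mean, F_X0)
print(f"bias ~ {bias:.3f} (theory {(C - 1) * F_X0:.3f})")
print(f"variance ~ {variance:.4f} (theory {C**2 * SIGMA**2 / N:.4f})")
```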

NPTEL Introduction to Machine Learning Assignment 1 Answers 2022 [July-Dec]

1. Which of the following are supervised learning problems? (multiple may be correct)

- a. Learning to drive using a reward signal.

- b. Predicting disease from blood sample.

- c. Grouping students in the same class based on similar features.

- d. Face recognition to unlock your phone.

2. Which of the following are classification problems? (multiple may be correct)

- a. Predict the runs a cricketer will score in a particular match.

- b. Predict which team will win a tournament.

- c. Predict whether it will rain today.

- d. Predict your mood tomorrow.


3. Which of the following is a regression task? (multiple options may be correct)

- a. Predict the price of a house 10 years after it is constructed.

- b. Predict if a house will be standing 50 years after it is constructed.

- c. Predict the weight of food wasted in a restaurant during next month.

- d. Predict the sales of a new Apple product.

4. Which of the following is an unsupervised learning task? (multiple options may be correct)

- a. Group audio files based on language of the speakers.

- b. Group applicants to a university based on their nationality.

- c. Predict a student’s performance in the final exams.

- d. Predict the trajectory of a meteorite.

5. Given below is your dataset. You are using KNN regression with K=3. What is the prediction for a new input value (3, 2)?
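The dataset for this question is not reproduced here. The sketch below therefore uses a small hypothetical table to show how K-nearest-neighbour regression with K = 3 forms a prediction. It finds the three training points closest to the query (Euclidean distance is assumed) and averages their targets.

```python
import numpy as np

def knn_regress(X_train, y_train, x_query, k=3):
    """Predict the mean target of the k training points closest to x_query."""
    dists = np.linalg.norm(X_train - x_query, axis=1)  # Euclidean distance to each sample
    nearest = np.argsort(dists)[:k]                      # indices of the k nearest samples
    return y_train[nearest].mean()

# Hypothetical dataset: the table from the original question is not reproduced here.
X_train = np.array([[1, 1], [2, 2], [3, 3], [4, 1], [5, 5]], dtype=float)
y_train = np.array([1.0, 2.0, 3.0, 4.0, 5.0])

pred = knn_regress(X_train, y_train, np.array([3.0, 2.0]), k=3)
print(pred)  # neighbours (2,2), (3,3), (4,1) -> (2 + 3 + 4) / 3 = 3.0
```

With the real table, only `X_train` and `y_train` would change.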

6. Which of the following is a reinforcement learning task? (multiple options may be correct)

7. Find the mean of squared error for the given predictions:

8. Find the mean of 0-1 loss for the given predictions:
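The prediction tables for questions 7 and 8 are not reproduced here. The two losses can still be sketched with hypothetical values:

- The mean squared error averages \((y_i - \hat{y}_i)^2\).
- The mean 0-1 loss is the fraction of predictions that differ from the true label.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

def mean_zero_one_loss(y_true, y_pred):
    """Fraction of predictions that differ from the true labels."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))

# Hypothetical values (the tables from the original questions are not reproduced here)
print(mean_squared_error([3.0, 1.0, 2.0], [2.0, 1.0, 4.0]))   # (1 + 0 + 4) / 3 = 1.666...
print(mean_zero_one_loss([1, 0, 1, 1], [1, 1, 1, 0]))         # 2 mistakes / 4 = 0.5
```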


9. Bias and variance are given by:

10. Which of the following are true about bias and variance? (multiple options may be correct)


About Introduction to Machine Learning

With the increased availability of data from varied sources, there has been increasing attention paid to various data-driven disciplines such as analytics and machine learning. In this course we intend to introduce some of the basic concepts of machine learning from a mathematically well-motivated perspective. We will cover the different learning paradigms and some of the more popular algorithms and architectures used in each of these paradigms.

COURSE LAYOUT

- Week 0: Probability Theory, Linear Algebra, Convex Optimization – (Recap)

- Week 1: Introduction: Statistical Decision Theory – Regression, Classification, Bias Variance

- Week 2: Linear Regression, Multivariate Regression, Subset Selection, Shrinkage Methods, Principal Component Regression, Partial Least Squares

- Week 3: Linear Classification, Logistic Regression, Linear Discriminant Analysis

- Week 4: Perceptron, Support Vector Machines

- Week 5: Neural Networks – Introduction, Early Models, Perceptron Learning, Backpropagation, Initialization, Training & Validation, Parameter Estimation – MLE, MAP, Bayesian Estimation

- Week 6: Decision Trees, Regression Trees, Stopping Criterion & Pruning, Loss Functions, Categorical Attributes, Multiway Splits, Missing Values, Decision Trees – Instability, Evaluation Measures

- Week 7: Bootstrapping & Cross Validation, 2-Class Evaluation Measures, ROC Curve, MDL, Ensemble Methods – Bagging, Committee Machines and Stacking, Boosting

- Week 8: Gradient Boosting, Random Forests, Multi-class Classification, Naive Bayes, Bayesian Networks

- Week 9: Undirected Graphical Models, HMM, Variable Elimination, Belief Propagation

- Week 10: Partitional Clustering, Hierarchical Clustering, BIRCH Algorithm, CURE Algorithm, Density-based Clustering

- Week 11: Gaussian Mixture Models, Expectation Maximization

- Week 12: Learning Theory, Introduction to Reinforcement Learning, Optional videos (RL framework, TD learning, Solution Methods, Applications)

CRITERIA TO GET A CERTIFICATE

Average assignment score = 25% of the average of the best 8 assignments out of the total 12 assignments given in the course.

Exam score = 75% of the proctored certification exam score out of 100.

Final score = Average assignment score + Exam score

YOU WILL BE ELIGIBLE FOR A CERTIFICATE ONLY IF AVERAGE ASSIGNMENT SCORE >= 10/25 AND EXAM SCORE >= 30/75. If either criterion is not met, you will not get the certificate even if the final score >= 40/100.
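The scoring rule above can be written as a small function. This is a minimal sketch that reads the rule literally. It assumes each assignment and the exam are scored out of 100; the page does not state this for assignments.

```python
def nptel_score(assignment_scores, exam_score):
    """Return (assignment part /25, exam part /75, final /100, eligible).

    Assumes each assignment score and the exam score are out of 100.
    """
    best8 = sorted(assignment_scores, reverse=True)[:8]
    assignment_part = 0.25 * sum(best8) / len(best8)   # 25% of the best-8 average
    exam_part = 0.75 * exam_score                       # 75% of the exam score
    final = assignment_part + exam_part
    eligible = assignment_part >= 10 and exam_part >= 30
    return assignment_part, exam_part, final, eligible

# Full marks on 8 assignments but 36/100 in the exam: final >= 40, yet no certificate.
print(nptel_score([100] * 8 + [0] * 4, 36))  # (25.0, 27.0, 52.0, False)
```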

NPTEL Introduction to Machine Learning Assignment 1 Answers [Jan – June 2022]

Q1. Which of the following is a supervised learning problem?

- a. Grouping related documents from an unannotated corpus.

- b. Predicting credit approval based on historical data.

- c. Predicting rainfall based on historical data.

- d. Predicting if a customer is going to return or keep a particular product he/she purchased from an e-commerce website, based on historical data about the customer's purchases and the particular product.

- e. Fingerprint recognition of a particular person used in biometric attendance from the fingerprint data of various other people and that particular person.

Answer:- b, c, d, e

Q2. Which of the following is not a classification problem?

- a. Predicting the temperature (in Celsius) of a room from other environmental features (such as atmospheric pressure, humidity, etc.).

- b. Predicting if a cricket player is a batsman or bowler given his playing records.

- c. Predicting the price of a house (in INR) based on data consisting of the prices of other houses (in INR) and their features such as area, number of rooms, location, etc.

- d. Filtering of spam messages.

- e. Predicting the weather for tomorrow as “hot”, “cold”, or “rainy” based on historical data on wind speed, humidity, temperature, and precipitation.

Answer:- a, c

Q3. Which of the following is a regression task? (multiple options may be correct)

- a. Predicting the monthly sales of a cloth store in rupees.

- b. Predicting if a user would like to listen to a newly released song or not based on historical data.

- c. Predicting the confirmation probability (in fraction) of your train ticket whose current status is waiting list based on historical data.

- d. Predicting if a patient has diabetes or not based on historical medical records.

- e. Predicting if a customer is satisfied or unsatisfied with the product purchased from an e-commerce website using the reviews he/she wrote for the purchased product.

Q4. Which of the following is an unsupervised task?

- a. Predicting if a new edible item is sweet or spicy based on the information of the ingredients, their quantities, and labels (sweet or spicy) for many other similar dishes.

- b. Grouping related documents from an unannotated corpus.

- c. Grouping of hand-written digits from their image.

- d. Predicting the time (in days) a PhD student will take to complete his/her thesis to earn a degree based on the historical data such as qualifications, department, institute, research area, and time taken by other scholars to earn the degree.

- e. all of the above

Answer:- b, c

Q5. Which of the following is a categorical feature?

- a. Number of rooms in a hostel.

- b. Minimum RAM requirement (in GB) of a system to play a game like FIFA, DOTA.

- c. Your weekly expenditure in rupees.

- d. Ethnicity of a person

- e. Area (in sq. centimeter) of your laptop screen.

- f. The color of the curtains in your room.

Answer:- d, f

Q6. Let X and Y be uniformly distributed random variables over the intervals [0, 4] and [0, 6] respectively. If X and Y are independent, compute the probability P(max(X, Y) > 3).

- a. 1/6

- b. 5/6

- c. 2/3

- d. 1/2

- e. 2/6

- f. 5/8

- g. None of the above
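The event max(X, Y) ≤ 3 happens exactly when both X ≤ 3 and Y ≤ 3. By independence and the complement rule:

P(max(X, Y) > 3) = 1 − (3/4)(3/6) = 1 − 3/8 = 5/8

This is option f above. A minimal check, exact first and then by Monte Carlo simulation:

```python
from fractions import Fraction
import numpy as np

# Exact: max(X, Y) <= 3 exactly when X <= 3 and Y <= 3 (independence lets us multiply).
p_x_le_3 = Fraction(3, 4)   # X ~ U[0, 4]
p_y_le_3 = Fraction(3, 6)   # Y ~ U[0, 6]
p_exact = 1 - p_x_le_3 * p_y_le_3
print(p_exact)  # 5/8

# Monte Carlo sanity check
rng = np.random.default_rng(0)
x = rng.uniform(0, 4, 1_000_000)
y = rng.uniform(0, 6, 1_000_000)
p_mc = np.mean(np.maximum(x, y) > 3)
print(round(float(p_mc), 3))  # close to 0.625
```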


Q7. Let the trace and determinant of a matrix \(A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}\) be 6 and 16 respectively. The eigenvalues of A are
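For a 2×2 matrix, the eigenvalues are the roots of \(\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A) = 0\). Here that equation is \(\lambda^2 - 6\lambda + 16 = 0\), so \(\lambda = 3 \pm i\sqrt{7}\). The sketch below checks this numerically. It also uses a hypothetical matrix chosen only because it has trace 6 and determinant 16.

```python
import numpy as np

trace, det = 6.0, 16.0
# Eigenvalues of a 2x2 matrix solve  lambda^2 - trace*lambda + det = 0
eigs = np.roots([1.0, -trace, det])
print(eigs)  # 3 +/- i*sqrt(7), i.e. roughly 3 +/- 2.6458i

# Cross-check with a hypothetical matrix that has this trace and determinant
A = np.array([[3.0, -7.0], [1.0, 3.0]])  # trace 6, det 3*3 - (-7)*1 = 16
print(np.linalg.eigvals(A))
```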

Q8. What happens when your model complexity increases? (multiple options may be correct)

- a. Model Bias decreases

- b. Model Bias increases

- c. Variance of the model decreases

- d. Variance of the model increases

Answer:- a, d

Q9. A new phone, E-Corp X1 has been announced and it is what you’ve been waiting for, all along. You decide to read the reviews before buying it. From past experiences, you’ve figured out that good reviews mean that the product is good 90% of the time and bad reviews mean that it is bad 70% of the time. Upon glancing through the reviews section, you find out that the X1 has been reviewed 1269 times and only 172 of them were bad reviews. What is the probability that, if you order the X1, it is a bad phone?

- a. 0.136

- b. 0.160

- c. 0.360

- d. 0.840

- e. 0.773

- f. 0.573

- g. 0.181
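One reading of this question treats the observed share of good and bad reviews as the probability of seeing each kind of review. Under that assumption, the law of total probability gives:

P(bad phone) = P(bad | good review) P(good review) + P(bad | bad review) P(bad review)

That is 0.1 × 1097/1269 + 0.7 × 172/1269 ≈ 0.181, which is option g. A minimal sketch of the arithmetic:

```python
n_reviews, n_bad_reviews = 1269, 172

p_bad_review = n_bad_reviews / n_reviews        # share of bad reviews
p_good_review = 1 - p_bad_review
p_bad_phone_given_good_review = 1 - 0.90        # good reviews are right 90% of the time
p_bad_phone_given_bad_review = 0.70             # bad reviews are right 70% of the time

# Law of total probability over the two kinds of review
p_bad_phone = (p_bad_phone_given_good_review * p_good_review
               + p_bad_phone_given_bad_review * p_bad_review)
print(round(p_bad_phone, 3))  # 0.181
```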

Q10. Which of the following are false about bias and variance of overfitted and underfitted models? (multiple options may be correct)

- a. Underfitted models have high bias.

- b. Underfitted models have low bias.

- c. Overfitted models have low variance.

- d. Overfitted models have high variance.

NPTEL Introduction to Machine Learning Assignment 1 Answers 2022:- In this article, we have provided the answers to Introduction to Machine Learning Assignment 1.

Disclaimer:- We do not claim 100% surety of these solutions. They are based on our sole expertise, and by posting these answers we are simply looking to help students with a reference, so we urge you to do your assignment on your own.


Assignments


There will be one homework (HW) for each topical unit of the course, due about a week after we finish that unit.

These are intended to build your conceptual analysis skills plus your implementation skills in Python.

- HW0 : Numerical Programming Fundamentals

- HW1 : Regression, Cross-Validation, and Regularization

- HW2 : Evaluating Binary Classifiers and Implementing Logistic Regression

- HW3 : Neural Networks and Stochastic Gradient Descent

- HW4 : Trees

- HW5 : Kernel Methods and PCA

After completing each unit, there will be a 20-minute quiz (taken online via Gradescope).

Each quiz will be designed to assess your conceptual understanding about each unit.

Each quiz will probably have about 10 questions. Most questions will be true/false or multiple choice, with perhaps 1-3 short-answer questions.

You can view the conceptual questions in each unit's in-class demos/labs and homework as good practice for the corresponding quiz.

There will be three larger "projects" throughout the semester:

- Project A: Classifying Images with Feature Transformations

- Project B: Classifying Sentiment from Text Reviews

- Project C: Recommendation Systems for Movies

Projects are meant to be open-ended and encourage creativity. They are meant to be case studies of applications of the ML concepts from class to three "real world" use cases: image classification, text classification, and recommendations of movies to users.

Each project will be due approximately 4 weeks after being handed out. Start early! Do not wait until the last few days.

Projects will generally be centered around a particular methodology for solving a specific task and involve significant programming (with some combination of developing core methods from scratch or using existing libraries). You will need to consider some conceptual issues, write a program to solve the task, and evaluate your program through experiments to compare the performance of different algorithms and methods.

Your main deliverable will be a short report (2-4 pages), describing your approach and providing several figures/tables to explain your results to the reader.

You’ll be assessed on effort, the sophistication of your technical approach, the clarity of your explanations, the evidence that you present to support your evaluative claims, and the performance of your implementation. A high-performing approach with little explanation will receive little credit, while a careful set of experiments that illuminate why a particular direction turned out to be a dead end may receive close to full credit.

Introduction to Machine Learning

Assignment 1: Classifier by Hand

In this assignment, you learn step by step how to code a binary classifier by hand!

Don’t worry, you will be guided through it.

Introduction: calorimeter showers

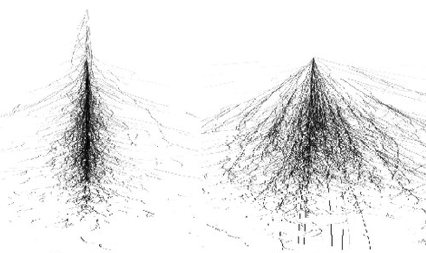

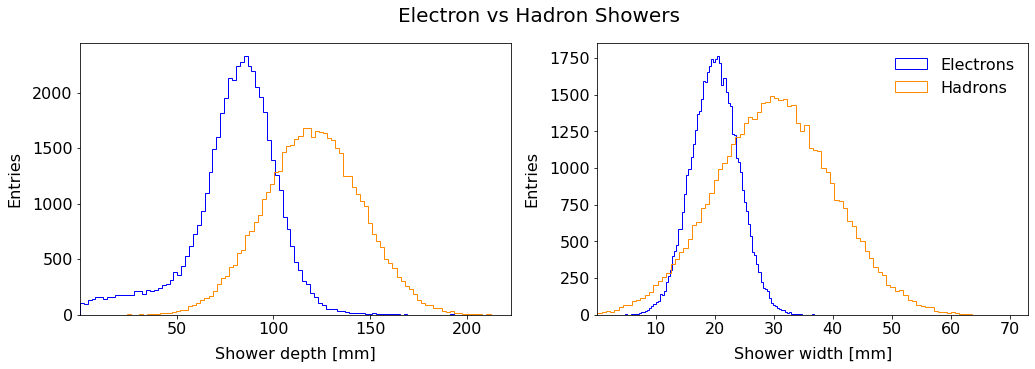

A calorimeter in the context of experimental particle physics is a sub-detector that measures the energy of incoming particles. At the CERN Large Hadron Collider, the giant multipurpose detectors ATLAS and CMS are both equipped with electromagnetic and hadronic calorimeters. The electromagnetic calorimeter measures the energy of incoming electrons. It is a destructive method: the energetic electron entering the calorimeter interacts with its dense material via the electromagnetic force. This eventually generates a shower of particles (an electromagnetic shower) with a characteristic average depth and width. The depth is along the direction of the incoming particle and the width is the dimension perpendicular to it.

Problem? There can be noisy signals in electromagnetic calorimeters that are generated by hadrons, not electrons.

Your mission is to help physicists by coding a classifier to select electron-showers (signal) from hadron-showers (background).

To this end, you are given a dataset of shower characteristics from previous measurements where the incoming particle was known. The main features are the depth and the width.

Visualization of an electron shower (left) and hadron shower (right). From ResearchGate.

Hadron showers are on average longer in the direction of the incoming hadron (depth) and wider in the transverse direction (width).

1. Get the Data

Download the dataset and put it on your Google Drive. Open a new Jupyter notebook in Google Colab. To mount your drive:

For the following, import the NumPy and pandas libraries:

1.1 Get and load the data Read the dataset into a dataframe df . What are the columns? Which column stores the labels (targets)?

1.2 How many samples are there?
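The original loading code is not reproduced here. A minimal sketch of steps 1.1 and 1.2 follows. The Drive path is left for you to fill in. A tiny hypothetical CSV stands in for the real file, and its column names (`depth`, `width`, `type`) are assumptions, not the dataset's actual headers.

```python
import io
import numpy as np
import pandas as pd

# In Colab the file would first be made visible with:
#   from google.colab import drive; drive.mount('/content/drive')
# and then read with pd.read_csv(<path to the file on your Drive>).
# Here a tiny hypothetical CSV stands in for it; the column names are assumptions.
csv_text = """depth,width,type
10.2,2.1,electron
11.5,2.4,electron
9.8,1.9,electron
18.7,4.6,hadron
21.3,5.2,hadron
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.columns.tolist())  # ['depth', 'width', 'type']; 'type' holds the labels here
print(len(df))              # number of samples: 5
```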

2. Feature Scaling

2.1 Explain If the parameters are initialized randomly between 0 and 1 and the data are not zero-centered, what happens to the gradient descent? Explain the behaviour.

2.2 Standardization Create for each input feature an extra column in the dataframe to rescale it to a distribution of zero-mean and unit-variance. To see statistical information on a dataframe, a convenient method is:

We will take \(x_1\) and \(x_2\) in the order of the dataframe’s columns. By searching in the documentation for methods retrieving the mean and standard deviation, complete the following code:

Hint: recall Definition 12 and the equation to scale a feature according to the standardization method.

Check your results by calling df.describe() on the updated dataframe.
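A minimal sketch of the standardization step, \(x' = (x - \mu)/\sigma\), applied column by column. The column names and values are hypothetical. Note that pandas' `.std()` uses the sample standard deviation (`ddof=1`) by default. If the lecture's definition uses the population standard deviation, pass `ddof=0` instead.

```python
import pandas as pd

def add_scaled_columns(df, features):
    """Add a '<feature>_scaled' column with zero mean and unit variance for each feature."""
    for col in features:
        mean = df[col].mean()
        std = df[col].std()  # pandas uses the sample std (ddof=1) by default
        df[col + "_scaled"] = (df[col] - mean) / std
    return df

# Hypothetical values; in the assignment these columns come from the real dataset.
df = pd.DataFrame({"depth": [10.2, 11.5, 9.8, 18.7, 21.3],
                   "width": [2.1, 2.4, 1.9, 4.6, 5.2]})
df = add_scaled_columns(df, ["depth", "width"])
print(df.describe())  # *_scaled columns: mean ~0, std 1
```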

3. Data Prep

Let’s make the dataset ready for the classifier. As seen in class, the hypothesis function in the linear assumption contains the dot product \(\sum_{j=0}^n x^{(i)}_j \theta_j = x^{(i)} \theta^{\; T}\), where by convention \(x_0 = 1\). With 2 input features, there are three parameters to optimize: one for each feature plus the intercept term \(\theta_0\). To perform the dot product above, let’s add a column to the dataframe:

3.1 Adding x0 column Add a column x0 to the dataframe df filled entirely with ones.

3.2 Matrix X Create a new dataframe X that contains the x0 column and the columns of the two scaled input features.

3.3 Labels to binary The target column contains alphabetical labels. Create an extra column in your dataframe called y containing 1 if the sample is an electron shower and 0 if it is a hadron one.

Hint: you can first create an extra column full of 0, then apply a filter using the .loc property from DataFrame. It can be in the form:

Read the pandas documentation on .loc .

3.4 Vector y Extract from the dataframe this y column with the binary labels in a separate dataframe y .
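Steps 3.1 to 3.4 can be sketched as below. The dataframe is a hypothetical stand-in, and its column names (`depth_scaled`, `width_scaled`, `type`) and label strings are assumptions. The label filter uses `.loc` with a boolean mask, as the hint suggests.

```python
import pandas as pd

# Hypothetical dataframe after step 2: scaled features plus a text label column.
df = pd.DataFrame({
    "depth_scaled": [-0.9, -0.5, -1.0, 1.0, 1.4],
    "width_scaled": [-0.8, -0.6, -1.0, 0.9, 1.5],
    "type": ["electron", "electron", "electron", "hadron", "hadron"],
})

df["x0"] = 1                                        # 3.1 intercept column of ones
X = df[["x0", "depth_scaled", "width_scaled"]]      # 3.2 design matrix
df["y"] = 0                                         # 3.3 start with all zeros...
df.loc[df["type"] == "electron", "y"] = 1           # ...then mark electron showers as 1
y = df[["y"]]                                       # 3.4 double brackets keep a DataFrame

print(X.shape, y.shape)   # (5, 3) (5, 1)
print(y["y"].tolist())    # [1, 1, 1, 0, 0]
```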

4. DataFrames to NumPy

The inputs are almost ready, yet there are still some steps. As we saw in Lecture 3, in the Performance Metrics part, we need to split the dataset into a training set and a test set. We will use the very convenient train_test_split function from Scikit-Learn. Then, we convert the resulting dataframes to NumPy arrays. NumPy is the standard Python library for numerical arrays in data science. In this assignment, you will manipulate NumPy arrays and build the foundations you need to master the programming aspect of machine learning.

Copy this code into your notebook:

4.1 Shapes Show the dimensions of the four NumPy arrays using the .shape property. Comment on the numbers with respect to the notations defined in class. Do they make sense?

4.2 Test size Looking at the shapes, explain what test_size represents.
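The code the assignment asks you to copy is not reproduced here. The following is a hedged sketch using the same Scikit-Learn `train_test_split` function on hypothetical data with 10 samples. The values `test_size=0.2` and `random_state=42` are assumptions. A float `test_size` is the fraction of samples held out for testing, so the arrays come out as (m, n+1) for X and (m, 1) for y.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical X (x0 plus two scaled features) and y with 10 samples.
X = pd.DataFrame({"x0": np.ones(10),
                  "depth_scaled": np.linspace(-1.5, 1.5, 10),
                  "width_scaled": np.linspace(-1.0, 1.0, 10)})
y = pd.DataFrame({"y": [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]})

# test_size=0.2 keeps 20% of the samples for testing; random_state makes it reproducible
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
X_train, X_test = X_train.to_numpy(), X_test.to_numpy()
y_train, y_test = y_train.to_numpy(), y_test.to_numpy()

print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
# (8, 3) (2, 3) (8, 1) (2, 1)
```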

5. Useful Functions

We saw a lot of functions in the lectures: hypothesis, logistic, cost, etc. We will code these functions as python functions to make the code more modular and easier to read.

In the following you will work with two dimensional NumPy arrays. Make sure the objects you declare in the code have the correct dimension (number of rows and number of columns).

To help you, look at the examples below. This creates a 2 x 3 matrix:

This is a list:

This makes a 1D NumPy array:

This declares a 2D NumPy array with one row (a.k.a. a row vector):
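The original example snippets are not reproduced here. Objects like the ones described above look like this. The transposed column vector at the end is an extra case added here, because the functions in this section work with (m × 1) columns.

```python
import numpy as np

M = np.array([[1, 2, 3],
              [4, 5, 6]])      # 2 x 3 matrix
lst = [1, 2, 3]                # plain Python list (no shape)
v = np.array([1, 2, 3])        # 1D NumPy array
row = np.array([[1, 2, 3]])    # 2D array with one row (row vector)
col = row.T                    # 2D column vector

print(M.shape, v.shape, row.shape, col.shape)  # (2, 3) (3,) (1, 3) (3, 1)
```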

5.1 Linear Sum Write a function computing the linear sum of the features \(X\) with the \(\boldsymbol{\theta}\) parameters for each input sample.

What should be the dimensions of the returned object? Make sure your function returns a 2D array with the correct shape.

5.2 Logistic Function Write a function computing the logistic function:

5.3 Hypothesis Function Using the two functions above, write the hypothesis function \(h_\theta(\boldsymbol{x^{(i)}})\) :

5.4 Partial Derivatives of Cross-Entropy Cost Function In the linear assumption where \(z(\boldsymbol{x^{(i)}}) = \sum_{j=0}^n \theta_j x^{(i)}_j\) , the partial derivatives of the cross-entropy cost function are:

Write a function that takes three column vectors (m \(\times\) 1) and computes the partial derivatives \(\frac{\partial}{\partial \theta_j} J(\theta)\) for a given feature \(j\) :

Hint: perform an array operation to store all the derivatives in a column vector. Then sum over the elements of that vector. At the end your function should return a scalar, i.e. one value.

5.5 Cross-Entropy Cost Function Write a function computing the total cost from the 2D column vectors of predictions and observations:
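A minimal sketch of functions 5.1 to 5.5 follows. It assumes the usual \(1/m\)-normalized cross-entropy:

\(J(\theta) = -\frac{1}{m}\sum_i \left[y^{(i)} \log h_\theta(x^{(i)}) + (1 - y^{(i)}) \log(1 - h_\theta(x^{(i)}))\right]\)

and its derivative:

\(\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_i \left(h_\theta(x^{(i)}) - y^{(i)}\right) x^{(i)}_j\)

Check both against the lecture's definitions. `h_class` is the name the assignment uses later. The other function names are hypothetical. \(\theta\) is stored as a (1, n+1) row vector, matching \(x^{(i)} \theta^{\; T}\).

```python
import numpy as np

def lin_sum(X, thetas):
    """z for every sample: X is (m, n+1), thetas is a (1, n+1) row vector -> (m, 1)."""
    return X @ thetas.T

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def h_class(X, thetas):
    """Hypothesis h_theta(x) = sigmoid(theta . x) for every sample -> (m, 1)."""
    return sigmoid(lin_sum(X, thetas))

def derivatives_cross_entropy(y_preds, y_obs, x_feature):
    """dJ/dtheta_j from three (m, 1) column vectors; returns a scalar."""
    m = y_preds.shape[0]
    terms = (y_preds - y_obs) * x_feature   # element-wise, still (m, 1)
    return float(np.sum(terms) / m)

def cross_entropy_cost(y_preds, y_obs, eps=1e-12):
    """Mean binary cross-entropy; eps keeps log() away from 0."""
    p = np.clip(y_preds, eps, 1 - eps)
    m = y_preds.shape[0]
    return float(-np.sum(y_obs * np.log(p) + (1 - y_obs) * np.log(1 - p)) / m)

X = np.array([[1.0, 0.0, 0.0],
              [1.0, 1.0, 1.0]])   # x0 column plus two features
thetas = np.zeros((1, 3))
y_obs = np.array([[0.0], [1.0]])

y_preds = h_class(X, thetas)
print(y_preds.shape)                                           # (2, 1)
print(derivatives_cross_entropy(y_preds, y_obs, X[:, [1]]))    # -0.25
print(cross_entropy_cost(y_preds, y_obs))                      # log(2) ~ 0.693
```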

6. Classifier

The core of the action.

Luckily, a skeleton is provided. You will have to replace the statements # ... with proper code. It will mostly consist of calling the functions you defined in the previous section.

Test your code frequently. To do so, you can assign dummy temporary values for the variables you do not use yet, so that python knows they are defined and your code can run.

If you struggle or cannot finish, summarize in your notebook your trials and investigations.

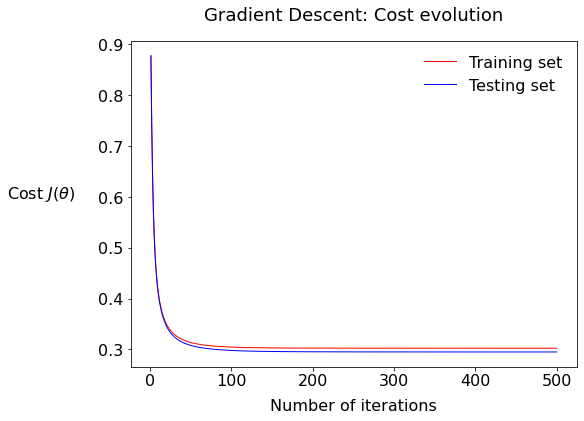
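The skeleton itself is only shown as an image. The following is therefore one possible shape of the training loop, not the provided skeleton. It runs batch gradient descent with random initial parameters in [0, 1), a learning rate `alpha`, and a fixed number of epochs; `alpha=0.5` and `n_epochs=200` are assumed values. All parameters are updated simultaneously, and the train and test costs are recorded after every epoch for the plot in section 7. The toy data is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def h_class(X, thetas):
    return sigmoid(X @ thetas.T)                 # (m, 1) probabilities

def cross_entropy_cost(y_preds, y_obs, eps=1e-12):
    p = np.clip(y_preds, eps, 1 - eps)
    return float(-np.mean(y_obs * np.log(p) + (1 - y_obs) * np.log(1 - p)))

def train_classifier(X_train, y_train, X_test, y_test, alpha=0.5, n_epochs=200, seed=0):
    """Batch gradient descent on the cross-entropy cost.

    Returns the final (1, n+1) parameters and the train/test cost after each epoch.
    """
    rng = np.random.default_rng(seed)
    n_params = X_train.shape[1]
    thetas = rng.random((1, n_params))           # random init in [0, 1)
    costs_train, costs_test = [], []
    for _ in range(n_epochs):
        y_preds = h_class(X_train, thetas)
        # dJ/dtheta_j for every j, computed before any parameter is changed
        grads = np.array([np.mean((y_preds - y_train) * X_train[:, [j]])
                          for j in range(n_params)])
        thetas = thetas - alpha * grads.reshape(1, -1)   # simultaneous update
        costs_train.append(cross_entropy_cost(h_class(X_train, thetas), y_train))
        costs_test.append(cross_entropy_cost(h_class(X_test, thetas), y_test))
    return thetas, costs_train, costs_test

# Usage on hypothetical, already-standardized toy data with an x0 column of ones.
rng = np.random.default_rng(1)

def make_split(n):
    signal = rng.normal(-1.0, 0.7, size=(n, 2))       # label 1
    background = rng.normal(1.0, 0.7, size=(n, 2))    # label 0
    X = np.hstack([np.ones((2 * n, 1)), np.vstack([signal, background])])
    y = np.vstack([np.ones((n, 1)), np.zeros((n, 1))])
    return X, y

X_train, y_train = make_split(100)
X_test, y_test = make_split(25)
thetas, costs_train, costs_test = train_classifier(X_train, y_train, X_test, y_test)
print(thetas, costs_train[0], costs_train[-1])
```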

7. Plot cost versus epochs

Use the following macro to plot the variation of the total cost vs the iteration number:

Call this macro:

You should get something like this:

7.1: Describe the plot; what is the fundamental difference between the two series train and test?

7.2: What would it mean if there were a bigger gap between the test and training values of the cost?

8. Performance

We will write our own functions to quantitatively assess the performance of the classifier.

Before counting the true and false predictions, we need… predictions! We already wrote a function h_class that outputs a prediction as a continuous variable between 0 and 1, equivalent to a probability. The function below calls h_class and then fills a Python list with binary predictions, either 0 or 1. For the boundary, recall from the lecture that the sigmoid is symmetric around y = 0.5, so we will use this boundary for now. Copy this to your notebook:

Call the function:

We will work with lists from now on, so flatten the observed test values:

8.1 Accuracy Write a function computing the accuracy of the classifier:

Call your function using the test set and print the result.

Call your function, still with the test set of course, and print the result.
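The prediction function referred to above is not reproduced here. A minimal sketch of thresholding and accuracy follows, using hypothetical probabilities. It assumes that a probability exactly equal to 0.5 counts as class 1; that tie rule is a choice, not something the assignment specifies. The observed labels are flattened into a plain list as described.

```python
import numpy as np

def make_predictions(probs, threshold=0.5):
    """Turn h_class probabilities into a flat list of 0/1 labels."""
    return [1 if p >= threshold else 0 for p in np.ravel(probs)]

def accuracy(y_preds, y_obs):
    """Fraction of samples whose predicted label equals the observed label."""
    correct = sum(1 for p, o in zip(y_preds, y_obs) if p == o)
    return correct / len(y_obs)

probs = np.array([[0.9], [0.2], [0.6], [0.4]])  # hypothetical h_class output, shape (m, 1)
y_test = np.array([[1], [0], [0], [0]])         # observed labels, shape (m, 1)

y_preds = make_predictions(probs)
y_obs = y_test.flatten().tolist()               # flatten to a plain list, as in the text
print(y_preds, accuracy(y_preds, y_obs))        # [1, 0, 1, 0] 0.75
```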

BRAVO! You now know the math behind a binary classifier!

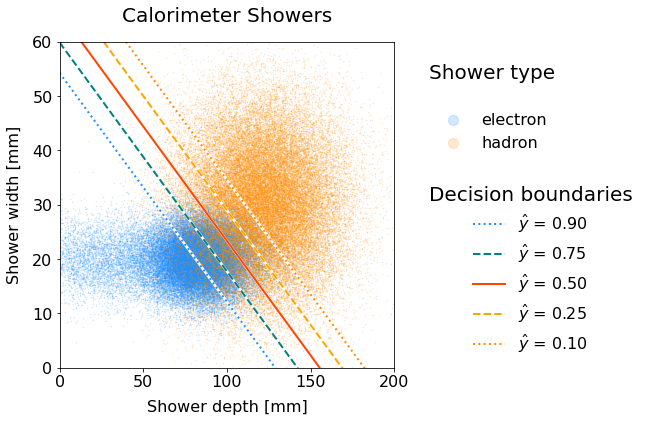

X. BONUS: Decision Boundaries

This is for advanced programmers and/or your curiosity and/or if you have the time. Bonus points will be given even if you answer with math equations only and not necessarily the associated Python code. Of course, if you succeed in writing the Python code, more bonus points for you!

Goal We want to draw on a scatter plot the lines corresponding to different decision boundaries.

Scroll down to the very end to see where we are heading.

X.0 Scatter plot The first step is to split the signal and background into two different dataframes. Using the general dataframe df defined at the beginning:

The plotting macro:

To call it:

X.1 Useful functions Recall the logistic function:

Write a function rev_sigmoid that outputs the value \(z = f(\hat{y})\) .

Write a function scale_inputs that scales a list of raw input features, either \(x_1\) or \(x_2\) , according to the standardization procedure.

Write the function unscale_inputs that does the contrary.
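A minimal sketch of the X.1 helpers follows. `rev_sigmoid` inverts \(\hat{y} = 1/(1 + e^{-z})\), giving \(z = \ln\frac{\hat{y}}{1 - \hat{y}}\) (the logit). Passing the mean and standard deviation explicitly is an assumed signature. In the assignment they would be the values used for standardization in section 2.2. The numbers below are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def rev_sigmoid(y_hat):
    """Inverse of the logistic function (the logit): z = ln(y_hat / (1 - y_hat))."""
    return np.log(y_hat / (1.0 - y_hat))

def scale_inputs(values, mean, std):
    """Standardize raw feature values with a given mean and standard deviation."""
    return (np.asarray(values, dtype=float) - mean) / std

def unscale_inputs(values, mean, std):
    """Undo scale_inputs: map standardized values back to raw units."""
    return np.asarray(values, dtype=float) * std + mean

print(rev_sigmoid(0.5))                      # 0.0
print(rev_sigmoid(sigmoid(1.3)))             # ~1.3
raw = [10.2, 11.5, 21.3]
print(unscale_inputs(scale_inputs(raw, 14.3, 5.0), 14.3, 5.0))  # ~[10.2, 11.5, 21.3]
```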

X.2 Equation For a given threshold \(\hat{y}\) , write the equation of the line boundary: \(x_2 = f(\boldsymbol{\theta}, x_1, \hat{y})\) .

X.3 Coordinate points To draw a line on a plot in Matplotlib, one needs to provide the coordinates as a set of two data points.

Write a function that computes the coordinates x2_left and x2_right – associated with the values of x1_min and x1_max respectively – of a decision boundary line at a given threshold \(\hat{y}\). (Recall that 0.5 is the standard one for logistic regression.)

Warrior-level bonus: compute this for several thresholds, i.e. the function returns a list of line properties. Tip: it’s convenient to store the result in a dictionary. For instance you can have keys threshold , x2_left , x2_right .
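Setting \(h_\theta(x) = \hat{y}\) gives \(\theta_0 + \theta_1 x_1 + \theta_2 x_2 = \ln\frac{\hat{y}}{1-\hat{y}}\). Solving for the second feature:

\(x_2 = \left(\ln\frac{\hat{y}}{1-\hat{y}} - \theta_0 - \theta_1 x_1\right)/\theta_2\)

This holds in standardized coordinates and needs \(\theta_2 \neq 0\). The sketch below applies it for several thresholds and returns the suggested dictionary keys. It assumes raw x1 limits are scaled before use and the resulting x2 values are unscaled afterwards. The parameters in the usage line are hypothetical.

```python
import numpy as np

def rev_sigmoid(y_hat):
    return np.log(y_hat / (1.0 - y_hat))

def boundary_lines(thetas, x1_min, x1_max, thresholds, mean1, std1, mean2, std2):
    """Endpoints of each decision-boundary line, in raw (unscaled) feature units.

    thetas = (theta0, theta1, theta2) were fitted on standardized features, so the
    raw x1 limits are scaled first and the resulting x2 values are unscaled.
    """
    t0, t1, t2 = np.ravel(thetas)
    lines = []
    for y_hat in thresholds:
        z = rev_sigmoid(y_hat)
        x2_ends = []
        for x1_raw in (x1_min, x1_max):
            x1 = (x1_raw - mean1) / std1          # raw -> standardized
            x2 = (z - t0 - t1 * x1) / t2          # boundary equation (needs t2 != 0)
            x2_ends.append(x2 * std2 + mean2)     # standardized -> raw
        lines.append({"threshold": y_hat, "x2_left": x2_ends[0], "x2_right": x2_ends[1]})
    return lines

# Hypothetical parameters: with theta = (0, 1, -1) and no scaling, the 0.5 line is x2 = x1.
for line in boundary_lines([0.0, 1.0, -1.0], -2.0, 3.0, [0.5, 0.8], 0.0, 1.0, 0.0, 1.0):
    print(line)
```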

X.4 Plotting the boundaries (advanced) In the scatter plot code provided, uncomment the boundary section and draw the line(s) using the Matplotlib plot function.

In the very end, this is how it would render:

Scatter plot of electron and hadron showers with decision boundary lines for various thresholds. Code will be shown when the solutions are released.

The higher the threshold, the more the boundary line shifts downwards in the electron-dense area. Why is that the case?

You are encouraged to work in groups of two, however submissions are individual.

If you have received help from your peers and/or have worked within a group, summarize in the header of your notebook the involved students, how they helped you and the contributions of each group member. This is to ensure fairness in the evaluation.

You can use the internet such as the official pages of relevant libraries, forum websites to debug, etc. However, using an AI such as ChatGPT would be considered cheating (and not good for you anyway to develop your programming skills).

The instructor and tutors are available throughout the week to answer your questions. Write an email with your well-articulated question(s). Put in CC your teammates if any.

Thank you and do not forget to have fun while coding!

- Computer Science and Engineering

- NOC:Introduction to Machine Learning(Course sponsored by Aricent) (Video)

- Co-ordinated by : IIT Madras

- Available from : 2016-01-19

- Intro Video

- A brief introduction to machine learning

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

- Probability Basics - 1

- Probability Basics - 2

- Linear Algebra - 1

- Linear Algebra - 2

- Statistical Decision Theory - Regression

- Statistical Decision Theory - Classification

- Bias-Variance

- Linear Regression

- Multivariate Regression

- Subset Selection 1

- Subset Selection 2

- Shrinkage Methods

- Principal Components Regression

- Partial Least Squares

- Linear Classification

- Logistic Regression

- Linear Discriminant Analysis 1

- Linear Discriminant Analysis 2

- Linear Discriminant Analysis 3

- Weka Tutorial

- Optimization

- Perceptron Learning

- SVM - Formulation

- SVM - Interpretation & Analysis

- SVMs for Linearly Non Separable Data

- SVM Kernels

- SVM - Hinge Loss Formulation

- Early Models

- Backpropagation I

- Backpropagation II

- Initialization, Training & Validation

- Maximum Likelihood Estimate

- Priors & MAP Estimate

- Bayesian Parameter Estimation

- Introduction

- Regression Trees

- Stopping Criteria & Pruning

- Loss Functions for Classification

- Categorical Attributes

- Multiway Splits

- Missing Values, Imputation & Surrogate Splits

- Instability, Smoothness & Repeated Subtrees

- Evaluation Measures I

- Bootstrapping & Cross Validation

- 2 Class Evaluation Measures

- The ROC Curve

- Minimum Description Length & Exploratory Analysis

- Introduction to Hypothesis Testing

- Basic Concepts

- Sampling Distributions & the Z Test

- Student's t-test

- The Two Sample & Paired Sample t-tests

- Confidence Intervals

- Bagging, Committee Machines & Stacking

- Gradient Boosting

- Random Forest

- Naive Bayes

- Bayesian Networks

- Undirected Graphical Models - Introduction

- Undirected Graphical Models - Potential Functions

- Hidden Markov Models

- Variable Elimination

- Belief Propagation

- Partitional Clustering

- Hierarchical Clustering

- Threshold Graphs

- The BIRCH Algorithm

- The CURE Algorithm

- Density Based Clustering

- Gaussian Mixture Models

- Expectation Maximization

- Expectation Maximization Continued

- Spectral Clustering

- Learning Theory

- Frequent Itemset Mining

- The Apriori Property

- Introduction to Reinforcement Learning

- RL Framework and TD Learning

- Solution Methods & Applications

- Multi-class Classification


| Sl.No | Chapter Name | MP4 Download |

|---|---|---|

| 1 | A brief introduction to machine learning | |

| 2 | Supervised Learning | |

| 3 | Unsupervised Learning | |

| 4 | Reinforcement Learning | |

| 5 | Probability Basics - 1 | |

| 6 | Probability Basics - 2 | |

| 7 | Linear Algebra - 1 | |

| 8 | Linear Algebra - 2 | |

| 9 | Statistical Decision Theory - Regression | |

| 10 | Statistical Decision Theory - Classification | |

| 11 | Bias-Variance | |

| 12 | Linear Regression | |

| 13 | Multivariate Regression | |

| 14 | Subset Selection 1 | |

| 15 | Subset Selection 2 | |

| 16 | Shrinkage Methods | |

| 17 | Principal Components Regression | |

| 18 | Partial Least Squares | |

| 19 | Linear Classification | |

| 20 | Logistic Regression | |

| 21 | Linear Discriminant Analysis 1 | |

| 22 | Linear Discriminant Analysis 2 | |

| 23 | Linear Discriminant Analysis 3 | |

| 24 | Optimization | |

| 25 | Perceptron Learning | |

| 26 | SVM - Formulation | |

| 27 | SVM - Interpretation & Analysis | |

| 28 | SVMs for Linearly Non Separable Data | |

| 29 | SVM Kernels | |

| 30 | SVM - Hinge Loss Formulation | |

| 31 | Weka Tutorial | |

| 32 | Early Models | |

| 33 | Backpropagation I | |

| 34 | Backpropagation II | |

| 35 | Initialization, Training & Validation | |

| 36 | Maximum Likelihood Estimate | |

| 37 | Priors & MAP Estimate | |

| 38 | Bayesian Parameter Estimation | |

| 39 | Introduction | |

| 40 | Regression Trees | |

| 41 | Stopping Criteria & Pruning | |

| 42 | Loss Functions for Classification | |

| 43 | Categorical Attributes | |

| 44 | Multiway Splits | |

| 45 | Missing Values, Imputation & Surrogate Splits | |

| 46 | Instability, Smoothness & Repeated Subtrees | |

| 47 | Tutorial | |

| 48 | Evaluation Measures I | |

| 49 | Bootstrapping & Cross Validation | |

| 50 | 2 Class Evaluation Measures | |

| 51 | The ROC Curve | |

| 52 | Minimum Description Length & Exploratory Analysis | |

| 53 | Introduction to Hypothesis Testing | |

| 54 | Basic Concepts | |

| 55 | Sampling Distributions & the Z Test | |

| 56 | Student\'s t-test | |

| 57 | The Two Sample & Paired Sample t-tests | |

| 58 | Confidence Intervals | |

| 59 | Bagging, Committee Machines & Stacking | |

| 60 | Boosting | |

| 61 | Gradient Boosting | |

| 62 | Random Forest | |

| 63 | Naive Bayes | |

| 64 | Bayesian Networks | |

| 65 | Undirected Graphical Models - Introduction | |

| 66 | Undirected Graphical Models - Potential Functions | |

| 67 | Hidden Markov Models | |

| 68 | Variable Elimination | |

| 69 | Belief Propagation | |

| 70 | Partitional Clustering | |

| 71 | Hierarchical Clustering | |

| 72 | Threshold Graphs | |

| 73 | The BIRCH Algorithm | |

| 74 | The CURE Algorithm | |

| 75 | Density Based Clustering | |

| 76 | Gaussian Mixture Models | |

| 77 | Expectation Maximization | |

| 78 | Expectation Maximization Continued | |

| 79 | Spectral Clustering | |

| 80 | Learning Theory | |

| 81 | Frequent Itemset Mining | |

| 82 | The Apriori Property | |

| 83 | Introduction to Reinforcement Learning | |

| 84 | RL Framework and TD Learning | |

| 85 | Solution Methods & Applications | |

| 86 | Multi-class Classification |

Introduction To Machine Learning Week 1 Assignment 1 Solution | NPTEL | Swayam | Jul - Dec 2023, TechEd Quest

In this course we intend to introduce some of the basic concepts of machine learning from a mathematically well-motivated perspective.

NPTEL Assignment, Introduction to Machine Learning, Prof. Ravindran: Which of the following are supervised learning problems? (Multiple may be correct.) Learning to ...

Introduction to Machine Learning (IITKGP). About the course: This course provides a concise introduction to the fundamental concepts in machine learning and popular machine learning algorithms.

Solutions to the 'Applied Machine Learning In Python' Coursera course exercises - amirkeren/applied-machine-learning-in-python

noc19 cs52 assignment, Week 1 (Swayam NPTEL, Introduction to Machine Learning (IITKGP), Unit 3 - Week 1). Lecture 01: Introduction; Lecture 02: Different Types of Learning; Lecture 03 ...

Answer: a, d. NPTEL Introduction to Machine Learning Assignment 1 Answers 2022: In this article, we have provided the answers to Introduction to Machine Learning Assignment 1. Disclaimer: We do not claim that these solutions are 100% correct; they are based solely on our own expertise, and by posting these answers we are simply looking to ...

Introduction to Machine Learning, Prof. B. Ravindran: Which of the following tasks can best be solved using clustering? Predicting the amount of rainfall based on various cues ...
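Clustering groups unlabeled points by similarity rather than predicting a target value. A minimal sketch of Lloyd's k-means algorithm in plain Python follows. It is not taken from the course; the function names and the six 2-D points are hypothetical, and a deterministic farthest-first initialization is swapped in for the usual random or k-means++ seeding so that the result is reproducible.

```python
import math


def farthest_first_init(points, k):
    """Deterministic seeding: start from the first point, then repeatedly
    add the point farthest from every centroid chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(farthest)
    return centroids


def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c])) for p in points]


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate the assignment and update steps until
    no centroid moves (or max_iter is reached)."""
    centroids = farthest_first_init(points, k)
    for _ in range(max_iter):
        labels = assign(points, centroids)
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                # Coordinate-wise mean of the points in this cluster.
                new_centroids.append(tuple(sum(xs) / len(members) for xs in zip(*members)))
            else:
                # Leave an empty cluster's centroid where it was.
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    # Final labels are computed against the final centroids.
    return assign(points, centroids), centroids


# Hypothetical unlabeled data: two well-separated groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
labels, centroids = kmeans(points, k=2)
```

No labels are used during training; the algorithm only discovers that the points fall into two groups, which is what distinguishes clustering from regression tasks such as predicting rainfall.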

COMP486: Machine Learning Assignment 1: Introduction to Machine Learning (50 points) In this assignment we will revise some of the most fundamental and important concepts in machine learning that every student in this course should know by heart. The goal is to ensure everything is clear to you before we continue to the rest of the course.

Assignment 1 - Introduction to Machine Learning. For this assignment, you will be using the Breast Cancer Wisconsin (Diagnostic) Database to create a classifier that can help diagnose patients. First, read through the description of the dataset (below). Number of instances: 569.
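scikit-learn ships a copy of this dataset, loadable with `sklearn.datasets.load_breast_cancer`. The sketch below, assuming scikit-learn is installed, loads it, holds out a test split and fits a k-nearest-neighbours classifier. The choice of classifier, `n_neighbors=1` and `random_state=0` are illustrative assumptions, not requirements taken from the assignment.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# X holds 30 numeric features per sample; y is 0 = malignant, 1 = benign.
data = load_breast_cancer()
X, y = data.data, data.target

# Hold out 25% of the samples (the train_test_split default) for evaluation.
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# A 1-nearest-neighbour classifier: each test sample gets the label of the
# closest training sample (Euclidean distance by default).
knn = KNeighborsClassifier(n_neighbors=1)
knn.fit(X_train, y_train)

print(X.shape)                    # (569, 30)
print(knn.score(X_test, y_test))  # mean accuracy on the held-out split
```

The features are left unscaled here for brevity; because k-NN is distance-based, standardizing the features first is a common refinement.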

Each quiz will be designed to assess your conceptual understanding of each unit and will probably have 10 questions. Most questions will be true/false or multiple choice, with perhaps 1-3 short-answer questions. You can treat the conceptual questions in each unit's in-class demos/labs and homework as good practice for the corresponding quiz.

Introduction To Machine Learning - Week 1 Answers Solution 2024 | NPTEL | SWAYAM

Assignment 1: Classifier By Hand. In this assignment, you will learn step by step how to code a binary classifier by hand. Don't worry, you will be guided through it.
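One classic binary classifier that can be coded by hand is Rosenblatt's perceptron. The following is a minimal sketch in plain Python, not that assignment's reference solution; the function names and the toy logical-AND dataset (labels in {-1, +1}) are hypothetical.

```python
def train_perceptron(samples, labels, epochs=100, lr=1.0):
    """Rosenblatt perceptron for labels in {-1, +1}; returns (weights, bias)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:
                # Misclassified (or exactly on the boundary): move the
                # hyperplane toward the correct side of this sample.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:
            break  # a full pass with no mistakes: the training data are separated
    return w, b


def predict(w, b, x):
    """Sign of the linear score, mapped to the labels -1 / +1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy data: logical AND, which is linearly separable.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, -1, -1, 1]
w, b = train_perceptron(samples, labels)
```

Because the AND data are linearly separable, the loop stops after the first epoch with no mistakes; on non-separable data it would simply run for `epochs` passes.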

For this assignment, submit a hard copy of all of your answers and of your code for Question 1.

Then upload it to Assignment 1 in the LMS. This work is licensed under the Creative Commons Attribution 4.0 International license.

NPTEL provides e-learning through online web and video courses in various streams.

NPTEL Introduction to Machine Learning - IITKGP Week 1 Quiz Assignment Solutions | July 2022. This course provides a concise introduction to the fundamental concepts in machine learning and ...

He has nearly two decades of research experience in machine learning and specifically reinforcement learning. Currently his research interests are centered on learning from and through interactions and span the areas of data mining, social network analysis, and reinforcement learning.

Assignment 1, Introduction to Machine Learning, Prof. Ravindran: Which of the following is a supervised learning problem? Grouping related documents ...

NPTEL Introduction to Machine Learning Week 1 Quiz Assignment Solutions | Jan 2022 | IIT Madras. With the increased availability of data from varied sources ...

Simple Introduction to Machine Learning. Module 1 • 7 hours to complete. The focus of this module is to introduce the concepts of machine learning with as little mathematics as possible. We will introduce basic concepts in machine learning, including logistic regression, a simple but widely employed machine learning (ML) method.
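Logistic regression models the probability of the positive class as a sigmoid of a linear score, p(y = 1 | x) = sigmoid(w*x + b). A minimal one-feature sketch trained by batch gradient descent on the mean log-loss is below; the learning rate, epoch count, function names and the six-point toy dataset are assumptions chosen for illustration, not values from any of the courses above.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) for a single feature by batch
    gradient descent on the mean log-loss (binary cross-entropy)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals p - y; the log-loss gradient is mean((p - y) * x) for w
        # and mean(p - y) for b.
        residuals = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = sum(residuals) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data: one feature, class 1 for positive x.
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(xs, ys)
probs = [sigmoid(w * x + b) for x in xs]
predictions = [1 if p > 0.5 else 0 for p in probs]
```

Thresholding the predicted probability at 0.5 turns the model into a classifier. On perfectly separable data like this, the unregularized weight keeps growing slowly with more epochs, which is one reason regularization is usually added in practice.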